Session Overhead Reduction in Adaptive Streaming

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Package 'Brotli'

Package ‘brotli’ (May 13, 2018)
Type: Package
Title: A Compression Format Optimized for the Web
Version: 1.2
Description: A lossless compressed data format that uses a combination of the LZ77 algorithm and Huffman coding. Brotli is similar in speed to deflate (gzip) but offers more dense compression.
License: MIT + file LICENSE
URL: https://tools.ietf.org/html/rfc7932 (spec), https://github.com/google/brotli#readme (upstream), http://github.com/jeroen/brotli#read (devel)
BugReports: http://github.com/jeroen/brotli/issues
VignetteBuilder: knitr, R.rsp
Suggests: spelling, knitr, R.rsp, microbenchmark, rmarkdown, ggplot2
RoxygenNote: 6.0.1
Language: en-US
NeedsCompilation: yes
Author: Jeroen Ooms [aut, cre] (<https://orcid.org/0000-0002-4035-0289>), Google, Inc [aut, cph] (Brotli C++ library)
Maintainer: Jeroen Ooms <[email protected]>
Repository: CRAN
Date/Publication: 2018-05-13 20:31:43 UTC
R topics documented: brotli
brotli: Brotli Compression
Description: Brotli is a compression algorithm optimized for the web, in particular small text documents.
Usage: brotli_compress(buf, quality = 11, window = 22); brotli_decompress(buf)
Arguments: buf: raw vector with data to compress/decompress; quality: value between 0 and 11; window: log of window size
Details: Brotli decompression is at least as fast as for gzip while significantly improving the compression ratio. The price we pay is that compression is much slower than gzip. Brotli is therefore most effective for serving static content such as fonts and html pages. For binary (non-text) data, the compression ratio of Brotli usually does not beat bz2 or xz (lzma), however decompression for these algorithms is too slow for browsers in e.g. -

Application Profile Avi Networks — Technical Reference (16.3)

Application profiles determine the behavior of virtual services, based on application type. The application profile types and their options are described in the following sections: HTTP Profile, DNS Profile, Layer 4 Profile, Syslog Profile.
Dependency on TCP/UDP Profile: The application profile associated with a virtual service may have a dependency on an underlying TCP/UDP profile. For example, an HTTP application profile may be used only if the TCP/UDP profile type used by the virtual service is set to type TCP Proxy. The application profile associated with a virtual service instructs the Service Engine (SE) to proxy the service's application protocol, such as HTTP, and to perform functionality appropriate for that protocol.
Application Profile Tab: Select Templates > Profiles > Applications to open the Application Profiles tab, which includes the following functions:
Search: Search against the name of the profile.
Create: Opens the Create Application Profile popup.
Edit: Opens the Edit Application Profile popup.
Delete: Removes an application profile if it is not currently assigned to a virtual service. Note: If the profile is still associated with any virtual services, the profile cannot be removed. In this case, an error message lists the virtual service that still is referencing the application profile.
The table on this tab provides the following information for each application profile:
Name: Name of the profile.
Type: Type of application profile, which will be either: DNS: Default for processing DNS traffic. HTTP: Default for processing Layer 7 HTTP traffic. -

SSL/TLS Implementation CIO-IT Security-14-69

DocuSign Envelope ID: BE043513-5C38-4412-A2D5-93679CF7A69A
IT Security Procedural Guide: SSL/TLS Implementation, CIO-IT Security-14-69, Revision 6, April 6, 2021. Office of the Chief Information Security Officer.
VERSION HISTORY/CHANGE RECORD
Columns: Change Number | Person Posting Change | Change | Reason for Change | Page Number of Change
Initial Version – December 24, 2014
N/A | ISE | New guide created
Revision 1 – March 15, 2016
1 | Salamon | Administrative updates to align/reference to the current version of the GSA IT Security Policy and to CIO-IT Security-09-43, IT Security Procedural Guide: Key Management | Clarify relationship between this guide and CIO-IT Security-09-43 | 2-4
2 | Berlas / Salamon | Updated recommendation for obtaining and using certificates | Clarification of requirements | 7
3 | Salamon | Integrated with OMB M-15-13 and related TLS implementation guidance | New OMB Policy | 9
4 | Berlas / Salamon | Updates to clarify TLS protocol recommendations | Clarification of guidance | 11-12
5 | Berlas / Salamon | Updated based on stakeholder review / input | Stakeholder review / input | Throughout
6 | Klemens / Cozart-Ramos | Formatting, editing, review revisions | Update to current format and style | Throughout
Revision 2 – October 11, 2016
1 | Berlas / Salamon | Allow use of TLS 1.0 for certain server through June 2018 | Clarification of guidance | Throughout
Revision 3 – April 30, 2018
1 | Berlas / Salamon | Remove RSA ciphers from approved cipher stack | ROBOT vulnerability affected | 4-6 -

Protecting Encrypted Cookies from Compression Side-Channel Attacks

Janaka Alawatugoda (1), Douglas Stebila (1, 2), and Colin Boyd (3). (1) School of Electrical Engineering and Computer Science and (2) School of Mathematical Sciences, Queensland University of Technology, Brisbane, Australia, [email protected], [email protected]; (3) Department of Telematics, Norwegian University of Science and Technology, Trondheim, Norway, [email protected]. December 28, 2014.
Abstract: Compression is desirable for network applications as it saves bandwidth; however, when data is compressed before being encrypted, the amount of compression leaks information about the amount of redundancy in the plaintext. This side channel has led to successful CRIME and BREACH attacks on web traffic protected by the Transport Layer Security (TLS) protocol. The general guidance in light of these attacks has been to disable compression, preserving confidentiality but sacrificing bandwidth. In this paper, we examine two techniques (heuristic separation of secrets and fixed-dictionary compression) for enabling compression while protecting high-value secrets, such as cookies, from attack. We model the security offered by these techniques and report on the amount of compressibility that they can achieve.
Footnote 1: This is the full version of a paper published in the Proceedings of the 19th International Conference on Financial Cryptography and Data Security (FC 2015) in San Juan, Puerto Rico, USA, January 26–30, 2015, organized by the International Financial Cryptography Association in cooperation with IACR.
Contents: 1 Introduction; 2 Definitions; 2.1 Encryption and compression schemes; 2.2 Existing security notions; 2.3 New security notions; 2.4 Relations and separations between security notions; 3 Technique 1: Separating secrets from user inputs; 3.1 The scheme; 3.2 CCI security of basic separating-secrets technique -
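
The length leak that this abstract describes can be illustrated with a short sketch. The Python example below is not taken from the paper: it assumes a hypothetical page body that reflects attacker-supplied text next to a secret cookie value, and it uses the standard-library `zlib` module (DEFLATE) as a stand-in for HTTP or TLS compression. The names `SECRET` and `response_length`, and the cookie string itself, are illustrative assumptions.

```python
import zlib

# Hypothetical secret embedded in every response (illustrative value only).
SECRET = "sessionid=7f3a9c2e5b1d4086a9e2c417"


def response_length(reflected: str) -> int:
    """Return the compressed size of a body that reflects attacker input.

    Encryption applied after compression would hide the content,
    but not this length, which is what the side channel observes.
    """
    body = f"<html><body>query={reflected}</body><!-- {SECRET} --></html>"
    return len(zlib.compress(body.encode("utf-8"), 9))


# Two guesses of identical length: one equals the secret, one does not.
correct_guess = "sessionid=7f3a9c2e5b1d4086a9e2c417"
wrong_guess = "sessionid=Qx8ZkT0vWmJ5rLpY2uNdHs61"

len_correct = response_length(correct_guess)
len_wrong = response_length(wrong_guess)

# The matching guess is encoded as a back-reference, so it compresses smaller.
print(len_correct, len_wrong)
```

Both bodies have the same uncompressed length, so any difference in compressed size comes only from how much the reflected text overlaps with the secret. This sketch compares a full match against a full mismatch to make the gap obvious; an attacker guessing one character at a time would see much smaller differences.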

A Comprehensive Study of the BREACH Attack Against HTTPS

Esam Alzahrani, Justin Nonaka, and Thai Truong. 12/03/13.
BREACH Overview: Browser Reconnaissance and Exfiltration via Adaptive Compression of Hypertext. Demonstrated at BlackHat 2013 by Angelo Prado, Neal Harris, and Yoel Gluck.
• Chosen plaintext attack against HTTP compression
• Client requests a webpage, the web server's response is compressed
• The HTTP compression may leak information that will reveal encrypted secrets about the user
[Figure: network diagram with labels Network Intrusion Detection System, Edge Firewall, Switch, Router, DMZ, Clients, Attacker, Victim]
BREACH Requirements: Requirements for chosen plain text (side channel) attack
• The web server should support HTTP compression
• The web server should support HTTPS sessions
• The web server reflects the user's request
• The reflected response must be in the HTML Body
• The attacker must be able to measure the size of the encrypted response
• The attacker can force the victim's computer to send HTTP requests
• The HTTP response contains secret information that is encrypted
§ Cross Site Request Forgery token – browser redirection
§ SessionID (uniquely identifies HTTP session)
§ VIEWSTATE (handles multiple requests to the same ASP, usually hidden base64 encoded)
§ Oath tokens (Open Authentication - one time password)
§ Email address, Date of Birth, etc (PII)
SSL/TLS protocol structure
• X.509 certification authority
• Secure Socket Layer (SSL)
• Transport Layer Security (TLS)
• Asymmetric cryptography for authentication
– Initialize on OSI layer 5 (Session Layer)
– Use server public key to encrypt pre-master -

A Novel Coding Architecture for Multi-Line Lidar Point Clouds

This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination. IEEE TRANSACTIONS ON INTELLIGENT TRANSPORTATION SYSTEMS.
A Novel Coding Architecture for Multi-Line LiDAR Point Clouds Based on Clustering and Convolutional LSTM Network. Xuebin Sun, Sukai Wang, Graduate Student Member, IEEE, and Ming Liu, Senior Member, IEEE.
Abstract: Light detection and ranging (LiDAR) plays an indispensable role in autonomous driving technologies, such as localization, map building, navigation and object avoidance. However, due to the vast amount of data, transmission and storage could become an important bottleneck. In this article, we propose a novel compression architecture for multi-line LiDAR point cloud sequences based on clustering and convolutional long short-term memory (LSTM) networks. LiDAR point clouds are structured, which provides an opportunity to convert the 3D data to 2D array, represented as range images. Thus, we cast the 3D point clouds compression as a range image sequence compression problem. Inspired by the high efficiency video coding (HEVC) algorithm, we design a novel compression [...]
Introduction (excerpt): [...] preservation of historical relics, 3D sensing for smart city, as well as autonomous driving. Especially for autonomous driving systems, LiDAR sensors play an indispensable role in a large number of key techniques, such as simultaneous localization and mapping (SLAM) [1], path planning [2], obstacle avoidance [3], and navigation. A point cloud consists of a set of individual 3D points, in accordance with one or more attributes (color, reflectance, surface normal, etc). For instance, the Velodyne HDL-64E LiDAR sensor generates a point cloud of up to 2.2 billion points per second, with a range of up to 120 m. -

A Perfect CRIME?

A Perfect CRIME? Only TIME Will Tell. Tal Be'ery, Amichai Shulman.
Table of Contents
1. Abstract
2. Introduction to HTTP Compression
2.1 HTTP compression and the web
2.2 GZIP
2.2.1 LZ77
2.2.2 Huffman coding
3. CRIME attack
3.1 Compression data leaks
3.2 Attack outline
3.3 Attack example
4. Extending CRIME -

Randomized Lempel-Ziv Compression for Anti-Compression Side-Channel Attacks

by Meng Yang. A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Master of Applied Science in Electrical and Computer Engineering. Waterloo, Ontario, Canada, 2018. © Meng Yang 2018.
I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public.
Abstract: Security experts confront new attacks on TLS/SSL every year. Ever since the compression side-channel attacks CRIME and BREACH were presented during security conferences in 2012 and 2013, online users connecting to HTTP servers that run TLS version 1.2 are susceptible of being impersonated. We set up three Randomized Lempel-Ziv Models, which are built on Lempel-Ziv77, to confront this attack. Our three models change the deterministic characteristic of the compression algorithm: each compression with the same input gives output of different lengths. We implemented SSL/TLS protocol and the Lempel-Ziv77 compression algorithm, and used them as a base for our simulations of compression side-channel attack. After performing the simulations, all three models successfully prevented the attack. However, we demonstrate that our randomized models can still be broken by a stronger version of compression side-channel attack that we created. But this latter attack has a greater time complexity and is easily detectable. Finally, from the results, we conclude that our models couldn't compress as well as Lempel-Ziv77, but they can be used against compression side-channel attacks. -

Compresso: Efficient Compression of Segmentation Data for Connectomics

Brian Matejek, Daniel Haehn, Fritz Lekschas, Michael Mitzenmacher, Hanspeter Pfister. Harvard University, Cambridge, MA 02138, USA. bmatejek,haehn,lekschas,michaelm,[email protected]
Abstract: Recent advances in segmentation methods for connectomics and biomedical imaging produce very large datasets with labels that assign object classes to image pixels. The resulting label volumes are bigger than the raw image data and need compression for efficient storage and transfer. General-purpose compression methods are less effective because the label data consists of large low-frequency regions with structured boundaries unlike natural image data. We present Compresso, a new compression scheme for label data that outperforms existing approaches by using a sliding window to exploit redundancy across border regions in 2D and 3D. We compare our method to existing compression schemes and provide a detailed evaluation on eleven biomedical and image segmentation datasets. Our method provides a factor of 600-2200x compression for label volumes, with running times suitable for practice.
Keywords: compression, encoding, segmentation, connectomics
1 Introduction: Connectomics (reconstructing the wiring diagram of a mammalian brain at nanometer resolution) results in datasets at the scale of petabytes [21,8]. Machine learning methods find cell membranes and create cell body labelings for every neuron [18,12,14] (Fig. 1). These segmentations are stored as label volumes that are typically encoded in 32 bits or 64 bits per voxel to support labeling of millions of different nerve cells (neurons). Storing such data is expensive and transferring the data is slow. To cut costs and delays, we need compression methods to reduce data sizes. -

Categorizing Efficient XML Compression Schemes

John N. Dyer, Department of Information Systems, College of Business Administration, Georgia Southern University, P.O. Box 7998, Statesboro, GA 30459.
Abstract: Web services are Extensible Markup Language (XML) applications mapped to programs, objects, databases, and comprehensive business functions. In essence, Web services transform XML documents into and out of information technology systems. As more businesses turn to web services data transfer, XML has become the language of web services. Unfortunately, the structure of XML results in extremely verbose documents, often 3 times larger than ordinary content files. As XML becomes more common through Web services applications, its large file sizes increasingly burden the systems that must utilize it. This paper provides a qualitative overview of existing and proposed schemes for efficient XML compression, proposes three categories for relating XML compression scheme efficiency for Web services, and makes recommendations relating to efficient XML compression based on the proposed categories of XML documents. The goal of this paper is to aid the practitioner and Web services manager in understanding the impact of XML document size on Web services, and to aid them in selecting the most appropriate schemes for applications of XML compression for Web services.
Keywords: Compression, Web services, XML
Introduction: XML is the foundation upon which Web services are built, and provides the description of data, as well as the storage and transmission format of data exchanged via Web services (Newcomer, 2002). XML is similar to Hypertext Markup Language (HTML), and well-formed XML documents can even be displayed in Web browsers. -

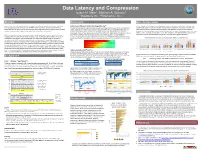

Data Latency and Compression

Joseph M. Steim (1), Edelvays N. Spassov (2). (1) Quanterra Inc., (2) Kinemetrics, Inc.
Abstract: Because of interest in the capability of digital seismic data systems to provide low-latency data for “Early Warning” applications, we have examined the effect of data compression on the ability of systems to deliver data with low latency, and on the efficiency of data storage and telemetry systems. Quanterra Q330 systems are widely used in telemetered networks, and are considered in particular. Q330 data acquisition systems transmit data compressed in Steim2-format packets. Some studies have [...]
Discussion: Compression and Latency related to Origin Queuing [5]. Plausible data compression methods reduce the volume (number of bits) required to represent each data sample. Compression of data therefore always reduces the minimum required bandwidth (bits/sec) for transmission compared with transmission of uncompressed data. The volume required to transmit compressed data is variable, depending on characteristics of the data. Higher levels of compression require less minimum bandwidth and volume, and maximize the available unused (“free”) bandwidth for [...]
More Compression: Lossless Lempel-Ziv (LZ) compression methods (e.g. gzip, 7zip, and more recently “brotli” introduced by Google for web-page compression) are often used for lossless compression of binary and text files. How does the best example of modern LZ methods compare with FDSN Level 1 and 2 for waveform data? Segments of data representing up to one month of various levels of compressibility were compressed using Levels 1, 2 and 3, and brotli using “quality” 1 and 6. The compression ratio (relative to the original 32 bits) shows that in all cases the compression using L1, L2, or L3 is significantly greater than brotli. -

Parquet Data Format Performance

Jim Pivarski, Princeton University, DIANA-HEP. February 21, 2018.
What is Parquet?
1974 | HBOOK | tabular | rowwise | FORTRAN | first ntuples in HEP
1983 | ZEBRA | hierarchical | rowwise | FORTRAN | event records in HEP
1989 | PAW CWN | tabular | columnar | FORTRAN | faster ntuples in HEP
1995 | ROOT | hierarchical | columnar | C++ | object persistence in HEP
2001 | ProtoBuf | hierarchical | rowwise | many | Google's RPC protocol
2002 | MonetDB | tabular | columnar | database | “first” columnar database
2005 | C-Store | tabular | columnar | database | also early, became HP's Vertica
2007 | Thrift | hierarchical | rowwise | many | Facebook's RPC protocol
2009 | Avro | hierarchical | rowwise | many | Hadoop's object permanence and interchange format
2010 | Dremel | hierarchical | columnar | C++, Java | Google's nested-object database (closed source), became BigQuery
2013 | Parquet | hierarchical | columnar | many | open source object persistence, based on Google's Dremel paper
2016 | Arrow | hierarchical | columnar | many | shared-memory object exchange
Developed independently to do the same thing: Google Dremel authors claimed to be unaware of any precedents, so this is an example of convergent evolution.