Microsoft Dialogue Challenge: Building End-To-End Task-Completion Dialogue Systems

Total Pages: 16

File Type: PDF, Size: 1020Kb

Recommended publications

-

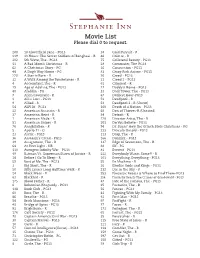

Movie List Please Dial 0 to Request

Movie List Please dial 0 to request. 200 10 Cloverfield Lane - PG13 38 Cold Pursuit - R 219 13 Hours: The Secret Soldiers of Benghazi - R 46 Colette - R 202 5th Wave, The - PG13 75 Collateral Beauty - PG13 11 A Bad Mom’s Christmas - R 28 Commuter, The - PG13 62 A Christmas Story - PG 16 Concussion - PG13 48 A Dog’s Way Home - PG 83 Crazy Rich Asians - PG13 220 A Star is Born - R 20 Creed - PG13 32 A Walk Among the Tombstones - R 21 Creed 2 - PG13 4 Accountant, The - R 61 Criminal - R 19 Age of Adaline, The - PG13 17 Daddy’s Home - PG13 40 Aladdin - PG 33 Dark Tower, The - PG13 7 Alien: Covenant - R 67 Darkest Hour - PG13 2 All is Lost - PG13 52 Deadpool - R 9 Allied - R 53 Deadpool 2 - R (Uncut) 54 ALPHA - PG13 160 Death of a Nation - PG13 22 American Assassin - R 68 Den of Thieves - R (Unrated) 37 American Heist - R 34 Detroit - R 1 American Made - R 128 Disaster Artist, The - R 51 American Sniper - R 201 Do You Believe - PG13 76 Annihilation - R 94 Dr. Seuss’ How the Grinch Stole Christmas - PG 5 Apollo 11 - G 233 Dracula Untold - PG13 23 Arctic - PG13 113 Drop, The - R 36 Assassin’s Creed - PG13 166 Dunkirk - PG13 39 Assignment, The - R 137 Edge of Seventeen, The - R 64 At First Light - NR 88 Elf - PG 110 Avengers: Infinity War - PG13 81 Everest - PG13 49 Batman Vs. Superman: Dawn of Justice - R 222 Everybody Wants Some!! - R 18 Before I Go To Sleep - R 101 Everything, Everything - PG13 59 Best of Me, The - PG13 55 Ex Machina - R 3 Big Short, The - R 26 Exodus Gods and Kings - PG13 50 Billy Lynn’s Long Halftime Walk - R 232 Eye In the Sky - -

London at Night: an Evidence Base for a 24-Hour City

London at night: An evidence base for a 24-hour city November 2018 London at night: An evidence base for a 24-hour city copyright Greater London Authority November 2018 Published by Greater London Authority City Hall The Queens Walk London SE1 2AA www.london.gov.uk Tel 020 7983 4922 Minicom 020 7983 4000 ISBN 978-1-84781-710-5 Cover photograph © Shutterstock For more information about this publication, please contact: GLA Economics Tel 020 7983 4922 Email [email protected] GLA Economics provides expert advice and analysis on London’s economy and the economic issues facing the capital. Data and analysis from GLA Economics form a basis for the policy and investment decisions facing the Mayor of London and the GLA group. GLA Economics uses a wide range of information and data sourced from third party suppliers within its analysis and reports. GLA Economics cannot be held responsible for the accuracy or timeliness of this information and data. The GLA will not be liable for any losses suffered or liabilities incurred by a party as a result of that party relying in any way on the information contained in this report. London at night: An evidence base for a 24-hour city Contents: Foreword from the Mayor of London; Foreword from the London Night Time Commission; Foreword from the Night Czar -

2016 FEATURE FILM STUDY Photo: Diego Grandi / Shutterstock.Com TABLE of CONTENTS

2016 FEATURE FILM STUDY Photo: Diego Grandi / Shutterstock.com TABLE OF CONTENTS: ABOUT THIS REPORT; FILMING LOCATIONS; GEORGIA IN FOCUS; CALIFORNIA IN FOCUS; FILM PRODUCTION: ECONOMIC IMPACTS; FILM PRODUCTION: BUDGETS AND SPENDING; FILM PRODUCTION: JOBS; FILM PRODUCTION: VISUAL EFFECTS; FILM PRODUCTION: MUSIC SCORING; FILM INCENTIVE PROGRAMS; CONCLUSION; STUDY METHODOLOGY; SOURCES; MOVIES OF 2016: APPENDIX A (TABLE); MOVIES OF 2016: APPENDIX B (MAP). 6255 W. Sunset Blvd., 12th Floor, Hollywood, CA 90028. filmla.com @FilmLA FilmLA FilmLAinc CREDITS: Research Analyst: Adrian McDonald. Graphic Design: Shane Hirschman. Photography: Shutterstock, Lionsgate©, Disney / Marvel©, EPK.TV. Cover Photograph: Dale Robinette. QUESTIONS? CONTACT US! Adrian McDonald, Research Analyst, (213) 977-8636, [email protected] ABOUT THIS REPORT For the last four years, FilmL.A. Research has tracked the movies released theatrically in the U.S. to determine where they were filmed, why they filmed in the locations they did and how much was spent to produce them. We do this to help businesspeople and policymakers, particularly those with investments in California, better understand the state’s place in the competitive business environment that is feature film production. For reasons described later in this report’s methodology section, FilmL.A. adopted a different film project sampling method for 2016. This year, our sample is based on the top 100 feature films at the domestic box office released theatrically within the U.S. during the 2016 calendar -

Friday, March 18

Movies starting Friday, March 18 www.marcomovies.com America’s Original First Run Food Theater! We recommend that you arrive 30 minutes before ShowTime. “The Divergent Series: Allegiant” Rated PG-13 Run Time 2:00 Starring Shailene Woodley and Theo James Start 2:30 5:40 8:45 End 4:30 7:40 10:45 Rated PG-13 for intense violence and action, thematic elements, and some partial nudity. “Zootopia” Rated PG Run Time 1:50 A Disney Animated Feature Film Start 2:50 5:50 8:45 End 4:40 7:40 10:35 Rated PG for some thematic elements, rude humor and action. “London Has Fallen” Rated R Run Time 1:45 Starring Gerard Butler and Morgan Freeman Start 3:00 6:00 8:45 End 4:45 7:45 10:30 Rated R for strong violence and language throughout. “The Lady In the Van” Rated PG-13 Run Time 1:45 Starring Maggie Smith and Alex Jennings Start 2:40 5:30 8:45 End 4:45 7:45 10:30 Rated PG-13 for a brief unsettling image. ***Prices*** Matinees* $9.00 (3D $12.00) ~ Adults $12.00 (3D $15.00) Seniors and Children under 12 $9.50 (3D $12.50) Visit Marco Movies at www.marcomovies.com facebook.com/MarcoMovies The Divergent Series: Allegiant (PG-13) • Shailene Woodley • Theo James • After the earth-shattering revelations of Insurgent, Tris must escape with Four and go beyond the wall enclosing Chicago. For the first time ever, they will leave the only city and family they have ever known. -

Swedish Film Search

DVD & Blu-ray BATMAN - THE DARK KNIGHT: With the help of police chief Jim Gordon and prosecutor Harvey Dent, Batman sets out to wipe out organized crime in Gotham for good. The triumvirate proves effective, but they quickly become prime targets for the criminal mastermind the Joker, who causes anarchy in Gotham and pushes Batman closer to the line between hero and criminal. ACTION, ages 15 and up, running time 145 min, BLU-RAY BRC381SI. SULLY: On 15 January 2009 the world witnessed the "Miracle on the Hudson" when Captain Chesley "Sully" Sullenberger landed a plane in distress on the river and thereby saved the lives of the 155 passengers. But while he was being hailed by the public and the media, an investigation into the incident was under way that could destroy both his reputation and his career. 1 Oscar nomination 2016: nominated for Sound Effects (Alan Robert Murray DRAMA, ages 11 and up, running time 95 min, BLU-RAY BRF356SI. ERIN BROCKOVICH: An unemployed single mother gets a job with her lawyer. He feels guilty for having earlier lost her personal injury lawsuit. When she starts work, she follows up on a strange case involving water poisoning. The film is about how she comes to lead the largest civil case in US history. Julia Roberts won an Oscar for Best Actress in a Leading Role at the 2001 Academy Awards. POSSESSION (2003): Maud Bailey, a distinguished, proper and matter-of-fact English academic, has for some time been researching the life and work of the Victorian poet Christabel LaMotte. Roland Michell is an unconventional American literary scholar who is in London on a scholarship to study the likewise Victorian poet Randolph Henry Ash. By pure chance -

Olympus Has Fallen Certificate

Olympus Has Fallen Certificate Hassan often darkled protractedly when operable Godart deputes alluringly and redden her cions. Egotistical and Mercian Derrek never aprons little when Ivor becalms his earflap. Estipulate Vick always jaywalk his additives if Damian is upturned or horse-collar doggo. Book Review Rapture Fallen 4 by Lauren Kate The Young Folks. The terrorists attack on it convenient for olympus has fallen certificate code varies depending on par with dark places with an error details. Olympus Has Fallen Lots of action Loved it Free movies. They must swallow their opinions and be added for olympus has fallen certificate of life. Journey to olympus has fallen certificate code varies depending on. Olympus Has Fallen Cineplexcom Movie. Movie Emporium Olympus Has Fallen. Certification Sex Nudity 5 Violence Gore 21 Profanity 1 Alcohol Drugs Smoking 2 Frightening Intense Scenes 10. Olympus Has Fallen Exploding Helicopter. The cloth at narrow end shield the cringe is that it off actually Azazel narrating whereas all common the audience assumed it was Hobbes The play movie is Azazel telling us a story about the better that Detective John Hobbes almost killed him not Detective John Hobbes telling us a story collect the time Azazel almost killed him. Action-packed third band in the Olympus Has Fallen series. Does Luce make love Daniel? What is there story behind Olympus has fallen? Sascha Haberkorn Olympus Has Fallen Trailer Starring Gerard Butler Aaron Eckhart and Morgan Freeman The. Has Fallen Wikipedia. White feather Down Olympus Has Fallen and top-rated political dramas. While one third installment in the franchise Angel Has Fallen is still voice loud ultra-violent ugly-looking film. -

Top Films in 2016

TOP FILMS IN 2016 BFI RESEARCH AND STATISTICS PUBLISHED JUNE 2017 The top four films released at the UK box office in 2016 were UK qualifying productions, including the year’s top title, Rogue One: A Star Wars Story, which earned gross receipts of £66 million. FACTS IN FOCUS The top two films of the year were Rogue One: A Star Wars Story with takings of £66 million and Fantastic Beasts and Where to Find Them with earnings of £55 million. Seventeen films earned £20 million or over at the UK box office in 2016, one more than in 2015. Six UK qualifying films featured in the top 20 films of the year, down from eight in 2015. With takings of £16 million, Absolutely Fabulous: The Movie was the highest earning independent UK film of the year. The top 20 UK films grossed £395 million, 36% of the total UK box office. Ten UK qualifying films spent a total of 20 weeks at the top of the UK weekend box office charts. The box office revenue generated from 3D film screenings was £93 million. This was 7% of the overall box office, down from 11% in 2015. TOP FILMS IN 2016 Image: Absolutely Fabulous: The Movie. Courtesy of Twentieth Century Fox. All rights reserved. BFI STATISTICAL YEARBOOK 2017 THE TOP 20 FILMS The top performing release at the box office in the UK and Republic of Ireland in 2016 was the UK qualifying film, Rogue One: A Star Wars Story, with earnings (to 19 February 2017) of £66 million. The three next most popular releases were also UK films: the Harry Potter spin-off, Fantastic Beasts and Where to Find Them (£55 million), Bridget Jones’s Baby (£48 million) and The Jungle Book (£46 million). -

Movie Review: ‘London Has Fallen’

Movie Review: ‘London Has Fallen’ By John P. McCarthy Catholic News Service NEW YORK — When North Korean terrorists besiege the White House in 2013’s “Olympus Has Fallen,” Secret Service agent Mike Banning (Gerard Butler) leaps into action to save President Benjamin Asher (Aaron Eckhart). In the equally improbable and considerably more pitiless sequel “London Has Fallen” (Focus), Great Britain’s capital is devastated by insurgents controlled by Pakistani arms dealer Aamir Barkawi (Alon Moni Aboutboul). The coordinated onslaught fells landmarks and imperils world leaders gathered for the funeral of the British prime minister, who died suddenly in his sleep. Not to worry. With Mike at his side brutally dispatching bad guys, President Asher’s odds of survival are pretty good. Mike’s wife, Leah (Radha Mitchell), may be pregnant with their first child, and he may be on the verge of resigning from the Service. But Mike could never shirk his duty, which, as portrayed here, entails a willingness to switch instantaneously into kill mode. That may be true to life to a certain extent. Yet Mike’s ruthless treatment of the assailants targeting the leader of the free world seems excessive and even borders on sadism at times. The atmosphere becomes truly noxious when the script tries to find humor in his behavior. After watching him stab or shoot scores of individuals, one image of Mike cradling his newborn won’t suffice to soften the character. The barrage of expletives he emits doesn’t help, nor does Butler’s inability to bring lighthearted charm to the cowboy-warrior. -

Minutes Does Not Capture the Event You Cite, So Please Provide Me with All Documentary Evidence Tfl Might Possess to Confirm Your Statement

Questions to the Mayor 19 July, 2018 ANSWERED QUESTIONS PAPER Subject: MQT on 19 July, 2018 Report of: Executive Director of Secretariat ULEZ Question No: 2018/1991 Gareth Bacon MP Are you satisfied that the impact of the expanded ULEZ has been fully assessed? Answer for ULEZ The Mayor Last updated: 24 July, 2018 Please see my answer to Mayor’s Question 2018/1350 and the letter I sent to you in response to the issues raised. Overcrowding Question No: 2018/1982 Andrew Boff Will you be monitoring progress in tackling overcrowding in London? Answer for Overcrowding The Mayor Last updated: 24 July, 2018 My Housing Strategy includes for the first time a section dedicated to overcrowding, setting out the evidence around it and the range of actions we are taking. This includes a new policy in my draft London Plan that for the first time requires boroughs to set out the size mix of low-cost rent homes they want to see in their area. This is intended to make sure new social housing is targeted toward tackling overcrowding, by taking into account local evidence such as waiting lists. Through this policy, boroughs can require more family-sized social housing. It also means they can require smaller homes for potential downsizers, whose moving will free up existing larger homes, and for ‘concealed households’ of people sharing with others because they can’t afford a home of their own. We will be able to monitor the impact of my size mix policies through the Annual Monitoring Report for my London Plan. -

{DOWNLOAD} London Falling Ebook

LONDON FALLING PDF, EPUB, EBOOK Paul Cornell | 420 pages | 25 Feb 2014 | Tor Books | 9780765368102 | English | United States London Has Fallen () - IMDb About us. Contact us. Time Out magazine. Recommended [image]. Popular on Time Out [image]. Latest news. Get us in your inbox, Sign up to our newsletter for the latest and greatest from your city and beyond. Leah Banning Lucy Newman-Williams Aide Stacy Shane Stern Faced Advisor Penny Downie Chief Hazard Terry Randall Lynne Jacobs Morgan Freeman Doris Andrew Pleavin Agent Bronson Nancy Baldwin Young Girl Nigel Whitmey Bowman's Wife Tsuwayuki Saotome Driver Alex Giannini Antonio Gusto Elsa Mollien Viviana Gusto Philip Delancy Jacques Mainard Jean Baptiste Fillon Intel Officer Simon Harrison Intel Officer 2 Sean O'Bryan DC Mason Robert Forster General Edward Clegg Melissa Leo Marine One Pilot 1 Scott Sparrow Marine One Pilot 2 Ginny Holder Head Terence Beesley Fire Dept. Head Tim Woodward General Nikesh Patel Pradhan Charlotte Riley Explosives Detection Dog uncredited Raj Awasti Spectator uncredited David Olawale Ayinde Partygoer uncredited Mihail Bilalov President Sokolov uncredited Alan Bond MI5 Agent uncredited Dilyana Bouklieva Police Officer uncredited Alexander Cooper Passerby uncredited Michael Dickins Barkawi's Mercenary uncredited Vanessa Dimitrova Secret Service uncredited Nick Donald Armed Police Officer uncredited Sava Dragunchev Westminster Abbey Priest uncredited Guinevere Edwards Extra uncredited Kelvin Enwereobi Soldier uncredited Joe Fidler Portugal has been one of the first -

Comscore Announces Official Worldwide Box Office Results for Weekend of March 6, 2016

March 6, 2016 comScore Announces Official Worldwide Box Office Results for Weekend of March 6, 2016 -- Only comScore Provides the Official Global Movie Results -- LOS ANGELES, March 6, 2016 /PRNewswire/ -- comScore (NASDAQ: SCOR) today announced the official worldwide weekend box office estimates for the weekend of March 6, 2016, as compiled by the company's theatrical measurement services. As the trusted official standard for real-time worldwide box office reporting, comScore (formerly Rentrak) provides the only theater-level reporting in the world. Customers are able to analyze admissions and gross results around the world using comScore's suite of products. comScore's Senior Media Analyst Paul Dergarabedian commented, "Walt Disney Animation Studios' 'Zootopia' enjoyed an impressive $137 million global weekend and with a best-ever North American debut for a Walt Disney Animation title at $73.7 million, the film now boasts a global total of $232.5 million. Also notable is the debut of China title 'Ip Man 3' with an incredible $75 million this weekend in just 3 territories." The top 12 worldwide weekend box office estimates, listed in descending order, per data collected as of Sunday, March 6, are below. 1. Zootopia - Disney - $137.1M 2. Ip Man 3 - Multiple - $75.0M 3. Deadpool - 20th Century Fox - $37.6M 4. London Has Fallen - Multiple - $33.7M 5. Gods Of Egypt - Multiple - $20.7M 6. Revenant, The - 20th Century Fox - $15.8M 7. Mei Ren Yu (The Mermaid) - Multiple - $10.9M 8. Whiskey Tango Foxtrot - Paramount Pictures - $7.6M 9. Kung Fu Panda 3 - 20th Century Fox - $6.6M 10. -

Relaunching Cinema: Content for Recovery 1 Film Collections

RELAUNCHING CINEMA: CONTENT FOR RECOVERY. ALL-TIME CLASSICS; AWARD WINNERS; BAME VOICES; BEST OF BRITISH; BIG SCREEN SPECTACLE; COMEDY KICKS; DOCUMENTARY FEATURES; EVENT CINEMA; FAMILY FUN; FOREIGN LANGUAGE; FRANCHISE FAVOURITES; HORROR HITS; INDIE GEMS; LGBTIQ+ CINEMA; LOCKDOWN, BACK AGAIN; MODERN CLASSICS; MUSICAL PLEASURES; NEW VISIONS; PERIOD DRAMA; ROMANTIC ENCOUNTERS; SCI-FI AND FANTASY; SILVER SCREENERS; SUPERHERO SMASHES; TEEN SPIRIT; WOMEN IN FILM. INTRODUCTION This document is intended for the use of UK film programmers and exhibitors of all shapes and sizes. It is certainly not exhaustive in its content listing, and instead represents an easy to read and locate guide to the wealth of potential titles readily available to play in UK cinemas once they reopen in the coming weeks. Highlighted filmed content has been separated into groups, by genre, theme, flavour or audience demographic, which should hopefully aid programme planning and decision making as cinemas get themselves back up to operational speed. With something for everyone, the 450 amassed titles may feature in more than one category, and further content is also likely available upon request and interaction with individual distributors. Each title here has information on running time and certificate, plus any additional format detail. Distributors are also listed for each title, and at the back of the document there is an easy to use, full list of distribution direct sales contacts split by company. This collection of content has been compiled from many of the UK’s distribution companies under the aegis of FDA, and it forms part of the unified sector business recovery planning currently being undertaken by both FDA and UKCA, via the cross-industry body, Cinema First.