A Novel Hybrid Approach for Retrieval of the Music Information



Recommended publications


Note Staff Symbol Carnatic Name Hindustani Name Chakra Sa C

The Indian Scale & Comparison with Western Staff Notations: In the Sa-Ri-Ga scale, the vowel 'a' is pronounced as the 'a' in 'father' and the vowel 'i' as the 'ee' in 'feet'. In this scale, a high note (swara) is indicated by a dot over it, and a note in the lower octave by a dot under it.

| Chakra | Note | Staff Symbol | Carnatic Name | Hindustani Name |
|---|---|---|---|---|
| MulAadhar (Base of spine) | Sa | C - Natural | Shadaj | Shadaj |
| Swadhishthan (Genitals) | ri | D - Flat | Shuddha Rishabh | Komal ri |
| | Ri | D - Natural | Chatushruti Rishabh | Shudhh Ri |
| Manipur (Navel & Solar Plexus) | ga | E - Flat | Sadharana Gandhara | Komal ga |
| | Ga | E - Natural | Antara Gandhara | Shudhh Ga |
| Anahat (Heart) | Ma | F - Natural | Shudhh Madhyam | Shudhh Madhyam |
| | ma | F - Sharp | Prati Madhyam | Tivra Madhyam |
| Vishudhh (Throat) | Pa | G - Natural | Panchama | Panchama |
| Ajna (Third eye) | dha | A - Flat | Shuddha Dhaivata | Komal Dhaivat |
| | Dha | A - Natural | Chatushruti Dhaivata | Shudhh Dhaivat |
| | ni | B - Flat | Kaisiki Nishada | Komal Nishad |
| Sahsaar (Crown of head) | Ni | B - Natural | Kakali Nishada | Shudhh Nishad |
| | Så | C - Natural | Shadaja | Shadaj |

Property of www.SarodSitar.com Copyright © 2010 Not to be copied or shared without permission.

Short Description of a Few Popular Raags (Alphabetical). The Sanskrut (Sanskrit) pronunciation is Raag and NOT Raga. Each entry gives the name of the raag (with its Karnataki resemblance), aroha and avroha, timing, mood, vadi and samvadi (main swaras), and details.

- Name of Raag (Karnataki Resemblance): Ahir Bhairav (Chakravaka)
- Aroha: Sa ri Ga Ma Pa Ga Ma Dha ni Så
- Avroha: Så ni Dha Pa Ma Ga ri Sa
- Timing: Morning
- Mood: serious, devotional
- Details: It is an old raag obtained by the combination of two raags, Ahiri & Bhairav. It belongs to the Bhairav Thaat. Its first part (poorvang) has the Bhairav ang and the second part has kafi or harpriya ang. -
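The note correspondences listed above can be read as a simple lookup table, which is the kind of symbolic representation a music-retrieval system might start from. The sketch below is illustrative only: the names `SWARA_TO_SEMITONE`, `WESTERN_NAMES` and `swaras_to_western` are hypothetical, and fixing Sa at C follows the excerpt's own convention rather than performance practice, where Sa is movable.

```python
# Semitone offsets from Sa for the swaras named in the excerpt.
# Lowercase spellings are the komal (flat) or tivra (sharp) variants,
# following the excerpt's notation.
SWARA_TO_SEMITONE = {
    "Sa": 0, "ri": 1, "Ri": 2, "ga": 3, "Ga": 4, "Ma": 5,
    "ma": 6, "Pa": 7, "dha": 8, "Dha": 9, "ni": 10, "Ni": 11,
    "Så": 12,  # Sa of the upper octave
}

# Western note names with Sa fixed at C (an assumption taken from the
# excerpt's comparison; Sa can be placed on any pitch in practice).
WESTERN_NAMES = [
    "C", "D flat", "D", "E flat", "E", "F",
    "F sharp", "G", "A flat", "A", "B flat", "B",
]


def swaras_to_western(phrase: str) -> list[str]:
    """Convert a space-separated swara phrase to Western note names."""
    return [WESTERN_NAMES[SWARA_TO_SEMITONE[s] % 12] for s in phrase.split()]


# Aroha of Ahir Bhairav as given in the excerpt.
aroha = "Sa ri Ga Ma Pa Ga Ma Dha ni Så"
print(swaras_to_western(aroha))
```

Octave information is deliberately discarded by the modulo step, so the upper Så prints as C; a fuller representation would keep the raw semitone offset instead.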

The Rich Heritage of Dhrupad Sangeet in Pushtimarg On

Copyright © 2006 www.vallabhkankroli.org - All Rights Reserved by Shree Vakpati Foundation - Baroda

|| Shree Dwarkesho Jayati || || Shree Vallabhadhish Vijayate ||

The Rich Heritage of Dhrupad Sangeet in Pushtimarg on www.vallabhkankroli.org

Reference: 8th Year Text Book of Pushtimargiya Patrachaar by Shree Vakpati Foundation - Baroda

Inspiration: PPG 108 Shree Vrajeshkumar Maharajshri - Kankroli; PPG 108 Shree Vagishkumar Bawashri - Kankroli

Contents

- Meaning of Sangeet
- Naad, Shruti and Swar
- Definition of Raga
- Rules for Defining Ragas
- The Defining Elements in the Raga
- Vadi, Samvadi, Anuvadi, Vivadi [Sonant, Consonant, Assonant, Dissonant]
- Aroha, Avaroha [Ascending, Descending]
- Twelve Swaras of the Octave -

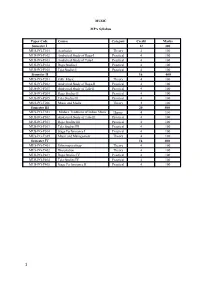

MUSIC MPA Syllabus Paper Code Course Category Credit Marks

MUSIC MPA Syllabus

| Paper Code | Course | Category | Credit | Marks |
|---|---|---|---|---|
| Semester I | | | 12 | 300 |
| MUS-PG-T101 | Aesthetics | Theory | 4 | 100 |
| MUS-PG-P102 | Analytical Study of Raga-I | Practical | 4 | 100 |
| MUS-PG-P103 | Analytical Study of Tala-I | Practical | 4 | 100 |
| MUS-PG-P104 | Raga Studies I | Practical | 4 | 100 |
| MUS-PG-P105 | Tala Studies I | Practical | 4 | 100 |
| Semester II | | | 16 | 400 |
| MUS-PG-T201 | Folk Music | Theory | 4 | 100 |
| MUS-PG-P202 | Analytical Study of Raga-II | Practical | 4 | 100 |
| MUS-PG-P203 | Analytical Study of Tala-II | Practical | 4 | 100 |
| MUS-PG-P204 | Raga Studies II | Practical | 4 | 100 |
| MUS-PG-P205 | Tala Studies II | Practical | 4 | 100 |
| MUS-PG-T206 | Music and Media | Theory | 4 | 100 |
| Semester III | | | 20 | 500 |
| MUS-PG-T301 | Modern Traditions of Indian Music | Theory | 4 | 100 |
| MUS-PG-P302 | Analytical Study of Tala-III | Practical | 4 | 100 |
| MUS-PG-P303 | Raga Studies III | Practical | 4 | 100 |
| MUS-PG-P303 | Tala Studies III | Practical | 4 | 100 |
| MUS-PG-P304 | Stage Performance I | Practical | 4 | 100 |
| MUS-PG-T305 | Music and Management | Theory | 4 | 100 |
| Semester IV | | | 16 | 400 |
| MUS-PG-T401 | Ethnomusicology | Theory | 4 | 100 |
| MUS-PG-T402 | Dissertation | Theory | 4 | 100 |
| MUS-PG-P403 | Raga Studies IV | Practical | 4 | 100 |
| MUS-PG-P404 | Tala Studies IV | Practical | 4 | 100 |
| MUS-PG-P405 | Stage Performance II | Practical | 4 | 100 |

Semester I

MUS-PG-CT101: Aesthetic

Course Detail: The course will primarily provide an overview of music and allied issues like Aesthetics. The discussions will range from Rasa and its varieties [According to Bharat, Abhinavagupta, and others], thoughts of Rabindranath Tagore and Abanindranath Tagore on music to aesthetics and general comparative. -

University of Mauritius Mahatma Gandhi Institute

University of Mauritius Mahatma Gandhi Institute

Regulations and Programme of Studies: B.A (Hons) Performing Arts (Vocal Hindustani) (Review)

PART I General Regulations for B.A (Hons) Performing Arts (Vocal Hindustani)

1. Programme title: B.A (Hons) Performing Arts (Vocal Hindustani)

2. Objectives: To equip the student with further knowledge and skills in Vocal Hindustani Music and proficiency in the teaching of the subject.

3. General Entry Requirements: In accordance with the University General Entry Requirements for admission to undergraduate degree programmes.

4. Programme Requirement: A post A-Level MGI Diploma in Performing Arts (Vocal Hindustani) or an alternative qualification acceptable to the University of Mauritius.

5. Programme Duration

| | Normal | Maximum |
|---|---|---|
| Degree (P/T) | 2 years (4 semesters) | 4 years (8 semesters) |

6. Credit System

6.1 Introduction

6.1.1 The B.A (Hons) Performing Arts (Vocal Hindustani) programme is built on a 3-year part-time Diploma, which accounts for 60 credits.

6.1.2 The Programme is structured on the credit system and is run on a semester basis.

6.1.3 A semester has a duration of 15 weeks (excluding the examination period).

6.1.4 A credit is a unit of measure, and the Programme is based on the following guideline: 15 hours of lectures and/or tutorials: 1 credit.

6.2 Programme Structure: The B.A Programme is made up of a number of modules carrying 3 credits each, except the Dissertation, which carries 9 credits.

6.3 Minimum Credits Required for the Award of the Degree

6.3.1 The MGI Diploma already accounts for 60 credits. -

NI 6340 MASHKOOR ALI KHAN, Vocals ANINDO CHATTERJEE, Tabla KEDAR NAPHADE, Harmonium MICHAEL HARRISON & SHAMPA BHATTACHARYA, Tanpuras

From left to right: Pandit Anindo Chatterjee, Shampa Bhattacharya, Ustad Mashkoor Ali Khan, Michael Harrison, Kedar Naphade. Photo credit: Ira Meistrich, edited by Tina Psoinos.

NI 6340. MASHKOOR ALI KHAN, vocals; ANINDO CHATTERJEE, tabla; KEDAR NAPHADE, harmonium; MICHAEL HARRISON & SHAMPA BHATTACHARYA, tanpuras

TRANSCENDENCE

- Raga Desh: Man Rang Dani, drut bandish in Jhaptal – 9:45
- Raga Shahana: Janeman Janeman, madhyalaya bandish in Teental – 14:17
- Raga Jhinjhoti: Daata Tumhi Ho, madhyalaya bandish in Rupak tal; Aaj Man Basa Gayee, drut bandish in Teental – 25:01
- Raga Bhupali: Deem Dara Dir Dir, tarana in Teental – 4:57
- Raga Basant: Geli Geli Andi Andi Dole, drut bandish in Ektal – 9:05

Recorded on 29-30 May, 2015 at Academy of Arts and Letters, New York, NY. Produced and Engineered by Adam Abeshouse. Edited, Mixed and Mastered by Adam Abeshouse. Co-produced by Shampa Bhattacharya, Michael Harrison and Peter Robles. Sponsored by the American Academy of Indian Classical Music (AAICM). Photography, Cover Art and Design by Tina Psoinos.

A True Master of Khayal; Recollections of a Disciple

In 1999 I was invited to meet Ustad Mashkoor Ali Khan, or Khan Sahib as we respectfully call him, and to accompany him on tanpura at an Indian music festival in New Jersey.

at Carnegie Hall, the Rubin Museum of Art and Raga Music Circle in New York, MITHAS in Boston, Raga Samay Festival in Philadelphia and many other venues. His awards are many, but include the Sangeet Natak Akademi Puraskar by the Sangeet Natak Akademi, New Delhi, 2015 and the Gandharva Award by the Hindusthan Art & Music Society, Kolkata, -

Stephen M. Slawek Curriculum Vitae

Stephen M. Slawek Curriculum Vitae

10008 Sausalito Drive, Austin, TX 78759-6106, (512) 794-2981

Butler School of Music, The University of Texas at Austin, Austin, TX 78712-0435, (512) 471-0671

Education

- 1986 Ph.D. (Ethnomusicology) University of Illinois at Urbana-Champaign
- 1978 M.A. (Ethnomusicology) University of Hawaii at Manoa
- 1976 M. Mus. (Sitar) Banaras Hindu University
- 1974 B. Mus. (Sitar) Banaras Hindu University
- 1973 Diploma (Tabla) Banaras Hindu University
- 1971 B.A. (Biology) University of Pennsylvania

Employment

- 1999-present Professor of Ethnomusicology, The University of Texas at Austin
- 2000 Visiting Professor, Rice University, Hanszen and Lovett College
- 1989-1999 Associate Professor of Ethnomusicology and Asian Studies, The University of Texas at Austin
- 1985-1989 Assistant Professor of Ethnomusicology and Asian Studies, The University of Texas at Austin
- 1983-1985 Instructor of Ethnomusicology and Asian Studies, The University of Texas at Austin
- 1983 Visiting Lecturer of Ethnomusicology, University of Illinois at Urbana-Champaign
- 1982 Graduate Assistant, School of Music, University of Illinois at Urbana-Champaign
- 1978-1980 Archivist, Archives of Ethnomusicology, School of Music, University of Illinois at Urbana-Champaign

Scholarship and Creative Activities

Books

- Under contract: The Classical Music of India: Going Global with Ravi Shankar. New York and London: Routledge Press.
- 1997 Musical Instruments of North India: Eighteenth Century Portraits by Baltazard Solvyns. Delhi: Manohar Publishers. Pp. i-vi and 1-153. (with Robert L. Hardgrave)
- 1987 Sitar Technique in Nibaddh Forms. New Delhi: Motilal Banarsidass, Indological Publishers and Booksellers. Pp. i-xix and 1-232.

Articles in scholarly journals

- 1996 In Raga, in Tala, Out of Culture?: Problems and Prospects of a Hindustani Musical Transplant in Central Texas. -

Download Full Length Paper

International Journal of Research in Social Sciences, Vol. 8 Issue 10, October 2018, ISSN: 2249-2496, Impact Factor: 7.081. Journal Homepage: http://www.ijmra.us. Double-Blind Peer Reviewed Refereed Open Access International Journal. Included in the International Serial Directories; Indexed & Listed at: Ulrich's Periodicals Directory ©, U.S.A., Open J-Gate, as well as in Cabell’s Directories of Publishing Opportunities, U.S.A.

The Importance of Music as a Foundation of Mental Peace

Dr. Sandhya Arora, Associate Professor, Department of Music, S.S. Khanna Girls’ Degree College, Allahabad.

MUSIC FOR THE PEACE OF MIND

Shakespeare once wrote: "If music be the food of love, play on..." Profound words, true, but he failed to mention that music is nourishment not just for the heart but also for the soul. Music surrounds our lives: we hear it on the radio, on television, and from our car and home stereos. We come across it in the mellifluous tunes of a classical concert or in the devotional strains of a bhajan, the wedding band, or the reaper in the fields breaking into song to express the joy of life. Even warbling in the bathroom gives us a happy start to the day. Music can delight all the senses and inspire every fiber of our being. Music has the power to soothe and relax, and to bring us comfort and embracing joy! Music subtly bypasses the intellectual stimulus in the brain and moves directly to our subconscious. There is music for every mood and for every occasion. Many cultures recognize the importance of music and sound as a healing power. -

Track Name Singers VOCALS 1 RAMKALI Pt. Bhimsen Joshi 2 ASAWARI TODI Pt

VOCALS

| Track | Track name | Singers |
|---|---|---|
| 1 | RAMKALI | Pt. Bhimsen Joshi |
| 2 | ASAWARI TODI | Pt. Bhimsen Joshi |
| 3 | HINDOLIKA | Pt. Bhimsen Joshi |
| 4 | Thumri-Bhairavi | Pt. Bhimsen Joshi |
| 5 | SHANKARA | MANIK VERMA |
| 6 | NAT MALHAR | MANIK VERMA |
| 7 | POORIYA | MANIK VERMA |
| 8 | PILOO | MANIK VERMA |
| 9 | BIHAGADA | PANDIT JASRAJ |
| 10 | MULTANI | PANDIT JASRAJ |
| 11 | NAYAKI KANADA | PANDIT JASRAJ |
| 12 | DIN KI PURIYA | PANDIT JASRAJ |
| 13 | BHOOPALI | MALINI RAJURKAR |
| 14 | SHANKARA | MALINI RAJURKAR |
| 15 | SOHONI | MALINI RAJURKAR |
| 16 | CHHAYANAT | MALINI RAJURKAR |
| 17 | HAMEER | MALINI RAJURKAR |
| 18 | ADANA | MALINI RAJURKAR |
| 19 | YAMAN | MALINI RAJURKAR |
| 20 | DURGA | MALINI RAJURKAR |
| 21 | KHAMAJ | MALINI RAJURKAR |
| 22 | TILAK-KAMOD | MALINI RAJURKAR |
| 23 | BHAIRAVI | MALINI RAJURKAR |
| 24 | ANAND BHAIRAV | PANDIT JITENDRA ABHISHEKI |
| 25 | RAAG MALA | PANDIT JITENDRA ABHISHEKI |
| 26 | KABIR BHAJAN | PANDIT JITENDRA ABHISHEKI |
| 27 | SHIVMAT BHAIRAV | PANDIT JITENDRA ABHISHEKI |
| 28 | LALIT | BEGUM PARVEEN SULTANA |
| 29 | JOG | BEGUM PARVEEN SULTANA |
| 30 | GUJRI JODI | BEGUM PARVEEN SULTANA |
| 31 | KOMAL BHAIRAV | BEGUM PARVEEN SULTANA |
| 32 | MARUBIHAG | PANDIT VASANTRAO DESHPANDE |
| 33 | THUMRI MISHRA KHAMAJ | PANDIT VASANTRAO DESHPANDE |
| 34 | JEEVANPURI | PANDIT KUMAR GANDHARVA |
| 35 | BAHAR | PANDIT KUMAR GANDHARVA |
| 36 | DHANBASANTI | PANDIT KUMAR GANDHARVA |
| 37 | DESHKAR | PANDIT KUMAR GANDHARVA |
| 38 | GUNAKALI | PANDIT KUMAR GANDHARVA |
| 39 | BILASKHANI-TODI | PANDIT KUMAR GANDHARVA |
| 40 | KAMOD | PANDIT KUMAR GANDHARVA |
| 41 | MIYA KI TODI | USTAD RASHID KHAN |
| 42 | BHATIYAR | USTAD RASHID KHAN |
| 43 | MIYA KI TODI | USTAD RASHID KHAN |
| 44 | BHATIYAR | USTAD RASHID KHAN |
| 45 | BIHAG | ASHWINI BHIDE-DESHPANDE |
| 46 | BHINNA SHADAJ | ASHWINI BHIDE-DESHPANDE |
| 47 | JHINJHOTI | ASHWINI BHIDE-DESHPANDE |
| 48 | NAYAKI KANADA | ASHWINI - |

The Thaat-Ragas of North Indian Classical Music: the Basic Atempt to Perform Dr

The Thaat-Ragas of North Indian Classical Music: The Basic Atempt to Perform

Dr. Sujata Roy Manna

ABSTRACT

Indian classical music is divided into two streams, Hindustani music and Carnatic music. Though the rules and regulations of the Indian Shastras provide both bindings and liberties for the musicians, one can use one’s innovations while performing. As Indian music needs to be learnt under the guidance of a Master or Guru, scriptural guidelines are never sufficient for a learner.

Keywords: Raga, Thaat, Music, Performing, Alapa.

There are two streams of Classical music of India – the North Indian i.e., Hindustani music and the South Indian i.e., Carnatic music. The vast area of Indian Classical music rests upon the foremost criterion – the origin of the Ragas, named the Thaats. In the Hindustani system, there are 10 Thaats. Let us look at the origin of the 10 Thaats as well as their Thaat-ragas (i.e., the Ragas named according to their origin). The Indian Shastras throw light on the rules and regulations, the nature of Ragas, the process of performing these, and the liberty and bindings of the Ragas while

Ragas are to be performed with the basic help of their Thaats. Hence, we may compare the Thaats with the skeleton of a creature, whereas the body can be compared with the Raga. The names of the 10 (ten) Thaats of the North Indian Classical Music system i.e., Hindustani music are as follows:

| Sl. | Thaats | Ragas |
|---|---|---|
| 01. | Vilabal | Vilabal, Alhaiya–Vilaval, Bihag, Durga, Deshkar, Shankara etc. |
| 02. | Kalyan | Yaman, Bhupali, Hameer, Kedar, Kamod etc. |
| 03. | Khamaj | Khamaj, Desh, Tilakkamod, Tilang, Jayjayanti / Jayjayvanti etc. - |

Music (Hindustani Music) Vocal and Instrumental (Sitar)

SEMESTER – III (THEORY)

INDIAN MUSIC (HINDUSTANI MUSIC) VOCAL AND INSTRUMENTAL (SITAR)

Syllabus and Courses of Study in Indian Music for B.A. under CBCS for the Examination to be held in Dec. 2017, 2018 and 2019

- COURSE CODE: UMUTC 301
- CREDITS: 2 (2 hrs per week)
- DURATION OF EXAMINATION: 2½ Hrs
- MAX. MARKS: 40 (32+08)
- EXTERNAL EXAM: 32 (Marks)
- INTERNAL EXAM: 08 (Marks)

APPLIED THEORY AND HISTORY OF INDIAN MUSIC

SECTION – A

Prescribed Ragas: 1. Bhupali 2. Bheemplasi 3. Khamaj

1. (A) Writing description of the prescribed ragas. (B) Writing of notation of Chota Khayal/Razakhani Gat or Maseetkhani Gat in any one of the above prescribed Ragas in Pandit V.N. Bhatkhandey notation system with a few Tanas/Todas.

2. (A) Description of the below mentioned Talas, giving single, Dugun, Tigun & Chougun layakaries in Pandit V.N. Bhatkhandey notation system: 1. Chartal 2. Tilwara Taal (including previous Semester’s Talas). (B) Comparative study of the ragas with their similar Ragas (Samprakritik Ragas) & identifying the Swar combinations of prescribed Ragas.

SECTION – B

1. Detailed study of the following Musicology: Meend, Murki, Gamak, Tarab, Chikari, Zamzama, Ghaseet, Krintan, Avirbhava, Tirobhava

2. Detailed study of the following: Khayal (Vilambit & Drut) & Razakhani/Maseetkhani Gat, Dhrupad

3. Establishment of shudh swaras on 22 Shruties according to ancient, medieval & modern scholars

4. Classification of Indian Musical instruments with detailed study of your own instrument (Sitar/Taanpura)

SECTION – C

1. History of Indian Music of Ancient period with special reference to Grantha/books

2. Gamak and its kinds.

3. Origin of Notation System & its merits & demerits

4. -

Emotions and Their Intensity in Hindustani Classical Music Using

EMOTIONS AND THEIR INTENSITY IN MUSIC

Emotions and Their Intensity in Hindustani Classical Music Using Two Rating Interfaces

Junmoni Borgohain¹, Raju Mullick², Gouri Karambelkar², Priyadarshi Patnaik¹˒³, Damodar Suar¹

¹ Humanities and Social Sciences, Indian Institute of Technology Kharagpur, West Bengal
² Advanced Technology Development Centre, Indian Institute of Technology Kharagpur, West Bengal
³ Rekhi Centre of Excellence for the Science of Happiness, Indian Institute of Technology Kharagpur, West Bengal

Abstract

One of the most popular techniques for assessing music is the dimensional model. Although it is used in numerous studies, the discrete model is of great importance in the Indian tradition. This study assesses two discrete interfaces for continuous rating of Hindustani classical music. The first interface, the Discrete emotion wheel (DEW), captures the range of eight aesthetic emotions relevant to Hindustani classical music and cited in the Natyashastra, and the second interface, the Intensity-rating emotion wheel (IEW), assesses emotional arousal and identifies whether the additional cognitive load interferes with accurate rating. Forty-eight participants rated emotions expressed by five Western and six Hindustani classical clips. Results suggest that both interfaces work effectively for both music genres, and the intensity-rating emotion wheel was able to capture arousal in the clips where they show higher intensities in the dominant emotions. Implications of the tool for assessing the relation between musical structures, emotions and time are also discussed.

Keywords: Arousal, continuous response, discrete emotions, Hindustani classical music, interface

Continuous assessment of music stimuli emerged as a tool in the 1980s, a field dominated by self-report or post-performance ratings till then (Kallinen, Saari, Ravaja, & Laarni, 2005; Schubert, 2001). -

INDIA INTERNATIONAL CENTRE Volume XXVIII

diary Price Re.1/- INDIA INTERNATIONAL CENTRE volume XXVIII. No. 2 March – April 2014

Same Difference

MUSIC APPRECIATION PROMOTION: The North–South Convergence in Carnatic and Hindustani Music. Panelists: Subhadra Desai, Saraswati Rajagopalan and Suanshu Khurana. Chair: Vidya Shah, March 21

PERFORMANCE: A Celebration of Carnatic and Hindustani Music. Carnatic Vocal Recital by Sudha Raghunathan. Hindustani Vocal Recital by Meeta Pandit. Collaboration: Spirit of India, March 22

This was a thought-provoking discussion-demonstration on the convergence between Carnatic and Hindustani music, two systems divided by a putatively common musical language. The audience certainly converged; as to the systems, it was largely a revelation of their divergences, though a very interesting, and at times even amusing one.

Carnatic training begins with the raga Mayamalavagaula,

composed Carnatic music in Hindustani ragas as well as NottuSvarams inspired by British band music; in recent years, Carnatic ragas such as Hamsadhwani and Charukeshi have been adopted enthusiastically by the north; Maharashtrian abhangs have become staples at Carnatic concerts; Indian classical music has shown a willingness to naturalise Western imports such as the violin, guitar, saxophone, mandolin and even the organ. So what this really means is that you have two distinct systems, with often disparate ideas about what constitutes good music production, but tantalisingly similar lexicons and grammatical constructs that occasionally become the basis for some interesting conversations.

These insights kept coming to mind the next evening, when the clamour of birdsong at the picturesque Rose Garden heralded performances by Meeta Pandit of the Gwalior gharana and then Sudha Raghunathan, the Madras Music Academy’s Sangeeta Kalanidhi for this year.