Emotions and Their Intensity in Hindustani Classical Music Using

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

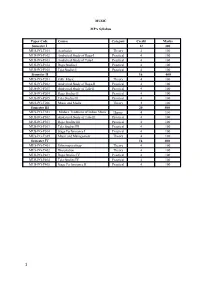

Note Staff Symbol Carnatic Name Hindustani Name Chakra Sa C

The Indian Scale & Comparison with Western Staff Notations

In the Sa-Ri-Ga scale, the vowel 'a' is pronounced as 'a' in 'father' and the vowel 'i' as 'ee' in 'feet'. In this scale, a high note (swara) is indicated by a dot over it, and a note in the lower octave by a dot under it.

| Note | Staff Symbol | Carnatic Name | Hindustani Name | Chakra |
|---|---|---|---|---|
| Sa | C - Natural | Shadaj | Shadaj | MulAadhar (Base of spine) |
| ri | D - Flat | Shuddha Rishabh | Komal ri | Swadhishthan (Genitals) |
| Ri | D - Natural | Chatushruti Rishabh | Shudhh Ri | |
| ga | E - Flat | Sadharana Gandhara | Komal ga | Manipur (Navel & Solar Plexus) |
| Ga | E - Natural | Antara Gandhara | Shudhh Ga | |
| Ma | F - Natural | Shudhh Madhyam | Shudhh Madhyam | Anahat (Heart) |
| ma | F - Sharp | Prati Madhyam | Tivra Madhyam | |
| Pa | G - Natural | Panchama | Panchama | Vishudhh (Throat) |
| dha | A - Flat | Shuddha Dhaivata | Komal Dhaivat | Ajna (Third eye) |
| Dha | A - Natural | Chatushruti Dhaivata | Shudhh Dhaivat | |
| ni | B - Flat | Kaisiki Nishada | Komal Nishad | |
| Ni | B - Natural | Kakali Nishada | Shudhh Nishad | Sahsaar (Crown of head) |
| Så | C - Natural | Shadaja | Shadaj | |

Property of www.SarodSitar.com. Copyright © 2010. Not to be copied or shared without permission.

Short description of Few Popular Raags (Alphabetical)

Sanskrut (Sanskrit) pronunciation is Raag and NOT Raga. Each entry gives the name of the raag (with its Karnataki resemblance), aroha and avroha, timing, mood, vadi and samvadi (main swaras), and details.

Name of Raag (Karnataki Resemblance): Ahir Bhairav (Chakravaka)

Aroha: Sa ri Ga Ma Pa Ga Ma Dha ni Så

Avroha: Så ni Dha Pa Ma Ga ri Sa

Timing: Morning

Mood: serious, devotional

Details: It is an old raag obtained by the combination of two raags, Ahiri & Bhairav. It belongs to the Bhairav Thaat. Its first part (poorvang) has the Bhairav ang and the second part has kafi or harpriya ang.
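The note table above lends itself to a small lookup structure. The following Python sketch is an illustration only and is not part of the source: it assumes Sa is fixed at C, as the table does, and the names `SWARA_TO_STAFF` and `to_staff`, along with the `C'` spelling for the upper-octave Sa, are hypothetical choices made for this example.

```python
# Illustrative sketch: swara-to-staff lookup based on the note table above.
# Assumption: Sa is fixed at C, as in the table. All names here are hypothetical.
SWARA_TO_STAFF = {
    "Sa": "C",
    "ri": "D flat",
    "Ri": "D",
    "ga": "E flat",
    "Ga": "E",
    "Ma": "F",
    "ma": "F sharp",
    "Pa": "G",
    "dha": "A flat",
    "Dha": "A",
    "ni": "B flat",
    "Ni": "B",
    "Så": "C'",  # upper-octave Sa; the trailing apostrophe is our own marker
}


def to_staff(phrase):
    """Translate a space-separated swara phrase into staff note names."""
    return [SWARA_TO_STAFF[swara] for swara in phrase.split()]


# Aroha of Ahir Bhairav as given in the excerpt above.
AHIR_BHAIRAV_AROHA = "Sa ri Ga Ma Pa Ga Ma Dha ni Så"

if __name__ == "__main__":
    print(to_staff(AHIR_BHAIRAV_AROHA))
```

A plain dictionary keeps the case distinction between the flat and natural forms (for example `ri` versus `Ri`) explicit, which mirrors how the table itself distinguishes them.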

A Novel Hybrid Approach for Retrieval of the Music Information

International Journal of Applied Engineering Research, ISSN 0973-4562, Volume 12, Number 24 (2017), pp. 15011-15017. © Research India Publications. http://www.ripublication.com

A Novel Hybrid Approach for Retrieval of the Music Information

Varsha N. Degaonkar, Research Scholar, Department of Electronics and Telecommunication, JSPM’s Rajarshi Shahu College of Engineering, Pune, Savitribai Phule Pune University, Maharashtra, India. Orcid Id: 0000-0002-7048-1626

Anju V. Kulkarni, Professor, Department of Electronics and Telecommunication, Dr. D. Y. Patil Institute of Technology, Pimpri, Pune, Savitribai Phule Pune University, Maharashtra, India. Orcid Id: 0000-0002-3160-0450

Abstract

The performance of existing search engines for retrieval of images is facing challenges resulting in inappropriate noisy data rather than accurate information searched for. The reason for this being data retrieval methodology is mostly based on information in text form input by the user. In certain areas, human computation can give better results than machines. In the proposed work, two approaches are presented. In the first approach, Unassisted and Assisted Crowd Sourcing techniques are implemented to extract attributes for the classical music, by involving users (players) in the activity.

... like automatic music annotation, music analysis, music synthesis, etc. Most of the existing human computation systems operate without any machine contribution. With the domain knowledge, human computation can give best results if machines are taken in a loop. In recent years, use of smart mobile devices is increased; there is a huge amount of multimedia data generated and uploaded to the web every day. This data, such as music, field sounds, broadcast news, and television shows, contain sounds from a wide variety of

Master of Performing Arts (Vocal & Instrumental)

MASTER OF PERFORMING ARTS (VOCAL & INSTRUMENTAL)

I SEMESTER

Course - 101 (Applied Theory)

Credits: 4, Marks: 80, Internal Assessment: 20, Total: 100

Course Objectives:

1. To critically appreciate a music concert.
2. To understand and compare the ragas and talas prescribed for practicals.
3. To write compositions in the prescribed notation system.
4. To introduce students to staff notation.

Course Content:

I. Theoretical study of Ragas and Talas prescribed for practical and their comparative study wherever possible.

II. Reading and writing of Notations of compositions, Alap, Taan etc. in the Ragas and Talas with prescribed Layakaris.

III. Elementary Knowledge of Staff Notation.

IV. Critical appreciation of Music concert.

Bibliographies:

a. Dr. Bahulkar, S. Kalashastra Visharad (Vol. 1 - 4). Mumbai: Sanskar Prakashan.

b. Dr. Sharma, M. Music India. A. B. H. Publishing House.

c. Dr. Vasant. Sangeet Visharad. Hathras: Sangeet Karyalaya.

d. Rajopadhyay, V. Sangeet Shastra. Akhil Bhartiya Gandharva Vidhyalaya.

e. Rathod, B. Thumri. Jaipur: University Book House Pvt. Ltd.

f. Shivpuji, G. Lay Shastra. Bhopal: Madhya Pradesh Hindi Granth.

Course - 102 (General Theory)

Credits: 4, Marks: 80, Internal Assessment: 20, Total: 100

Course Objectives:

1. To study Aesthetics in Music.
2. To appreciate the aesthetic aspects of different forms of music.

Course Content:

I. Definition of Aesthetics and its Application in Music.

II. Aesthetical principles of Different Gharanas.

III. Aesthetical aspects of different forms of Music: Dhrupad, Dhamar, Khayal, Thumri, Tappa etc.

IV. Merits and demerits of a vocalist.

Bibliographies:

a. Bosanquet, B. (2001). The concept of Aesthetics. New Delhi: Sethi Publishing Company.

b. Dr. Bahulkar, S. Kalashastra Visharad (Vol.

The Rich Heritage of Dhrupad Sangeet in Pushtimarg On

Copyright © 2006 www.vallabhkankroli.org - All Rights Reserved by Shree Vakpati Foundation - Baroda

|| Shree Dwarkesho Jayati || || Shree Vallabhadhish Vijayate ||

The Rich Heritage Of Dhrupad Sangeet in Pushtimarg on www.vallabhkankroli.org

Reference: 8th Year Text Book of Pushtimargiya Patrachaar by Shree Vakpati Foundation - Baroda

Inspiration: PPG 108 Shree Vrajeshkumar Maharajshri - Kankroli; PPG 108 Shree Vagishkumar Bawashri - Kankroli

Contents

- Meaning of Sangeet
- Naad, Shruti and Swar
- Definition of Raga
- Rules for Defining Ragas
- The Defining Elements in the Raga
- Vadi, Samvadi, Anuvadi, Vivadi [Sonant, Consonant, Assonant, Dissonant]
- Aroha, avaroha [Ascending, Descending]
- Twelve Swaras of the Octave

About Emotions There Are 8 Primary Emotions. You Are Born with These

About Emotions

There are 8 primary emotions. You are born with these emotions wired into your brain. That wiring causes your body to react in certain ways and for you to have certain urges when the emotion arises. Here is a list of primary emotions:

Eight Primary Emotions

- Anger: fury, outrage, wrath, irritability, hostility, resentment and violence.
- Sadness: grief, sorrow, gloom, melancholy, despair, loneliness, and depression.
- Fear: anxiety, apprehension, nervousness, dread, fright, and panic.
- Joy: enjoyment, happiness, relief, bliss, delight, pride, thrill, and ecstasy.
- Interest: acceptance, friendliness, trust, kindness, affection, love, and devotion.
- Surprise: shock, astonishment, amazement, astound, and wonder.
- Disgust: contempt, disdain, scorn, aversion, distaste, and revulsion.
- Shame: guilt, embarrassment, chagrin, remorse, regret, and contrition.

All other emotions are made up by combining these basic 8 emotions. Sometimes we have secondary emotions, an emotional reaction to an emotion. We learn these. Some examples of these are:

- Feeling shame when you get angry.
- Feeling angry when you have a shame response (e.g., hurt feelings).
- Feeling fear when you get angry (maybe you’ve been punished for anger).

There are many more. These are NOT wired into our bodies and brains, but are learned from our families, our culture, and others. When you have a secondary emotion, the key is to figure out what the primary emotion, the feeling at the root of your reaction, is, so that you can take an action that is most helpful.

Finding the Golden Mean: the Overuse, Underuse, and Optimal Use of Character Strengths

Counselling Psychology Quarterly, ISSN: 0951-5070 (Print) 1469-3674 (Online). Journal homepage: https://www.tandfonline.com/loi/ccpq20

To cite this article: Ryan M. Niemiec (2019): Finding the golden mean: the overuse, underuse, and optimal use of character strengths, Counselling Psychology Quarterly, DOI: 10.1080/09515070.2019.1617674

To link to this article: https://doi.org/10.1080/09515070.2019.1617674

Published online: 20 May 2019.

Ryan M. Niemiec, VIA Institute on Character, Cincinnati, OH, USA

ARTICLE HISTORY: Received 28 February 2019; Accepted 8 May 2019

KEYWORDS: Character strengths; strengths overuse; strengths underuse; optimal use; second wave positive psychology; golden mean

ABSTRACT

The science of well-being has catalyzed a tremendous amount of research with no area more robust in application and impact than the science of character strengths. As the empirical links between character strengths and positive outcomes rapidly grow, the research around strength imbalances and the use of strengths with problems and conflicts is nascent. The use of character strengths in understanding and handling life suffering as well as emerging from it, is particularly aligned within second wave positive psychology. Areas of particular promise include strengths overuse and strengths underuse, alongside its companion of strengths optimal use. The latter is viewed as the golden mean of character strengths which refers to the expression of the right combination of strengths, to the right degree, and in the right situation.

MUSIC MPA Syllabus Paper Code Course Category Credit Marks

MUSIC MPA Syllabus

| Paper Code | Course | Category | Credit | Marks |
|---|---|---|---|---|
| Semester I | | | 12 | 300 |
| MUS-PG-T101 | Aesthetics | Theory | 4 | 100 |
| MUS-PG-P102 | Analytical Study of Raga-I | Practical | 4 | 100 |
| MUS-PG-P103 | Analytical Study of Tala-I | Practical | 4 | 100 |
| MUS-PG-P104 | Raga Studies I | Practical | 4 | 100 |
| MUS-PG-P105 | Tala Studies I | Practical | 4 | 100 |
| Semester II | | | 16 | 400 |
| MUS-PG-T201 | Folk Music | Theory | 4 | 100 |
| MUS-PG-P202 | Analytical Study of Raga-II | Practical | 4 | 100 |
| MUS-PG-P203 | Analytical Study of Tala-II | Practical | 4 | 100 |
| MUS-PG-P204 | Raga Studies II | Practical | 4 | 100 |
| MUS-PG-P205 | Tala Studies II | Practical | 4 | 100 |
| MUS-PG-T206 | Music and Media | Theory | 4 | 100 |
| Semester III | | | 20 | 500 |
| MUS-PG-T301 | Modern Traditions of Indian Music | Theory | 4 | 100 |
| MUS-PG-P302 | Analytical Study of Tala-III | Practical | 4 | 100 |
| MUS-PG-P303 | Raga Studies III | Practical | 4 | 100 |
| MUS-PG-P303 | Tala Studies III | Practical | 4 | 100 |
| MUS-PG-P304 | Stage Performance I | Practical | 4 | 100 |
| MUS-PG-T305 | Music and Management | Theory | 4 | 100 |
| Semester IV | | | 16 | 400 |
| MUS-PG-T401 | Ethnomusicology | Theory | 4 | 100 |
| MUS-PG-T402 | Dissertation | Theory | 4 | 100 |
| MUS-PG-P403 | Raga Studies IV | Practical | 4 | 100 |
| MUS-PG-P404 | Tala Studies IV | Practical | 4 | 100 |
| MUS-PG-P405 | Stage Performance II | Practical | 4 | 100 |

Semester I

MUS-PG-CT101: Aesthetic

Course Detail: The course will primarily provide an overview of music and allied issues like Aesthetics. The discussions will range from Rasa and its varieties [According to Bharat, Abhinavagupta, and others], thoughts of Rabindranath Tagore and Abanindranath Tagore on music to aesthetics and general comparative.

Identifying Expressions of Emotions and Their Stimuli in Text

Identifying Expressions of Emotions and Their Stimuli in Text, by Diman Ghazi

Thesis submitted to the Faculty of Graduate and Postdoctoral Studies in partial fulfillment of the requirements for the Ph.D. degree in Computer Science. School of Electrical Engineering and Computer Science, Faculty of Engineering, University of Ottawa. © Diman Ghazi, Ottawa, Canada, 2016

Abstract

Emotions are among the most pervasive aspects of human experience. They have long been of interest to social and behavioural sciences. Recently, emotions have attracted the attention of researchers in computer science and particularly in computational linguistics. Computational approaches to emotion analysis have also focused on various emotion modalities, but there is less effort in the direction of automatic recognition of the emotion expressed. Although some past work has addressed detecting emotions, detecting why an emotion arises is ignored. In this work, we explore the task of classifying texts automatically by the emotions expressed, as well as detecting the reason why a particular emotion is felt.

We believe there is still a large gap between the theoretical research on emotions in psychology and emotion studies in computational linguistics. In our research, we try to fill this gap by considering both theoretical and computational aspects of emotions. Starting with a general explanation of emotion and emotion causes from the psychological and cognitive perspective, we clarify the definition that we base our work on. We explain what is feasible in the scope of text and what is practically doable based on the current NLP techniques and tools. This work is organized in two parts: first part on Emotion Expression and the second part on Emotion Stimulus.

University of Mauritius Mahatma Gandhi Institute

University of Mauritius, Mahatma Gandhi Institute

Regulations And Programme of Studies: B. A (Hons) Performing Arts (Vocal Hindustani) (Review)

PART I General Regulations for B.A (Hons) Performing Arts (Vocal Hindustani)

1. Programme title: B.A (Hons) Performing Arts (Vocal Hindustani)

2. Objectives: To equip the student with further knowledge and skills in Vocal Hindustani Music and proficiency in the teaching of the subject.

3. General Entry Requirements: In accordance with the University General Entry Requirements for admission to undergraduate degree programmes.

4. Programme Requirement: A post A-Level MGI Diploma in Performing Arts (Vocal Hindustani) or an alternative qualification acceptable to the University of Mauritius.

5. Programme Duration

| | Normal | Maximum |
|---|---|---|
| Degree (P/T) | 2 years (4 semesters) | 4 years (8 semesters) |

6. Credit System

6.1 Introduction

6.1.1 The B.A (Hons) Performing Arts (Vocal Hindustani) programme is built up on a 3-year part-time Diploma, which accounts for 60 credits.

6.1.2 The Programme is structured on the credit system and is run on a semester basis.

6.1.3 A semester is of a duration of 15 weeks (excluding examination period).

6.1.4 A credit is a unit of measure, and the Programme is based on the following guidelines: 15 hours of lectures and / or tutorials: 1 credit

6.2 Programme Structure

The B.A Programme is made up of a number of modules carrying 3 credits each, except the Dissertation which carries 9 credits.

6.3 Minimum Credits Required for the Award of the Degree:

6.3.1 The MGI Diploma already accounts for 60 credits.

Evaluation of the Effects of Music Therapy Using Todi Raga of Hindustani Classical Music on Blood Pressure, Pulse Rate and Respiratory Rate of Healthy Elderly Men

Volume 64, Issue 1, 2020. Journal of Scientific Research, Institute of Science, Banaras Hindu University, Varanasi, India.

Evaluation of the Effects of Music Therapy Using Todi Raga of Hindustani Classical Music on Blood Pressure, Pulse Rate and Respiratory Rate of Healthy Elderly Men

Samarpita Chatterjee (Mukherjee)¹ and Roan Mukherjee²*

¹ Department of Hindustani Classical Music (Vocal), Sangit-Bhavana, Visva-Bharati (A Central University), Santiniketan, Birbhum-731235, West Bengal, India

² Department of Human Physiology, Hazaribag College of Dental Sciences and Hospital, Demotand, Hazaribag 825301, Jharkhand, India. [email protected]

Abstract

Several studies have indicated that music therapy may affect cardiovascular health; in particular, it may bring positive changes in blood pressure levels and heart rate, thereby improving the overall quality of life. Hence, to regulate blood pressure, music therapy may be regarded as a significant complementary and alternative medicine (CAM). The respiratory rate, if maintained within the normal range, may promote good cardiac health. The aim of the present study was to evaluate the changes in blood pressure, pulse rate and respiratory rate in healthy and disease-free males (age 50-60 years), at the completion of 30 days of music

I. INTRODUCTION

Music may be regarded as the projection of ideas as well as emotions through significant sounds produced by an instrument, voices, or both by taking into consideration different elements of melody, rhythm, and harmony. Music plays an important role in everyone’s life. Music has the power to make one experience harmony, emotional ecstasy, spiritual uplifting, positive behavioral changes, and absolute tranquility. The annoyance in life may increase in lack of melody and harmony.

Positive Psychology

7 WAYS TO APPLY POSITIVE PSYCHOLOGY

Learn practical, proven strategies to live a happier, more engaged and more meaningful life.

Introduction

We all want to live happier, more engaging and more meaningful lives. The science of positive psychology contributes new insights and proven strategies to increase wellbeing in individuals, workplaces and communities. After years classifying and repairing the worst of human experience, positive psychology emerged to help us flourish and learn from the best. This rich and varied field now spans many areas, building on philosophies and practices from Aristotle to Maslow. This eBook introduces you to some of the most practical and research-backed ways to apply positive psychology to increase happiness, wellbeing and effectiveness in your life, at work, at home and with the people you care for, help and lead.

You will learn:

• How to maximise the power of positivity to create sustainable pathways to happiness and success.
• Seven positive psychology practices - from optimism to mindfulness.
• Tips to apply these practices at work, at home and when helping people.

“[Positive Psychology is] the scientific study of positive human functioning and flourishing on multiple levels.” Martin Seligman & Mihaly Csikszentmihalyi

We look forward to inspiring you and others to live a happier, more engaged and fulfilling life!

The Langley Group team

© 2015 Langley Group. All rights reserved

Positive psychology

Spearheaded by Martin Seligman and Mihaly Csikszentmihalyi in 1998, positive psychology focuses on exploring and expanding what makes life worthwhile,

7 POSITIVE PRACTICES

1. Positive emotion: Generating positive emotions helps broaden and build our resources and moves us toward greater wellbeing.

2. Mindset: Adopting a positive attitude and Growth Mindset enhances learning and opens our mind to new ways to raise happiness levels.

Download Full Length Paper

International Journal of Research in Social Sciences, Vol. 8 Issue 10, October 2018, ISSN: 2249-2496, Impact Factor: 7.081. Journal Homepage: http://www.ijmra.us, Email: [email protected]. Double-Blind Peer Reviewed Refereed Open Access International Journal - Included in the International Serial Directories. Indexed & Listed at: Ulrich's Periodicals Directory ©, U.S.A., Open J-Gate as well as in Cabell’s Directories of Publishing Opportunities, U.S.A.

The Importance of Music as a foundation of Mental Peace

Dr. Sandhya Arora, Associate Professor, Department of Music, S.S. Khanna Girls’ Degree College, Allahabad.

MUSIC FOR THE PEACE OF MIND

Shakespeare once wrote: "If music be the food of love, play on..." Profound words, true, but he failed to mention that music is not just nourishment for the heart, but also for the soul. Music surrounds our lives; we hear it on the radio, on television, from our car and home stereos. We come across it in the mellifluous tunes of a classical concert or in the devotional strains of a bhajan, the wedding band, or the reaper in the fields breaking into song to express the joy of life. Even warbling in the bathroom gives us a happy start to the day. Music can delight all the senses and inspire every fiber of our being. Music has the power to soothe and relax, bring us comfort and embracing joy! Music subtly bypasses the intellectual stimulus in the brain and moves directly to our subconscious. There is music for every mood and for every occasion. Many cultures recognize the importance of music and sound as a healing power.