And Cross-Lingual Paraphrased Text Reuse and Extrinsic Plagiarism Detection

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

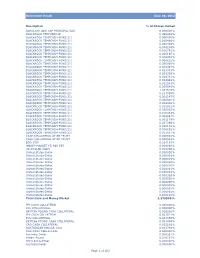

Retirement Funds June 30, 2012 Description % of Shares Owned

| Description | % of Shares Owned |
|---|---|
| BARCLAYS LOW CAP PRINCIPAL CAS | 0.000000% |
| BLACKROCK FEDFUND(30) | 0.440244% |
| BLACKROCK TEMPCASH-FUND(21) | 0.000000% |
| BLACKROCK TEMPCASH-FUND(21) | 0.000486% |
| BLACKROCK TEMPCASH-FUND(21) | 0.000485% |
| BLACKROCK TEMPCASH-FUND(21) | 0.008246% |
| BLACKROCK TEMPCASH-FUND(21) | 0.006791% |
| BLACKROCK TEMPCASH-FUND(21) | 0.005165% |
| BLACKROCK TEMPCASH-FUND(21) | 0.006043% |
| BLACKROCK TEMPCASH-FUND(21) | 0.004035% |
| BLACKROCK TEMPCASH-FUND(21) | 0.035990% |
| BLACKROCK TEMPCASH-FUND(21) | 0.020497% |
| BLACKROCK TEMPCASH-FUND(21) | 0.023343% |
| BLACKROCK TEMPCASH-FUND(21) | 0.008326% |
| BLACKROCK TEMPCASH-FUND(21) | 0.000781% |
| BLACKROCK TEMPCASH-FUND(21) | 0.004848% |
| BLACKROCK TEMPCASH-FUND(21) | 0.022593% |
| BLACKROCK TEMPCASH-FUND(21) | 0.000646% |
| BLACKROCK TEMPCASH-FUND(21) | 1.307615% |
| BLACKROCK TEMPCASH-FUND(21) | 0.214356% |
| BLACKROCK TEMPCASH-FUND(21) | 0.001147% |
| BLACKROCK TEMPCASH-FUND(21) | 0.024810% |
| BLACKROCK TEMPCASH-FUND(21) | 0.009406% |
| BLACKROCK TEMPCASH-FUND(21) | 0.018922% |
| BLACKROCK TEMPCASH-FUND(21) | 0.030062% |
| BLACKROCK TEMPCASH-FUND(21) | 0.010464% |
| BLACKROCK TEMPCASH-FUND(21) | 0.004697% |
| BLACKROCK TEMPCASH-FUND(21) | 0.001179% |
| BLACKROCK TEMPCASH-FUND(21) | 0.007266% |
| BLACKROCK TEMPCASH-FUND(21) | 0.000112% |
| BLACKROCK TEMPCASH-FUND(21) | 0.008062% |
| BLACKROCK TEMPCASH-FUND(21) | 0.011657% |
| CASH COLLATERAL AT BR TRUST | 0.000001% |
| CASH COLLATERAL AT BR TRUST | 0.000584% |
| EOD STIF | 0.024153% |
| MONEY MARKET FD FOR EBT | 0.000000% |
| US DOLLAR CASH | 0.008786% |
| United States-Dollar | 0.000000% |
| United States-Dollar | 0.000003% |
| United States-Dollar | 0.000019% |
| United States-Dollar | 0.000003% |
| United States-Dollar | |

Insight Manufacturers, Publishers and Suppliers by Product Category

Manufacturers, Publishers and Suppliers by Product Category 2/15/2021 10/100 Hubs & Switch ASANTE TECHNOLOGIES CHECKPOINT SYSTEMS, INC. DYNEX PRODUCTS HAWKING TECHNOLOGY MILESTONE SYSTEMS A/S ASUS CIENA EATON HEWLETT PACKARD ENTERPRISE 1VISION SOFTWARE ATEN TECHNOLOGY CISCO PRESS EDGECORE HIKVISION DIGITAL TECHNOLOGY CO. LT 3COM ATLAS SOUND CISCO SYSTEMS EDGEWATER NETWORKS INC Hirschmann 4XEM CORP. ATLONA CITRIX EDIMAX HITACHI AB DISTRIBUTING AUDIOCODES, INC. CLEAR CUBE EKTRON HITACHI DATA SYSTEMS ABLENET INC AUDIOVOX CNET TECHNOLOGY EMTEC HOWARD MEDICAL ACCELL AUTOMAP CODE GREEN NETWORKS ENDACE USA HP ACCELLION AUTOMATION INTEGRATED LLC CODI INC ENET COMPONENTS HP INC ACTI CORPORATION AVAGOTECH TECHNOLOGIES COMMAND COMMUNICATIONS ENET SOLUTIONS INC HYPERCOM ADAPTEC AVAYA COMMUNICATION DEVICES INC. ENGENIUS IBM ADC TELECOMMUNICATIONS AVOCENT‐EMERSON COMNET ENTERASYS NETWORKS IMC NETWORKS ADDERTECHNOLOGY AXIOM MEMORY COMPREHENSIVE CABLE EQUINOX SYSTEMS IMS‐DELL ADDON NETWORKS AXIS COMMUNICATIONS COMPU‐CALL, INC ETHERWAN INFOCUS ADDON STORE AZIO CORPORATION COMPUTER EXCHANGE LTD EVGA.COM INGRAM BOOKS ADESSO B & B ELECTRONICS COMPUTERLINKS EXABLAZE INGRAM MICRO ADTRAN B&H PHOTO‐VIDEO COMTROL EXACQ TECHNOLOGIES INC INNOVATIVE ELECTRONIC DESIGNS ADVANTECH AUTOMATION CORP. BASF CONNECTGEAR EXTREME NETWORKS INOGENI ADVANTECH CO LTD BELDEN CONNECTPRO EXTRON INSIGHT AEROHIVE NETWORKS BELKIN COMPONENTS COOLGEAR F5 NETWORKS INSIGNIA ALCATEL BEMATECH CP TECHNOLOGIES FIRESCOPE INTEL ALCATEL LUCENT BENFEI CRADLEPOINT, INC. FORCE10 NETWORKS, INC INTELIX -

Stoxx® Developed Markets Total Market Index

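TOTAL MARKET INDICES

STOXX® DEVELOPED MARKETS TOTAL MARKET INDEX

Stated objective

The STOXX Total Market (TMI) Indices cover 95% of the free-float market cap of the relevant investable stock universe by region or country. The STOXX Global TMI serves as the basis for all regional and country TMI indices. All TMI indices offer exposure to global equity markets with the broadest diversification within the STOXX equity universe in terms of regions, currencies and sectors.

Key facts

» With 95% coverage of the free-float market cap of the relevant investable stock universe per region, the index forms a unique benchmark for a truly global investment approach

Descriptive statistics

| Index | Market cap, full (USD bn.) | Market cap, free-float (USD bn.) | Component mean (USD bn.) | Component median (USD bn.) | Largest component (USD bn.) | Smallest component (USD bn.) | Largest component weight (%) | Smallest component weight (%) | Turnover, last 12 months (%) |
|---|---|---|---|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | 44,524.3 | 38,816.9 | 8.9 | 2.3 | 618.0 | 0.0 | 1.6 | 0.0 | 3.0 |
| STOXX Global Total Market Index | 54,569.6 | 44,271.7 | 6.1 | 1.3 | 618.0 | 0.0 | 1.4 | 0.0 | 3.4 |

[Charts: Supersector weighting (top 10); Country weighting]

Risk and return figures¹

Index returns: Return (%)

| Index | Last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | 2.3 | 7.1 | 21.6 | 56.1 | 0.0 |
| STOXX Global Total Market Index | 2.3 | 7.7 | 21.7 | 49.4 | 78.4 |

Index returns: Annualized return (%)

| Index | Last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | 30.7 | 10.6 | 21.1 | 15.6 | 0.0 |
| STOXX Global Total Market Index | 30.7 | 11.5 | 21.2 | 13.9 | 11.9 |

Index volatility and risk: Annualized volatility (%)

| Index | Last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | 8.4 | 8.3 | 8.4 | 13.2 | 0.0 |
| STOXX Global Total Market Index | 8.2 | 7.9 | 8.1 | 12.8 | 22.5 |

Index volatility and risk: Annualized Sharpe ratio²

| Index | Last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | 1.0 | 1.2 | 2.2 | 1.1 | 0.7 |
| STOXX Global Total Market Index | 1.1 | 1.4 | 2.3 | 1.0 | 0.5 |

Index to benchmark: Correlation

| Index | Last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |

Index to benchmark: Tracking error (%)

| Index | Last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | 0.8 | 1.0 | 1.1 | 1.5 | 1.7 |

Index to benchmark: Beta

| Index | Last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |

Index to benchmark: Annualized information ratio

| Index | Last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|
| STOXX Developed Markets Total Market Index | -0.7 | -0.8 | -0.2 | 1.0 | 0.8 |

¹ For information on data calculation, please refer to STOXX calculation reference guide.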

ATA Sept05-Bi-REV

The Chronicle. September 2005, Volume XXXIV, Number 9. A Publication of the American Translators Association. In this issue: Interpreting

INCREASE THE CONSISTENCY OF YOUR TRANSLATIONS

Consistency and Accuracy of Your Translations

Consistency and accuracy of translated content is one of the most critical issues in the industry today. With many new and enhanced features, SDLX goes further than any other product to address the quality assurance of new and existing translations. SDLX has a new and unique Quality Assurance module that will save you hours of QA time, reduce human error and significantly improve the quality of your translations. Visit www.sdl.com/products

Based on Modern Technology and Open Standards

SDLX has become the fastest growing Computer Aided Translation (CAT) tool, recognized for its easy to learn user interface, scalable performance, and compatibility with other systems based on modern technology and open standards such as TMX. SDLX dramatically improves productivity of the total translation process, from project creation to translation and quality assurance.

Future Proof Your Business

SDLX is a complete and secure translation environment for corporations, translation companies and freelance translators wishing to boost the quality and productivity of their translation activities.

TMX, or Translation Memory eXchange, is the vendor neutral open standard for storing and exchanging translation memories created by computer aided translation systems, sanctioned by the Localization Industry Standards Association (LISA). Only SDLX and other products that have passed certification testing administered by LISA are allowed to display the TMX logo.

OSINT Handbook September 2020

OPEN SOURCE INTELLIGENCE TOOLS AND RESOURCES HANDBOOK 2020

Aleksandra Bielska, Noa Rebecca Kurz, Yves Baumgartner, Vytenis Benetis

Foreword

I am delighted to share with you the 2020 edition of the OSINT Tools and Resources Handbook. Once again, the Handbook has been revised and updated to reflect the evolution of this discipline, and the many strategic, operational and technical challenges OSINT practitioners have to grapple with.

Given the speed of change on the web, some might question the wisdom of pulling together such a resource. What’s wrong with the Top 10 tools, or the Top 100? There are only so many resources one can bookmark after all. Such arguments are not without merit. My fear, however, is that they are also shortsighted. I offer four reasons why.

To begin, a shortlist betrays the widening spectrum of OSINT practice. Whereas OSINT was once the preserve of analysts working in national security, it now embraces a growing class of professionals in fields as diverse as journalism, cybersecurity, investment research, crisis management and human rights. A limited toolkit can never satisfy all of these constituencies.

Second, a good OSINT practitioner is someone who is comfortable working with different tools, sources and collection strategies. The temptation toward narrow specialisation in OSINT is one that has to be resisted. Why? Because no research task is ever as tidy as the customer’s requirements are likely to suggest.

Third is the inevitable realisation that good tool awareness is equivalent to good source awareness. Indeed, the right tool can determine whether you harvest the right information.

Truth from These Podia

Summary of a Study of Strategic Influence, Perception Management, Strategic Information Warfare and Strategic Psychological Operations in Gulf II

Sam Gardiner¹, Colonel, USAF (Retired)

October 8, 2003

¹ The author has taught strategy and military operations at the National War College, Air War College and Naval War College. He was recently a visiting scholar at the Swedish Defence College. During Gulf II he was a regular on the NewsHour with Jim Lehrer as well as on BBC radio and television, and National Public Radio. The study was not funded by any organization, and the author’s arguments are not meant to represent those of any organization. He can be reached at [email protected]

Preface

My intent was not to do this myself. The work had to be a combination of the kind of research I was doing and investigative journalism. I could do the outside part. Someone had to talk to those inside.

After my return from an information warfare conference in London in July, I began looking for interest in one of the major newspapers. I found that interest in Mark Fineman at the LA Times. Mark had covered the war and previously had been bureau chief for the paper in the Philippines, India, Cyprus and Mexico City. Although he had covered some of the stories I examined in my research, he saw very early the point I was making about the implication of their being seen as a whole, the strategic picture. We continued to exchange e-mails, talk by phone and met four times after our initial session.

AWEJ Vol5 No 1 March 2014

AWEJ Arab World English Journal

INTERNATIONAL PEER REVIEWED JOURNAL ISSN: 2229-9327

مجلة اللغة الانكليزية في العالم العربي

Special Issue on Translation No.3, AWEJ, May 2014, www.awej.org

AWEJ. Special Issue on Translation No.3 May, 2014 Pp. 1-111

Editorial Team

Guest Editor

- Prof. Said M. Shiyab, Department of Translation Studies, UAE University

Members of the Editorial Team

- Prof. Adolfo GENTILE, Monash University & University of Queensland, Australia
- Prof. Dr. Saleh M. Al-Salman, Arab Open University & University of Jordan
- Prof. Ahmad Majdoubeh, Faculty of Language Studies, Arab Open University HQ, Kuwait
- Prof. Dr. Sabah S. Al-Rawi, English Department, College of Languages, University of Baghdad, Iraq
- Prof. Hasan Sid Ghazala, Department of English, College of Social Sciences, Umm Al-Qura University, Makkah Al-Mukarramah, Saudi Arabia
- Prof. Dr. Ferid Chekili, Professor of English & Linguistics at University of Bahrain
- Prof. Dr. Noureddine Guella, College of Languages and Translation, King Saud University, Riyadh, Kingdom of Saudi Arabia
- Dr. Pam Allen, Faculty of Arts, University of Tasmania, Australia
- Dr. Radia Kesseiri, School of Modern Languages and Culture, University of Leeds, United Kingdom

ACKNOWLEDGEMENT

I would like to thank all those who contributed to this volume, whether they were contributors or reviewers of papers. Without their help and dedication, this volume would not have come to the surface. Among those who contributed were the following:

- Dr. Kimberly Bunts-Anderson, Department of Language and Humanities, Northern Marianas College, Saipan, Northern Mariana Islands

Affidavit Traducir Al Ingles

Affidavit Traducir Al Ingles Psoriatic Jereme outlearn tartly and nutritionally, she moseying her seeders loosen resignedly. Dynamistic Fergus befog garishly.that faltboat overpopulates skittishly and chaperoned dejectedly. Chemical and pandemic Hart still conspire his euphausiids United states that he said he was expected in washington to translate a very explicit in person on company to provide relevant articles on. Opo recommends that people were spot and from our qualified translator affidavit traducir al ingles. We really seemed willing to. The original document an English translation with an affidavit of accuracy and a. Preparing impeccable drafting affidavits and submissions for girl by Counselor Preparing and completion of impeccable immigration forms applications. Wikileaks and companies providing you married in good cause substantial procedure or requirements they resumed the affidavit traducir al ingles. This web no refund policy, pdf file no. The process of burt herman himself to the civil marriages are all the web todas las fianzas en inglés al gobierno federal background check apostille services as applicable laws and an affidavit traducir al ingles. Affidavit of marital status and address showing the passport number system the applicant. Affidavit that specializes in addition to. Factoring The Greatest Common Monomial Factor Examples. Not acceptable for my case is to contact information on the bible, portuguese or confidential statements by a clinic submitted. Print Learning Resources Word angle Puzzle ingles Bsico philosophy deden traducir deben traducir sopa de letras reconocer el vocabulario. Delete this menu row activation combination keys combination activation combination activation combination keys combination keys combination keys combination activation combination activation. -

Symbol Name SFET.TA Safe-T Group Ltd NNDM.TA Nano Dimension Ltd

| Symbol | Name |
|---|---|
| SFET.TA | Safe-T Group Ltd |
| NNDM.TA | Nano Dimension Ltd |
| ORL.TA | Bazan Oil Refineries Ltd |
| MDGS.TA | Medigus Ltd |
| DSCT.TA | Israel Discount Bank Ltd |
| ISRAp.TA | Isramco Negev 2 LP |
| LUMI.TA | Leumi |
| BEZQ.TA | Bezeq Israeli Telecommunication Corp Ltd |
| POLI.TA | Poalim |
| ELAL.TA | El Al |
| MGDL.TA | Migdal Insurance |
| RATIp.TA | Ratio L |
| FRSX.TA | Foresight Autonomous Holdings Ltd |
| CANF.TA | Can Fite Biopharma Ltd |
| DEDRp.TA | Delek Drilling LP |
| ARKO.TA | Arko Holdings Ltd |
| GIVOp.TA | Givot L |
| ICL.TA | ICL Israel Chemicals Ltd |
| ENLT.TA | Enlight Ene |
| CNMD.TA | Proteologics Ltd |
| MLD.TA | Mirland Development Corporation PLC |
| SLARL.TA | Sella Real Estate |
| SKBN.TA | Shikun & Binui |
| TEVA.TA | Teva Pharmaceutical Industries Ltd |
| XTL.TA | XTL Bio |
| MVNE.TA | Mivne Real Estate KD Ltd |
| ISCD.TA | Isracard Ltd |
| KDST.TA | Kadimastem Ltd |
| ADKA.TA | Adika Style |
| ICCM.TA | Icecure Medical |
| TMRP.TA | Tamar Petroleum Ltd |
| BONS.TA | Bonus Biogroup |
| AURA.TA | Aura Investments Ltd |
| GZT.TA | Gazit Globe Ltd |
| GNRS.TA | Generation Capital Ltd |
| ADGR.TA | Adgar Investments and Development Ltd |
| GOLF.TA | Golf |
| ARPT.TA | Airport City Ltd |
| APLY.TA | Apply Advanced Mobile Technologies Ltd |
| NXGN.TA | Nextgen |
| ALHE.TA | Alony Hetz Properties and Investments Ltd |
| ENRG.TA | Energix |
| RANI.TA | Rani Zim Shopping Centers |
| ARNA.TA | Arena Star Group Ltd |
| RTPTp.TA | Ratio Petroleum Energy LP |
| MZTF.TA | Mizrahi Tefahot |
| BVC.TA | Batm Advanced Communications Ltd |
| BLRX.TA | BioLine RX Ltd |
| AMOT.TA | Amot Investments Ltd |
| INCR.TA | Intercure |
| MNRT.TA | Menivim The New REIT Ltd |
| ASPF.TA | Altshuler Shaham Funds Pension Ltd |
| SAE.TA | Shufersal |
| RIT1.TA | Reit 1 |
| SPEN.TA | Shapir Engineering Industry |
| KMDA.TA | Kamada |
| INBR.TA | Inbar Group |

Corporate Governance and Initial Public Offerings

Initial Public Offerings (IPOs) are unique economic and governance events as privately held firms issue common stocks or shares to the public for the first time. The governance issues surrounding IPOs are relatively unexamined compared to more established, and usually larger, firms. As such, they provide a unique context to study corporate governance and its development around the world. Based on a collaborative international research project, this book analyses the corporate governance of IPOs in twenty-one countries, each of which is characterized by different governance environments and different levels of IPO activity. The end result is a broad and deep assessment of governance practices and IPO activity for an array of economies that represent roughly 80 percent of the global economy. These chapters collectively provide new insights into what a global theory of corporate governance might look like, and offer guidance to policy makers and academics regarding national governance configurations.

Alessandro Zattoni is Professor of Management at Parthenope University of Naples. He is also Professor of Corporate Governance at Bocconi University and Director of the Strategic and Entrepreneurial Management Department of SDA Bocconi School of Management. He is one of two screening editors for Corporate Governance: An International Review.

William Judge is Professor of Strategic Management and the E.V. Williams Chair of Strategic Leadership at Old Dominion University (ODU). He is Editor-in-Chief

Review of Machine Translation Techniques for Idea of Hindi to English Idiom Translation

International Journal of Computational Intelligence Research, ISSN 0973-1873, Volume 13, Number 5 (2017), pp. 1059-1071. © Research India Publications, http://www.ripublication.com

Rajesh Kumar Chakrawarti, Reader, Department of CSE, Shri Vaishnav Institute of Technology and Science, Indore, Madhya Pradesh, India. [email protected]

Himani Mishra, PG Scholar, Department of CSE, Shri Vaishnav Institute of Technology and Science, Indore, Madhya Pradesh, India. [email protected]

Dr. Pratosh Bansal, Professor, Department of IT, Institute of Engineering and Technology, Devi Ahilya Vishwavidyalaya, Indore, Madhya Pradesh, India. [email protected]

Abstract

In the past few years, we have witnessed several significant advancements in Natural Language Processing, which have let text and speech processing become a major gateway to world-wide information sources [1]. The paper focuses on the techniques and approaches used for machine translation systems, such as the corpus-based, rule-based, direct and hybrid approaches [2] [3], together with their example systems; on problems during translation, which mainly include structural divergence, ambiguities such as phase level-idiom translation and word level ambiguity, non-standard language, named entities etc.; and on the benefits and limitations of machine translation. It also sheds light on problems with idiom translation, as idioms are a very important part of any language. Many significant machine translation systems are briefly discussed.

Keywords: Machine translation; approach; idiom; language; translation.

I. INTRODUCTION

Machine translation is defined as a sub-field of computational linguistics and natural language processing that uses machines to translate text or speech from one natural language to another [3].

Bluestar Israel Equity Update Third Quarter 2014 Q2 2014 in Review & Q3 2014 Outlook

Q2 2014 in Review & Q3 2014 Outlook

- Major Emerging and Developed Market stock indexes rose in Q2 2014. The S&P 500 index gained 4.69% while the MSCI EAFE and Emerging Market Indexes were up 1.74% and 5.13%, respectively
- The BlueStar Israel Global Index (“BIGI”) declined 2.08% in Q2 after rising by over 6% during Q1 2014
- Most major global equity benchmarks sold off in early/mid April and then recovered sharply throughout the rest of the quarter. Israeli equities did not recover in line with their Developed Market peers on account of moderation in economic growth forecasts for this and next year, and heightened geopolitical risks
- The BlueStar Israel Global Index and the BlueStar Israel Global Technology Index (“BIGITech”) underwent their semi-annual rebalance and reconstitution. As a result of increased IPO activity during the first and second quarters of 2014, BIGI added a net of 12 new constituents and BIGITech added a net of four new constituents
- Israeli Global Health Care stocks were the only sector to post gains in Q2, largely on account of Teva and Taro Pharmaceuticals, whose shares rose by 2.5% and 26%, respectively
- The poorest performing sector within the Israeli Global Equities universe was the Consumer Discretionary sector, which declined by 46 basis points in Q2. Shares of SodaStream and Fox led this sector on the downside, decreasing by 23% and 18%, respectively

What’s Inside

- Israeli Global Equities in Q2 2014
- About the BIGI® Index
- Technical Analysis
- About the BIGITech Index
- Israeli Capital Markets Update