Statistical Physics

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Temperature Dependence of Breakdown and Avalanche Multiplication in In0.53Ga0.47As Diodes and Heterojunction Bipolar Transistors

This is a repository copy of Temperature dependence of breakdown and avalanche multiplication in In0.53Ga0.47As diodes and heterojunction bipolar transistors. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/896/ Article: Yee, M., Ng, W.K., David, J.P.R. et al. (3 more authors) (2003) Temperature dependence of breakdown and avalanche multiplication in In0.53Ga0.47As diodes and heterojunction bipolar transistors. IEEE Transactions on Electron Devices, 50 (10). pp. 2021-2026. ISSN 0018-9383 https://doi.org/10.1109/TED.2003.816553 -

2D Potts Model Correlation Lengths

KOMA … Revised version May … 2D Potts Model Correlation Lengths: Numerical Evidence for $\xi_o = \xi_d$ at $\beta_t$. Wolfhard Janke and Stefan Kappler, Institut für Physik, Johannes Gutenberg-Universität Mainz, Staudinger Weg, Mainz, Germany. Abstract: We have studied spin-spin correlation functions in the ordered phase of the two-dimensional q-state Potts model with q = … at the first-order transition point $\beta_t$. Through extensive Monte Carlo simulations we obtain strong numerical evidence that the correlation length in the ordered phase agrees with the exactly known and recently numerically confirmed correlation length in the disordered phase, $\xi_o(\beta_t) = \xi_d(\beta_t)$. As a byproduct we find the energy moments in the ordered phase at $\beta_t$ in very good agreement with a recent large-q expansion. PACS numbers: …q, …Hk, …Cn, …Ha. hep-lat/9509056, 16 Sep 1995. Introduction: First-order phase transitions have been the subject of increasing interest in recent years. They play an important role in many fields of physics, as is witnessed by such diverse phenomena as ordinary melting, the quark deconfinement transition, or various stages in the evolution of the early universe. Even though there exists already a vast literature on this subject, many properties of first-order phase transitions still remain to be investigated in detail. Examples are finite-size scaling (FSS), the shape of energy or magnetization distributions, partition function zeros, etc., which are all closely interrelated. An important approach to these problems is computer simulation. Here the available system sizes are necessarily -

Full and Unbiased Solution of the Dyson-Schwinger Equation in the Functional Integro-Differential Representation

PHYSICAL REVIEW B 98, 195104 (2018) Full and unbiased solution of the Dyson-Schwinger equation in the functional integro-differential representation. Tobias Pfeffer and Lode Pollet, Department of Physics, Arnold Sommerfeld Center for Theoretical Physics, University of Munich, Theresienstrasse 37, 80333 Munich, Germany (Received 17 March 2018; revised manuscript received 11 October 2018; published 2 November 2018) We provide a full and unbiased solution to the Dyson-Schwinger equation illustrated for $\phi^4$ theory in 2D. It is based on an exact treatment of the functional derivative ∂/∂G of the four-point vertex function with respect to the two-point correlation function G within the framework of the homotopy analysis method (HAM) and the Monte Carlo sampling of rooted tree diagrams. The resulting series solution in deformations can be considered as an asymptotic series around G = 0 in a HAM control parameter $c_0 G$, or even a convergent one up to the phase transition point if shifts in G can be performed (such as by summing up all ladder diagrams). These considerations are equally applicable to fermionic quantum field theories and offer a fresh approach to solving functional integro-differential equations beyond any truncation scheme. DOI: 10.1103/PhysRevB.98.195104 I. INTRODUCTION Despite decades of research there continues to be a need for developing novel methods for strongly correlated systems. The standard Monte Carlo approaches [1–5] are convergent but suffer from a prohibitive sign problem, scaling exponentially in the system volume [6]. … was not solved and differences with the full, exact answer could be seen when the correlation length increases. In this work, we solve the full DSE by writing them as a closed set of integro-differential equations. Within the HAM theory there exists a semianalytic way to treat the functional derivatives without resorting to an infinite expansion of the -

PHYS 596 Team 1

Braun, S., Ronzheimer, J., Schreiber, M., Hodgman, S., Rom, T., Bloch, I. and Schneider, U. (2013). Negative Absolute Temperature for Motional Degrees of Freedom. Science, 339(6115), pp. 52-55. PHYS 596 Team 1: Shreya, Nina, Nathan, Faisal. What is negative absolute temperature? • An ensemble of particles is said to have negative absolute temperature if higher energy states are more likely to be occupied than lower energy states: $P_i \propto e^{-E_i/kT}$. • If high energy states are more populated than low energy states, entropy decreases with energy: $\frac{1}{T} \equiv \frac{\partial S}{\partial E}$. Previous work on negative temperature • The first experiment to measure negative temperature was performed at Harvard by Purcell and Pound in 1951. • By quickly reversing the magnetic field acting on a nuclear spin crystal, they produced a sample where the higher energy states were more occupied. • Since then negative temperature ensembles in spin systems have been produced in other ways. Oja and Lounasmaa (1997) give a comprehensive review. Negative temperature for motional degrees of freedom • For the probability distribution of a negative temperature ensemble to be normalizable, we need an upper bound in energy. • Since localized spin systems have a finite number of energy states, there is a natural upper bound in energy. • This is hard to achieve in systems with motional degrees of freedom, since kinetic energy is usually not bounded from above. • Braun et al. (2013) achieve exactly this with bosonic cold atoms in an optical lattice. What is the point? • At thermal equilibrium, negative temperature implies negative pressure. • This is relevant to models of dark energy and cosmology based on Bose-Einstein condensation. -
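
The population rule quoted in these slides, $P_i \propto e^{-E_i/kT}$, can be checked numerically. The following is a minimal sketch, not taken from the slides: the four equally spaced levels, the units with k = 1, and the function name `boltzmann_populations` are all assumptions made for illustration. It only works because the level list is finite, which mirrors the slides' point that a negative-temperature distribution needs an upper bound in energy to be normalizable.

```python
import math

def boltzmann_populations(energies, kT):
    """Return normalised populations P_i proportional to exp(-E_i / kT).

    `energies` must be a finite list: for kT < 0 the weights grow with
    energy, so the sum only converges when the spectrum is bounded above.
    """
    exponents = [-e / kT for e in energies]
    # Subtract the largest exponent before exponentiating to avoid overflow.
    shift = max(exponents)
    weights = [math.exp(x - shift) for x in exponents]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical bounded ladder of levels, in units where k = 1.
levels = [0.0, 1.0, 2.0, 3.0]
positive = boltzmann_populations(levels, kT=1.0)   # lowest level most populated
negative = boltzmann_populations(levels, kT=-1.0)  # highest level most populated
```

For equally spaced levels, flipping the sign of the temperature simply reverses the order of the populations, which is the population inversion the slides describe.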

A Simple Method to Estimate Entropy and Free Energy of Atmospheric Gases from Their Action

Article: A Simple Method to Estimate Entropy and Free Energy of Atmospheric Gases from Their Action. Ivan Kennedy 1,2,*, Harold Geering 2, Michael Rose 3 and Angus Crossan 2. 1 Sydney Institute of Agriculture, University of Sydney, NSW 2006, Australia; 2 QuickTest Technologies, PO Box 6285 North Ryde, NSW 2113, Australia; 3 NSW Department of Primary Industries, Wollongbar NSW 2447, Australia. * Correspondence: Tel.: +61-4-0794-9622. Received: 23 March 2019; Accepted: 26 April 2019; Published: 1 May 2019. Abstract: A convenient practical model for accurately estimating the total entropy (ΣSi) of atmospheric gases based on physical action is proposed. This realistic approach is fully consistent with statistical mechanics, but reinterprets its partition functions as measures of translational, rotational, and vibrational action or quantum states, to estimate the entropy. With all kinds of molecular action expressed as logarithmic functions, the total heat required for warming a chemical system from 0 K (ΣSiT) to a given temperature and pressure can be computed, yielding results identical with published experimental third law values of entropy. All thermodynamic properties of gases including entropy, enthalpy, Gibbs energy, and Helmholtz energy are directly estimated using simple algorithms based on simple molecular and physical properties, without recourse to tables of standard values; both free energies are measures of quantum field states and of minimal statistical degeneracy, decreasing with temperature and declining density. We propose that this more realistic approach has heuristic value for thermodynamic computation of atmospheric profiles, based on steady state heat flows equilibrating with gravity. -

The Emergence of a Classical World in Bohmian Mechanics

The Emergence of a Classical World in Bohmian Mechanics. Davide Romano, Lausanne, 02 May 2014. Structure of QM • Physical state of a quantum system: - (normalized) vector in Hilbert space: state-vector; - Wavefunction: the state-vector expressed in the coordinate representation; • Physical properties of the system (Observables): - Observable ↔ hermitian operator acting on the state-vector or the wavefunction; - Given a certain operator A, the quantum system has a definite physical property with respect to A iff it is an eigenstate of A; - The only possible measurement results for the physical property corresponding to the operator A are the eigenvalues of A; - Why hermitian operators? They have a real spectrum of eigenvalues → the result of a measurement can be interpreted as a physical value. Structure of QM • In the general case, the state-vector of a system is expressed as a linear combination of the eigenstates of a generic operator A that acts upon it: $|\Psi\rangle = a\,|\Psi_1\rangle + b\,|\Psi_2\rangle$ • When we perform a measurement of A on the system $|\Psi\rangle$, the latter randomly collapses either into the state $|\Psi_1\rangle$ with probability $|a|^2$ or into the state $|\Psi_2\rangle$ with probability $|b|^2$. -
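
The collapse rule stated in these slides (outcome probabilities given by the squared moduli of the amplitudes a and b) can be written as a few lines of code. This is a minimal sketch under stated assumptions: the function name `collapse_probabilities` is hypothetical, and the explicit division by the norm is added here so that unnormalized amplitudes can be passed in; the slides themselves assume a normalized state-vector.

```python
def collapse_probabilities(a, b):
    """Return (P1, P2) for the state a|Psi_1> + b|Psi_2>.

    a and b may be complex. The probabilities are |a|^2 and |b|^2 divided
    by the squared norm, so they sum to 1 even if the input state is not
    normalised (an assumption of this sketch, not of the slides).
    """
    norm_sq = abs(a) ** 2 + abs(b) ** 2
    if norm_sq == 0:
        raise ValueError("the zero vector is not a valid quantum state")
    return abs(a) ** 2 / norm_sq, abs(b) ** 2 / norm_sq

# Equal-weight superposition with a relative phase: the phase does not
# affect the outcome probabilities.
p1, p2 = collapse_probabilities(1, 1j)
```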

Aspects of Loop Quantum Gravity

Aspects of loop quantum gravity. Alexander Nagen, 23 September 2020. Submitted in partial fulfilment of the requirements for the degree of Master of Science of Imperial College London. Contents: 1 Introduction; 2 Classical theory (2.1 The ADM / initial-value formulation of GR; 2.2 Hamiltonian GR; 2.3 Ashtekar variables; 2.4 Reality conditions); 3 Quantisation (3.1 Holonomies; 3.2 The connection representation; 3.3 The loop representation; 3.4 Constraints and Hilbert spaces in canonical quantisation: 3.4.1 The kinematical Hilbert space, 3.4.2 Imposing the Gauss constraint, 3.4.3 Imposing the diffeomorphism constraint, 3.4.4 Imposing the Hamiltonian constraint, 3.4.5 The master constraint); 4 Aspects of canonical loop quantum gravity (4.1 Properties of spin networks; 4.2 The area operator; 4.3 The volume operator; 4.4 Geometry in loop quantum gravity); 5 Spin foams (5.1 The nature and origin of spin foams; 5.2 Spin foam models; 5.3 The BF model; 5.4 The Barrett-Crane model; 5.5 The EPRL model; 5.6 The spin foam - GFT correspondence); 6 Applications to black holes (6.1 Black hole entropy; 6.2 Hawking radiation); 7 Current topics (7.1 Fractal horizons; 7.2 Quantum-corrected black hole; 7.3 A model for Hawking radiation; 7.4 Effective spin-foam models -

A Brief History of Time Crystals (arXiv:1910.10745v1 [cond-mat.str-el], 23 Oct 2019)

A Brief History of Time Crystals. Vedika Khemani (a,b,*), Roderich Moessner (c), S. L. Sondhi (d). (a) Department of Physics, Harvard University, Cambridge, Massachusetts 02138, USA; (b) Department of Physics, Stanford University, Stanford, California 94305, USA; (c) Max-Planck-Institut für Physik komplexer Systeme, 01187 Dresden, Germany; (d) Department of Physics, Princeton University, Princeton, New Jersey 08544, USA. Abstract: The idea of breaking time-translation symmetry has fascinated humanity at least since ancient proposals of the perpetuum mobile. Unlike the breaking of other symmetries, such as spatial translation in a crystal or spin rotation in a magnet, time translation symmetry breaking (TTSB) has been tantalisingly elusive. We review this history up to recent developments which have shown that discrete TTSB does take place in periodically driven (Floquet) systems in the presence of many-body localization (MBL). Such Floquet time-crystals represent a new paradigm in quantum statistical mechanics: that of an intrinsically out-of-equilibrium many-body phase of matter with no equilibrium counterpart. We include a compendium of the necessary background on the statistical mechanics of phase structure in many-body systems, before specializing to a detailed discussion of the nature, and diagnostics, of TTSB. In particular, we provide precise definitions that formalize the notion of a time-crystal as a stable, macroscopic, conservative clock, explaining both the need for a many-body system in the infinite volume limit, and for a lack of net energy absorption or dissipation. Our discussion emphasizes that TTSB in a time-crystal is accompanied by the breaking of a spatial symmetry, so that time-crystals exhibit a novel form of spatiotemporal order. -

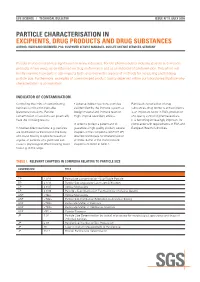

Particle Characterisation In

LIFE SCIENCE | TECHNICAL BULLETIN, ISSUE N°11 / JULY 2008. PARTICLE CHARACTERISATION IN EXCIPIENTS, DRUG PRODUCTS AND DRUG SUBSTANCES. AUTHOR: HILDEGARD BRÜMMER, PhD, CUSTOMER SERVICE MANAGER, SGS LIFE SCIENCE SERVICES, GERMANY. Particle characterization has significance in many industries. For the pharmaceutical industry, particle size impacts products in two ways: as an influence on drug performance and as an indicator of contamination. This article will briefly examine how particle size impacts both, and review the arsenal of methods for measuring and tracking particle size. Furthermore, examples of compromised product quality observed within our laboratories illustrate why characterization is so important. Particle characterisation of drug substances, drug products and excipients is an important factor in R&D, production and quality control of pharmaceuticals. It is becoming increasingly important for compliance with requirements of FDA and European Health Authorities. INDICATOR OF CONTAMINATION: Controlling the limits of contaminating particles is critical for injectable (parenteral) solutions. Particle contamination of solutions can potentially have the following results: • Adverse direct reactions: e.g. particles are distributed via the blood in the body and cause toxicity to specific tissues or organs, or particles of a given size can cause a physiological effect blocking blood flow e.g. in the lungs. • Adverse indirect reactions: particles are identified by the immune system as foreign material and immune reaction might impose secondary effects. In order to protect a patient and to guarantee a high quality product, several chapters in the compendia (USP, EP, JP) describe techniques for characterisation of limits. Some of the most relevant chapters are listed in Table 1. -

Lectures on Mathematical Statistical Mechanics, S. Adams

ISSN 0070-7414. Sgríbhinní Institiúid Ard-Léinn Bhaile Átha Cliath, Sraith A, Uimh. 30. Communications of the Dublin Institute for Advanced Studies, Series A (Theoretical Physics), No. 30. LECTURES ON MATHEMATICAL STATISTICAL MECHANICS, by S. Adams. Dublin: Institiúid Ard-Léinn Bhaile Átha Cliath / Dublin Institute for Advanced Studies, 2006. Contents: 1 Introduction; 2 Ergodic theory (2.1 Microscopic dynamics and time averages; 2.2 Boltzmann's heuristics and ergodic hypothesis; 2.3 Formal Response: Birkhoff and von Neumann ergodic theories; 2.4 Microcanonical measure); 3 Entropy (3.1 Probabilistic view on Boltzmann's entropy; 3.2 Shannon's entropy); 4 The Gibbs ensembles (4.1 The canonical Gibbs ensemble; 4.2 The Gibbs paradox; 4.3 The grandcanonical ensemble; 4.4 The "orthodicity problem"); 5 The Thermodynamic limit (5.1 Definition; 5.2 Thermodynamic function: Free energy; 5.3 Equivalence of ensembles); 6 Gibbs measures (6.1 Definition; 6.2 The one-dimensional Ising model; 6.3 Symmetry and symmetry breaking; 6.4 The Ising ferromagnet in two dimensions; 6.5 Extreme Gibbs measures; 6.6 Uniqueness; 6.7 Ergodicity); 7 A variational characterisation of Gibbs measures; 8 Large deviations theory (8.1 Motivation; 8.2 Definition; 8.3 Some results for Gibbs measures); 9 Models (9.1 Lattice Gases; 9.2 Magnetic Models; 9.3 Curie-Weiss model; 9.4 Continuous Ising model -

Chapter 3 Bose-Einstein Condensation of an Ideal

Chapter 3 Bose-Einstein Condensation of An Ideal Gas. An ideal gas consisting of non-interacting Bose particles is a fictitious system since every realistic Bose gas shows some level of particle-particle interaction. Nevertheless, such a mathematical model provides the simplest example for the realization of Bose-Einstein condensation. This simple model, first studied by A. Einstein [1], correctly describes important basic properties of actual non-ideal (interacting) Bose gas. In particular, such basic concepts as BEC critical temperature $T_c$ (or critical particle density $n_c$), condensate fraction $N_0/N$ and the dimensionality issue will be obtained. 3.1 The ideal Bose gas in the canonical and grand canonical ensemble. Suppose an ideal gas of non-interacting particles with fixed particle number N is trapped in a box with a volume V and at equilibrium temperature T. We assume a particle system somehow establishes an equilibrium temperature in spite of the absence of interaction. Such a system can be characterized by the thermodynamic partition function of canonical ensemble $Z = \sum_R e^{-\beta E_R}$ (3.1), where R stands for a macroscopic state of the gas and is uniquely specified by the occupation number $n_i$ of each single particle state i: $\{n_0, n_1, \cdots\}$. $\beta = 1/k_B T$ is a temperature parameter. Then, the total energy of a macroscopic state R is given by only the kinetic energy: $E_R = \sum_i \varepsilon_i n_i$ (3.2), where $\varepsilon_i$ is the eigen-energy of the single particle state i and the occupation number $n_i$ satisfies the normalization condition $N = \sum_i n_i$ (3.3). The probability -
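
Equations (3.1) to (3.3) of this excerpt can be evaluated directly for a very small system by brute-force enumeration of the occupation numbers. The sketch below is illustrative only and is not the chapter's method: the function name `canonical_partition_function`, the two-level spectrum, and the choice of units are assumptions, and the enumeration is practical only for a handful of levels and particles.

```python
import math
from itertools import product

def canonical_partition_function(level_energies, n_particles, beta):
    """Sum exp(-beta * E_R) over all bosonic occupation sets {n_i}.

    Each state R is a tuple of occupation numbers n_i >= 0, one per
    single-particle level, constrained to a fixed total particle number.
    """
    z = 0.0
    n_levels = len(level_energies)
    for occupations in product(range(n_particles + 1), repeat=n_levels):
        if sum(occupations) != n_particles:
            continue  # enforce N = sum_i n_i, Eq. (3.3)
        # Total energy E_R = sum_i eps_i * n_i, Eq. (3.2)
        energy = sum(e * n for e, n in zip(level_energies, occupations))
        z += math.exp(-beta * energy)  # one term of Eq. (3.1)
    return z

# Hypothetical example: two levels with energies 0 and 1, two bosons, beta = 1.
# The allowed states are (2,0), (1,1) and (0,2), with energies 0, 1 and 2.
z_two = canonical_partition_function([0.0, 1.0], n_particles=2, beta=1.0)
```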

Influence of Thermal Fluctuations on Active Diffusion at Large Péclet Numbers

Influence of thermal fluctuations on active diffusion at large Péclet numbers. Cite as: Phys. Fluids 33, 051904 (2021); https://doi.org/10.1063/5.0049386 Submitted: 04 March 2021. Accepted: 15 April 2021. Published Online: 14 May 2021. O. T. Dyer and R. C. Ball. This paper was selected as Featured. AFFILIATIONS: Department of Physics, University of Warwick, Coventry, CV4 7AL, United Kingdom. ABSTRACT: Three-dimensional Wavelet Monte Carlo dynamics simulations are used to study the dynamics of passive particles in the presence of microswimmers (both represented by neutrally buoyant spheres), taking into account the often-omitted thermal motion alongside the hydrodynamic flows generated by the swimmers.