Towards CNN-Based Acoustic Modeling of Seventh Chords for Automatic Chord Recognition

Total pages: 16

File type: PDF, Size: 1020Kb


Recommended publications

-

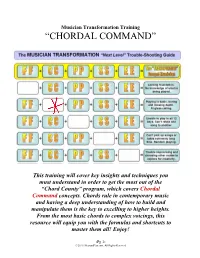

“Chordal Command”

Musician Transformation Training: “CHORDAL COMMAND”. This training will cover key insights and techniques you must understand in order to get the most out of the “Chord County” program, which covers Chordal Command concepts. Chords rule in contemporary music, and having a deep understanding of how to build and manipulate them is the key to reaching greater heights. From the most basic chords to complex voicings, this resource will equip you with the formulas and shortcuts to master them all! Enjoy! © 2010 HearandPlay.com. All Rights Reserved.

Introduction. In this guide, we’ll be starting with triads and what I call the “FANTASTIC FOUR.” Then we’ll move on to shortcuts that will help you master extended chords (the heart of contemporary playing). After that, we’ll discuss inversions (the key to multiplying your chordal vocabulary), primary vs. secondary chords, and we’ll end on voicings and the difference between “voicings” and “inversions.” But first, let’s turn to some common problems musicians encounter when it comes to chordal mastery.

Common Problems. 1. Lack of chordal knowledge beyond triads: Musicians who fall into this category have simply never reached beyond the basic triads (major, minor, diminished, augmented) and are stuck playing the same chords they’ve always played. There is a mental block that almost prohibits them from learning and retaining new chords. Extra effort must be made to embrace new chords, no matter how difficult and unusual they are at first. Knowing the chord formulas and shortcuts that will turn any basic triad into an extended chord is the secret.

Music Content Analysis : Key, Chord and Rhythm Tracking In

MUSIC CONTENT ANALYSIS : KEY, CHORD AND RHYTHM TRACKING IN ACOUSTIC SIGNALS. ARUN SHENOY KOTA (B.Eng. (Computer Science), Mangalore University, India). A THESIS SUBMITTED FOR THE DEGREE OF MASTER OF SCIENCE, DEPARTMENT OF COMPUTER SCIENCE, NATIONAL UNIVERSITY OF SINGAPORE, 2004.

Acknowledgments. I am grateful to Dr. Wang Ye for extending an opportunity to pursue audio research and work on various aspects of music analysis, which has led to this dissertation. Through his ideas, support and enthusiastic supervision, he is in many ways directly responsible for much of the direction this work took. He has been the best advisor and teacher I could have wished for and it has been a joy to work with him. I would like to acknowledge Dr. Terence Sim for his support, in the role of a mentor, during my first term of graduate study and for our numerous technical and music-theoretic discussions thereafter. He has also served as my thesis examiner along with Dr. Mohan Kankanhalli. I greatly appreciate the valuable comments and suggestions given by them. Special thanks to Roshni for her contribution to my work through our numerous discussions and constructive arguments. She has also been a great source of practical information, as well as being happy to be the first to hear my outrage or glee at the day’s current events. There are a few special people in the audio community that I must acknowledge due to their importance in my work.

A Proposal for the Inclusion of Jazz Theory Topics in the Undergraduate Music Theory Curriculum

University of Tennessee, Knoxville. TRACE: Tennessee Research and Creative Exchange. Masters Theses, Graduate School, 8-2016. A Proposal for the Inclusion of Jazz Theory Topics in the Undergraduate Music Theory Curriculum. Alexis Joy Smerdon, University of Tennessee, Knoxville, [email protected]. Follow this and additional works at: https://trace.tennessee.edu/utk_gradthes. Part of the Music Education Commons, Music Pedagogy Commons, and the Music Theory Commons.

Recommended Citation: Smerdon, Alexis Joy, "A Proposal for the Inclusion of Jazz Theory Topics in the Undergraduate Music Theory Curriculum." Master's Thesis, University of Tennessee, 2016. https://trace.tennessee.edu/utk_gradthes/4076

This Thesis is brought to you for free and open access by the Graduate School at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in Masters Theses by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected].

To the Graduate Council: I am submitting herewith a thesis written by Alexis Joy Smerdon entitled "A Proposal for the Inclusion of Jazz Theory Topics in the Undergraduate Music Theory Curriculum." I have examined the final electronic copy of this thesis for form and content and recommend that it be accepted in partial fulfillment of the requirements for the degree of Master of Music, with a major in Music. Barbara A. Murphy, Major Professor. We have read this thesis and recommend its acceptance: Kenneth Stephenson, Alex van Duuren. Accepted for the Council: Carolyn R. Hodges, Vice Provost and Dean of the Graduate School. (Original signatures are on file with official student records.)

A Thesis Presented for the Master of Music Degree, The University of Tennessee, Knoxville. Alexis Joy Smerdon, August 2016. Copyright © 2016 by Alexis Joy Smerdon. All rights reserved.

Reharmonizing Chord Progressions

Reharmonizing Chord Progressions. We’re going to spend a little bit of time talking about just a few of the techniques which you’ve already learned about, and how you can use these tools to add interest and movement to your own chord progressions.

CHORD INVERSIONS. If a song that you’re playing stays on the same chord for several beats, it can begin to sound stagnant if you play the same chord voicing over and over. One of the things you can do to add movement to the chord progression is to stay on the same chord but choose a different inversion of that chord. For instance, if you have 2 consecutive measures where the 1 (major) chord forms the harmony, try playing the first measure using 1 in root position and start the next measure by changing to a 1 over 3 (in other words, use a first inversion 1 chord). In modern chord notation, we’d write this as C/E. Or, if you don’t want to change the bass note, you can at least try using a different ‘voicing’ of the chord: instead of (from bottom to top note) C, G, E, C you might try C, C, G, E or C, G, E, G. For a guitarist, this may mean just playing the chord in a different position. For a keyboardist, you may have to consider which note names are doubled and whether the spacing between notes is closer or farther apart.

SUBSTITUTE CHORDS OF SIMILAR QUALITY. One of the things we’ve talked about previously is that certain chords in any given key sound ‘at rest’ and certain chords sound with a great deal of tension and feel like they ‘need to resolve’ to a place of rest.
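The inversion idea in the excerpt above (same chord, different bass note, written as a slash chord such as C/E) can be sketched in a few lines of Python. This is only an illustration: the function names `inversions` and `slash_symbol` are hypothetical, and the sketch works on note names alone, ignoring octaves and doubled notes.

```python
def inversions(root_position):
    """Return every inversion of a chord, given its note names in root position.

    Each inversion moves the lowest note to the top, so the bass note changes
    while the set of notes stays the same.
    """
    return [root_position[i:] + root_position[:i] for i in range(len(root_position))]


def slash_symbol(chord_symbol, chord_root, bass):
    """Write the chord as a slash chord when the bass note is not the root."""
    return chord_symbol if bass == chord_root else f"{chord_symbol}/{bass}"


c_major = ["C", "E", "G"]  # the 1 chord in the key of C
for notes in inversions(c_major):
    print(slash_symbol("C", "C", notes[0]), notes)
```

The second line printed corresponds to the first-inversion chord that the excerpt writes as C/E.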

Piano Lessons Book V2.Pdf

Learn & Master Piano. Table of Contents:

Session 1 – First Things First: Finding the Notes on the Keyboard
Session 2 – Major Progress: Major Chords, Notes on the Treble Clef
Session 3 – Scaling the Ivories: C Major Scale, Scale Intervals, Chord Intervals
Session 4 – Left Hand & Right Foot: Bass Clef Notes, Sustain Pedal
Session 5 – Minor Adjustments: Minor Chords and How They Work
Session 6 – Upside Down Chords: Chord Inversions, Reading Rhythms
Session 7 – The Piano as a Singer: Playing Lyrically, Reading Rests in Music
Session 8 – Black is Beautiful: Learning the Notes on the Black Keys
Session 9 – Black Magic: More Work with Black Keys, The Minor Scale
Session 10 – Making the Connection: Inversions, Left-Hand Accompaniment Patterns
Session 11 – Let it Be
Session 15 – Pretty Chords: Major 7th Chords, Sixteenth Notes
Session 16 – The Dominant Sound: Dominant 7th Chords, Left-Hand Triads, D Major Scale
Session 17 – Gettin’ the Blues: The 12-Bar Blues Form, Syncopated Rhythms
Session 18 – Boogie-Woogie & Bending the Keys: Boogie-Woogie Bass Line, Grace Notes
Session 19 – Minor Details: Minor 7th Chords
Session 20 – The Left Hand as a Bass Player: Left-Hand Bass Lines
Session 21 – The Art of Ostinato: Ostinato, Suspended Chords
Session 22 – Harmonizing: Harmony, Augmented Chords
Session 23 – Modern Pop Piano: Major 2 Chords
Session 24 – Walkin’ the Blues & Shakin’ the Keys: Sixth Chords, Walking Bass Lines, The Blues Scale, Tremolo
Session 25 – Ragtime, Stride, & Diminished

Chord Names and Symbols (Popular Music) from Wikipedia, the Free Encyclopedia

Chord names and symbols (popular music). From Wikipedia, the free encyclopedia. Various kinds of chord names and symbols are used in different contexts to represent musical chords. In most genres of popular music, including jazz, pop, and rock, a chord name and the corresponding symbol are typically composed of one or more of the following parts:

1. The root note (e.g. C).
2. The chord quality (e.g. major, maj, or M).
3. The number of an interval (e.g. seventh, or 7), or less often its full name or symbol (e.g. major seventh, maj7, or M7).
4. The altered fifth (e.g. sharp five, or ♯5).
5. An additional interval number (e.g. add 13 or add13), in added tone chords.

For instance, the name C augmented seventh, and the corresponding symbol Caug7, or C+7, are both composed of parts 1, 2, and 3. Except for the root, these parts do not refer to the notes which form the chord, but to the intervals they form with respect to the root. For instance, Caug7 indicates a chord formed by the notes C-E-G♯-B♭. The three parts of the symbol (C, aug, and 7) refer to the root C, the augmented (fifth) interval from C to G♯, and the (minor) seventh interval from C to B♭. A set of decoding rules is applied to deduce the missing information. Although they are used occasionally in classical music, these names and symbols are "universally used in jazz and popular music",[1] usually inside lead sheets, fake books, and chord charts, to specify the harmony of compositions.
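The point that a chord symbol names intervals above the root, rather than notes, can be illustrated with a short Python sketch that spells out Caug7 from its root and intervals. The names `INTERVALS`, `spell`, and `chord_notes` are hypothetical, the interval table covers only the handful of intervals needed here, and the spelling logic is one simple approach, not the decoding rules the article refers to.

```python
NATURAL_PC = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
LETTERS = "CDEFGAB"

# Each interval is (letter steps above the root, semitones above the root).
INTERVALS = {
    "major third": (2, 4),
    "perfect fifth": (4, 7),
    "augmented fifth": (4, 8),
    "minor seventh": (6, 10),
    "major seventh": (6, 11),
}


def spell(root, interval):
    """Spell the note lying the given interval above the root (e.g. 'C', 'B♭')."""
    steps, semitones = INTERVALS[interval]
    root_pc = NATURAL_PC[root[0]] + root.count("♯") - root.count("♭")
    letter = LETTERS[(LETTERS.index(root[0]) + steps) % 7]
    target_pc = (root_pc + semitones) % 12
    # Signed distance from the natural letter to the target pitch, in [-6, 5].
    offset = (target_pc - NATURAL_PC[letter] + 6) % 12 - 6
    accidental = "♯" * offset if offset > 0 else "♭" * -offset
    return letter + accidental


def chord_notes(root, intervals):
    """Return the root followed by the notes at each interval above it."""
    return [root] + [spell(root, name) for name in intervals]


# Caug7: root C, major third, augmented fifth, minor seventh.
print("-".join(chord_notes("C", ["major third", "augmented fifth", "minor seventh"])))
# prints C-E-G♯-B♭
```

Note that the major third is not written in the symbol Caug7 at all; supplying it is an example of the "missing information" that decoding rules have to fill in.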

How to Read Chord Symbols

HOW TO READ CHORD SYMBOLS. The letters telling you which chord to play are called chord qualities. Chord qualities refer to the intervals between notes, which define the chord. The main chord qualities are:

• Major, and minor.
• Augmented, diminished, and half-diminished.
• Dominant.

Some of the symbols used for chord quality are similar to those used for interval quality:

• m, or min for minor,
• M, maj, or no symbol for major,
• aug for augmented,
• dim for diminished.

In addition, however,

• Δ is sometimes used for major, instead of the standard M, or maj,
• − is sometimes used for minor, instead of the standard m or min,
• +, or aug, is used for augmented (A is not used),
• o, °, dim, is used for diminished (d is not used),
• ø, or Ø is used for half diminished,
• dom is used for dominant.

Chord qualities are sometimes omitted: you will only see C or D (meaning play C Major or D Major). When specified, they appear immediately after the root note. For instance, in the symbol Cm7 (C minor seventh chord) C is the root and m is the chord quality. When there are multiple “qualities” after the chord name, the accordionist will play the first “quality” for the left hand chord and “define” the other “qualities” in the right hand. The table shows the application of these generic and specific rules to interpret some of the main chord symbols. The same rules apply for the analysis of chord names. For each symbol, several formatting options are available. Except for the root, all the other parts of the symbols may be either superscripted or subscripted.
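As a rough illustration of the symbol list above, the following Python sketch splits a chord symbol into root, quality, and whatever follows. The table `QUALITY_SYMBOLS` contains only the symbols named in the excerpt; the function name `parse_chord` and the root pattern (a letter A to G with one optional accidental) are assumptions. A bare number such as the 7 in C7 is not treated specially here, so this sketch would read that symbol as "major" plus a trailing 7.

```python
import re

# Quality symbols taken from the list above; everything else is an assumption.
QUALITY_SYMBOLS = {
    "maj": "major", "M": "major", "Δ": "major",
    "min": "minor", "m": "minor", "−": "minor",
    "aug": "augmented", "+": "augmented",
    "dim": "diminished", "o": "diminished", "°": "diminished",
    "ø": "half-diminished", "Ø": "half-diminished",
    "dom": "dominant",
}

ROOT = re.compile(r"^([A-G][#b♯♭]?)(.*)$")


def parse_chord(symbol):
    """Split a chord symbol into (root, quality, remainder)."""
    match = ROOT.match(symbol)
    if not match:
        raise ValueError(f"no root note in {symbol!r}")
    root, rest = match.groups()
    # Try longer symbols first so that 'maj' and 'min' win over 'm'.
    for token in sorted(QUALITY_SYMBOLS, key=len, reverse=True):
        if rest.startswith(token):
            return root, QUALITY_SYMBOLS[token], rest[len(token):]
    # No quality symbol: the excerpt says this means major.
    return root, "major", rest


print(parse_chord("Cm7"))   # ('C', 'minor', '7')
print(parse_chord("D"))     # ('D', 'major', '')
```

Matching is case-sensitive on purpose, since the excerpt distinguishes M (major) from m (minor).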

Extensions on Diatonic Chords the Dominant Ninth (V9)

Extensions on Diatonic Chords. These chords fall in the category of extended tertian harmonies and contain five or more pitches, which requires a selection of notes when writing for four parts. Chords with extensions appear primarily in root position. An extension may be analyzed as an essential harmonic tone or as an unessential non-harmonic tone (appoggiatura or accented passing tone).

The Dominant Ninth (V9), Eleventh (V11) and Thirteenth (V13). In four voices, the Dominant 9th (V9) chord consists of scale degrees 5, 7, 4, and 6. The V9 adds a M9 to the V7. When writing for four voices, omit the fifth of the chord (scale degree 2). The ninth (scale degree 6) is usually in the highest voice. Any alterations to the 9th are added next to it (lowered: b9/-9, raised: #9/+9). The Dominant Eleventh chord adds the tonic in the highest voice to form a perfect 11th over the bass. Always omit the third of the chord so that it doesn’t clash with the 11th, but continue to support the 11th with the 7th and (if possible) the 9th. You may raise the 11th with #11. This chord does not appear until the 20th century; most 11ths before that time can be analyzed as non-chord tones.

The Dominant 13th (V13) chord adds scale degree 3 in the highest voice, to form a major 13th over the root. You may omit the fifth and 11th in this chord, but you will always retain the 7th (scale degree 4). The 13th may be lowered with a b13/-13.
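The scale degrees named in the excerpt above follow from stacking diatonic thirds on scale degree 5. A minimal Python sketch, assuming the key of C major and a hypothetical helper `stack_thirds`, shows where each degree comes from:

```python
C_MAJOR = ["C", "D", "E", "F", "G", "A", "B"]  # scale degrees 1 to 7


def stack_thirds(scale, start_degree, size):
    """Stack `size` diatonic thirds starting on a 1-based scale degree.

    Returns a list of (scale degree, note name) pairs, lowest chord member first.
    """
    pairs = []
    for k in range(size):
        degree = (start_degree - 1 + 2 * k) % 7 + 1
        pairs.append((degree, scale[degree - 1]))
    return pairs


v13_stack = stack_thirds(C_MAJOR, 5, 7)  # root, 3rd, 5th, 7th, 9th, 11th, 13th
v9 = v13_stack[:5]

# Four-voice V9: drop the chord fifth (index 2, scale degree 2).
v9_four_voice = [pair for index, pair in enumerate(v9) if index != 2]

print(v9)             # degrees 5, 7, 2, 4, 6
print(v9_four_voice)  # degrees 5, 7, 4, 6
print(v13_stack[5], v13_stack[6])  # the 11th (scale degree 1) and 13th (scale degree 3)
```

In C major the full V9 is G, B, D, F, A; dropping D leaves the four-voice form G, B, F, A, and the 11th and 13th land on C and E, matching the tonic and scale degree 3 mentioned in the text.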

Contemp Piano 101 Instructions

Contemp PIano 101 Instructions. Collection Editor: ET. Authors: Catherine Schmidt-Jones, C.M. Sunday. Online: < http://cnx.org/content/col10605/1.1/ >. CONNEXIONS, Rice University, Houston, Texas. This selection and arrangement of content as a collection is copyrighted by E T. It is licensed under the Creative Commons Attribution 2.0 license (http://creativecommons.org/licenses/by/2.0/). Collection structure revised: November 17, 2008. PDF generated: February 15, 2013. For copyright and attribution information for the modules contained in this collection, see p. 22.

Table of Contents: Beyond Triads: Naming Other Chords; Sight-Reading Music; 1 Piano; 2 Dynamics and Accents in Music; Index; Attributions. Available for free at Connexions <http://cnx.org/content/col10605/1.1>

Beyond Triads: Naming Other Chords. Introduction. Once you know how to name triads (please see Triads and Naming Triads), you need only a few more rules to be able to name all of the most common chords. This skill is necessary for those studying music theory. It's also very useful at a "practical" level for composers, arrangers, and performers (especially people playing chords, like pianists and guitarists), who need to be able to talk to each other about the chords that they are reading, writing, and playing. Chord manuals, fingering charts, chord diagrams, and notes written out on a staff are all very useful, especially if the composer wants a very particular sound on a chord. But all you really need to know are the name of the chord, your major scales and minor scales, and a few rules, and you can figure out the notes in any chord for yourself.

The Chord Numbering System

The Chord Numbering System. https://www.guitar-chords.org.uk/chord-theory/chord-numbering.html

Chord Numbers. Just as diatonic scale notes are numbered from one to seven, we also use numbers to denote the position of a chord relative to its key (scale). Roman numerals are used to label chord positions. Using the previous example, the chords belonging to C major would be labelled from one to seven like this:

• I - C Major
• ii - D Minor
• iii - E Minor
• IV - F Major
• V - G Major
• vi - A Minor
• vii - B Dim

Note the use of lower case characters for minor chords and upper case for major. It is common practice to write them this way. By thinking about chords in terms of scale note positions, it becomes a much easier job to remember, or quickly figure out, what chords belong to what key. All we need to know is the scale notes and a simple chord order. For instance, we have used C major for our example but it doesn't really matter what scale is used, the order of chords remains the same. We can use this to create a simple formula for any major key. So from one to seven, the chord types are:

I = Maj, ii = Min, iii = Min, IV = Maj, V = Maj, vi = Min, vii = Dim

Using this idea it becomes a simple task to find the chords in any major key. Let's say we want to know what chords belong to the key of A major. All we need to know are the notes in the A major scale, then we find the chords like so ..
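The excerpt breaks off before showing its own working for A major, but the formula it states (Maj, Min, Min, Maj, Maj, Min, Dim over the seven scale notes) is easy to apply mechanically. Below is a minimal Python sketch under that assumption; the function name `chords_in_major_key` is hypothetical, and the caller is expected to supply the seven notes of the major scale.

```python
NUMERALS = ["I", "ii", "iii", "IV", "V", "vi", "vii"]
QUALITIES = ["Maj", "Min", "Min", "Maj", "Maj", "Min", "Dim"]


def chords_in_major_key(scale_notes):
    """Pair each note of a major scale with its Roman numeral and chord type."""
    if len(scale_notes) != 7:
        raise ValueError("a major scale has exactly seven notes")
    return [
        (numeral, f"{note} {quality}")
        for numeral, note, quality in zip(NUMERALS, scale_notes, QUALITIES)
    ]


a_major_scale = ["A", "B", "C#", "D", "E", "F#", "G#"]
for numeral, chord in chords_in_major_key(a_major_scale):
    print(f"{numeral} - {chord}")
```

Passing the C major scale instead reproduces the list given in the excerpt (I - C Maj through vii - B Dim), which is a quick way to check the formula.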

Download a Sample

www.jamieholroydguitar.com. Beginner Jazz Guitar Chords: Learn to Comp Like Your Favourite Jazz Guitarists. Written by: Jamie Holroyd. Edited by: Hayley Lawrence. Cover design: Bill Mauro. Copyright 2019 Jamie Holroyd Guitar.

Table of Contents: Introduction; How to Use This eBook; Chapter 1 - Root on 6th & 5th String Chords; Chapter 2 - Extended Chords; Chapter 3 - Drop 2 Chords; Chapter 4 - Comping Rhythms; Chapter 5 - Transcribing Jazz Chords; Chapter 6 - Diminished 7th Chords; Chapter 7 - Dominant 7b9 Chords; Chapter 8 - Dominant 7#9 Chords; Chapter 9 - 9th and 13th Chords; Chapter 10 - Triads; Chapter 11 - 6th Chords; Chapter 12 - Inner Chord Voicings; Epilogue; Blank Music Paper; Blank TAB Paper; Transcription Answers; About The Author.

Introduction. Welcome to Beginner Jazz Guitar Chords! Glad to have you here. You're in the right place if you're new to jazz guitar and want to learn how to play jazz guitar chords. Two of the biggest challenges you face as a jazz guitarist are learning chords in a systematic way and making them sound like jazz. This eBook gives you the skills to thoroughly learn jazz chords and make them sound like your favourite players. To begin with, you will start by learning the fundamental jazz chords. Then you will move progressively while building on what you learn in each chapter. By the end of the eBook you will be able to comp through any chord sheet using a variety of harmonic colours and rhythmic imagination.

How to Use This eBook. The following tips in this section will help you keep focused and avoid getting overwhelmed.

Arranging for School Jazz Ensembles

OUP UNCORRECTED PROOF – FIRSTPROOFS, Tue Feb 05 2019, NEWGEN. 22 Arranging for School Jazz Ensembles. Matthew Clauhs.

No Need to Be the Baby Ellington Band. Emily talks about how in the 1970s there was very little (if any) music published specifically for middle school jazz ensembles. Consequently, anything that they played she would have to first transcribe. In those early days, she arranged many Earth, Wind, and Fire tunes for the abilities and instrumentation of her middle school jazz band and was happy to do it because playing these contemporary tunes heard on the radio was worth it for the students:

They dug it. They loved it. Somebody would get on that ratty set and maybe it was me on piano and we would play a George Benson tune or we would play an Earth, Wind, and Fire tune and they would love it. So, then it becomes motivational, not necessarily educational.

Emily notes that the harder the chart is, the less musicality middle school students can put into it. Consequently, she tries to find charts that are relatively easy but have retained characteristic elements of jazz. She finds that Kendor publishes a lot of literature that fits this description. Sometimes the really characteristic jazz charts are written with ranges outside the ability of middle school students, so she rewrites the parts in a more comfortable range. While she finds many swing charts that work well for middle school jazz ensemble, it is more difficult to find Latin charts that “work” at that level. “There’s not a whole lot of literature written on the Latin side that’s right: either the clave is wrong or the tune just doesn’t work.”

I don’t feel the need to be the baby Ellington band at this age.