Replacing Matched Control Experiments with Machine

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


2017.08.28 Anne Barry-Reidy Thesis Final.Pdf

Regulation of Bovine β-Defensin Expression. This thesis is submitted to the University of Dublin for the degree of Doctor of Philosophy, 2017. Anne Barry-Reidy, School of Biochemistry & Immunology, Trinity College Dublin. Supervisors: Prof. Cliona O’Farrelly & Dr. Kieran Meade. Table of contents: Declaration; Acknowledgements; Abbreviations; List of Figures; List of Tables; Abstract; Chapter 1 Introduction; 1.1 Antimicrobial/Host-defence peptides; 1.2 Defensins; 1.3 β-defensins -

Global DNA Methylation Remodeling Accompanies CD8 T Cell Effector Function

Global DNA Methylation Remodeling Accompanies CD8 T Cell Effector Function. Christopher D. Scharer,* Benjamin G. Barwick,* Benjamin A. Youngblood,*,† Rafi Ahmed,*,† and Jeremy M. Boss. J Immunol 2013; 191:3419-3429; prepublished online 16 August 2013; doi: 10.4049/jimmunol.1301395. http://www.jimmunol.org/content/191/6/3419. Supplementary Material: http://www.jimmunol.org/content/suppl/2013/08/20/jimmunol.1301395.DC1. References: this article cites 81 articles, 25 of which can be accessed for free at http://www.jimmunol.org/content/191/6/3419.full#ref-list-1. The Journal of Immunology is published twice each month by The American Association of Immunologists, Inc., 1451 Rockville Pike, Suite 650, Rockville, MD 20852. Copyright © 2013 by The American Association of Immunologists, Inc. All rights reserved. Print ISSN: 0022-1767. Online ISSN: 1550-6606. -

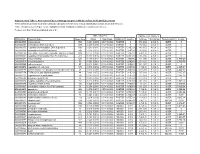

Association of Gene Ontology Categories with Decay Rate for HepG2 Experiments

Supplementary Table 1: Association of Gene Ontology Categories with Decay Rate for HepG2 Experiments. These tables show details for all Gene Ontology categories. Inferences for manual classification scheme shown at the bottom. Those categories used in Figure 1A are highlighted in bold. Standard deviations are shown in parentheses. P-values less than 1E-20 are indicated with a "0".

| GO Number | Category Name | Probe Sets | Decay rate r (hour^-1), Group | Decay rate r (hour^-1), Non-Group | Distribution | p-value | Half-life < 2 hr (%), In-Group | Half-life < 2 hr (%), Non-Group | Representation | p-value |
|---|---|---|---|---|---|---|---|---|---|---|
| GO:0006350 | transcription | 1523 | 0.221 (0.009) | 0.127 (0.002) | FASTER | 0 | 13.1 (0.4) | 4.5 (0.1) | OVER | 0 |
| GO:0006351 | transcription, DNA-dependent | 1498 | 0.220 (0.009) | 0.127 (0.002) | FASTER | 0 | 13.0 (0.4) | 4.5 (0.1) | OVER | 0 |
| GO:0006355 | regulation of transcription, DNA-dependent | 1163 | 0.230 (0.011) | 0.128 (0.002) | FASTER | 5.00E-21 | 14.2 (0.5) | 4.6 (0.1) | OVER | 0 |
| GO:0006366 | transcription from Pol II promoter | 845 | 0.225 (0.012) | 0.130 (0.002) | FASTER | 1.88E-14 | 13.0 (0.5) | 4.8 (0.1) | OVER | 0 |
| GO:0006139 | nucleobase, nucleoside, nucleotide and nucleic acid metabolism | 3004 | 0.173 (0.006) | 0.127 (0.002) | FASTER | 1.28E-12 | 8.4 (0.2) | 4.5 (0.1) | OVER | 0 |
| GO:0006357 | regulation of transcription from Pol II promoter | 487 | 0.231 (0.016) | 0.132 (0.002) | FASTER | 6.05E-10 | 13.5 (0.6) | 4.9 (0.1) | OVER | 0 |
| GO:0008283 | cell proliferation | 625 | 0.189 (0.014) | 0.132 (0.002) | FASTER | 1.95E-05 | 10.1 (0.6) | 5.0 (0.1) | OVER | 1.50E-20 |
| GO:0006513 | monoubiquitination | 36 | 0.305 (0.049) | 0.134 (0.002) | FASTER | 2.69E-04 | 25.4 (4.4) | 5.1 (0.1) | OVER | 2.04E-06 |
| GO:0007050 | cell cycle arrest | 57 | 0.311 (0.054) | 0.133 (0.002) | - | | | | | |

Detecting Global Influence of Transcription

Detecting global influence of transcription factor interactions on gene expression in lymphoblastoid cells using neural network models. Neel Patel1,2 and William S. Bush2* ([email protected]). 1Department of Nutrition, Case Western Reserve University, Cleveland, OH, USA. 2Department of Population and Quantitative Health Sciences, Case Western Reserve University, Cleveland, OH, USA. *Corresponding author (email: [email protected]). Research Article. Keywords: Transcription factors, Gene expression, Machine learning, Neural network, Chromatin-looping, Regulatory module, Multi-omics. Posted Date: April 15th, 2021. DOI: https://doi.org/10.21203/rs.3.rs-406028/v1. License: This work is licensed under a Creative Commons Attribution 4.0 International License. Abstract. Background: Transcription factor (TF) interactions are known to regulate target gene (TG) expression in eukaryotes via TF regulatory modules (TRMs). Such interactions can be formed due to co-localizing TFs binding proximally to each other in the DNA sequence or over long distances between distally binding TFs via chromatin looping. While the former type of interaction has been characterized extensively, long distance TF interactions are still largely understudied. Furthermore, most prior approaches have focused on characterizing physical TF interactions without accounting for their effects on TG expression regulation. Understanding TRM-based TG expression regulation could aid in understanding diseases caused by disruptions to these mechanisms.
In this paper, we present a novel neural network-based TRM detection approach that consists of using multi-omics TF-based regulatory mechanism information to generate features for building non-linear multilayer perceptron TG expression prediction models in the GM12878 immortalized lymphoblastoid cells. -

Supplementary Table S4. FGA Co-Expressed Gene List in LUAD

Supplementary Table S4. FGA co-expressed gene list in LUAD tumors

| Symbol | R | Locus | Description |
|---|---|---|---|
| FGG | 0.919 | 4q28 | fibrinogen gamma chain |
| FGL1 | 0.635 | 8p22 | fibrinogen-like 1 |
| SLC7A2 | 0.536 | 8p22 | solute carrier family 7 (cationic amino acid transporter, y+ system), member 2 |
| DUSP4 | 0.521 | 8p12-p11 | dual specificity phosphatase 4 |
| HAL | 0.51 | 12q22-q24.1 | histidine ammonia-lyase |
| PDE4D | 0.499 | 5q12 | phosphodiesterase 4D, cAMP-specific |
| FURIN | 0.497 | 15q26.1 | furin (paired basic amino acid cleaving enzyme) |
| CPS1 | 0.49 | 2q35 | carbamoyl-phosphate synthase 1, mitochondrial |
| TESC | 0.478 | 12q24.22 | tescalcin |
| INHA | 0.465 | 2q35 | inhibin, alpha |
| S100P | 0.461 | 4p16 | S100 calcium binding protein P |
| VPS37A | 0.447 | 8p22 | vacuolar protein sorting 37 homolog A (S. cerevisiae) |
| SLC16A14 | 0.447 | 2q36.3 | solute carrier family 16, member 14 |
| PPARGC1A | 0.443 | 4p15.1 | peroxisome proliferator-activated receptor gamma, coactivator 1 alpha |
| SIK1 | 0.435 | 21q22.3 | salt-inducible kinase 1 |
| IRS2 | 0.434 | 13q34 | insulin receptor substrate 2 |
| RND1 | 0.433 | 12q12 | Rho family GTPase 1 |
| HGD | 0.433 | 3q13.33 | homogentisate 1,2-dioxygenase |
| PTP4A1 | 0.432 | 6q12 | protein tyrosine phosphatase type IVA, member 1 |
| C8orf4 | 0.428 | 8p11.2 | chromosome 8 open reading frame 4 |
| DDC | 0.427 | 7p12.2 | dopa decarboxylase (aromatic L-amino acid decarboxylase) |
| TACC2 | 0.427 | 10q26 | transforming, acidic coiled-coil containing protein 2 |
| MUC13 | 0.422 | 3q21.2 | mucin 13, cell surface associated |
| C5 | 0.412 | 9q33-q34 | complement component 5 |
| NR4A2 | 0.412 | 2q22-q23 | nuclear receptor subfamily 4, group A, member 2 |
| EYS | 0.411 | 6q12 | eyes shut homolog (Drosophila) |
| GPX2 | 0.406 | 14q24.1 | glutathione peroxidase - |

Transcriptome Analysis of Complex I-Deficient Patients Reveals Distinct

van der Lee et al. BMC Genomics (2015) 16:691 DOI 10.1186/s12864-015-1883-8 RESEARCH ARTICLE Open Access Transcriptome analysis of complex I-deficient patients reveals distinct expression programs for subunits and assembly factors of the oxidative phosphorylation system Robin van der Lee1†, Radek Szklarczyk1,2†, Jan Smeitink3, Hubert J. M. Smeets4, Martijn A. Huynen1 and Rutger Vogel3* Abstract Background: Transcriptional control of mitochondrial metabolism is essential for cellular function. A better understanding of this process will aid the elucidation of mitochondrial disorders, in particular of the many genetically unsolved cases of oxidative phosphorylation (OXPHOS) deficiency. Yet, to date only few studies have investigated nuclear gene regulation in the context of OXPHOS deficiency. In this study we performed RNA sequencing of two control and two complex I-deficient patient cell lines cultured in the presence of compounds that perturb mitochondrial metabolism: chloramphenicol, AICAR, or resveratrol. We combined this with a comprehensive analysis of mitochondrial and nuclear gene expression patterns, co-expression calculations and transcription factor binding sites. Results: Our analyses show that subsets of mitochondrial OXPHOS genes respond opposingly to chloramphenicol and AICAR, whereas the response of nuclear OXPHOS genes is less consistent between cell lines and treatments. Across all samples nuclear OXPHOS genes have a significantly higher co-expression with each other than with other genes, including those encoding mitochondrial proteins. We found no evidence for complex-specific mRNA expression regulation: subunits of different OXPHOS complexes are similarly (co-)expressed and regulated by a common set of transcription factors. However, we did observe significant differences between the expression of nuclear genes for OXPHOS subunits versus assembly factors, suggesting divergent transcription programs. -

Supporting Information

Supporting Information. Rangel et al. 10.1073/pnas.1613859113. SI Materials and Methods. Tumor Xenograft Studies. Female 6- to 7-wk-old Crl:NU(NCr)-Foxn1nu mice were purchased from Charles River Laboratories. Mammary fat pad injections into athymic nude mice were performed using 3 × 10^6 cells (HCC70, HCC1954, MDA-MB-468, and MDA-MB-231). The human cancer cells were resuspended in 100 μL of a 1:1 mix of PBS and matrigel (TREVIGEN). For HCC1569 human breast cancer cells, we injected 4 × 10^6 cells in 100 μL of a 1:1 mix of PBS and matrigel. Injections were done into the fourth mammary gland. Tumors were measured using a digital caliper, and the tumor volume was calculated using the following formula: volume (mm^3) = width × length/2. At the end of the experiment, tumor tissues were sectioned for fixation (10% formalin or 4% paraformaldehyde) and RNA isolation. [Second column of the same page:] genes were identified, and the microarray data were used to determine the closest intrinsic subtype centroid for each sample, based on Spearman correlation using logged mean-centered expression data. To estimate cellular proliferation, a “gene proliferation signature” (32) was used to generate a proliferation score for each sample. Briefly, using the logged expression data for a subset of proliferation-related genes, singular value decomposition was used to produce a “proliferation metagene,” which was then scaled to generate a score between 0 and 1, with a higher score denoting an increased level of proliferation relative to samples with lower scores. Human Data. Data from a combined cohort of 2,116 breast tumors -
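The subtype-assignment step described in this excerpt (closest centroid by Spearman correlation) can be illustrated with a minimal sketch. It is not the authors' code: the function names, the centroid labels `subtype_A` and `subtype_B`, and the toy expression values are all hypothetical, and the excerpt does not say how ties or constant profiles were handled. The sketch assumes average ranks for ties and non-constant input vectors.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))


def closest_centroid(sample, centroids):
    """Return the label of the centroid most correlated with the sample."""
    return max(centroids, key=lambda name: spearman(sample, centroids[name]))


# Hypothetical logged, mean-centered expression values for four genes.
centroids = {
    "subtype_A": [1.2, 0.4, -0.8, -1.5],
    "subtype_B": [-1.0, -0.3, 0.9, 1.4],
}
sample = [0.9, 0.1, -0.2, -1.1]
print(closest_centroid(sample, centroids))  # prints "subtype_A"
```

Because Spearman correlation depends only on the rank order of the genes within each profile, the assignment is unaffected by any monotonic rescaling of the expression values.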

Virtual ChIP-seq: Predicting Transcription Factor Binding

bioRxiv preprint doi: https://doi.org/10.1101/168419; this version posted March 12, 2019. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Virtual ChIP-seq: predicting transcription factor binding by learning from the transcriptome. Mehran Karimzadeh1,2,3 and Michael M. Hoffman1,2,3,4,5. 1Department of Medical Biophysics, University of Toronto, Toronto, ON, Canada. 2Princess Margaret Cancer Centre, Toronto, ON, Canada. 3Vector Institute, Toronto, ON, Canada. 4Department of Computer Science, University of Toronto, Toronto, ON, Canada. 5Lead contact: michael.hoff[email protected]. March 8, 2019. Abstract. Motivation: Identifying transcription factor binding sites is the first step in pinpointing non-coding mutations that disrupt the regulatory function of transcription factors and promote disease. ChIP-seq is the most common method for identifying binding sites, but performing it on patient samples is hampered by the amount of available biological material and the cost of the experiment. Existing methods for computational prediction of regulatory elements primarily predict binding in genomic regions with sequence similarity to known transcription factor sequence preferences. This has limited efficacy since most binding sites do not resemble known transcription factor sequence motifs, and many transcription factors are not even sequence-specific. Results: We developed Virtual ChIP-seq, which predicts binding of individual transcription factors in new cell types using an artificial neural network that integrates ChIP-seq results from other cell types and chromatin accessibility data in the new cell type. -

Detecting Global Influence of Transcription Factor Interactions on

Detecting Global Influence of Transcription Factor Interactions on Gene Expression in Lymphoblastoid Cells Using Neural Network Models. Neel Patel1,2 (https://orcid.org/0000-0001-9953-6734) and William S. Bush2* ([email protected]; https://orcid.org/0000-0002-9729-6519). 1Department of Nutrition, Case Western Reserve University, Cleveland, OH, USA. 2Department of Population and Quantitative Health Sciences, Case Western Reserve University, Cleveland, OH, USA. *Corresponding author (email: [email protected]). Research. Keywords: Transcription factors, Gene expression, Machine learning, Neural network, Chromatin-looping, Regulatory module, Multi-omics. Posted Date: August 3rd, 2021. DOI: https://doi.org/10.21203/rs.3.rs-406028/v2. License: This work is licensed under a Creative Commons Attribution 4.0 International License. Abstract. Background: Transcription factor (TF) interactions are known to regulate gene expression in eukaryotes via TF regulatory modules (TRMs). Such interactions can be formed due to co-localizing TFs binding proximally to each other in the DNA sequence or between distally binding TFs via long distance chromatin looping. While the former type of interaction has been characterized extensively, long distance TF interactions are still largely understudied. Furthermore, most prior approaches have focused on characterizing physical TF interactions without accounting for their effects on gene expression regulation. Understanding how TRMs influence gene expression regulation could aid in identifying diseases caused by disruptions to these mechanisms. In this paper, we present a novel neural network-based approach to detect TRMs in the GM12878 immortalized lymphoblastoid cell line. -

An Improved Auxin-Inducible Degron System Preserves Native Protein Levels and Enables Rapid and Specific Protein Depletion

Downloaded from genesdev.cshlp.org on September 30, 2021 - Published by Cold Spring Harbor Laboratory Press RESOURCE/METHODOLOGY An improved auxin-inducible degron system preserves native protein levels and enables rapid and specific protein depletion Kizhakke Mattada Sathyan,1 Brian D. McKenna,1 Warren D. Anderson,2 Fabiana M. Duarte,3 Leighton Core,4 and Michael J. Guertin1,2,5 1Biochemistry and Molecular Genetics Department, University of Virginia, Charlottesville, Virginia 22908, USA; 2Center for Public Health Genomics, University of Virginia, Charlottesville, Virginia 22908, USA; 3Department of Stem Cell and Regenerative Biology, Harvard University, Cambridge, Massachusetts 02138, USA; 4Department of Molecular and Cell Biology, University of Connecticut, Storrs, Connecticut 06269, USA; 5Cancer Center, University of Virginia, Charlottesville, Virginia 22908, USA Rapid perturbation of protein function permits the ability to define primary molecular responses while avoiding downstream cumulative effects of protein dysregulation. The auxin-inducible degron (AID) system was developed as a tool to achieve rapid and inducible protein degradation in nonplant systems. However, tagging proteins at their endogenous loci results in chronic auxin-independent degradation by the proteasome. To correct this deficiency, we expressed the auxin response transcription factor (ARF) in an improved inducible degron system. ARF is absent from previously engineered AID systems but is a critical component of native auxin signaling. In plants, ARF directly interacts with AID in the absence of auxin, and we found that expression of the ARF PB1 (Phox and Bem1) domain suppresses constitutive degradation of AID-tagged proteins. Moreover, the rate of auxin-induced AID degradation is substantially faster in the ARF-AID system. -

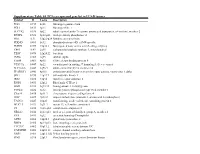

Supplement. Transcriptional Factors (TF), Protein Name and Their Description Or Function

Supplement. Transcriptional factors (TF), protein name and their description or function.

| TF | Protein name | TF description/function |
|---|---|---|
| ARID3A | AT rich interactive domain 3A (BRIGHT-like) | This gene encodes a member of the ARID (AT-rich interaction domain) family of DNA binding proteins. |
| ATF4 | Activating Transcription Factor 4 | Transcriptional activator. Binds the cAMP response element (CRE) (consensus: 5'-GTGACGT[AC][AG]-3'), a sequence present in many viral and cellular promoters. |
| CTCF | CCCTC-Binding Factor | Chromatin binding factor that binds to DNA sequence specific sites. Involved in transcriptional regulation by binding to chromatin insulators and preventing interaction between promoter and nearby enhancers and silencers. The protein can bind a histone acetyltransferase (HAT)-containing complex and function as a transcriptional activator or bind a histone deacetylase (HDAC)-containing complex and function as a transcriptional repressor. |
| E2F1-6 | E2F transcription factors 1-6 | The protein encoded by this gene is a member of the E2F family of transcription factors. The E2F family plays a crucial role in the control of cell cycle and action of tumor suppressor proteins and is also a target of the transforming proteins of small DNA tumor viruses. The E2F proteins contain several evolutionally conserved domains found in most members of the family. These domains include a DNA binding domain, a dimerization domain which determines interaction with the differentiation regulated transcription factor proteins (DP), a transactivation domain enriched in acidic amino acids, and a tumor suppressor protein association domain which is embedded within the transactivation domain. |
| EBF1 | Transcription factor COE1 | EBF1 has been shown to interact with ZNF423 and CREB binding proteins. - |

A Mutation in ZNF143 As a Novel Candidate Gene for Endothelial Corneal Dysplasia

Article A Mutation in ZNF143 as a Novel Candidate Gene for Endothelial Corneal Dysplasia Yonggoo Kim 1,2,†, Hye Jin You 3,†, Shin Hae Park 4,†, Man Soo Kim 4, Hyojin Chae 1,2, Joonhong Park 1,2, Dong Wook Jekarl 1,2, Jiyeon Kim 2, Ahlm Kwon 2, Hayoung Choi 2, Yeojae Kim 2, A Rome Paek 3, Ahwon Lee 5, Jung Min Kim 6, Seon Young Park 7, Yonghwan Kim 7, Keehyoung Joo 8,9, Jooyoung Lee 8,9,10, Jongsun Jung 11, So-Hyang Chung 4,12, Jee Won Mok 12 and Myungshin Kim 1,2,* 1 Department of Laboratory Medicine, College of Medicine, The Catholic University of Korea, Seoul 06591, Korea 2 Catholic Genetic Laboratory Center, Seoul St. Mary’s Hospital, College of Medicine, The Catholic University of Korea, Seoul 06591, Korea 3 Cancer Cell and Molecular Biology Branch, Division of Cancer Biology, National Cancer Center, Gyeonggi-do 10408, Korea 4 Department of Ophthalmology and Visual Science, Seoul St. Mary’s Hospital, College of Medicine, The Catholic University of Korea, Seoul 06591, Korea 5 Department of Hospital Pathology, College of Medicine, The Catholic University of Korea, Seoul 06591, Korea 6 Genoplan Korea, Inc., Seoul 06221, Korea 7 Department of Life Systems, Sookmyung Women’s University, Seoul 04312, Korea 8 Center for in Silico Protein Science, Korea Institute for Advanced Study, Seoul 02455, Korea 9 Center for Advanced Computation, Korea Institute for Advanced Study, Seoul 02455, Korea 10 School of Computational Sciences, Korea Institute for Advanced Study, Seoul 02455, Korea 11 Syntekabio Inc., Daejeon 34025, Korea 12 Catholic Institutes of Visual Science, The Catholic University of Korea, Seoul 06591, Korea * Correspondence: [email protected]; Tel.: +82-2-2258-1645 † These authors contributed equally to this work.