Finding People and Their Utterances in Social Media

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Class Descriptions Class Descriptions

class descriptions Class Descriptions SKILL LEVEL KEY SEWING/MACHINE CLASSES: BEGINNER Little or no sewing (i.e. construction, patterns) or machine (i.e. serger, sewing) knowledge. INTERMEDIATE Basic sewing (i.e. construction, patterns) or machine (i.e. serger, sewing) understanding. Can easily adjust stitches, tension, accessory feet, etc. ADVANCED Complete understanding of sewing (i.e. construction, patterns) and machines (i.e. serger, sewing). Able to construct a garment/quilt without guidance/assistance. Can upload, manipulate, edit embroidery designs. Complete understanding of sewing terminology. SOFTWARE: BEGINNER Little or no computer/software knowledge. INTERMEDIATE Legend Basic understanding of computers/software. Familiar with keyboards, mouse, external peripherals and equipment. (i.e. able to: copy/paste, drag/drop, make new file folders.) Hands On ADVANCED Complete understanding of computers/software. Can use multiple features of Digitizer MB. Can install/uninstall software/hardware with ease. (i.e. understands updates, drivers, Kit/Fee Required sewing machine to PC connectivity, PC terminology) Basic Sewing Kit: Sewing scissors, paper scissors, pins, seam ripper, rotary cutter/ruler/ mat, water-soluble fabric marker or chalk, tape measure, all purpose sewing thread, light Laptop Required neutral and dark neutral colors, glue stick or temporary spray adhesive, hand sewing needles, small flat head screwdriver, seam sealant, several medium safety pins. Lecture Basic Serger Kit: Sewing scissors, serger tweezers, thread snips, needle threader, floss threader, Allen screwdriver for 1100D and 1000CPX, needles, small lint brush, seam sealant. Pictures may not represent actual fabrics in the kits. 2 Class Descriptions NEW TOL ROOMS Certification for New TOL Machine for US and Canadian Dealers All US dealers must purchase a TOL machine as part of the certification. -

Sewing Machines and Sergers

Welcome To Janome Institute 2011- Your Education Connection September 3 7, at The Marriott World Center Resort In Orlando i Expand Your Horizons j How about some good news, for a change? The economic recovery has been a mixed bag for the sewing industry. Customers are buying... but they’re also holding onto their purse strings a little more tightly. They’re taking longer to make that big machine purchase. But, we’ve clearly seen, when they’re excited about a machine, they will gladly make the purchase. And that’s good news. Because at Janome Institute 2011 we’re going to unveil a machine that will cause your customers to be very excited. This top-of-the-line machine is going to redefine Janome sewing the way we did with the MC8000, MC10000, and MC11000. And it’s going to cause quite a stir among your customers. Believe me, people you’ve never seen before are going to stop by your store just to see it in person. You better have your demo ready, because you’ll be doing it a lot. (It wouldn’t be a bad idea, either, to order some extra cash register tape.) On Opening Night, Saturday, September 3rd, this machine will be revealed for the first time ever to attendees of Janome Institute 2011 in Orlando. You’re going to want to be one of them. Because starting the next day, you’ll get to try out the new machine and get the sales training and product knowledge you need to become a certified dealer. -

NYC Garment District Locations by Street 35Th Street

NYC Garment District Locations By Street Shop Address Phone Description Notes: 35th Street Open to Public - Fashion Sew Fast Sew Easy 147 W. 35th St., Suite 807 (212) 268-4321 Patterns, sewing kits Source Book Open to Public - Fashion American Sewing Supply 224 W. 35th St., Suite 500B (212) 239-3695 Professional supplies and equipment for tailors etc Source Book Open to Public - Fashion Safa Fabrics 237 W. 35th St. Ground Floor (212) 239-3415 Laces, tapestry, silk, brocade Source Book No Minimums: Big in stock inventory all colors many skins including: Leathers,Suedes, Metallics, pearlized, Exotics, Embosses, Furs Shearling , Novelty leathers Laser and Open to Public - Fashion Global Leathers 253 W. 35th St., 9th Floor (212) 244-5190 Perforated. Source Book Imported high quality fabric for dress, lining, satin; Open to Public - Fashion Nousha Tex, Sunmaid 253 W. 35th St., Ground Floor (212) 268-7770 Converter and importer of fine fabrics, woven Source Book No sales minimum. Leather and suede skins dealer. Importer, leather dealer. The source for the world's most beautiful leather. Supplier of leather, suede in lambskin, cowhide, pigskin, pearlized, printed, perforated, embossed, Tibet lamb, snakeskins, exotics, leather and fur trim providing garment accessories, home furnishing and suede Open to Public - Fashion Leather Suede Skins, Inc. 261 W. 35th St., 11th Floor (212) 967-6616 skins. Available for immediate delivery from stock. Source Book Jobber of lining, interlining, fusable, fusing fabrics; Open to Public - Fashion Moon Tex 261 W. 35th St., Ground Floor (212) 631-0970 embroidered Source Book NYC_Garment District_Shop List RI Sewing Network NYC Garment District Locations By Street Shop Address Phone Description Notes: 36th Street Open to Public - Fashion Gettinger Feather Corp. -

Term Association Modelling in Information Retrieval

TERM ASSOCIATION MODELLING IN INFORMATION RETRIEVAL JIASHU ZHAO A DISSERTATION SUBMITTED TO THE FACULTY OF GRADUATE STUDIES IN PARTIAL FULFILMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY GRADUATE PROGRAM IN COMPUTER SCIENCE AND ENGINEERING YORK UNIVERSITY TORONTO, ONTARIO MARCH 2015 c Jiashu Zhao 2015 Abstract Many traditional Information Retrieval (IR) models assume that query terms are in- dependent of each other. For those models, a document is normally represented as a bag of words/terms and their frequencies. Although traditional retrieval models can achieve reasonably good performance in many applications, the corresponding inde- pendence assumption has limitations. There are some recent studies that investigate how to model term associations/dependencies by proximity measures. However, the modeling of term associations theoretically under the probabilistic retrieval frame- work is still largely unexplored. In this thesis, I propose a new concept named Cross Term, to model term prox- imity, with the aim of boosting retrieval performance. With Cross Terms, the asso- ciation of multiple query terms can be modeled in the same way as a simple unigram term. In particular, an occurrence of a query term is assumed to have an impact on its neighboring text. The degree of the query term impact gradually weakens with increasing distance from the place of occurrence. Shape functions are used to ii characterize such impacts. Based on this assumption, I first propose a bigram CRoss TErm Retrieval (CRT ER2) model for probabilistic IR and a Language model based LM model CRT ER2 . Specifically, a bigram Cross Term occurs when the correspond- ing query terms appear close to each other, and its impact can be modeled by the intersection of the respective shape functions of the query terms. -

For Men & Women

MAKING Click on any image to go to the related material for Men & Women Keyhole Buttonholes Project-Garment Galleries the DVD Video Demos Printable Patterns All content © 2009 by David Page Coffin RTW & Custom Galleries Sources & Links Click Here to launch DPC’s blog for more info about using this DVD Click this symbol to return to this page Click this symbol to go to next page 1 BONUS CHAPTER Using an Eyelet Plate to Make Keyhole Buttonholes Eyelet Plates and Cutters Keyhole buttonholes are designed to An eyelet plate, shown in photos 1 and 2, covers prevent distortion when the closure is your feed dogs and provides a post on which under strain during wear. Without the ex- to position the precut hole for your keyhole, tra space provided by the keyhole shape, plus a slot that allows the needle to make a zig- 2 an ordinary buttonhole will be distorted zag stitch. The post is slotted, too, so the needle as the shank pulls against the end of the can swing inside it to form the inner edge of the hole. On pants, I’d suggest using key- eyelet (photo 3). The black plastic plate in photo holes for any and all buttonholes, wheth- 2 (sitting on top of my Bernina plate) is from my er on button flies, pocket flaps, or waist dear old Pfaff; notice that its slot is oriented in closures. I only recently got a sewing the opposite direction compared to the Bernina machine that could make keyhole but- plate (this apparently makes no difference), and tonholes, but I had already developed a that it has “toes” designed to snap into the feed- way of making keyhole buttonholes with dog holes on the machine, instead of screwing an eyelet plate. -

Sew Stitch Quick Manual

Sew Stitch Quick Manual If you are searched for the ebook Sew stitch quick manual in pdf form, in that case you come on to faithful site. We present the full variation of this ebook in DjVu, PDF, doc, ePub, txt forms. You may read Sew stitch quick manual online or load. Additionally to this ebook, on our site you may reading the guides and diverse art eBooks online, either downloading them as well. We like to attract your consideration that our website not store the eBook itself, but we provide reference to website wherever you may load or reading online. So that if you have necessity to load Sew stitch quick manual pdf, in that case you come on to the right website. We have Sew stitch quick manual doc, txt, PDF, ePub, DjVu formats. We will be happy if you return again and again. stitch sew quick hand held sewing machine by - SINGER-Stitch Sew Quick Hand Held Sewing Machine. This hand held sewing machine compact and portable; excellent for on-the-spot repairs and is lightweight and powerful. singer simple 23- stitch sewing machine 2263 - - Learning to sew is fun and easy when you start with the Singer Simple 23-Stitch Sewing Machine 2263. This product is designed specifically for first-timer sewers and singer sew quick manual - shopping.com - Features: 60 Built-In Stitches with stitch guide included in manual Automatic Needle Threader - sewing's biggest timesaver! Automatic Stitch Length Width ensures the amazon.com: stitch sew quick - Stitch Sew Quick. by Dyno Merchandise. 320 customer reviews | 23 answered questions Price: Manual Sewing Machine for Easy Repair of Fabric and Clothes. -

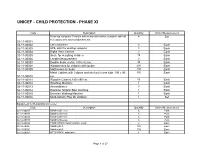

Unicef - Child Protection - Phase Xi

UNICEF - CHILD PROTECTION - PHASE XI Code Description Quantity Unit of Measurement Desk top computer Pentium with coloured monitor, complete with all 4 Set necessary accessories/attachments. 02-11-00001 02-11-00002 LaserJet printer 4 Each 02-11-00003 UPS, 220V for desktop computer 4 Each 02-11-00004 Digital Video Camera 2 Each 02-11-00005 Scale for weighing children 10 Each 02-11-00006 Length Measurement 2 Each 02-11-00007 Double beds, metal, 189 x 82 cm. 40 Each 02-11-00008 Wooden bed for children with locker 200 Each 02-11-00009 Mattresses for beds 200 Each Metal Cabinet with 2 doors and shelves in one side, 190 x 90 155 Each 02-11-00010 cm. 02-11-00011 Wooden Cabinet,180 x 90 cm. 50 Each 02-11-00012 Washing Machine 1 Each 02-11-00013 Airconditioner 3 Each 02-11-00014 Exercise Athletic Bike machine 1 Each 02-11-00015 Exercise Walking Machine 1 Each 02-11-00016 Multi-Activity Play for children 1 Set Equipment for Rehabilitation Center Code Description Quantity Unit of Measurement 02-11-00017 Sanding Sleeves 6 Pack 02-12-00018 Sanding Sleeves 6 Pack 02-11-00018 Sanding Sleeves 6 Pack 02-12-00019 Sanding Sleeves 6 Pack 02-11-00019 HABERMANN Sanding Drum. Long 2 Each 02-12-00020 Sanding Belt 100 Each 02-11-00020 Sanding Belt 100 Each 02-12-00021 OTTO BOCK, adjustable 1 Each Page 1 of 27 Code Description Quantity Unit of Measurement 02-11-00021 Round End Sanding Drum 1 Each 02-12-00022 Sanding Drum 10 Each 02-11-00022 Sanding Sleeve 25 Pack 02-12-00023 Sanding Sleeve 50 Pack 02-11-00023 Hot Air Gun (Triac) 1 Each 02-12-00024 Hole Punch Set -

Owner's Manual

TM TM Owner‘s manual This household sewing machine is designed to comply with IEC/EN 60335-2-28 and UL1594. IMPORTANT SAFETY INSTRUCTIONS When using an electrical appliance, basic safety precautions should always be followed, including the following: Read all instructions before using this household sewing machine. DANGER - To reduce the risk of electric shock: • A sewing machine should never be left unattended when plugged in. Always unplug this sewing machine from the electric outlet immediately after using and before cleaning. WARNING - To reduce the risk of burns, fire, electric shock, or injury to persons: • This sewing machine is not intended for use by persons (including children) with reduced physical, sensory or mental capabilities, or lack of experience and knowledge, unless they have been given supervision or instruction concerning use of the sewing machine by a person responsible for their safety. • Children should be supervised to ensure that they do not play with the sewing machine. • Use this sewing machine only for its intended use as described in this manual. Use only attachments recommended by the manufacturer as contained in this manual. • Never operate this sewing machine if it has a damaged cord or plug, if it is not working properly, if it has been dropped or damaged, or dropped into water. Return the sewing machine to the nearest authorized dealer or service center for examination, repair, electrical or mechanical adjustment. • Never operate the sewing machine with any air openings blocked. Keep ventilation openings of the sewing machine and foot controller free from the accumulation of lint, dust, and loose threads. -

Proximity-Based Approaches to Blog Opinion Retrieval

Proximity-based Approaches to Blog Opinion Retrieval Doctoral Dissertation submitted to the Faculty of Informatics of the Università della Svizzera Italiana in partial fulfillment of the requirements for the degree of Doctor of Philosophy presented by Shima Gerani under the supervision of Fabio Crestani September 2012 Dissertation Committee Marc Langheinrich University of Lugano, Switzerland Fernando Pedone University of Lugano, Switzerland Maarten De Rijke University of Amsterdam, The Netherlands Mohand Boughanem Paul Sabatier University, Toulouse, France Dissertation accepted on 13 September 2012 Research Advisor PhD Program Director Fabio Crestani Antonio Carzaniga pro tempore i I certify that except where due acknowledgement has been given, the work presented in this thesis is that of the author alone; the work has not been sub- mitted previously, in whole or in part, to qualify for any other academic award; and the content of the thesis is the result of work which has been carried out since the official commencement date of the approved research program. Shima Gerani Lugano, 13 September 2012 ii Abstract Recent years have seen the rapid growth of social media platforms that enable people to express their thoughts and perceptions on the web and share them with other users. Many people write their opinion about products, movies, peo- ple or events on blogs, forums or review sites. The so-called User Generated Content is a good source of users’ opinion and mining it can be very useful for a wide variety of applications that require understudying of public opinion about a concept. Blogs are one of the most popular and influential social media. -

2003 Article Index

2003 ARTICLE INDEX “40+ Great Finds” special offers for readers, Armhole fine-tuning armhole depth, armholes Felt Wee Folk, Oct:80 Aug:54-61 that bind or gape, Jan:28-31 Folkwear Book of Ethnic Clothing, Apr:78 “Artistry” necklaces from leftover yarns, Handmade Bags, Feb:80 fabrics and beads, Apr:44-46 Making Handbags, Apr:78 A Atwood, Jennie Archer (author) 40 tips for More Splash Than Cash Window “A Better Edge” facing alternatives, Jul:46-49 sewing for kids, Oct:38-41 Treatments, Aug:70 “A Little Flair” adding narrow ruffles and trim Options, Aug:70 to garments, Mar:40-43 Paper Quilting, Feb:80 “A Man and His Machine” humor story about B Queen of Inventions, Dec:82 buying first sewing machine, Mar:60-62 Babylon, Donna (author) Quiltagami, Jun:65 “ABCs of Sewing Class” preparing for making pet beds, Nov:44-46 Sandra Betzina Sews for Your Home, sewing classes, Mar:56-57 patriotic windsock, Jul:32-33 Feb:80 Accessories terms to know when making window Sew Fast, Sew Easy, Jun:65 backpack with expandable gusset, treatments, May:30-33 Sew Much Fun, Aug:70 May:60-63 three valances from basic shape, Aug:28-29 Sew Simple Squares, Oct:80 bag with bound buttonhole detail, Nov:64-66 trim options for window treatments, Sew Young, Sew Fun: Sew it Up!, Feb:80 faux suede belts, May:24-27 Jun:32-34 Sewing With Nancy’s Favorite Hints, Apr:78 faux suede handbag with animal print “Back to Basics: Buttonholes” perfecting Shibori, Dec:82 accent, Apr:40-42 buttonholes, Jul:52-55 Simple Slipcovers, Aug:70 handbag with grommet tape, Aug:21-22 “Back to Basics: -

Commercial Market Analysis Ottawa Street

Commercial Market Analysis Ottawa Street BIA April 2010 URBAN y MARKETING y COLLABORATIVE y a division of J.C. Williams Group 17 DUNDONALD STREET, 3RD FLOOR, TORONTO, ONTARIO M4Y 1K3. TEL: (416) 929-7690 FAX: (416) 921-4184 e-mail: [email protected] 350 WEST HUBBARD STREET, SUITE 240, CHICAGO, ILLINOIS 60610. TEL: (312) 673-1254 Commercial Market Analysis Ottawa Street BIA Table of Contents Executive Summary .................................................................................................................................. 1 1.0 Introduction ......................................................................................................................................... 3 1.1 Background and Project Information ...................................................................................... 3 2.0 Report Format ...................................................................................................................................... 5 3.0 Ottawa Street Trade Area Review ................................................................................................... 6 3.1 Trade Area Population Characteristics ................................................................................... 8 3.2 Household Expenditure Analysis .......................................................................................... 13 4.0 Retail Commercial Audit ................................................................................................................ 16 4.1 Market Positioning .................................................................................................................. -

Meet the Instructors Ct Spalding - Catherine Spalding Is a Photographer and Creative Photography Workshop Instructor

Meet the Instructors ct spalding - Catherine Spalding is a photographer and creative photography workshop instructor. Her photographs capture the subtle influences evoking emotion within each of her subjects. The study of details allow her to capture her subjects uniqueness, which what drives her photographic art style. Her portfolio, comprised of strong works that capture the avant-garde perspectives she shoots, showcases her originality and imagination. She exhibits and sells her work in solo exhibits, events and to the commercial and residential interior design market. ADULT CLASSES Drawing Acrylic Painting Bridge The SullivanMunce Cultural Center reserves the right to cancel classes due to lack of enrollment. Students wishing to cancel their Digital Photography registration must do so 7 days before class starts or registration fee will be forfeited. Ceramics Watercolor, Acrylic and REGISTER FOR CLASSES: Oil Painting www.sullivanmunce.org or Sewing 317.873.6862 or Pastels [email protected] REGISTRATION BEGINS August 11, 2010 Jewelry CLASSES BEGIN STARTING August 16, 2010 Yoga TEEN AND YOUTH CLASSES Mini-Masters This class schedule made possible in part by Ceramics Bookbinding 205-225 West Hawthorne Street Zionsville, IN 46077 Art Through Time 317.873.6862 www.sullivanmunce.org Parent/Child Pottery Sewing Meet the Instructors Kae Mentz - Kae loves to draw and paint. Growing up in northern Michigan Zionsville’s she loved to capture details of life in nature as well as people. Developing as an artist began with her pouring over favorite images of Michelangelo and cultural hub for Rembrandt in her grandfather's library. Kae’s education in fine art includes the study of Anatomy, Physiology and Kiniseology with an education degree.