An Artificial Intelligence Environment for Information Retrieval Research

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Go-Betweens Pdf Free Download

THE GO-BETWEENS PDF, EPUB, EBOOK David Nichols | 288 pages | 15 Feb 2006 | Puncture Publications | 9781891241161 | English | Portland, United States The Go-betweens PDF Book Like Like. Blunt Gordon Richardson The acting of Jack in this movie is outstanding - he should be at the top of the credits. Denys Maudsley. Alternate Versions. The Grant McLennan songs sound more developed and better to me, but the two singer-songwriters still complement each other very well. Ted Burgess Stephen Campbell Moore External Reviews. The band consisted of two year-olds, Robert Forster and co-founder Grant McLennan , who were joined by a drummer they knew. Official Sites. Boundary Rider Edit Storyline In 12 year old Leo Colston spends a blisteringly hot summer with the wealthy family of class-mate Marcus Maudsley at their Norfolk estate. Hugh Trimingham. In November , the duo left Australia, with a plan to shop their songs from record company to record company simply by visiting their offices and playing them. Was this review helpful to you? Although the Go-Betweens were absent throughout the '90s before re-forming in the new millennium, both of the band's songwriters embarked on respectable solo careers in the interim and, while rarely reaching the heights the Go-Betweens scaled, they still managed to uphold the group's legacy. Photo Gallery. Ted Burgess Stephen Campbell Moore Sign In Don't have an account? Denys Roger Lloyd Pack Elias Is a Nice Guy by Poster. The acting of Jack in this movie is outstanding - he should be at the top of the credits. -

Theatregraphy

Theatregraphy Play Company Year Date Theatre Co Stars Director Author Richard III National 1981 nn nn nn nn Youth Theatre Macbeth National 1982 nn nn Sally Dexter nn (as Macbeth) Youth Theatre Trumpets and Drums (based on London 1984 nn nn nn Berthold The Recruiting Officer) Academy of Brecht (as Captain Brazen) Music and Dramatic Art Claw Theatre 1985 nn Theatre Clwyd / nn nn nn (as Lusby) Clwyd / Mold Mold Trumpets and Drums (based on Theatre 1985 nn Theatre Clwyd / nn nn Berthold The Recruiting Officer) Clwyd / Mold Mold Brecht (as Captain Brazen) Romeo and Juliet (as Tybalt) Young Vic, 1986 11.02.1986 - Old Vic Vincenzo Ricotta, David William London ??? Mike Shannon, Rob Thacker Shakespeare Edwards, Paul Bradley, Geoffrey Beavers, Edward York, Gary Lucas, Sharon Bower, Suzan Sylvester, Val McLane, Gary Cady, Leslie Southwick, John Hallam The Winter's Tale (as Florizel) RSC 1986 25.05.1986 – Royal Terry Hands William Raymond Bowers, 17.01.1987 Shakespeare Shakespeare Gillian Barge, 14.10.1987 - Theatre Eileen Page, ??? Jeremy Irons, Stanley Dawson, Penny Downie, Paul Greenwood, Joe Melia, Sean O'Callaghan, Henry Goodman, Mark Lindley, Cornelia Hayes, Caroline Johnson, Susie Fairfax, David Glover, Brian Lawson, Bernard Horsfall, Simon Russell Beale, Roger Watkins, Trevor Gordon, Gary Love, Roger Moss, Patrick Robinson, Martin Hicks, Richard Parry, Sam Irons, Richard Easton Every Man in His Humour RSC 1986 15.05.1986 – Swan Theatre Haig, David Caird, John Jonson, Ben (as Wellbred) 07.01.1987 Richardson, Joely Postlethwaite, Pete Moss, -

Liner Notes for the Screenwriter William Goldman Was Addison

A Bridge Too Far, Richard Attenborough’s 1977 epic 22 million dollar film adapted from the 1974 book by Cornelius Ryan, tells the story of Operation Market Garden, the failed Allied attempt to break through German lines and take several bridges at a time when the Germans were on the retreat. Joseph E. Levine financed the entire film by himself, selling off distribution rights as filming continued – by the time the film was finished it was already four million dollars into the black, a pretty amazing feat.

But every penny of that 22 million dollar budget is on the screen, and thanks to the literate screenplay by Goldman, and the excellent performances by the large cast, Attenborough created a compelling film that holds up beautifully all these years later. For such a huge and impressive production, A Bridge Too Far received no Academy Award nominations whatsoever (it was the year of Annie Hall), but did receive several BAFTA nominations including Best Film and Best Director – it took home several

march music was often quite optimistic and upbeat. And it was later on, when things started to go wrong, that one felt more of the irony of the loss of life and various tragic elements about a battle that did not succeed as had been hoped. One of the final images left on the screen after the retreat, capture or death of the remaining Allied forces is of a small child marching along proudly with his toy rifle over his shoulder – to the sounds of the once cheerful XXX Corps march.

Films & Major TV Dramas Shot (In Part Or Entirely) in Wales

Films & Major TV Dramas shot (in part or entirely) in Wales Feature films in black text TV Drama in blue text Historical Productions (before the Wales Screen Commission began) Dates refer to when the production was released / broadcast. 1935 The Phantom Light - Ffestiniog Railway and Lleyn Peninsula, Gwynedd; Holyhead, Anglesey; South Stack Gainsborough Pictures Director: Michael Powell Cast: Binnie Hale, Gordon Harker, Donald Calthrop 1938 The Citadel - Abertillery, Blaenau Gwent; Monmouthshire Metro-Goldwyn-Mayer British Studios Director: King Vidor Cast: Robert Donat, Rosalind Russell, Ralph Richardson 1940 The Thief of Bagdad - Freshwater West, Pembrokeshire (Abu & Djinn on the beach) Directors: Ludwig Berger, Michael Powell The Proud Valley – Neath Port Talbot; Rhondda Valley, Rhondda Cynon Taff Director: Pen Tennyson Cast: Paul Robeson, Edward Chapman 1943 Nine Men - Margam Sands, Neath, Neath Port Talbot Ealing Studios Director: Harry Watt Cast: Jack Lambert, Grant Sutherland, Gordon Jackson 1953 The Red Beret – Trawsfynydd, Gwynedd Director: Terence Young Cast: Alan Ladd, Leo Genn, Susan Stephen 1956 Moby Dick - Ceibwr Bay, Fishguard, Pembrokeshire Director: John Huston Cast: Gregory Peck, Richard Basehart 1958 The Inn of the Sixth Happiness – Snowdonia National Park, Portmeirion, Beddgelert, Capel Curig, Cwm Bychan, Lake Ogwen, Llanbedr, Morfa Bychan Cast: Ingrid Bergman, Robert Donat, Curd Jürgens 1959 Tiger Bay - Newport; Cardiff; Tal-y-bont, Cardigan The Rank Organisation / Independent Artists Director: J. Lee Thompson Cast: -

Movie Suggestions for 55 and Older: #4 (141) After the Ball

Movie Suggestions for 55 and older: #4 (141) After The Ball - Comedy - Female fashion designer who has strikes against her because father sells knockoffs, gives up and tries to work her way up in the family business with legitimate designs of her own. Lots of obstacles from stepmother’s daughters. {The} Alamo - Western - John Wayne, Richard Widmark, Laurence Harvey, Richard Boone, Frankie Avalon, Patrick Wayne, Linda Cristal, Joan O’Brien, Chill Wills, Ken Curtis, Carlos Arru , Jester Hairston, Joseph Calleia. 185 men stood against 7,000 at the Alamo. It was filmed close to the site of the actual battle. Alive - Adventure - Ethan Hawke, Vincent Spano, James Newton Howard, et al. Based on a true story. Rugby players live through a plane crash in the Andes Mountains. When they realize the rescue isn’t coming, they figure ways to stay alive. Overcoming huge odds against their survival, this is about courage in the face of desolation, other disasters, and the limits of human courage and endurance. Rated “R”. {The} American Friend - Suspense - Dennis Hopper, Bruno Ganz, Lisa Kreuzer, Gerard Blain, Nicholas Ray, Samuel Fuller, Peter Lilienthal, Daniel Schmid, Jean Eustache, Sandy Whitelaw, Lou Castel. An American sociopath art dealer (forged paintings) sells in Germany. He meets an idealistic art restorer who is dying. The two work together so the German man’s family can get funds. This is considered a worldwide cult film. Amongst White Clouds - 294.3 AMO - Buddhist, Hermit Masters of China’s Zhongnan Mountains. Winner of many awards. “An unforgettable journey into the hidden tradition of China’s Buddhist hermit monks.

DANIEL PHILLIPS Make up and Hair Designer AMPAS

Screen Talent Agency 818 206 0144 DANIEL PHILLIPS Make up and Hair Designer AMPAS Following a hairstyling apprenticeship, and training as a Graphic artist in the Marine industry, award winning hair and make up designer Daniel Phillips continued his studies at the London College of Fashion studying Media, Film and Editorial Makeup & Hair, resulting in 2 years on the Fashion and beauty circuit. From there, he spent 8 years at the BBC honing his craft in the makeup department, covering a host of Period and Contemporary film and studio based projects. Now a freelance designer in TV and Film, Daniel has been awarded Emmys, Royal Television Society awards and several BAFTA nominations. Daniel’s make up & hair skills coupled with his calming, positive approach to all of his artists and projects have positioned him as a designer in high demand. Daniel is available for work worldwide. selected credits as Hair & Make up Designer: production director/production company feature films THE VOYAGE OF DR. DOOLITTLE (Robert Downey, Jr., Kasia Smutniak) Stephen Gaghan / Joe Roth / Universal VICTORIA AND ABDUL (Judi Dench, Michael Gambon) Stephen Frears / Tracey Seward / Focus Features TULIP FEVER (Alicia Vikander, Christoph Waltz) Justin Chadwick / Molly Connors / Weinstein Co. ALLIED (Brad Pitt, Marion Cotillard) Robert Zemeckis / Graham King / Paramount BASTILLE DAY (aka) THE TAKE (Idris Elba, Kelly Reilly) James Watkins / Steve Golin / Studio Canal FLORENCE FOSTER JENKINS (Meryl Streep, Hugh Grant) Stephen Frears / Michael Kuhn / Pathé BAFTA Nomination

A-Z Movies V

DECEMBER 2017 A-Z MOVIES v 10 CLOVERFIELD LANE 30 DAYS OF NIGHT COMEDY MOVIES 2006 Frankie Muniz, Anthony Anderson. In an effort to reconnect with her December 14 THRILLER MOVIES 2016 THRILLER MOVIES 2007 Comedy (M ls) A rogue CIA agent has stolen a top daughter, Maggie takes her to Tuscany Paul Giamatti, Hope Davis. Thriller/Suspense (M lv) Horror (MA 15+ ah) December 23 secret mind control device, so Cody where she had spent her youth, A grumpy nerd who becomes a cult December 7, 13, 24 December 13, 25 Justin Long, Jonah Hill. must go undercover in London to get it only to rediscover a love she had comic-strip author and talk-show John Goodman, Josh Hartnett, Melissa George. A friendly bunch of college rejects back by posing as a student at an elite left behind. celebrity, combines life and art in a Mary Elizabeth Winstead. When an Alaskan town endures 30 invent a new university that they can boarding school. touching take on the American dream. After a catastrophic car crash, a young days of darkness, vampires come attend to keep their parents happy. ALLIED woman wakes up in an underground out to feed. Only a husband-and-wife But when the first semester begins, AGUIRRE, WRATH OF GOD PREMIERE MOVIES 2016 Drama THE AMERICAN bunker with a man who claims to have sheriff team stand between death and the deception spins out of control. WORLD MOVIES 1972 West Germany (M adlnsv) THRILLER MOVIES 2010 Drama saved her from an apocalyptic attack. survival until sunlight returns. -

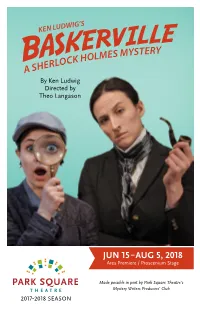

JUN 15 – AUG 5, 2018 Area Premiere / Proscenium Stage

By Ken Ludwig Directed by Theo Langason JUN 15 – AUG 5, 2018 Area Premiere / Proscenium Stage Made possible in part by Park Square Theatre’s Mystery Writers Producers’ Club 2017–2018 SEASON Get to your happy place. Escape the noise of now. Feel better and live bigger with Classical MPR. Tune in or stream at classicalmpr.org. AARP members and those 62+ enjoy complimentary coffee and cookies before Park Square matinees courtesy of: PARK SQUARE THEATRE INFORMATION CONTACT TICKET OFFICE HOURS Mailing Address: Tuesday – Saturday* 408 St. Peter Street, Suite 110 12:00-5:00pm Saint Paul, MN 55102 *Open Saturdays on performance days only Street Address: Performance Days* 20 West 7th Place, Saint Paul, MN 55102 6:30-8:30pm for 7:30 evening performances Ticket Office: 651.291.7005 1:00-3:00pm for 2:00 matinee performances Education: 651.291.9196 *Hold times may be longer due to in-person customer Donor Development: 651.767.1440 service before performances Audience Services: 651.767.8489 Proscenium Stage seats 348. Andy Boss Thrust Stage seats 203. Group Sales: 651.767.8485 The Historic Hamm Building is a smoke-free facility. Latecomers are seated at the discretion of the House Manager. Please visit parksquaretheatre.org Restrooms and water fountains are on main floor and lower level. to view the following listings: Cameras/audio/video equipment and laser pointers are prohibited. Staff, Board of Directors and These activities are made possible by the Educator Advisory Board. voters of Minnesota through a Minnesota State Arts Board Operating Support grant, thanks to a legislative appropriation from the arts and cultural heritage fund, and a grant BRAILLE from the Wells Fargo Foundation Minnesota. -

The Twelfth Night Party Report by Tim Rooke

President: Simon Russell Beale CBE Vice President: Nickolas Grace No. 474 - March 2011 Price 50p when sold

The Twelfth Night Party Report by Tim Rooke The annual celebration by the Vic-Wells Association of 12th Night was held, as usual, at the Old Vic with the kind permission of the Artistic Director, Kevin Spacey. We thank him and also all the theatre staff who were there to lend a hand. A substantial number of our members gathered, together with the cast of the current Old Vic production, A Flea in her Ear, who happily tucked into the food and drink provided by Ruth Jeayes and other Committee members. Our Vice-President, Nickolas Grace, introduced the cutting of the cake. We had expected that Tom Hollander would perform this ceremony but, due to an elbow injury sustained during a performance of the play, his place was taken by his understudy. Freddie Fox - yes, another member of this acting family - was a most acceptable substitute. Mr Fox has only recently left drama school and is to remain at the Old Vic for the next production, Cause Célèbre. Freddie told us that his father (Edward Fox) was rather envious of his son making his London stage debut in this most prestigious of theatres. His dad had made his stage debut in a provincial rep, playing, of all things, a bridesmaid! With the cake cut and distributed, Freddie and others from the cast mingled with our members and we chatted away so that our allotted time just flew past.

[Photo captions: Vic-Wells members and guests enjoy the party; Chairman, Jim Ranger with Freddie Fox]

MELANIE DICKS - 1St Assistant Director

MELANIE DICKS - 1st Assistant Director 2009 – Present Melanie set up Greenshoot in 2009 to try and deal with the vast amount of film waste that is landfilled every year. She has dedicated the last 15 months to Greenshoot and is currently working on the new British Standard 8909, a management framework for sustainable filming, as an Environmental Consultant. Greenshoot are currently working on “Sherlock Holmes 2”, “Johnny English Reborn” and “47 Ronin”. Greenshoot have diverted over 1,000 tonnes of film waste in the last 6 months that would have been landfilled. ST TRINIAN’S: THE LEGEND OF FRITTON’S GOLD Directors/Producers: Oliver Parker and Barnaby Thompson. Co-producer: Mark Hubbard. Starring Colin Firth, Rupert Everett, Tallulah Riley, Sarah Harding and Gemma Arterton. Fragile Films. FROM TIME TO TIME Director: Julian Fellowes. Producers: Paul Kingsley and Liz Trubridge. Starring Maggie Smith, Timothy Spall, Eliza Bennett and Hugh Bonneville. Fragile Films. ST TRINIAN’S Directors/Producers: Oliver Parker and Barnaby Thompson. Co-producer: Mark Hubbard. Starring Rupert Everett, Jodie Whittaker, Lena Headey, Gemma Arterton and Colin Firth. Fragile Films. RUN, FAT BOY, RUN Director: David Schwimmer. Producer: Robert Jones. Starring Simon Pegg, Thandie Newton, Hank Azaria and Dylan Moran. Material Entertainment/New Line. THE 10th MAN Director: Sam Liefer. Producer: Teddy Liefer. Starring Warren Mitchell and Andrew Sachs. Daydreamer Productions. (Short) 4929 Wilshire Blvd., Ste. 259 Los Angeles, CA 90010 ph 323.782.1854 fx 323.345.5690 [email protected] IT’S A BOY GIRL THING Director: Nick Hurran. Producers: Steve Hamilton Shaw, David Furnish and Elton John. Starring Samaire Armstrong, Kevin Zegers and Sharon Osbourne.

UPA 3 Pre-1820

A Guide to the General Administration Collection Pre-1820 1681-1853 (bulk 1740-1820) 30.0 Cubic feet UPA 3 Pre-1820 Prepared by Francis James Dallett 1978 The University Archives and Records Center 3401 Market Street, Suite 210 Philadelphia, PA 19104-3358 215.898.7024 Fax: 215.573.2036 www.archives.upenn.edu Mark Frazier Lloyd, Director General Administration Collection Pre-1820 UPA 3 Pre-1820

TABLE OF CONTENTS: PROVENANCE, 1; ARRANGEMENT NOTE, 1; SCOPE AND CONTENTS, 2; CONTROLLED ACCESS HEADINGS, 2; INVENTORY, 4: COMMUNICATIONS OF INDIVIDUALS, 4; CONSTITUTION AND CHARTERS, 266; TRUSTEES, 272; FACULTY OF ARTS, 348; STUDENTS

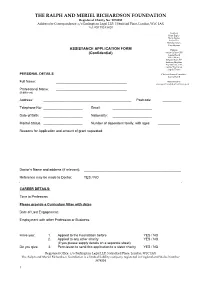

Assistance Application Form

THE RALPH AND MERIEL RICHARDSON FOUNDATION Registered Charity No: 1074030 Address for Correspondence: c/o Burlingtons Legal LLP, 5 Stratford Place, London, W1C 1AX Tel: 020 7529 5420 Trustees Brian Eagles Marje Eagles Emilia Fox Nickolas Grace Tony Hyams Patrons Simon Callow CBE Joanna David Albert Finney Edward Fox OBE Anthony Hopkins Phyllida Law OBE Sophie Thompson Angela Thorne Chair of Grants Committee Joanna David Administrator: [email protected] ASSISTANCE APPLICATION FORM (Confidential) PERSONAL DETAILS Full Name: ______________________________________ Professional Name: ______________________________________ (if different) Address: _______________________________________________ Postcode: __________ Telephone No: _____________________ Email: _____________________ Date of Birth: _____________________ Nationality: _____________________ Marital Status: _____________________ Number of dependent family, with ages: ____________ Reasons for Application and amount of grant requested: Doctor’s Name and address (if relevant): ____________________________________________________ Reference may be made to Doctor: YES / NO CAREER DETAILS: Time in Profession: ____________________________________________________ Please provide a Curriculum Vitae with dates Date of Last Engagement: ____________________________________________________ Employment with other Profession or Business: _______________________________________________ Have you: 1. Applied to the Foundation before YES / NO 2. Applied to any other charity YES / NO (if yes