DRAM Basic Circuits

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Modeling System Signal Integrity Uncertainty Considerations

WHITE PAPER, Intel® FPGA: Modeling System Signal Integrity Uncertainty Considerations

Authors: Ravindra Gali (High-Speed I/O Applications Engineering, Intel® Corporation); Zhi Wong (High-Speed I/O Applications Engineering, Intel Corporation); Navid Azizi (Software Engineering, Intel Corporation); John Oh (High-Speed I/O Applications Engineering, Intel Corporation); Arun VR (Memory I/O Applications Engineering, Intel Corporation)

Abstract: This white paper describes signal integrity (SI) mechanisms that cause system-level timing uncertainty and how these mechanisms are modeled in the Intel® Quartus® Prime software Timing Analyzer to achieve timing closure for external memory interface designs. By using the Intel Quartus Prime software to achieve timing closure for external memory interfaces, a designer does not need to allocate a separate SI timing budget to account for simultaneous switching output (SSO), simultaneous switching input (SSI), intersymbol interference (ISI), and board-level crosstalk for flip-chip device families such as Stratix® IV and Arria® II FPGAs for typical user implementation of external memory interfaces following good board design practices.

Introduction: The widening performance gap between FPGAs, microprocessors, and memory devices, along with the growth of memory-intensive applications, is driving the need for faster memory technologies. This push to higher bandwidths has been accompanied by an increase in the signal count and the signaling rates of FPGAs and memory devices. In order to attain faster bandwidths, device makers continue to reduce the supply voltage. Initially, industry-standard DIMMs operated at 5 V. However, due to improvements in DRAM storage density, the operating voltage was decreased to 3.3 V (SDR), then to 2.5 V (DDR), 1.8 V (DDR2), 1.5 V (DDR3), and 1.35 V (DDR3L) to allow the memory to run faster and consume less power.

Product Guide SAMSUNG ELECTRONICS RESERVES the RIGHT to CHANGE PRODUCTS, INFORMATION and SPECIFICATIONS WITHOUT NOTICE

May 2018. DDR4 SDRAM Memory Product Guide.

SAMSUNG ELECTRONICS RESERVES THE RIGHT TO CHANGE PRODUCTS, INFORMATION AND SPECIFICATIONS WITHOUT NOTICE. Products and specifications discussed herein are for reference purposes only. All information discussed herein is provided on an "AS IS" basis, without warranties of any kind. This document and all information discussed herein remain the sole and exclusive property of Samsung Electronics. No license of any patent, copyright, mask work, trademark or any other intellectual property right is granted by one party to the other party under this document, by implication, estoppel or otherwise. Samsung products are not intended for use in life support, critical care, medical, safety equipment, or similar applications where product failure could result in loss of life or personal or physical harm, or any military or defense application, or any governmental procurement to which special terms or provisions may apply. For updates or additional information about Samsung products, contact your nearest Samsung office. All brand names, trademarks and registered trademarks belong to their respective owners. © 2018 Samsung Electronics Co., Ltd. All rights reserved.

1. DDR4 SDRAM MEMORY ORDERING INFORMATION

Part number format (field positions 1 to 11): K 4 A X X X X X X X - X X X X

1. SAMSUNG Memory: K
2. DRAM: 4
3. DRAM Type
4. Density
5. Bit Organization
6. # of Internal Banks
7. Interface (VDD, VDDQ)
8. Revision: M: 1st Gen., A: 2nd Gen., B: 3rd Gen., C: 4th Gen., D: 5th Gen.
9. Package Type
10. Temp & Power
11. Speed

You Need to Know About DDR4

Overcoming DDR Challenges in High-Performance Designs. Mazyar Razzaz, Applications Engineering; Jeff Steinheider, Product Marketing. September 2018 | AMF-NET-T3267. Company Public. NXP, the NXP logo, and NXP secure connections for a smarter world are trademarks of NXP B.V. All other product or service names are the property of their respective owners. © 2018 NXP B.V.

Agenda
• Basic DDR SDRAM Structure
• DDR3 vs. DDR4 SDRAM Differences
• DDR Bring up Issues
• Configurations and Validation via QCVS Tool

BASIC DDR SDRAM STRUCTURE

Single Transistor Memory Cell
[Figure labels: Access Transistor (G, S, D); Row (word) line; Column (bit) line, "precharged" to Vcc/2; Cbit, Storage Capacitor, "1" => Vcc, "0" => Gnd; Ccol, Parasitic Line Capacitance; Vcc/2]

Memory Arrays
[Figure labels: bit lines B0 to B7; word lines W0 to W2; Row Address Decoder; Sense Amps & Write Drivers; Column Address Decoder]

Internal Memory Banks
• Multiple arrays organized into banks
• Multiple banks per memory device
  - DDR3: 8 banks, and 3 bank address (BA) bits
  - DDR4: 16 banks with 4 banks in each of 4 sub bank groups
  - Can have one active row in each bank at any given time
• Concurrency
  - Can be opening or closing a row in one bank while accessing another bank
[Figure labels: Bank 0 to Bank 3; Row 0, Row 1, Row 2, Row 3, Row …; Row Buffers]

Memory Access
• A requested row is ACTIVATED and made accessible through the bank's row buffers
• READ and/or WRITE are issued to the active row in the row buffers
• The row is PRECHARGED and is no longer …
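The bank behaviour in the slides above (one active row per bank, ACTIVATE before READ/WRITE, PRECHARGE to close a row, and independent banks) can be illustrated with a small model. This is a minimal sketch, not taken from the NXP material: the `Bank` class and the `commands_for` helper are hypothetical names, timing is ignored entirely, and the only figure borrowed from the slides is the DDR3 count of 8 banks.

```python
class Bank:
    """One DRAM bank with a single row buffer (hypothetical model, no timing)."""

    def __init__(self):
        self.open_row = None  # None means the bank is precharged (no active row)

    def activate(self, row):
        # Only one row may be active in a bank at any given time.
        if self.open_row is not None:
            raise RuntimeError("bank already has an active row; precharge first")
        self.open_row = row

    def access(self, row):
        # READ/WRITE is only legal against the currently active row.
        if self.open_row != row:
            raise RuntimeError("row is not active in this bank")

    def precharge(self):
        self.open_row = None


def commands_for(banks, bank_id, row):
    """Return the command sequence needed to read from (bank_id, row)."""
    bank = banks[bank_id]
    issued = []
    if bank.open_row != row:
        if bank.open_row is not None:
            bank.precharge()          # close the conflicting row
            issued.append("PRECHARGE")
        bank.activate(row)            # open the requested row
        issued.append("ACTIVATE")
    bank.access(row)
    issued.append("READ")
    return issued


banks = [Bank() for _ in range(8)]      # DDR3: 8 banks
first = commands_for(banks, 0, 5)       # bank 0 idle: open row 5, then read
hit = commands_for(banks, 0, 5)         # row 5 still open: read only
other_bank = commands_for(banks, 1, 9)  # bank 1 is independent of bank 0
conflict = commands_for(banks, 0, 7)    # different row in bank 0: close, open, read

for name, seq in [("first", first), ("hit", hit),
                  ("other_bank", other_bank), ("conflict", conflict)]:
    print(name, seq)
```

Accessing bank 1 leaves row 5 open in bank 0, which is the concurrency the slides describe; only the later request for a different row in bank 0 forces a PRECHARGE.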

Improving DRAM Performance by Parallelizing Refreshes

Improving DRAM Performance by Parallelizing Refreshes with Accesses. Kevin Kai-Wei Chang, Donghyuk Lee, Zeshan Chishti†, Alaa R. Alameldeen†, Chris Wilkerson†, Yoongu Kim, Onur Mutlu. Carnegie Mellon University, †Intel Labs.

Abstract: Modern DRAM cells are periodically refreshed to prevent data loss due to leakage. Commodity DDR (double data rate) DRAM refreshes cells at the rank level. This degrades performance significantly because it prevents an entire DRAM rank from serving memory requests while being refreshed. DRAM designed for mobile platforms, LPDDR (low power DDR) DRAM, supports an enhanced mode, called per-bank refresh, that refreshes cells at the bank level. This enables a bank to be accessed while another in the same rank is being refreshed, alleviating part of the negative performance impact of refreshes.

Each DRAM cell must be refreshed periodically every refresh interval as specified by the DRAM standards [11, 14]. The exact refresh interval time depends on the DRAM type (e.g., DDR or LPDDR) and the operating temperature. While DRAM is being refreshed, it becomes unavailable to serve memory requests. As a result, refresh latency significantly degrades system performance [24, 31, 33, 41] by delaying in-flight memory requests. This problem will become more prevalent as DRAM density increases, leading to more DRAM rows to be refreshed within the same refresh interval. DRAM chip density is expected to increase from 8Gb to 32Gb by 2020 as …
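To make the difference between rank-level and per-bank refresh in this excerpt concrete, the following sketch compares how much of the time a bank is blocked and how many banks stay accessible while a refresh is in progress. It is a rough illustration under stated assumptions, not the paper's model: the function names are hypothetical, and the refresh interval, both refresh latencies, and the bank count are placeholder values chosen for illustration rather than figures from the excerpt or from a specific datasheet.

```python
def blocked_fraction(t_rfc_ns: float, t_refi_ns: float) -> float:
    """Fraction of time a bank cannot serve requests because it is refreshing.

    Assumes each bank is refreshed once per refresh interval and is
    unavailable for the full refresh latency.
    """
    return t_rfc_ns / t_refi_ns


def accessible_banks(num_banks: int, per_bank: bool) -> int:
    """Banks in a rank that can still be accessed while a refresh is running."""
    # Rank-level refresh blocks every bank; per-bank refresh blocks only one.
    return num_banks - 1 if per_bank else 0


# Illustrative placeholder values (assumptions, not taken from the excerpt).
T_REFI_NS = 7800.0          # refresh interval
T_RFC_ALL_BANK_NS = 350.0   # latency of one rank-level refresh
T_RFC_PER_BANK_NS = 90.0    # latency of one per-bank refresh
NUM_BANKS = 8

all_bank_frac = blocked_fraction(T_RFC_ALL_BANK_NS, T_REFI_NS)
per_bank_frac = blocked_fraction(T_RFC_PER_BANK_NS, T_REFI_NS)

print(f"rank-level: each bank blocked {all_bank_frac:.2%} of the time, "
      f"{accessible_banks(NUM_BANKS, per_bank=False)} banks usable during refresh")
print(f"per-bank:   each bank blocked {per_bank_frac:.2%} of the time, "
      f"{accessible_banks(NUM_BANKS, per_bank=True)} banks usable during refresh")
```

The sketch only captures availability; it says nothing about how a memory controller schedules requests around refreshes.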

Datasheet DDR4 SDRAM Revision History

Rev. 1.4, Apr. 2018. M378A5244CB0, M378A1K43CB2, M378A2K43CB1. 288pin Unbuffered DIMM based on 8Gb C-die, 78FBGA with Lead-Free & Halogen-Free (RoHS compliant). Datasheet.

Unbuffered DIMM datasheet, DDR4 SDRAM: Revision History

| Revision No. | History | Draft Date | Remark | Editor |
|---|---|---|---|---|
| 1.0 | First SPEC. Release | 27th Jun. 2016 | - | J.Y.Lee |
| 1.1 | Deletion of Function Block Diagram [M378A1K43CB2] on page 11; Change of Physical Dimensions [M378A1K43CB1] on page 41 | 29th Jun. 2016 | - | J.Y.Lee |
| 1.11 | Correction of Typo | 7th Mar. 2017 | - | J.Y.Lee |
| 1.2 | Change of Physical Dimensions [M378A1K43CB1] on page 41 | 23rd Mar. … | | |

Access Order and Effective Bandwidth for Streams on a Direct Rambus Memory

Access Order and Effective Bandwidth for Streams on a Direct Rambus Memory. Sung I. Hong, Sally A. McKee†, Maximo H. Salinas, Robert H. Klenke, James H. Aylor, Wm. A. Wulf. Dept. of Electrical and Computer Engineering, University of Virginia, Charlottesville, VA 22903; †Dept. of Computer Science, University of Utah, Salt Lake City, Utah 84112.

Abstract: Processor speeds are increasing rapidly, and memory speeds are not keeping up. Streaming computations (such as multi-media or scientific applications) are among those whose performance is most limited by the memory bottleneck. Rambus hopes to bridge the processor/memory performance gap with a recently introduced DRAM that can deliver up to 1.6Gbytes/sec. We analyze the performance of these interesting new memory devices on the inner loops of streaming computations, both for traditional memory controllers that treat all DRAM transactions as random cacheline accesses, and for controllers augmented with streaming hardware. For our benchmarks, we find that accessing unit-stride streams in …

… current DRAM page forces a new page to be accessed. The overhead time required to do this makes servicing such a request significantly slower than one that hits the current page. The order of requests affects the performance of all such components. Access order also affects bus utilization and how well the available parallelism can be exploited in memories with multiple banks. These three observations (the inefficiency of traditional, dynamic caching for streaming computations; the high advertised bandwidth of Direct Rambus DRAMs; and the order-sensitive performance of modern DRAMs) motivated our investigation of a hardware streaming mechanism that dynamically reorders memory accesses in a Rambus-based memory system.

Memory Technology and Trends for High Performance Computing Contract #: MDA904-02-C-0441

Memory Technology and Trends for High Performance Computing. Contract #: MDA904-02-C-0441. Contract Institution: Georgia Institute of Technology. Project Director: D. Scott Wills. Project Report, 12 September 2002 to 11 September 2004.

This project explored the impact of developing memory technologies on future supercomputers. This activity included both a literature study (see attached whitepaper), plus a more practical exploration of potential memory interfacing techniques using the sponsor recommended HyperTransport interface. The report indicates trends that will affect interconnection network design in future supercomputers. Related publications during the contract period include:

1. P. G. Sassone and D. S. Wills, On the Scaling of the Atlas Chip-Scale Multiprocessor, to appear in IEEE Transaction on Computers.
2. P. G. Sassone and D. S. Wills, Dynamic Strands: Collapsing Speculative Dependence Chains for Reducing Pipeline Communication, to appear in IEEE/ACM International Symposium on Microarchitecture, Portland, OR, December 2004.
3. B. A. Small, A. Shacham, K. Bergman, K. Athikulwongse, C. Hawkins, and D. S. Wills, Emulation of Realistic Network Traffic Patterns on an Eight-Node Data Vortex Interconnection Network Subsystem, to appear in OSA Journal of Optical Networking.
4. P. G. Sassone and D. S. Wills, On the Extraction and Analysis of Prevalent Dataflow Patterns, to appear in The IEEE 7th Annual Workshop on Workload Characterization (WWC-7), 8 pages, Austin, TX, October 2004.
5. H. Kim, D. S. Wills, and L. M. Wills, Empirical Analysis of Operand Usage and Transport in Multimedia Applications, in Proceedings of the 4th IEEE International Workshop on System-on-Chip for Real-Time Applications (IWSOC'04), pages 168-171, Banff, Alberta, Canada, July 2004.

DDR400/333/266, Dual DDR, RDRAM 16 Bit and 32 Bit, SDRAM

Ace's Hardware. Granite Bay: Memory Technology Shootout. By Johan De Gelas, December 2002.

Dual-Channel DDR SDRAM Arrives for the Pentium 4

DDR400/333/266, Dual DDR, RDRAM 16 bit and 32 bit, SDRAM... almost every memory technology on the market is available for the Pentium 4 platform. One of our previous technical articles discussed the advantages and disadvantages of the different architectures of Rambus and SDRAM based memory technology such as DDR and DDR-II. In this article, we will investigate how the different memory technologies and their supporting chipsets compare on the test bench. The following motherboards were tested:

• The ASUS P4T533 features the i850E chipset and 32 bit RDRAM
• The ASUS P4T533-C comes with the same chipset but uses two channels of 16 bit RIMMs
• The MSI 648 Max comes with the SIS 648 chipset, which unofficially supports DDR400
• The MSI i845PE comes with Intel's newest i845 chipset, which officially supports DDR333
• The Tyan Trinity 7205 and MSI GNB Max feature the Dual DDR266 Granite Bay chipset

We are well aware that there have already been many tests of Pentium 4 chipsets, Granite Bay included. So why bother to publish another on Ace's Hardware? The focus of this article is on the memory technology supported by these chipsets. This article will offer you an insight into how the different memory technologies compare in a wide variety of applications. We'll investigate in depth what the advantages and disadvantages of each memory technology are and try to find out the reasons behind them.

Chang-Hong Wu Distinguished Engineer, Juniper Networks the INTERNET EXPLOSION

ASICS: THE HEART OF MODERN ROUTERS. Chang-Hong Wu, Distinguished Engineer, Juniper Networks. Copyright © 2010 Juniper Networks, Inc.

THE INTERNET EXPLOSION
[Chart, 1988 to 2008: # Web Sites, Internet Capacity, # Connected Devices, Total Digitized Information, # Google Searches/Month]
Exponential growth, no matter how you measure it! The clearest indication of value delivered to end-users.

DRIVING FORCE BEHIND EXPONENTIAL GROWTH
[Diagram labels: Information System; Computing (Digital Computing, Stored Program, Pipelining, Microprocessor, Multi-core); Networking (Digital Transmission, Circuit Switching, Packet Switching, TCP/IP, HPN); Storage (Digital Storage, Core Memory, Disk, DRAM, Flash)]

COMPUTER PERFORMANCE: 1988-2008
500,000 X over 20 years. System CAGR: 1.9x/year. Microprocessor CAGR: 1.3x/year.
[Chart: Megahertz / MFlops on a power-of-two scale versus year, '88 to '08; series include Super Computers]

ROUTER PERFORMANCE 1988-2008
1,000,000 X over 20 years (2x/year). Post-ASIC era: 2.2x/year. Pre-ASIC era: 1.6x/year. Interface CAGR: 1.7x/year.
[Chart: Megabits per second on a power-of-two scale versus year, '88 to '08; labelled products: M40, M160, T640, T1600, TX]

DDR4 8Gb C Die Unbuffered SODIMM Rev1.5 Apr.18.Book

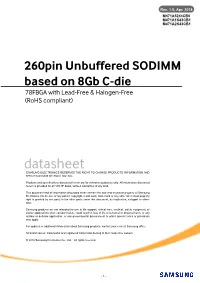

Rev. 1.5, Apr. 2018. M471A5244CB0, M471A1K43CB1, M471A2K43CB1. 260pin Unbuffered SODIMM based on 8Gb C-die, 78FBGA with Lead-Free & Halogen-Free (RoHS compliant). Datasheet.

Unbuffered SODIMM datasheet, DDR4 SDRAM: Revision History

| Revision No. | History | Draft Date | Remark | Editor |
|---|---|---|---|---|
| 1.0 | First SPEC. Release | 27th Jun. 2016 | - | J.Y.Lee |
| 1.1 | Change of Function Block Diagram [M471A1K43CB1] on page 10~11; Change of Physical Dimensions on page 42~43 | 29th Jun. 2016 | - | J.Y.Lee |
| 1.2 | Change of Physical Dimensions on page 42~43 | 24th Feb. … | | |

Architecture of an AHB Compliant SDRAM Memory Controller

International Journal of Innovations in Engineering and Technology (IJIET). Architecture of an AHB Compliant SDRAM Memory Controller. S. Lakshma Reddy, M.Tech student, Department of Electronics and Communication Engineering, CVSR College of Engineering, Hyderabad, Andhra Pradesh, India. A. Krishna Kumari, Professor, Department of Electronics and Communication Engineering, CVSR College of Engineering, Hyderabad, Andhra Pradesh, India.

Abstract: Microprocessor performance has improved rapidly in recent years. In contrast, memory latencies and bandwidths have improved little. As a result, memory access time has become a bottleneck that limits system performance. Because the speed of fetching data from memories cannot match the speed of processors, there is a need for a fast memory controller. The responsibility of the controller is to match the speeds of the processor on one side and the memory on the other so that communication can take place seamlessly. Here we have built a memory controller that is specifically targeted at SDRAM. Certain features were included in the design to increase the overall efficiency of the controller, such as searching the internal memory of the controller for the most recently used data instead of going to the memory to fetch it. The memory controller is designed to be compatible with the Advanced High-performance Bus (AHB), which is a new generation of AMBA bus. The AHB is for high-performance, high clock frequency system modules. The AHB acts as the high-performance system backbone bus.

Index Terms: SDRAM, Memory controller, AMBA, FPGA, Xilinx.

I. INTRODUCTION

In order to enhance overall performance, SDRAMs offer features including multiple internal banks, burst mode access, and pipelining of operation executions.

Performance Evaluation and Feasibility Study of Near-Data Processing on DRAM Modules (DIMM-NDP) for Scientific Applications Matthias Gries, Pau Cabré, Julio Gago

Performance Evaluation and Feasibility Study of Near-data Processing on DRAM Modules (DIMM-NDP) for Scientific Applications. Matthias Gries, Pau Cabré, Julio Gago.

To cite this version: Matthias Gries, Pau Cabré, Julio Gago. Performance Evaluation and Feasibility Study of Near-data Processing on DRAM Modules (DIMM-NDP) for Scientific Applications. [Technical Report] Huawei Technologies Duesseldorf GmbH, Munich Research Center (MRC). 2019. hal-02100477

HAL Id: hal-02100477. https://hal.archives-ouvertes.fr/hal-02100477. Submitted on 15 Apr 2019.

Technical Report MRC-2019-04-15-R1, Huawei Technologies, Munich Research Center, Germany, April 2019.

Abstract: As the performance of DRAM devices falls more and more behind computing capabilities, the limitations of the memory and power walls are imminent. We propose a practical Near-Data Processing (NDP) architecture DIMM-NDP for mitigating the effects of the memory wall in the nearer-term targeting server applications for scientific computing. DIMM-NDP exploits existing but unused DRAM bandwidth on memory modules (DIMMs) and takes advantage of a subset of the forthcoming JEDEC NVDIMM-P protocol in order to integrate application-specific, programmable functionality near memory.