Lean and Mean to Agility and Beyond: the History—And Legacy—Of Agile Development

Recommended publications

MAKING DATA MODELS READABLE David C

MAKING DATA MODELS READABLE. David C. Hay, Essential Strategies, Inc. “Confusion and clutter are failures of [drawing] design, not attributes of information. And so the point is to find design strategies that reveal detail and complexity – rather than to fault the data for an excess of complication. Or, worse, to fault viewers for a lack of understanding.” – Edward R. Tufte. Entity/relationship models (or simply “data models”) are powerful tools for analyzing and representing the structure of an organization. Properly used, they can reveal subtle relationships between elements of a business. They can also form the basis for robust and reliable data base design. Data models have gotten a bad reputation in recent years, however, as many people have found them to be more trouble to produce and less beneficial than promised. Discouraged, people have gone on to other approaches to developing systems – often abandoning modeling altogether, in favor of simply starting with system design. Requirements analysis remains important, however, if systems are ultimately to do something useful for the company. And modeling – especially data modeling – is an essential component of requirements analysis. It is important, therefore, to try to understand why data models have been getting such a “bad rap”. Your author believes, along with Tufte, that the fault lies in the way most people design the models, not in the underlying complexity of what is being represented. An entity/relationship model has two primary objectives: First it represents the analyst’s public understanding of an enterprise, so that the ultimate consumer of a prospective computer system can be sure that the analyst got it right.

Value Mapping – Critical Business Architecture Viewpoint

Value Mapping – Critical Business Architecture Viewpoint. AEA Webinar Series “Enterprise Business Intelligence”. Armstrong Process Group, Inc., www.aprocessgroup.com. Download this and other resources @ http://www.aprocessgroup.com/myapg. Copyright © 1998-2017, Armstrong Process Group, Inc., All rights reserved.
About APG
• APG’s mission is to “Align information technology and systems engineering capabilities with business strategy using proven, practical processes delivering world-class results.”
• Industry thought leader in enterprise architecture, business modeling, process improvement, systems and software engineering, requirements management, and agile methods
• Member and contributor to UML®, SysML®, SPEM, UPDM™ at the Object Management Group® (OMG®); TOGAF®, ArchiMate®, and IT4IT™ at The Open Group; BIZBOK® Guide and UML Profile at the Business Architecture Guild
• Business partners with Sparx, HPE, and IBM
• Guild Accredited Training Partner™ (GATP™) and IIBA® Endorsed Education Provider (EEP™)
Business Architecture Framework
• Business Architecture Knowledgebase
• Blueprints provide views into knowledgebase, based on stakeholder concerns
• Scenarios contextualize expected outcomes of business architecture work
• Also inform initial selections of key stakeholders and likely concerns
BIZBOK Guide

CROSS SECTIONAL STUDY OF AGILE SOFTWARE DEVELOPMENT METHODS AND PROJECT PERFORMANCE, Tracy Lambert, Nova Southeastern University

Nova Southeastern University, NSUWorks. H. Wayne Huizenga College of Business and Entrepreneurship, HCBE Theses and Dissertations, 2011. CROSS SECTIONAL STUDY OF AGILE SOFTWARE DEVELOPMENT METHODS AND PROJECT PERFORMANCE. Tracy Lambert, Nova Southeastern University. This document is a product of extensive research conducted at the Nova Southeastern University H. Wayne Huizenga College of Business and Entrepreneurship. NSUWorks Citation: Tracy Lambert. 2011. CROSS SECTIONAL STUDY OF AGILE SOFTWARE DEVELOPMENT METHODS AND PROJECT PERFORMANCE. Doctoral dissertation. Nova Southeastern University. Retrieved from NSUWorks, H. Wayne Huizenga School of Business and Entrepreneurship. (56) https://nsuworks.nova.edu/hsbe_etd/56. This Dissertation is brought to you by the H. Wayne Huizenga College of Business and Entrepreneurship at NSUWorks. It has been accepted for inclusion in HCBE Theses and Dissertations by an authorized administrator of NSUWorks. A DISSERTATION submitted to H. Wayne Huizenga School of Business and Entrepreneurship, Nova Southeastern University, in partial fulfillment of the requirements for the degree of DOCTOR OF BUSINESS ADMINISTRATION, 2011. ABSTRACT: Agile software development methods, characterized by delivering customer value via incremental and iterative time-boxed development processes, have moved into the mainstream of the Information Technology (IT) industry.

Chapter 1: Introduction

Just Enough Structured Analysis Chapter 1: Introduction “The beginnings and endings of all human undertakings are untidy, the building of a house, the writing of a novel, the demolition of a bridge, and, eminently, the finish of a voyage.” — John Galsworthy Over the River, 1933 www.yourdon.com ©2006 Ed Yourdon - rev. 051406 In this chapter, you will learn: 1. Why systems analysis is interesting; 2. Why systems analysis is more difficult than programming; and 3. Why it is important to be familiar with systems analysis. Chances are that you groaned when you first picked up this book, seeing how heavy and thick it was. The prospect of reading such a long, technical book is enough to make anyone gloomy; fortunately, just as long journeys take place one day at a time, and ultimately one step at a time, so long books get read one chapter at a time, and ultimately one sentence at a time. 1.1 Why is systems analysis interesting? Long books are often dull; fortunately, the subject matter of this book — systems analysis — is interesting. In fact, systems analysis is more interesting than anything I know, with the possible exception of sex and some rare vintages of Australian wine. Without a doubt, it is more interesting than computer programming (not that programming is dull) because it involves studying the interactions of people, and disparate groups of people, and computers and organizations. As Tom DeMarco said in his delightful book, Structured Analysis and Systems Specification (DeMarco, 1978), [systems] analysis is frustrating, full of complex interpersonal relationships, indefinite, and difficult. -

Edward Yourdon

EDWARD YOURDON EDWARD YOURDON is an internationally-recognized computer consultant, as well as the author of more than two dozen books, including Byte Wars, Managing High-Intensity Internet Projects, Death March, Rise and Resurrection of the American Programmer, and Decline and Fall of the American Programmer. His latest book, Outsource: competing in the global productivity race, discusses both current and future trends in offshore outsourcing, and provides practical strategies for individuals, small businesses, and the nation to cope with this unstoppable tidal wave. According to the December 1999 issue of Crosstalk: The Journal of Defense Software Engineering, Ed Yourdon is one of the ten most influential men and women in the software field. In June 1997, he was inducted into the Computer Hall of Fame, along with such notables as Charles Babbage, Seymour Cray, James Martin, Grace Hopper, Gerald Weinberg, and Bill Gates. Ed is widely known as the lead developer of the structured analysis/design methods of the 1970s, as well as a co-developer of the Yourdon/Whitehead method of object-oriented analysis/design and the Coad/Yourdon OO methodology in the late 1980s and 1990s. He was awarded a Certificate of Merit by the Second International Workshop on Computer-Aided Software Engineering in 1988, for his contributions to the promotion of Structured Methods for the improvement of Information Systems Development, leading to the CASE field. He was selected as an Honored Member of Who’s Who in the Computer Industry in 1989. And he was given the Productivity Award in 1992 by Computer Language magazine, for his book Decline and Fall of the American Programmer. -

A Brief History of DevOps by Alek Sharma Introduction: History in Progress

A Brief History of DevOps by Alek Sharma. Introduction: History in Progress. Software engineers spend most of their waking hours wading through the mud of their predecessors. Only a few are lucky enough to see green fields before conflict transforms the terrain; the rest are shipped to the front (end). There, they languish in trenches as shells of outages explode around them. Progress is usually glacial, though ground can be covered through heroic sprints. But veterans do emerge, scarred and battle-hardened. They revel in relating their most daring exploits and bug fixes to new recruits. And just as individuals have learned individual lessons about writing code, our industry has learned collective lessons about software development at scale. It’s not always easy to see these larger trends when you’re on the ground, buried in … George Santayana wrote that “those who cannot remember the past are condemned to repeat it.” He was definitely not thinking about software when he wrote this, but he’s dead now, which means he can be quoted out of context. Oh, the joys of public domain! This ebook will be about the history of software development methodologies, especially where they intersect with traditional best practices. Think of it as The Silmarillion of Silicon Valley, except shorter and with more pictures. Before plunging into this rushing river of time, please note that the presented chronology is both theoretically complete and practically in progress. In other words, even though a term or process might have been coined, it always takes more time for Best Practices to trickle down to Real Products.

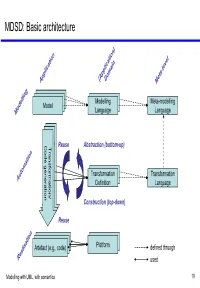

MDSD: Basic Architecture

MDSD: Basic architecture (Modelling with UML, with semantics)
[Diagram: a model is expressed in a modelling language, which is itself defined through meta-modelling; transformations, written in a transformation definition language, and code generation turn models into other models or artefacts (e.g., code) on a platform. Abstraction works bottom-up, construction top-down; both support reuse.]
MDSD: A bird’s view
[Diagram: a transformer applies transformation knowledge to a model to produce J2EE, .Net, or other implementations.]
How is MDSD realised?
• Developer develops model(s), expressed using a DSL, based on certain meta-model(s).
• Using code generation templates, the model is transformed into executable code.
• Alternative: Interpretation
• Optionally, the generated code is merged with manually written code.
• One or more model-to-model transformation steps may precede code generation (optional, can be repeated).
(Meta-)Model hierarchy
• M3: meta-meta-model (MOF)
• M2: meta-model (Relational meta-model, UML meta-model, …), conformsTo M3
• M1: model, conformsTo M2; a model is a representation of (repOf) a system
(Meta-)Model hierarchy: Example
[Diagram: the MOF meta-meta-model, with Class and Association linked by source and destination; the Relational meta-model conformsTo MOF …]
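The realisation steps listed in the MDSD excerpt above (a model that conforms to a meta-model, an optional model-to-model step, template-based code generation, and a merge with manually written code) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the dictionary-based model format, the ALLOWED_TYPES meta-model, and every function name are hypothetical and do not come from the slides; the template mechanism is the standard library's string.Template.

```python
from string import Template

# Hypothetical meta-model: a model is a list of entities, each with a
# name and a mapping from attribute names to one of these type names.
ALLOWED_TYPES = {"int", "str", "float"}


def conforms_to_metamodel(model):
    """Return True if every entity uses only constructs the meta-model allows."""
    return all(
        isinstance(entity.get("name"), str)
        and all(t in ALLOWED_TYPES for t in entity.get("attributes", {}).values())
        for entity in model
    )


def add_surrogate_keys(model):
    """Optional model-to-model step: give every entity an integer 'id'."""
    return [
        {"name": e["name"], "attributes": {"id": "int", **e["attributes"]}}
        for e in model
    ]


# Code generation template (assumed shape): one plain class per entity.
CLASS_TEMPLATE = Template(
    "class $name:\n"
    "    def __init__(self, $params):\n"
    "$assignments\n"
)


def generate_code(model):
    """Model-to-text step: fill the template once per entity."""
    parts = []
    for entity in model:
        names = list(entity["attributes"])
        parts.append(
            CLASS_TEMPLATE.substitute(
                name=entity["name"],
                params=", ".join(names),
                assignments="\n".join(f"        self.{n} = {n}" for n in names),
            )
        )
    return "\n".join(parts)


model = [{"name": "Customer", "attributes": {"name": "str", "credit": "float"}}]
assert conforms_to_metamodel(model)
generated = generate_code(add_surrogate_keys(model))

namespace = {}
exec(generated, namespace)  # the generated text is executable Python


# Manually written code, merged with the generated class by subclassing.
class Customer(namespace["Customer"]):
    def can_buy(self, price):
        return price <= self.credit
```

Subclassing is just one way to keep hand-written logic apart from generated code, so that regenerating the base class does not overwrite it. The interpretation alternative mentioned in the excerpt would skip generate_code and evaluate the model directly at run time.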

Continuing Madness: Methods Behind System Architecting Challenged

Continuing Madness: Methods Behind System Architecting Challenged. Robert (Bob) Scheurer, Associate Technical Fellow | Systems Engineering, Defense, Space and Security. Robert P. Scheurer, 10/18/2019. Copyright © 2019 Boeing. All rights reserved.
Topics
1. System Architectures/Models: An Evolution
2. Modeling Frameworks and Methods: Today’s Reality
3. Toward Better Architectures, More Useful Methods, and Best Outcomes: The Challenges
4. Summary and Conclusions
Architecting/Modeling: Recipe for Success
• 1993 Paper: “Method Behind the Madness in System Modeling”
• Premises for Successful Modeling: Disciplined Methodology; Automation via Computer-Based Tools; Proper Training in Method and Tool
• Conclusion: Modeling Method is Needed in Order to Avoid: Right Solution to the Wrong Problem; Wrong Solution to the Right Problem; No Solution to any Practical Problem
Architecting/Modeling: Then to Now
Lessons Learned from Modern History: Genesis of Formal SE: 1943 Army Field Manual; Modeling Language 1960’s: Process Relationships; Frameworks Evolution (Late 1960’s – Structured Analysis & Design Technique; DoD-5000 Defense Acquisition Life Cycle Evolution; Today. 1943: Basic Structure; 2019: Tailored Processes
Today’s Condition with Architectures
• Higher Fidelity and Functional Diversity: Experience in Multiple Technical Fields Necessary
• Extended Objectives: Digital Twin, Economy, Enduring Relevance
• Constraining Objectives: Modular Open Systems Approach; Cybersecurity; Affordability; Hardware / Software Re-Use

Enterprise Modelling

Objectives for Session
• Introduce Enterprise Modelling
• Different approaches
  – Information Engineering
  – OOEM
  – Enterprise Ontology
• Issues
  – getting started
  – managing change
  – modelling
• Research tasks
Enterprise Modelling
• Enterprise = …
• Model = …
• How would you do it?
  – DFD
  – ERD
  – UML
  – Z
  – SD
• Why would you do it?
• ………………………. that way?
RSRP
Enterprise Modelling
• It’s what you want it to be!
• But
  – should cover whole organisation, not just a single system
• Includes
  – business processes
  – business data (about and for)
• More importantly
  – organisation goals
  – system purpose
Approaches
• Information Engineering
  – James Martin, 198x
  – Data oriented
  – Top down
• Object Oriented
  – Jacobson, Rumbaugh, Booch, Coad et al, 199x
  – Data + function (technical) + process
  – Bottom up
• Ontology Based
  – Enterprise project (IBM, AIAI, Unilever et al)
  – language / change oriented
• Others … Soft Systems (rich pictures), IDEF
What Is a Goal or an Objective?
• Karakostas & Loucopolis
  Goal = set of values for defined parameters of a “system”
  Objective = abstract goal, not “when”, “how” or “how much”
• Can be different from project objectives
  Goal = “high level principle that is used to guide project … (e.g.)
Information Engineering - EM Outline
• Seven stages - first one is EM
  – Information Systems Planning → BAA → BSD → TD → Con → Trans → Prod
• ISP split into 5 areas
  – Determine direction: mission → objective → strategy → goal → plan → CSF
  – Map organisation: hierarchy, subject areas, function diagram
  – Activities and data

Appendices MISQ Archivist for Business Process Change: a Study of Methodologies, Techniques and Tools

Appendices MISQ Archivist for Business Process Change: A Study of Methodologies, Techniques and Tools. By William J. Kettinger, James T.C. Teng, Subashish Guha. A total of eight appendices (Appendix 1 through Appendix 8) and an associated reference for these appendices have been placed here. In addition, there is currently a search engine located at http://theweb.badm.sc.edu/bpr/ to assist users in identifying BPR techniques and tools.
Appendices 1-8 Contained in MISQ Archivist
Appendix 1: Detailed Description of the Research Methodology
Appendix 2: Sources of Research Data
Appendix 3: Mapping and Reliability of Stages to BPR Methodologies
Appendix 4: BPR Techniques Description and Mapping
Appendix 5: BPR Tools Description & Mapping
Appendix 6: Hierarchical Mapping of BPR Techniques and Tools to the Stage-Activity Framework
Appendix 7: IT/Process Analysis
Appendix 8: Categories of BPR Techniques
Appendix References
Appendix 1: Detailed Description of the Research Methodology
Referring to Table 1, the researchers conducted the following research steps: Steps 1&2: Literature Review and Market Assessment. The authors conducted a literature search on the current state of BPR MTTs using secondary research sources which include scholarly and trade literature, CD-ROM product data bases, on-line market intelligence services such as Computer Select, ABI/Inform, and reports from market research firms such as IDC, Seybold's and Gartner Group, product announcements, public bulletin boards, as well as recently published books and periodicals (e.g., Spurr, Layzell, Jennison & Richards, 1994; Hansen, 1994). This effort produced a list of initial sources of research data as shown in Appendix 2, which classifies these sources into two groups: 1) BPR Market Researchers and Consultants, and 2) BPR Tools and Technology Vendors.

By Gerard Coleman School of Computer Applications, Dublin City University, Glasnevin, Dublin 9. M. Sc., September 1997

A Quality Software Process for Rapid Application Development. By Gerard Coleman, School of Computer Applications, Dublin City University, Glasnevin, Dublin 9. M.Sc., September 1997. A Dissertation Presented in Fulfilment of the Requirement for the M.Sc. Degree. Supervisor: Mr. Renaat Verbruggen. Declaration: I hereby certify that this material, which I now submit for assessment on the programme of study leading to the award of M.Sc. is entirely my own work and has not been taken from the work of others save and to the extent that such work has been cited and acknowledged within the text of my work. Acknowledgements: I would like to thank Renaat for his assistance, insight and guidance during the research. I would also like to thank my wife, Fiona, for her encouragement and understanding throughout the compilation of this work. Finally, I would like to thank Patrick O’Beime, Shay Curtin and Gerard McCloskey who gave so generously of their time to review this work. Abstract: Having a defined and documented standardised software process, together with the appropriate techniques and tools to measure its effectiveness, offers software producers the potential to improve the quality of their output. Many firms have yet to define their own software process. Yet without a defined process it is impossible to measure success or focus on how development capability can be enhanced. To date, a number of software process improvement frameworks have been developed and implemented.

Automation with the Four Rs of CASE

Dr. Carma McClure presents Rebuilding for Software Automation with the Four Rs of CASE. San Francisco, September 17-19, 1991; Atlanta, October 21-23, 1991. An Extended Intelligence, Inc. Conference and Tools Exhibition. (Our LIPS logo stands for the AI term Logical Inferences Per Second.)
TOOLS: I-CASE, REPOSITORIES, RE-ENGINEERING. METHODS: RAD, REUSABILITY, OBJECT-ORIENTED. MANAGEMENT: MEASURES, METRICS.
SOFTWARE AUTOMATION. Software Automation demands rebuilding the entire software organization. CASE began the software automation movement with the rebuilding of software tools. First, we introduced CASE tools to provide automated assistance for software systems analysis, design and implementation. Now, we must introduce Integrated, Repository-based CASE Tool Environments to capitalize on software productivity and quality improvements, as well as Re-engineering Tools to extend the benefits of CASE to billions of lines of existing systems code. But there is much more to software technology rebuilding than simply new tools. We also must rebuild our methods and management practices … advantage of CASE. RAD, Enterprise Modeling, Object-Oriented Development and Reusability are methods that leverage our huge investments in CASE, greatly speed up application development and maintenance and eliminate the enormous waste of time, software resources, and money. Because software automation changes the software process, we must rebuild our management techniques. One extremely important change is the use of quantitative, automated measurements, and metrics that direct the introduction and control the risk of software automation. Those organizations that are most successful with CASE know how to measure and