Deep NLP-Based Representations for a Generalizable Anime Recommender System

Total Pages: 16

File Type: PDF, Size: 1020 Kb


Recommended publications

-

Protoculture Addicts

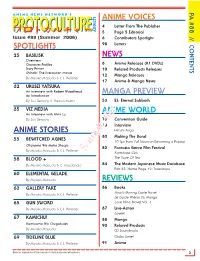

PA #88 // CONTENTS PA A N I M E N E W S N E T W O R K ' S ANIME VOICES 4 Letter From The Publisher PROTOCULTURE¯:paKu]-PROTOCULTURE ADDICTS 5 Page 5 Editorial Issue #88 (Summer 2006) 6 Contributors Spotlight SPOTLIGHTS 98 Letters 25 BASILISK NEWS Overview Character Profiles 8 Anime Releases (R1 DVDs) Story Primer 10 Related Products Releases Shinobi: The live-action movie 12 Manga Releases By Miyako Matsuda & C.J. Pelletier 17 Anime & Manga News 32 URUSEI YATSURA An interview with Robert Woodhead MANGA PREVIEW An Introduction By Zac Bertschy & Therron Martin 53 ES: Eternal Sabbath 35 VIZ MEDIA ANIME WORLD An interview with Alvin Lu By Zac Bertschy 73 Convention Guide 78 Interview ANIME STORIES Hitoshi Ariga 80 Making The Band 55 BEWITCHED AGNES 10 Tips from Full Moon on Becoming a Popstar Okusama Wa Maho Shoujo 82 Fantasia Genre Film Festival By Miyako Matsuda & C.J. Pelletier Sample fileKamikaze Girls 58 BLOOD + The Taste Of Tea By Miyako Matsuda & C. Macdonald 84 The Modern Japanese Music Database Part 35: Home Page 19: Triceratops 60 ELEMENTAL GELADE By Miyako Matsuda REVIEWS 63 GALLERY FAKE 86 Books Howl’s Moving Castle Novel By Miyako Matsuda & C.J. Pelletier Le Guide Phénix Du Manga 65 GUN SWORD Love Hina, Novel Vol. 1 By Miyako Matsuda & C.J. Pelletier 87 Live-Action Lorelei 67 KAMICHU! 88 Manga Kamisama Wa Chugakusei 90 Related Products By Miyako Matsuda CD Soundtracks 69 TIDELINE BLUE Otaku Unite! By Miyako Matsuda & C.J. Pelletier 91 Anime More on: www.protoculture-mag.com & www.animenewsnetwork.com 3 ○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○ LETTER FROM THE PUBLISHER A N I M E N E W S N E T W O R K ' S PROTOCULTUREPROTOCULTURE¯:paKu]- ADDICTS Over seven years of writing and editing anime reviews, I’ve put a lot of thought into what a Issue #88 (Summer 2006) review should be and should do, as well as what is shouldn’t be and shouldn’t do. -

Bcsfazine #528 | Felicity Walker

The Newsletter of the British Columbia Science Fiction Association #528 $3.00/Issue May 2017 In This Issue: This and Next Month in BCSFA..........................................0 About BCSFA.......................................................................0 Letters of Comment............................................................1 Calendar...............................................................................6 News-Like Matter..............................................................11 Turncoat (Taral Wayne)....................................................17 Art Credits..........................................................................18 BCSFAzine © May 2017, Volume 45, #5, Issue #528 is the monthly club newsletter published by the British Columbia Science Fiction Association, a social organiza- tion. ISSN 1490-6406. Please send comments, suggestions, and/or submissions to Felicity Walker (the editor), at felicity4711@ gmail .com or Apartment 601, Manhattan Tower, 6611 Coo- ney Road, Richmond, BC, Canada, V6Y 4C5 (new address). BCSFAzine is distributed monthly at White Dwarf Books, 3715 West 10th Aven- ue, Vancouver, BC, V6R 2G5; telephone 604-228-8223; e-mail whitedwarf@ deadwrite.com. Single copies C$3.00/US$2.00 each. Cheques should be made pay- able to “West Coast Science Fiction Association (WCSFA).” This and Next Month in BCSFA Friday 19 May: Submission deadline for June BCSFAzine (ideally). Sunday 14 May at 7 PM: May BCSFA meeting—at Ray Seredin’s, 707 Hamilton Street (recreation room), New Westminster. -

Protoculture Addicts #68

Sample file CONTENTS 3 ○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○○ PROTOCULTURE ✾ PRESENTATION ........................................................................................................... 4 STAFF NEWS ANIME & MANGA NEWS: Japan / North America ............................................................... 5, 10 Claude J. Pelletier [CJP] — Publisher / Manager ANIME & MANGA RELEASES ................................................................................................. 6 Martin Ouellette [MO] — Editor-in-Chief PRODUCTS RELEASES ............................................................................................................ 8 Miyako Matsuda [MM] — Editor / Translator NEW RELEASES ..................................................................................................................... 11 Contributing Editors Aaron K. Dawe, Asaka Dawe, Keith Dawe REVIEWS Kevin Lillard, James S. Taylor MODELS: ....................................................................................................................... 33, 39 MANGA: ............................................................................................................................. 40 Layout FESTIVAL: Fantasia 2001 (Anime, Part 2) The Safe House Metropolis ...................................................................................................................... 42 Cover Millenium Actress ........................................................................................................... -

Super Robot Wars Jump You've Been to Plenty of Universes Before, But

Super Robot Wars Jump You've been to plenty of universes before, but how about one comprised of multiple universes? The Super Robot Wars universe is one where all robot anime can cross over and interact. Ever wanted to see how a Mobile Suit or a Knightmare Frame stack up against the super robot threats of the Dinosaur Empire? Ever wanted to see what would happen if all of these heroes met and fought together? This is the jump for you. First of course we need to get you some funds and generate the world for you, so you get +1000CP Roll 1d8 per table to determine what series is in your world, rolling an 8 gives you free choice from the table rolled on. Group 1 SD Gundam Sangokuden Brave Battle Warriors SPT Layzner Shin Getter Robo Armageddon SDF Macross Dancougar Nova Tetsujin 28 (80s) Daitarn 3 Group 2 New Getter Robo Shin Getter Robo vs Neo Getter Robo King of Braves GaoGaiGar (TV, FINAL optional) Evangelion Code Geass Patlabor Armored Trooper VOTOMS Group 3 Big O Xabungle Shin Mazinger Z Turn A Gundam Macross Plus Machine Robo: Revenge of Chronos Gundam X Group 4 Zambot 3 Full Metal Panic (includes Fummofu, 2nd Raid, manga) Trider G7 Tobikage Gaiking: Legend of Daiku-Maryu Fafner in the Azure Dragonar Group 5 Godmars Metal Overman King Gainer Getter Robo and Getter Robo G (Toei 70s) Dai Guard G Gundam Macross 7 Linebarrels of Iron (Anime or manga, your call) Group 6 Gundam SEED/Destiny (Plus Astray and Stargazer) Mazinkaiser (OVA+Movie+series) Gunbuster Martian Successor Nadesico Brain Powerd Gear Fighter Dendoh Mazinkaiser SKL Group 7 Tekkaman Blade Gaiking (Toei 70s) Heavy Metal L Gaim Great Mazinger and Mazinger Z (Toei 70s) Gundam 00 Kotetsu Jeeg (70s) and Kotetsushin Jeeg Macross Frontier Group 8 Gundam (All of the Universal Century) Gundam Wing Aura Battler Dunbine Gurren Lagann Grendaizer Dancougar Virtual On MARZ -50CP to add an additional series from the tables -100CP to add a series not on the tables Drop in anywhere on Earth or space for free, but 
remember to keep in mind the series in your universe. -

Patch's London Adventure 102 Dalmations

007: Racing 007: The World Is Not Enough 007: Tomorrow Never Dies 101 Dalmations 2: Patch's London Adventure 102 Dalmations: Puppies To The Rescue 1Xtreme 2002 FIFA World Cup 2Xtreme ok 360 3D Bouncing Ball Puzzle 3D Lemmings 3Xtreme ok 3D Baseball ok 3D Fighting School 3D Kakutou Tsukuru 40 Winks ok 4th Super Robot Wars Scramble 4X4 World Trophy 70's Robot Anime Geppy-X A A1 Games: Bowling A1 Games: Snowboarding A1 Games: Tennis A Bug's Life Abalaburn Ace Combat Ace Combat 2 ok Ace Combat 3: Electrosphere ok aces of the air ok Acid Aconcagua Action Bass Action Man: Operation Extreme ok Activision Classics Actua Golf Actua Golf 2 Actua Golf 3 Actua Ice Hockey Actua Soccer Actua Soccer 2 Actua Soccer 3 Adidas Power Soccer ok Adidas Power Soccer '98 ok Advan Racing Advanced Variable Geo Advanced Variable Geo 2 Adventure Of Little Ralph Adventure Of Monkey God Adventures Of Lomax In Lemming Land, The ok Adventure of Phix ok AFL '99 Afraid Gear Agent Armstrong Agile Warrior: F-111X ok Air Combat ok Air Grave Air Hockey ok Air Land Battle Air Race Championship Aironauts AIV Evolution Global Aizouban Houshinengi Akuji The Heartless ok Aladdin In Nasiria's Revenge Alexi Lalas International Soccer ok Alex Ferguson's Player Manager 2001 Alex Ferguson's Player Manager 2002 Alien Alien Resurrection ok Alien Trilogy ok All Japan Grand Touring Car Championship All Japan Pro Wrestling: King's Soul All Japan Women's Pro Wrestling All-Star Baseball '97 ok All-Star Racing ok All-Star Racing 2 ok All-Star Slammin' D-Ball ok All Star Tennis '99 Allied General -

Hajime No Ippo the Fighting Ps3

Hajime No Ippo The Fighting Ps3. 1 / 5 Hajime No Ippo The Fighting Ps3. 2 / 5 3 / 5 Boxing manga/anime franchise Hajime no Ippo returns for the PS3. 1. hajime no ippo the fighting 2. hajime no ippo fighting spirit 3. hajime no ippo the fighting game Hajime No Ippo: The Fighting (PS3) Sound Remaster. Progress on this Project can be found on this Board.. Check out the first official screenshots from the PS3-exclusive Hajime no Ippo: The Fighting.. Hajime No Ippo: The Fighting! Edição, Digital. Plataforma, PS3. Formato, Digital. Outras características.. Sviluppato da Bandai Namco Games, Hajime no Ippo: The Fighting! è un gioco sportivo uscito l'11 dicembre 2014 per PS3.. Gamer Pr0n: Hajime No Ippo The Fighting PS3. What is good guys. We're taking it to the land of the rising sun, but really isn't that where all of ... hajime no ippo the fighting hajime no ippo the fighting, hajime no ippo the fighting ps3, hajime no ippo fighting spirit, hajime no ippo the fighting iso, hajime no ippo the fighting game, hajime no ippo the fighting rising, hajime no ippo fighting souls game, hajime no ippo the fighting apk, hajime no ippo the fighting anime, hajime no ippo the fighting download, hajime no ippo fighting souls apk, hajime no ippo fighting, hajime no ippo fighting game, hajime no ippo fighting styles, hajime no ippo fighting spirit episode 1, hajime no ippo fighting spirit stream, hajime no ippo fighting spirit download, hajime no ippo fighting record Juego Psx Hajime No Ippo - The Fighting! (jap) · Playera Anime Pelea Crossover Hajime No Ippo Ashita No Joe · $259. -

Copy of Anime Licensing Information

Title Owner Rating Length ANN .hack//G.U. Trilogy Bandai 13UP Movie 7.58655 .hack//Legend of the Twilight Bandai 13UP 12 ep. 6.43177 .hack//ROOTS Bandai 13UP 26 ep. 6.60439 .hack//SIGN Bandai 13UP 26 ep. 6.9994 0091 Funimation TVMA 10 Tokyo Warriors MediaBlasters 13UP 6 ep. 5.03647 2009 Lost Memories ADV R 2009 Lost Memories/Yesterday ADV R 3 x 3 Eyes Geneon 16UP 801 TTS Airbats ADV 15UP A Tree of Palme ADV TV14 Movie 6.72217 Abarashi Family ADV MA AD Police (TV) ADV 15UP AD Police Files Animeigo 17UP Adventures of the MiniGoddess Geneon 13UP 48 ep/7min each 6.48196 Afro Samurai Funimation TVMA Afro Samurai: Resurrection Funimation TVMA Agent Aika Central Park Media 16UP Ah! My Buddha MediaBlasters 13UP 13 ep. 6.28279 Ah! My Goddess Geneon 13UP 5 ep. 7.52072 Ah! My Goddess MediaBlasters 13UP 26 ep. 7.58773 Ah! My Goddess 2: Flights of Fancy Funimation TVPG 24 ep. 7.76708 Ai Yori Aoshi Geneon 13UP 24 ep. 7.25091 Ai Yori Aoshi ~Enishi~ Geneon 13UP 13 ep. 7.14424 Aika R16 Virgin Mission Bandai 16UP Air Funimation 14UP Movie 7.4069 Air Funimation TV14 13 ep. 7.99849 Air Gear Funimation TVMA Akira Geneon R Alien Nine Central Park Media 13UP 4 ep. 6.85277 All Purpose Cultural Cat Girl Nuku Nuku Dash! ADV 15UP All Purpose Cultural Cat Girl Nuku Nuku TV ADV 12UP 14 ep. 6.23837 Amon Saga Manga Video NA Angel Links Bandai 13UP 13 ep. 5.91024 Angel Sanctuary Central Park Media 16UP Angel Tales Bandai 13UP 14 ep. -

Opinión | Reportajes | Noticias | Avances the Evil Within Promete Traer De Regreso El Terror Que Dejó En El Camino Resident Evil

OPINIÓN EN MODO HARDCORE TodoOCTUBRE 2014 JuegosNÚMERO 7 OPINIÓN | REPORTAJES | NOTICIAS | AVANCES THE EVIL WITHIN PROMETE TRAER DE REGRESO EL TERROR QUE DEJÓ EN EL CAMINO RESIDENT EVIL EL DIABLO EN EL CUERPO BAYONETTA INSOMNIAC WWE 2K15 EL REGRESO DE EL ESTUDIO DE 2K SPORTS PONE LA BRUJA SEXY SPYRO Y RATCHET LA LUCHA LIBRE EN NINTENDO PASA A XBOX ONE A OTRO NIVEL UNA REALIZACIÓN DE PLAYADICTOS TodoJuegos TodoJuegos ¿Necesitamos Home? Equipo Editorial ace unos días, Sony anunció que en DIRECTOR marzo próximo cerrará para siempre Rodrigo Salas PlayStation Home. Seamos sinceros... JEFE DE PRODUCCIÓN H Cristian Garretón ¿cuántos realmente lo ocuparon y cuántos entramos sólo en algunos eventos especia- EDITOR GENERAL les o cuando había regalos de por medio? Jorge Maltrain Macho Sin embargo, dos hechos me hacen pensar EQUIPO DE REPORTEROS que lo de Sony fue una gran idea, pobre- Felipe Jorquera mente ejecutada, que podría haberse con- #LDEA20 vertido en un bombazo en PlayStation 4, de Andrea Sánchez haber tenido la voluntad para enfrentarlo. #EIDRELLE Primero, las palabras de un desarrollador de Jorge Rodríguez juegos e ítemes para PS Home, quien asegu- #KEKOELFREAK raba haber ganado millones vendiendo ro- Roberto Pacheco pas virtuales o créditos para sus juegos. #NEGGUSCL Luego, el fenómeno de La Torre en Destiny. Raúl Maltrain Una vez, sin ir más lejos, me senté en un rin- #RAULAZO cón mientras recorría mi menú de armas y, al David Ignacio P. regresar al juego, me encontré rodeado por #AQUOSHARP una decena de jugadores sentados, sin ha- -

Hajime No Ippo Subbed

Hajime no ippo subbed Hajime no Ippo Episode 1 English Subbed at gogoanime. Category: TV Series. Anime info: Hajime no Ippo · Read Manga Hajime no Ippo. Please, reload page if. hajime-no-ippo-episode 1 sub . Takamura give Ippo K.O. video -> Ippo became boxer-> Ippo. Hajime no Ippo Episode 3 (english subbed) . Memories.. Although I watched this, when Ippo started. Watch online and download Hajime No Ippo Episode 1 anime in high quality. Various formats from p to p HD (or even p). HTML5. Watch Hajime no Ippo and download Hajime no Ippo in high quality. Various formats from p to p HD (or even p). HTML5 available for mobile. Watch Hajime No Ippo: The Fighting! full episodes online English Sub. Other titles: Hajime no Ippo: Rising Synopsis: Japanese Featherweight Champion. Watch Hajime No Ippo Episode 1 KissAnime English Subbed in HD. Stream Hajime No Ippo Episode. So for Hajime No Ippo is the dub any good or should I just go sub? P.S Not trying to turn this into a massive Dub vs Sub debate, so save that. Hajime no Ippo only at dubhappy. Yea the dub for Hajime no ippo is good, haven't watched the sub to Anime info: Hajime no Ippo (Dub). Watch online and. Watch online and download anime Hajime No Ippo Episode 1 in high quality. Various formats from p to p HD (or even p). HTML5 available for. alternate titles: fighting spirit, hajime - no - ippo ;categories: action, comedy, drama, sport. Watch Lastman dubbed AND subbed on VRV today!! Anime Hajime no ippo: Champion Road and 1 episode Hajimme no ippo: Mashiba vs. -

86428094.Pdf

Editorial STAFF El futuro de aquí en más: Idea La baja produccion de series de anime este año com- Carlos Romero parandola con años anteriores, había hecho creer a Redacción varios especialistas que la industria del anime estaba Carlos Romero, siendo afectada por la crisis economica mundial y au- Hernán Panessi, Pamela guraban un futuro incierto. Timpani, Juan Linietsky, Ramsés En honor a la verdad, tal afirmación parece exagerada, tomando en cuenta la gran calidad de series estrena- Quintana, Mario Rodríguez Valles das este año. Corrección y Edición Umineko naku koro ni, K-on!, Tokyo Magnitude 8.0 y un Pamela Timpani largo etc. se convirtieron en éxitos casi instantáneos. Ayudante de Sumado a la buena recepción que tuvo el regreso de Corrección Dragon Ball, Full Metal Alchemisth y Haruhi Suzumi- ya y el estreno de esperadas adaptaciones animadas Romina Espinoza, como Fairy Tail y el arco final de Inu Yasha, nos hacen Moris Humberto Mejia Arriaga pensar que el futuro parece bastante alentador. Diseño de revista, ¿Y en Occidente cómo estamos? web y mascota Se sumaron nuevos canales que pasan anime, pero Andrea Calabró que no llegan a todo el continente, como el caso de Citybive, Zaz! e I-sat, que sólo se ven en determinados Composición países. Andrea Calabró, Animax por fin decidió cambiar de rumbo y emitir mas Cristian Prósperi contenido norteamericano, alejándose de su promesa Webmaster 100% anime. Pamela Timpani Parece que nosotros somos los que estamos en crisis! jejeje... Disclaimer Carlos Romero All the brands/product names and artwork are trademarks and registered trademarks of their owners. -

Gesamtkatalog Japan DVD Zum Download

DVD Japan (Kurzübersicht) Nr. 33 Juli 2004 Best.Nr. Titel Termin Preis** Animation 50009604 .Hack // Sign (DD & PCM) 25.08.2002 109,90 € 50011539 .Hack//Legend Of Twilight Bracelet Vol. 1 25.04.2003 94,90 € 50010369 .Hack//Sign Vol. 5 (PCM) 25.11.2002 109,90 € 50010725 .Hack//Sign Vol. 6 (PCM) 21.12.2002 109,90 € 50011314 .Hack//Sign Vol. 9 (PCM) 28.03.2003 109,90 € 50002669 1001 Nights 25.08.2000 60,90 € 50001721 1001 Nights (1998) 18.12.1999 154,90 € 50003015 101 Dalmatians 18.10.2000 78,90 € 50010612 101 Dalmatians 2: Patch's London Adventure 06.12.2002 64,90 € 50008214 11 Nin Iru! 22.03.2002 79,90 € 50010894 12 Kokuki 8-10 Vol. 4 (DD) 16.01.2003 79,90 € 50007134 24 Hours TV Special Animation 1978-1981 22.11.2001 281,90 € 50009578 3chome No Tama Onegai! Momochan Wo Sagashite! (DD) 21.08.2002 49,90 € 50011428 3x3 Eyes DVD Box (DD) 21.05.2003 263,90 € 50008995 7 Nin Me No Nana 4 Jikanme 03.07.2002 105,90 € 50008431 7 Nin No Nana 2jikanme 01.05.2002 89,90 € 50008430 7 Nin No Nana 4jikanme 03.07.2002 127,90 € 50008190 7 Nin No Nana Question 1 03.04.2002 89,90 € 50001393 A.D. Police 25.07.1999 94,90 € 50001719 Aardman Collection (1989-96) 24.12.1999 64,90 € 50015065 Abaranger Vs Hurricanger 21.03.2004 75,90 € 50009732 Abenobasi Maho Syotengai Vol. 4 (DD) 02.10.2002 89,90 € 50007135 Ace Wo Nerae - Theater Version (DD) 25.11.2001 94,90 € 50005931 Ace Wo Nerae Vol. -

100Quotesanimedisney.Pdf

100 Inspirational Quotes From Anime, Disney, Dreamworks And More! Quotes Collected by: Joseph Sturch Table of Contents Part 1) Anime Part 2) Disney Part 3) Dreamworks Part 4) Other Part 5) About Joseph Sturch PART 1 ANIME Don't come whining to me with this destiny stuff, and stop trying to tell me you can change what you are. You can do it too. After all, unlike me you're not a failure. -Naruto, Naruto It takes courage to accept you're wrong. Because it means you don't trust yourself. You can work through this, you can move on. -Mahiru Shirota, Servamp In the end, you choose what you choose. If afterwards your regrets are at minimum good for you. -Levi, Attack on Titan If you keep giving out more than you’re getting back in return, evidentially there isn’t going to be anything left. -Mr.Ton, Aggretsuko There is no such thing as a painless lesson. They just don't exist. Sacrifices are necessary. You can't gain anything without losing something first. Although, if you can endure that pain, and walk away from it, you'll find you now have a heart strong enough to overcome any obstacle. -Edward, Full Metal Alchemist The difference between the novice and the master is that the master has failed more times than the novice has tried. -Koro Sensei, Assassination Classroom We each need to find our own inspiration. Sometimes it's not easy. -Kiki's Delivery Service Giving up is what kills people. -Alucard, Hellsing If you win, you live.