Deep Two-Way Matrix Reordering for Relational Data Analysis

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

“Saitama GENSAI Project”: a Project for Disaster Mitigation

“Saitama GENSAI Project”: A Project for Disaster Mitigation Saitama Prefecture Background disaster mitigation based community development. In order to ensure the safety and security of residents (Published charts related to disaster prevention) from disasters including major earthquakes that have • Map of evacuation centres been occurring frequently in recent years, torrential • Outlook charts related to possible damage rain caused by typhoons, tornados, wind gusts, and caused by a northern Tokyo Bay earthquake heavy snow etc., Saitama Prefecture deliberated dif- (seismic intensity map, distribution chart of ferent ways to share disaster information among the completely destroyed buildings, distribution residents to try and promote disaster prevention and chart of burnt-down buildings, and distribution disaster mitigation based community development. chart of potential liquefaction) The deliberations concluded that if the residents can • Inland water hazard maps (for Ageo City and not only receive disaster information but also transmit Kumagaya City only) and share information on any disasters happening nearby, more appropriate action and disaster preven- tion and disaster mitigation based community devel- opment could be expected. Therefore, the Prefectural Government launched the “Saitama GENSAI Project” together with the private weather information service, WEATHERNEWS Inc. (WNI), in April 2014. Purpose of Project Sharing disaster information among residents Saitama GENSAI Project screenshot (Seismic in- If residents can not only receive -

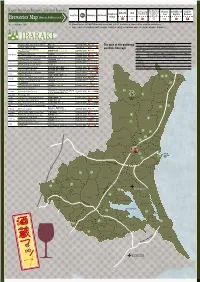

Ibaraki (PDF/6429KB)

Tax Free Shop Kanto-Shinetsu Regional Taxation Bureau Tax Free Shop Brewery available for English website shop consumption tax telephone consumption tax tour English Brochure Location No. Breweries Mainbrand & liquor tax (Wineries,Distilleries,et al.) number As of December, 2017 ※ Please refer to SAKE Brewery when you visit it, because a reservation may be necessary. ※ A product of type besides the main brand is being sometimes also produced at each brewery. ❶ Domaine MITO Corp. Izumi-cho Winery MITO Wine 029-210-2076 Shochu (C) Shochu (Continuous Distillation) Mito The type of the mainbrand ❷ MEIRI SHURUI CO.,LTD MANYUKI Shochu (S) 029-247-6111 alcoholic beverage Shochu (S) Shochu (Simple system Distillation) Isakashuzouten Limited Shochu (S) Sweet sake ❸ Partnership KAMENOTOSHI 0294-82-2006 Beer Beer, Sparkling liquor New genre(Beer-like liqueur) Hitachiota ❹ Okabe Goumeigaisha YOKAPPE Shochu (S) 0294-74-2171 ❺ Gouretsutominagashuzouten Limited Partnership Kanasagou Shochu (S) 0294-76-2007 Wine Wine, Fruit wine, Sweet fruit wine ❻ Hiyamashuzou Corporation SHOKOUSHI Wine 0294-78-0611 Whisky ❼ Asakawashuzou Corporation KURABIRAKI Shochu (S) 0295-52-0151 Liqueurs Hitachiomiya ❽ Nemotoshuzou Corporation Shochu (S) Doburoku ❾ Kiuchi Kounosu Brewery HITACHINO NEST BEER Beer 029-298-0105 Naka Other brewed liquors ❿ Kiuchi Nukata Brewery HITACHINO NEST BEER Beer 029-212-5111 ⓫ Kakuchohonten Corporation KAKUSEN Shochu (S) 0295-72-0076 Daigo ⓬ Daigo Brewery YAMIZO MORINO BEER Beer 0295-72-8888 ⓭ Kowa Inc. Hitachi Sake Brewery KOUSAI Shochu (S) -

The Chiba Bank, Ltd. Integrated Report 2020

The Chiba Bank, Ltd. Integrated Report 2020 The Chiba Bank, Ltd. 1-2, Chiba-minato, Chuo-ku, Chiba-shi, Chiba 260-8720, Japan Integrated Report Phone: 81-43-245-1111 https://www.chibabank.co.jp/ 005_9326487912009.indd 1-3 2020/09/10 11:20:10 Introduction Our Philosophy Corporate Data The Chiba Bank, Ltd. As of March 31, 2020 Aiming to enhance “customer Principal Shareholders experience” as a partner to customers The ten largest shareholders of the Bank and their respective shareholdings as of March 31, 2020 were as follows: Number of Shares Percentage of Total (in thousands)*1 Shares Issued*2 (%) and regional communities The Master Trust Bank of Japan, Ltd. (Trust Account) 56,139 7.55 Japan Trustee Services Bank, Ltd. (Trust Account) 35,615 4.79 Nippon Life Insurance Company 26,870 3.61 The Dai-ichi Life Insurance Company, Limited 26,230 3.53 Sompo Japan Nipponkoa Insurance Inc.*3 18,537 2.49 Meiji Yasuda Life Insurance Company 18,291 2.46 SUMITOMO LIFE INSURANCE COMPANY 17,842 2.40 MUFG Bank, Ltd. 17,707 2.38 STATE STREET BANK AND TRUST COMPANY 505223 14,576 1.96 Japan Trustee Services Bank, Ltd. (Trust Account 5) 13,406 1.80 Management Policy Excluded from the figures above are 72,709 thousand treasury shares in the name of the Chiba Bank, Ltd. (Excludes one thousand shares which, although registered in the name of the Chiba Bank, Ltd. on the shareholder list, are not actually owned by the Bank.) As a regional financial institution based in Chiba Prefecture, Chiba Bank Group recognizes that *1 Rounded down to the nearest thousand *2 Rounded down to two decimal places its mission is to “contribute to the sustainable development of regional economies through the *3 The trade name of Sompo Japan Nipponkoa Insurance Inc. -

Saitama Prefecture 埼玉県

February 2017 Saitama Prefecture 埼玉県 一 1 Overview of Saitama Pref.埼 2 Fiscal Position 玉 3 Bond Issue Policies 県 勢 Mt.Buko Kawagoe Bell Tower Saitama Shintoshin Saitama Super Arena Saitama Stadium 2002 Sakitama Ancient Burial Mounds “Toki-no-kane” “Sakitama Kohun-gun” 1 Overview of Saitama Population, Industry, Transportation and Rising Potential Population of 7.3 million equal to that of Switzerland・・・Relatively lower average age and larger productive age population ratio than other prefectures A variety of industries generate nominal GDP worth JPY21trn, equal to that of Czech and New Zealand Hokkaido Convenient transportation network and lower disaster risks Prefectural Gross Product (Nominal) Population 7.27mn (#5) Akita Source: 2015 National Census JPY20.7trn(#5) Source: FY2013 Annual Report on Prefectural Accounts, Cabinet Office 1 Tokyo Metro. 13,520,000 1 Tokyo Metro. JPY93.1trn Yamagata 2 Kanagawa Pref. 9,130,000 2 Osaka Pref. JPY37.3trn 3 Osaka Pref. 8,840,000 3 Aichi Pref. JPY35.4trn 4 Aichi Pref. 7,480,000 4 Kanagawa Pref. JPY30.2trn 5 Saitama Pref. 7,270,000 5 Saitama Pref. JPY20.7trn Population Growth 1.0%(#3) Hokuriku oban Metropolitan Employer compensation Inter-City per capita Kyoto Saitama Expressway Nagoya Tokyo Gaikan Tokyo Expressway JPY4,620,000(#7) Osaka Narita Source: FY2013 Annual Report on Prefectural Accounts, Cabinet Haneda Office Expressway Japan Shinkansen Japan’s Key Transportation Hub Lower Risk of Natural Disaster ・Connected to major eastern Japan cities with 6 Shinkansen lines Estimated damage on buildings -

Guide on Stops Green Red Pink Black

Shin-Keisei Line Guide on Keisei Line, Hokusō Line and Shibayama Line 新京成線 京成線・北総線・芝山鉄道線 ご案内 Guide on stops Green Red Pink Black Matsudo SL01 Kamihongō SL02 Matsudo-Shinden SL03 Minoridai SL04 Yabashira SL05 Tokiwadaira SL06 Gokō SL07 Motoyama SL08 Kunugiyama SL09 Kita-Hatsutomi SL10 松戸 上本郷 松戸新田みのり台八柱 常盤平 五香 元山 くぬぎ山北初富 みどり Limited Express あか Limited Express ピンク Rapid くろ Local 停車駅ご案内 Shibamata KS50 Kanamachi KS51Keisei- 柴又 京成金町 快速特急 特急 快速 普通 Orange Light Blue Blue オレンジ Access Express そらいろ Commuter Express あお Express アクセス特急 通勤特急 急行 JR Line JR Line(Shin-Yahashira) Narita SKY ACCESS Line 成田スカイアクセス線 JR Line Tōbu Line Keisei-Ueno KS01 京成上野Limited Express Nippori KS02 日暮里 Aoto KS09 青砥 Keisei-Takasago KS10 京成高砂 Imba nihon-idai Yukawa Narita KS43 Access Express Access Express Higashi-Matsudo HS05 東松戸 HS08 Access Express HS12 HS14 印旛日本医大成田湯川 KS41 KS42 SL11 Newtown Chūō Chiba 千葉ニ ュ ータウン中央 2 Terminal Narita Airport 空港第2ビル 1 Terminal Narita Airport 成田空港 Limited Express Limited Express Shin-Kamagaya 新鎌ヶ谷Limited Express Commuter Express Express Express Keisei-Narita KS40 京成成田 Rapid (成田第1ターミナル) Shin-Shibamata HS01 Yagiri HS02 Kita-Kokubun HS03 Akiyama HS04 Matsuhidai HS06 Ōmachi HS07 Nishi-Shiroi HS09 Shiroi HS10 Komuro HS11 Inzai-Makinohara HS13 Narita SKY ACCESS Line 新柴又 矢切 北国分 秋山 松飛台 大町 西白井 白井 小室 印西牧の原 (成田第2 ・ 第3ターミナル) 成田スカイアクセス線 Shim-Mikawashima KS03新三河島Machiya KS04町屋Senjuōhashi KS05千住大橋Keisei-Sekiya KS06京成関屋Horikirishōbuen KS07堀切菖蒲園Ohanajaya KS08お花茶屋 日暮里 Kamagaya-Daibutsu 닛포리 Hokusō Line Tōyō Rapid Line JR Line 北総線 Shin-Tsudanuma Futawamukōdai 青砥 -

Lions Club Name District Recognition

LIONS CLUB NAME DISTRICT RECOGNITION AGEO District 330 C Model Club AICHI EMERALD District 334 A Model Club AICHI GRACE District 334 A Model Club AICHI HIMAWARI District 334 A Model Club AICHI SAKURA District 334 A Model Club AIZU SHIOKAWA YUGAWA District 332 D Model Club AIZU WAKAMATSU KAKUJO District 332 D Model Club AIZUBANGE District 332 D Model Club ANDONG District 356 E Model Club ANDONG SONGJUK District 356 E Model Club ANJYO District 334 A Model Club ANSAN JOONGANG District 354 B Model Club ANSUNG NUNGKOOL District 354 B Model Club ANYANG INDUK District 354 B Model Club AOMORI CHUO District 332 A Model Club AOMORI HAKKO District 332 A Model Club AOMORI JOMON District 332 A Model Club AOMORI MAHOROBA District 332 A Model Club AOMORI NEBUTA District 332 A Model Club ARAO District 337 E Model Club ASAHIKAWA District 331 B Model Club ASAHIKAWA HIGASHI District 331 B Model Club ASAHIKAWA NANAKAMADO District 331 B Model Club ASAHIKAWA TAISETSU District 331 B Model Club ASAKA District 330 C Model Club ASAKURA District 337 A Model Club ASHIKAGA District 333 B Model Club ASHIKAGA MINAMI District 333 B Model Club ASHIKAGA NISHI District 333 B Model Club ASHIRO District 332 B Model Club ASHIYA District 335 A Model Club ASHIYA HARMONY District 335 A Model Club ASO District 337 E Model Club ATSUGI MULBERRY District 330 B Model Club AYASE District 330 B Model Club BAIK SONG District 354 H Model Club BANGKOK PRAMAHANAKORN 2018 District 310 C Model Club BAYAN BARU District 308 B2 Model Club BIZEN District 336 B Model Club BUCHEON BOKSAGOL District -

Zircon U-Pb Dating of a Tuff Layer from the Miocene Onnagawa Formation in Northern Japan

Geochemical Journal, Vol. 55, pp. 185 to 191, 2021 doi:10.2343/geochemj.2.0622 NOTE Zircon U-Pb dating of a tuff layer from the Miocene Onnagawa Formation in Northern Japan JUMPEI YOSHIOKA,1,2* JUNICHIRO KURODA,1 NAOTO TAKAHATA,1 YUJI SANO,1,3 KENJI M. MATSUZAKI,1 HIDETOSHI HARA,4 GERALD AUER,5 SHUN CHIYONOBU6 and RYUJI TADA2,7,8 1Atmosphere and Ocean Research Institute, The University of Tokyo, 5-1-5 Kashiwanoha, Kashiwa, Chiba 277-8564, Japan 2Department of Earth and Planetary Science, The University of Tokyo, 7-3-1 Hongo, Bunkyo-ku, Tokyo 113-0033, Japan 3Institute of Surface-Earth System Science, Tianjin University, Tianjin 300072, China 4Geological Survey of Japan, AIST, 1-1-1 Higashi, Tsukuba, Ibaraki 305-8567, Japan 5Institute of Earth Sciences, University of Graz, NAWI Graz Geocenter, Heinrichstrasse 26, 8010 Graz, Austria 6Faculty of International Resource Sciences, Akita University, 1-1 Tegatagakuenmachi, Akita, Akita 010-8502, Japan 7The Research Center of Earth System Science, Yunnan University, Chenggong District, Kunming, Yunnan 650500, China 8Institute for Geo-Cosmology, Chiba Institute of Technology, 2-17-1 Tsudanuma, Narashino, Chiba 275-0016, Japan (Received December 10, 2020; Accepted March 3, 2021) During the Middle-to-Late Miocene, diatomaceous sediments were deposited in the North Pacific margin and mar- ginal basins. The Onnagawa Formation is one of such deposits, which shows cyclic sedimentary rhythms reflecting oscil- lations of the marine environment in the Japan Sea. However, the age of the Onnagawa Formation is still poorly con- strained due to the poor preservation of siliceous microfossils. To better constrain its age, we performed U-Pb dating of zircon grains from a tuff layer from the middle part of the Onnagawa Formation, and obtained an age of 11.18 ± 0.37 Ma. -

Garbage Collection Day for Each Area Narashino City Garbage Disposal

Narashino City Garbage Disposal Rules English ●Follow the rules of collection day, time, site, and classification procedures. ●Dispose the garbage from dawn to 8:00 a.m. on the collection day. ●Please separate the garbage properly, put it in a bag and tie it tightly. Garbage Collection Day for Each Area Non-burnables Area Burnables Recyclables Hazardous three times a week two times a month once a week once a month a Akitsu Mon Wed Fri 1st & 3rd Sat Thu 2nd Sat i Izumi-cho Tue Thu Sat 1st & 3rd Mon Fri 2nd Mon Okubo 1 & 2 chome Tue Thu Sat 1st & 3rd Fri Mon 2nd Fri o Okubo 3 & 4 chome Tue Thu Sat 1st & 3rd Mon Wed 2nd Mon Kasumi Mon Wed Fri 2nd & 4th Tue Sat 1st Tue ka Kanadenomori Mon Wed Fri 1st & 3rd Thu Tue 2nd Thu Saginuma Mon Wed Fri 2nd & 4th Sat Thu 1st Sat sa Saginumadai Tue Thu Sat 1st & 3rd Fri Mon 2nd Fri shi Shinei Tue Thu Sat 2nd & 4th Mon Wed 1st Mon so Sodegaura Mon Wed Fri 1st & 3rd Tue Thu 2nd Tue Tsudanuma 1&2&3 chome Mon Wed Fri 2nd & 4th Tue Sat 1st Tue tsu Tsudanuma 4&5&6&7 chome Mon Wed Fri 1st & 3rd Sat Thu 2nd Sat ha Hanasaki Tue Thu Sat 2nd &4th Wed Mon 1st Wed Higashinarashino 1&2&3 chome Tue Thu Sat 2nd & 4th Wed Fri 1st Wed hi Higashinarashino 4&5&6&7&8 chome Tue Thu Sat 1st & 3rd Wed Fri 2nd Wed fu Fujisaki Mon Wed Fri 2nd & 4th Thu Sat 1st Thu Mimomi Tue Thu Sat 2nd & 4th Mon Wed 1st Mon mi Mimomihongo Tue Thu Sat 2nd & 4th Mon Wed 1st Mon mo Motookubo Tue Thu Sat 2nd & 4th Fri Mon 1st Fri Yashiki Tue Thu Sat 1st & 3rd Mon Wed 2nd Mon Yatsu 1&2&3&4&7 chome Mon Wed Fri 1st & 3rd Thu Tue 2nd Thu ya Yatsu 5&6 chome Mon Wed Fri 2nd & 4th Sat Tue 1st Sat Yatsumachi Mon Wed Fri 2nd & 4th Sat Tue 1st Sat ●"1st & 3rd Sat" under "Non-burnables" and "Hazardous" means the first and the third Saturday of each month. -

Official Guide T2 All En.Pdf

2020 , 1 December 2020 FLOOR GUIDE ENGLISH December Narita International Airport Terminal2 Narita Airport is working in conjunction with organizations such as Japan’s Ministry of Narita International Airport FLOOR GUIDE, Planned and Published by Narita International Airport Corporation (NAA), Published Planned andPublished by Narita International FLOOR GUIDE, Airport Narita International Justice and Ministry of Health, Labour and Welfare to combat the spread of COVID-19. Due to the spread of the virus, business hours may have changed at some terminal facilities and stores. For the latest information, please consult the Narita International Airport Official Website. Narita International Airport Ofcial Website 英語 CONTENTS INFORMATION & SERVICES Lost Item Inquiries/infotouch Interactive Digital Displays NariNAVI/Lounges …………………………………………………………4 Flight Information/ Terminals and Airlines ……………………………………… 5 Internet Services ……………………………………………………………5 General Information ………………………………………………………5 FLOOR MAP Terminal 2 Services Map ……………………………………… 6–7 B1F Railways (Airport Terminal2 Station) ……………………………………………… 8–9 1F International Arrival Lobby …………………………………………… 10–11 2F Parking Lot Accessway ……………………………………………… 12–13 3F International Departure Lobby (Check-in Counter) ………………… 14–15 4F Restaurants and Shops/Observation Deck ……………………… 16–17 3F International Departure Lobby (Boarding Gate)/ Duty Free and Shopping Area ……………………………………… 18–21 Domestic Flights …………………………………………………… 22–23 SHOPS AND FACILITIES Before Passport Control … 24–29 After Domestic Check-in -

Chapter 8 Taxation

A Guide to Living in Saitama Chapter 8 Taxation 1 Income Tax Saitama’s Prefectural Mascot Kobaton 2 Inhabitant Tax 3 Other Major Taxes All residents of Japan, regardless of nationality, are obligated to pay taxes. Taxes are an important resource used to promote a happy and stable environment for everyone. Taxes support various projects across a wide range of fields such as education, welfare, civil engineering, medical treatment, culture, environment, and industry. The two main taxes are income tax, which is levied by the national government, and inhabitant tax (prefectural and municipal tax), which is levied by both the prefectural and municipal governments. You may be exempt from paying income tax and inhabitant tax because of a taxation treaty between your country and Japan. To avoid double taxation, special exemptions have been established through bilateral taxation treaties between Japan and various countries. To check if these exemptions apply to you, please contact your country's embassy in Japan for further information. Payment of taxes must be done by the due date. If payment is overdue, an overdue fee will be incurred every day from the day after the due date until the payment is made. If your taxes remain unpaid for an extended period of time, your taxable assets will be seized. We encourage you to pay these taxes by the due date. 
Payment of taxes (Saitama Prefectural Government Taxation Division website) URL: http://www.pref.saitama.lg.jp/a0209/z-kurashiindex/z-3.html Explanation of prefectural taxes (Saitama Prefectural Government Taxation Division website) URL: http://www.pref.saitama.lg.jp/a0209/z-kurashiindex/z-3.html URL: http://www.pref.saitama.lg.jp/a0209/z-kurashiindex/documents/r1_kurasi-to-kenzei_e.pdf (English) 8-1 A Guide to Living in Saitama URL: http://www.pref.saitama.lg.jp/a0209/z-kurashiindex/documents/r1_kurasi-to-kenzei_c.pdf (Chinese) 1 Income Tax and Special Reconstruction Income Tax Income tax is levied on a person’s total income earned between January 1 and December 31. -

Japanese Zoning and Its Applicability in American Cities a Senior Project

Japanese Zoning and Its Applicability in American Cities A Senior Project presented to the Faculty of the City & Regional Planning California Polytechnic State University, San Luis Obispo In Partial Fulfillment of the Requirements for the Degree Bachelor of Science in City & Regional Planning by Anthony C. Petrillo Jr. March, 2017 © 2017 Anthony C. Petrillo Jr. Table of Contents 1. Introduction 1.1. Project Description and Purpose 1 1.2. Project Methodology and Research Approach 2 2. Background Report 2.1. Zoning in the United States 2.1.1. History of Zoning in the United States 4 2.1.2. Euclidian (or Single-Use) Zoning 7 2.1.3. How Zoning Works in California 7 2.1.4. Problems of Euclidian Zoning 9 2.1.5. Criteria for Comparison 12 2.2. Japanese Zoning 2.2.1. History of Japanese Land Use Planning and Zoning 14 2.2.2. How Land Use Planning and Zoning Works in Japan 16 2.2.2.1. District Plans 24 2.2.3. Problems Facing Japanese Cities 26 2.3. Case Study: Tokyo 2.3.1. Plans on the National Level 29 2.3.1.1. Master Plan for City Planning Areas 29 2.3.1.2. Tokyo Metropolitan Government Land Use Plan 31 2.3.1.3. National Capital Region Development Act 32 2.3.1.4. National Capital Regional Plan 34 2.3.2. Metropolitan Planning 34 2.3.2.1. Use Zoning 34 2.3.2.2. Special Use Zoning 36 2.3.2.3. Parks and Open Space 37 2.3.3. -

Entrance Ceremony Held to Welcome Newest Students by JIU Times

Produced by × JIU TIMES Vol. 16 SPRING 2016 Entrance ceremony held to welcome newest students by JIU Times Josai International University (JIU) held an entrance ceremony for new students on April 2 at its Togane Campus in Chiba Prefecture. The university, which is celebrating the 25th year anniversary, welcomed 1,650 students to its eight faculties and graduate school, as well as its school of Japanese Language and Culture. Among freshmen, 330 were non-Japanese from 22 countries and regions in Asia, Europe and South America. The ceremony at the Sports Culture Center was attended by foreign dignitaries, including HRH Tuanku Syed Faizuddin Putra Ibni Tu- anku Syed Sirajuddin Jamalullail, the Crown Prince of Perlis, Malaysia; HRH Tuanku Haj- jah Lailatul Shahreen Akashah Binti Khalil, Right photo: The Crown Prince of Perlis, Malaysia, HRH Tuanku Syed Faizuddin Putra Ibni Tuanku Syed Sirajuddin Jamalullail, one of the guests at the JIU entrance ceremony, addresses new students at the Togane the Crown Princess of Perlis, Malaysia; former Campus in Chiba Prefecture on April 2; Left photo: The Crown Prince and Crown Princess of Perlis, Malaysia, HRH Tuanku Hajjah Lailatul Shahreen Akashah Binti Khalil, look on during the ceremony. Malaysian Minister of Tourism and Josai Cen- tre of ASEAN Studies Director Ng Yen Yen; Foundation that enabled five female students Bright future ahead for latest graduates of JIU Kamarudin Hussin, former vice chancellor of from the Southeast Asian country to study at Universiti Malaysia Perlis (UniMAP); and Zul the university from the 2016 academic year. by Terutada Tsunoda, Student Her Imperial Highness Princess Taka- Azhar Zahid Jamal, deputy vice chancellor of “Created by the mother of his royal high- Faculty of International Humanities mado attended the ceremony, offering a UniMAP.