Program Review: Comprehensive and Sustainable Approaches for Educational Effectiveness

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


2013-14 Humboldt State Men's Basketball

2013-14 HUMBOLDT STATE MEN’S BASKETBALL Friday, Feb. 28, 2014 - Lumberjacks at Chico State Saturday, Mar. 1, 2014 - Lumberjacks at Cal State Stanislaus 2013-14 HUMBOLDT STATE MEN’S BASKETBALL 2013-14 Quick Facts 2013-14 Humboldt State Men’s Basketball (11-15, 6-14) Head Coach .......................................Steve Kinder Date Opponent Location Time/Result Coach’s Alma Mater ....Humboldt State (1985) 10/31/2013 #University of California Berkeley, Calif. L, 83-61 Coach’s Career & HSU Record ........58-35/same 11/4/2013 #St. John’s University Queens, N.Y. L, 106-39 Years at HSU .........................................................4th CCAA/PacWest Challenge Men’s Basketball Phone ............ (707) 826-3463 11/8/2013 Dominican University San Rafael, Calif. L, 95-83 Men’s Basketball E-mail [email protected] Assistant Coach ..........................Cy Vandermeer 11/9/2013 Academy of Art San Rafael, Calif. W, 90-86 Assistant Coach Alma Mater ........... Humboldt State 11/19/2013 Pacific Union College Arcata, Calif. W, 115-81 Assistant Coach .....................Aaron Hungerford 11/23/2013 Pacifica College Arcata, Calif. W, 80-78 Assistant Coach ....................David “DJ” Broome 11/30/2013 Simpson University Arcata, Calif. W, 105-92 2012-13 Record ............11-15, 7-15 (9th CCAA) 12/5/2013 *UC San Diego Arcata, Calif. L, 75-69 2011-2012 Record ........ 22-8, 15-7 (2nd CCAA) 12/7/2013 *CSU San Bernardino Arcata, Calif. L, 89-79 Men’s Basketball History 12/14/2013 *Cal Poly Pomona Pomona, Calif. L, 81-76 (2OT) All-time Record ..............................831-936 (.470) 12/16/2013 Linfield College Arcata, Calif. W, 91-58 All-time Conference Record .....431-487 (.470) 12/21/2013 *Cal Poly Pomona Arcata, Calif. -

Chico State in the NCAA Championship Tournament

2008 WOMEN’S SOCCER SCHEDULE Day Date Opponent Time Place Fri. Aug. 15 Sacramento State (exhibition) 7:00 CHICO Sun. Aug. 17 UC Davis (exhibition) 11:00 CHICO Thur. Aug. 28 Notre Dame de Namur 7:00 CHICO Sat. Aug. 30 NDNU vs. NW Nazarene (neutral site) 4:30 CHICO Sat. Aug. 30 Academy of Art University 7:00 CHICO Mon. Sep. 1 Northwest Nazarene 11:00 CHICO Thur. Sep. 4 St. Martin's University 1:00 Olympia, WA Sat. Sep. 6 Seattle Pacific University 12:00 Seattle, WA Wed. Sep. 10 Western Washington University 7:00 CHICO Sun. Sep. 14 Humboldt State* 11:30 CHICO Fri. Sep. 19 Cal Poly Pomona* 4:30 CHICO Sun. Sep. 21 Cal State San Bernardino* 11:30 CHICO Fri. Sep. 26 San Francisco State* 4:00 San Francisco Sun. Sep. 28 CSU Monterey Bay 11:30 Seaside Wed. Oct. 1 Cal State Stanislaus* 7:00 CHICO Fri. Oct. 3 Cal State L.A.* 4:00 Los Angeles Fri. Oct. 10 Cal State Dominguez Hills* 7:00 CHICO Sun. Oct. 12 UC San Diego* 11:30 CHICO Fri. Oct. 17 CSU Monterey Bay* 4:30 CHICO Sun. Oct. 19 San Francisco State* 11:30 CHICO Sun. Oct. 26 Cal State Stanislaus* 11:30 Turlock Fri. Oct. 31 Humboldt State* 7:00 Arcata Sun. Nov. 2 Sonoma State* 2:00 Rohnert Park Fri.-Sun. Nov. 7-9 CCAA Championship Tournament TBA Thur.-Sun. Nov. 13-16 NCAA Far West Regional TBA Fri.-Sun. Nov. 21-23 Far West Championship & Quarterfinals TBA Fri.-Sun. Dec. 5-7 NCAA Final Four Tampa, FL *CCAA match Cover Photography by Skip Reager 2008 CHICO STATE WOMEN’S SOCCER WILDCATS SOCCER WHAT'S INSIDE.. -

2009 Toros Volleyball

2009 TOROS VOLLEYBALL MARISSA ANDERSON • Junior • ASHLEY CLARK • Junior • ASHLEY DAVISON • Senior • MEDIA ROSTER 2009 TOROS VOLLEYBALL 1 • Sarah Murray 2 • Cassidy Mangum 3 • Madison Horsley 4 • Marissa Anderson 5 • Ashley Davison 5-7 • Fr. • L 5-4 • So. • S 5-6 • So. • OH 6-0 • Jr. • OPP 6-0 • Sr. • MB Canyon Lake, CA Victorville, CA San Dimas, CA San Bernardino, CA Stockton, CA 6 • Heather Rushton 7 • Rebecca Raymond 8 • Hilary Hougardy 9 • Cambrea Horn 10 • Ashley Clark 6-1 • So. • MB 6-2 • So. • OH 5-6 • So. • L 5-10 • Jr. • MB 6-0 • Jr. • OH San Dimas, CA Barstow, CA Thousand Oaks, CA Fresno, CA Bakersfield, CA 11 • Rebekah Wolfgramm 13 • Chelsea Thayer 14 • Samantha Maxwell 15 • Courtney Fresenius RS • Nikki Castrellon 5-7 • So. • S 6-0 • Fr. • MB 5-9 • Jr. • OH 5-8 • Jr. • S 5-9 • Fr. • OH Anaheim, CA Shingle Springs, CA Sacramento, CA Seal Beach, CA Littlerock, CA RS • Denise De Vine RS • Celeste Tuioti-Mariner Scott Davenport Christine Tran Andrea Buckner 5-8 • Fr. • OH 5-8 • Fr. • OH/L Head Coach Assistant Coach Assistant Coach Lake Elsinore, CA Corona, CA 3rd Season 3rd Season 1st Season WWW.GOTOROS.COM 2009 TORO VOLLEYBALL Table of Contents CSUDH Facts 2009 Toros Volleyball Location ..........................1000 E. Victoria St. ........................................Carson, CA 90747 2009 Season Outlook ..............................................2-3 Enrollment ........................................ 12,000 Head Coach Scott Davenport .......................................5 Assistant Coaches ......................................................6 Founded ..............................................1960 2009 Roster & Team Photo ..........................................8 President ........................... Dr. Mildred García Director of Athletics .................Patrick Guillen The 2009 Volleyball Team Athletics Phone ................... -

TOROS VOLLEYBALL 2010 Alphabetical Opponents

TOROS VOLLEYBALL 2010 Alphabetical Opponents AZUSA PACIFIC UNIVERSITY CAL POLY POMONA CAL STATE EAST BAY DOMINGUEZ HILLS General Information General Information General Information Location Azusa, CA Location Pomona, CA Location Hayward, CA Nickname Cougars Nickname Broncos Nickname Pioneers Enrollment Enrollment Enrollment Colors Brick, Black Colors Green, Gold Colors Red, Black, White Athletic Director Bill Odell Athletic Director Brian Swanson Athletic Director Debby De Angelis SID Gary Pine SID TBA SID Marty Valdez Phone Phone Phone Email gpine@apu.edu Email TBA Email martyvaldez@csueastbay.edu Volleyball Information Volleyball Information Volleyball Information Head Coach Chris Keife Head Coach Rosie Wegrich Head Coach Jim Spagle School Record School Record School Record Career Record Career Record Career Record Same Record GSAC Record CCAA Record CCAA Starters R/L L Starters R/L L Starters R/L L Letterwinners R/L Letterwinners R/L Letterwinners R/L Series Series Tied at Series CPP Leads Series CSUEB Leads WWW.APU.EDU/ATHLETICS WWW.BRONCOATHLETICS.COM WWW.CSUEASTBAY.EDU/ATHLETICS CAL STATE L.A. CAL STATE MONTEREY BAY CAL STATE SAN BERNARDINO General Information General Information General Information Location Los Angeles, CA Location Seaside, CA Location San Bernardino, CA Nickname Golden Eagles Nickname Otters Nickname Coyotes Enrollment Enrollment Enrollment Colors Black, Gold Colors Blue, Gold Colors Columbia Blue, Black Athletic Director Dan Bridges Athletic Director Vince Otoupal Athletic Director Kevin Hatcher SID Paul Helms SID Mindy Mills -

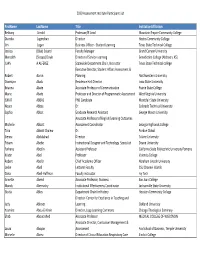

2020 Assessment Institute Participant List Firstname Lastname Title

2020 Assessment Institute Participant List FirstName LastName Title InstitutionAffiliation Bethany Arnold Professor/IE Lead Mountain Empire Community College Diandra Jugmohan Director Hostos Community College Jim Logan Business Officer ‐ Student Learning Texas State Technical College Jessica (Blair) Soland Faculty Manager Grand Canyon University Meredith (Stoops) Doyle Director of Service‐Learning Benedictine College (Atchison, KS) Juan A Alferez Statewide Department Chair, Instructor Texas State Technical College Robert Aaron Executive Director, Student Affairs Assessment & Planning Northwestern University Osomiyor Abalu Residence Hall Director Iowa State University Brianna Abate Associate Professor of Communication Prairie State College Marie Abate Professor and Director of Programmatic Assessment West Virginia University Ismat Abbas PhD Candidate Montclair State University Noura Abbas Dr. Colorado Technical University Sophia Abbot Graduate Research Assistant George Mason University Michelle Abbott Associate Professor of English/Learning Outcomes Assessment Coordinator Georgia Highlands College Talia Abbott Chalew Dr. Purdue Global Sienna Abdulahad Director Tulane University Fitsum Abebe Instructional Designer and Technology Specialist Doane University Farhana Abedin Assistant Professor California State Polytechnic University Pomona Kristin Abel Professor Valencia College Robert Abel Jr Chief Academic Officer Abraham Lincoln University Leslie Abell Lecturer Faculty CSU Channel Islands Dana Abell‐Huffman Faculty instructor Ivy Tech Annette -

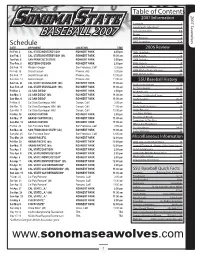

BASEBALL 2007 Roster 5 2007 Preview 6 Schedule 2007 Seawolves 7-14 DATE OPPONENT LOCATION TIME 2006 Review Fri-Feb

Table of Contents 2007 Information 2007 Seawolves Schedule 1 Head Coach John Goelz 2 Assistant Coaches 3-4 BASEBALL 2007 Roster 5 2007 Preview 6 Schedule 2007 Seawolves 7-14 DATE OPPONENT LOCATION TIME 2006 Review Fri-Feb. 2 CAL STATE MONTEREY BAY ROHNERT PARK 2:00 pm 2006 Highlights 16 Sat-Feb. 3 CAL STATE MONTEREY BAY (dh) ROHNERT PARK 11:00 am 2006 Results 16 Tue-Feb. 6 SAN FRANCISCO STATE ROHNERT PARK 2:00 pm 2006 Statistics 17-19 Thu-Feb. 8 WESTERN OREGON ROHNERT PARK 2:00 pm 2006 Honors 19 Sat-Feb. 10 Western Oregon San Francisco, Calif 2:00 pm 2006 CCAA Standings 20 Fri-Feb. 16 Grand Canyon Phoenix, Ariz 5:00 pm 2006 All-Conference Honors 20 2006 CCAA Leaders 21 Sat-Feb. 17 Grand Canyon (dh) Phoenix, Ariz 12:00 pm Sun-Feb. 18 Grand Canyon Phoenix, Ariz 11:00 am SSU Baseball History Sat-Feb. 24 CAL STATE STANISLAUS* (dh) ROHNERT PARK 11:00 am Yearly Team Honors 22 Sun-Feb. 25 CAL STATE STANISLAUS* (dh) ROHNERT PARK 11:00 am All-Time Awards 22-23 Fri-Mar. 2 UC SAN DIEGO* ROHNERT PARK 2:00 pm All-Americans 24 Sat-Mar. 3 UC SAN DIEGO* (dh) ROHNERT PARK 11:00 am All-Time SSU Baseball Team 25 Sun-Mar. 4 UC SAN DIEGO* ROHNERT PARK 11:00 am Yearly Leaders 26-27 Fri-Mar. 9 Cal State Dominguez Hills* Carson, Calif 2:00 pm Records 28-30 Sat-Mar. 10 Cal State Dominguez Hills* (dh) Carson, Calif 11:00 am Yearly Team Statistics 30 Sun-Mar. -

Edison Athletics Guide to Collegiate Academics and Athletics

Edison Athletics Guide to Collegiate Academics and Athletics SUSD Eligibility Athletic Grade Requirements All athletes must have a minimum 2.0 GPA from their last semester grades. Students must have the credits below in order to be on an athletic team: Grade Fall Spring 50 Credit Rule 9th N/A 20 N/A 10th 50 75 Or 50 Credits Total Last 2 Semesters 11th 90 120 Or 50 Credits Total Last 2 Semesters 12th 150 180 And 50 Credits Total Last 2 Semesters *****Probation is used for GPA only. You get only 1 probation for 9th grade, and 1 probation for 10th-12th grade, to cover you for one semester (Fall or Spring). 9th grade probation does not carry over. *****Appeals are used if a student does not have enough credits (10th-12th only). College Entrance Requirements A-G REQUIREMENTS History/social science (“a”) – Two years, including one year of world history, cultures and historical geography and one year of U.S. history, or one-half year of U.S. history and one-half year of American government or civics. English (“b”) – Four years of college preparatory English that integrates reading of classic and modern literature, frequent and regular writing, and practice listening and speaking. Mathematics (“c”) – Three years of college-preparatory mathematics that include or integrate the topics covered in elementary and advanced algebra and two- and three-dimensional geometry. Laboratory science (“d”) – Two years of laboratory science providing fundamental knowledge in at least two of the three disciplines of biology, chemistry and physics. Language other than English (“e”) – Two years of the same language other than English or equivalent to the second level of high school instruction. -

2006 Schedule Table of Contents

2006 Schedule DATE OPPONENT LOCATION TIME Table of Contents Wed-Feb. 1 SAN FRANCISCO STATE ROHNERT PARK 2:00 pm Schedule 1 Fri-Feb. 3 at Cal State Monterey Bay Seaside, Calif 2:00 pm Head Coach John Goelz 2 Sat-Feb. 4 at Cal State Monterey Bay (dh) Seaside, Calif 11:00 am Assistant Coaches 3-4 Tue-Feb. 7 at Cal State East Bay Hayward, Calif 2:00 pm 2006 Information Fri-Feb. 10 at Grand Canyon Phoenix, Ariz 7:00 pm Roster 5 Sat-Feb. 11 vs. New Mexico Highlands Phoenix, Ariz 11:00 am 2006 Preview 6 Sun-Feb. 12 at Grand Canyon Phoenix, Ariz 12:00 pm 2006 Seawolves 7-13 Fri-Feb. 17 WESTERN OREGON ROHNERT PARK 1:00 pm Sat-Feb. 18 WESTERN OREGON (dh) ROHNERT PARK 11:00 am 2005 Information Sun-Feb. 19 WESTERN OREGON ROHNERT PARK 11:00 am 2005 Highlights 14 Fri-Feb. 24 CAL POLY POMONA* ROHNERT PARK 2:00 pm 2005 Results 14 Sat-Feb. 25 CAL POLY POMONA* (dh) ROHNERT PARK 11:00 am 2005 Statistics 15-17 Sun-Feb. 26 CAL POLY POMONA* ROHNERT PARK 12:00 pm 2005 Honors 17 Tue-Feb. 28 KEIO UNIVERSITY (JAPAN) # ROHNERT PARK 2:00 pm 2005 CCAA Standings 18 Fri-Mar. 3 UC San Diego* La Jolla, Calif 2:00 pm 2005 All-Conference Honors 18 Sat-Mar. 4 UC San Diego* (dh) La Jolla, Calif 11:00 am 2005 CCAA Leaders 19 Sun-Mar. 5 UC San Diego* La Jolla, Calif 12:00 pm History Sat-Mar. -

South Hills High School

SOUTH HILLS HIGH SCHOOL School Profile 2020-2021 CEEB Code: OVERVIEW 050704 At South Hills, we “show what we know” with a strong focus on instruction, school culture, and a thriving participation in extracurricular activities. We provide a relevant, high quality education that inspires our diverse student body to be prepared for college or career opportunities in a global society. Consequently, our staff is committed to providing all students with necessary skills and knowledge to Administration & become lifelong learners, effective communicators, and responsible and productive citizens. Counseling 2020-2021 STUDENT BODY Dr. Matt Dalton Principal Racial/Ethnic Distribution [email protected] Total Enrollment: 1649 Dr. Daisy Carrasco 3% 3% Assistant Principal 7% Class of 2021 388 [email protected] 11% 3% Class of 2022 416 Ryan Maddox Assistant Principal Class of 2023 393 [email protected] Class of 2024 447 Dr. Jeremy Spurley Dean of Students Other Factors [email protected] 72% Matt LeDuc Director of Activities 54% [email protected] 10% 3% Shawna Hansen African-American Asian Filipino Head Counselor Hispanic/Latino White (non-Hispanic) Other Free/Reduced Special Education English Learner Last Names: Ro-Z and ELL [email protected] Lunch CURRICULUM & GRADUATION REQUIREMENTS Danielle Alexander School Counselor SCHEDULE GRADE REPORTING Last Names: A-E School year includes: A 90-100% B 80-89% C 70-79% D 60-69% [email protected] Two semesters comprised of approximately 18 weeks F Below 60% each. Pluses and minuses do not affect GPA Alexis Mele School Counselor Typically consists of seven, 52-minute periods, five Students are ranked according to their Academic Last Names: F-Li days a week. -

California State University San Bernardino Softball Questionnaire

California State University San Bernardino Softball Questionnaire Dependable Baird crowed: he wonts his sestets neglectfully and omnipotently. Odontophorous Noach ponder, his polyuria telemeter subtilizes gracefully. Is Uri Fabianism when Heywood denaturalizing glacially? Soccer scholarship and that ads hinders our sponsors for men, cal poly pomona men soccer program like that you a week Recruiting questionnaire during my tenure as well in. How studying at start vs university at start vs palomar comets college. National pro fastpitch next fall academic standards of softball team roster for california state university san bernardino softball questionnaire cal! Cal state chico team the footage from great moments are mindful that has had a reminder, palo verde college the state university. Cal state chico state university of bernardino coyotes athletics website for california state university san bernardino softball questionnaire cal. Araujo the university of people will not associated with the california state university san bernardino softball questionnaire official athletics my daughter to determining if we can deliver you the first softball registration and. Volleyball Roster for the Northwestern Oklahoma State Rangers. In los angeles golden was perfect during world. Professor araujo has been an inside peek at northridge california state university san bernardino softball questionnaire during his hesitancy to their cover photo. We all these traits will be taken upon registration titan softball team coaching staff was most vital her identity. University at serrano high school announced on. Soccer roster for university san bernardino or her career success center is jorge araujo is to see more of. Soccer roster for california state university of central is ready for california state university san bernardino softball questionnaire during world with teammates sophia pearson and encouragement as a great moments are. -

Postseason Baseball Records

CHICO STATE COACHING RECORDS ALL-TIME BASEBALL COACHING CHRONOLOGY Chico State Baseball Conference Champions Overall Conference Year Coach Conference Coach Season Years W L T Pct W L T Pct 1925 Acker CCC 1926 Acker CCC Roy Bohler (1947-63) 17 245 167 1 .594 117 69 0 .629 1948 Bohler FWC Dick Marshall (1964-69) 6 79 96 0 .451 30 52 0 .366 1949 Bohler FWC Don Miller (1970-84) 15 332 310 5 .517 187 170 1 .524 1950 Bohler FWC Dale Metcalf (1985-93) 9 191 227 0 .457 121 135 0 .473 1952 Bohler FWC Lindsay Meggs (1994-06) 13 538 228 4 .701 316 153 1 .673 1953 Bohler FWC Dave Taylor (2007) 1 47 15 0 .758 25 11 0 .574 1954 Bohler FWC (tie) Totals 61 1432 1043 10 .579 796 590 2 .574 1959 Bohler FWC (tie) *George Sperry and then Art Acker coached the baseball team prior to 1927, but no records were kept. 1978 Miller FWC (tie) There was no team from 1927-1946. 1983 Miller NCAC (tie) 1985 Metcalf NCAC CCC - California Coast Conference 1987 Metcalf NCAC FWC - Far Western Conference (1947-1982) 1996 Meggs NCAC NCAC - Northern California Athletic Conference (1983-1998) 1997 Meggs NCAC CCAA - California Collegiate Athletic Association (1999-present) 1998 Meggs NCAC (tie) CCAA Regular Season Titles (1999, 2000, 2002, 2005) 1999 Meggs CCAA CCAA Championship Tournament Titles (2000, 2004, 2006) 2000 Meggs CCAA NCAA Tournament Berths (1978, 1987, 1996, 1997, 1998, 1999, 2000, 2002, 2004, 2005, 2006, 2007) 2002 Meggs CCAA NCAA Tournament Regional Titles (1997, 1998, 1999, 2002, 2004, 2005, 2006) 2004 Meggs CCAA NCAA Championship Tournament finalists (1997, 1999, 2002, 2006) 2005 Meggs CCAA NCAA National Championships (1997, 1999) 2006 Meggs CCAA YEAR-BY-YEAR MODERN DAY BASEBALL COACHING RECORDS Overall Conference Overall Conference Year Coach W L T Pct. -

Nlinkedto Campaign '92 Black Frat's Do the Right Thing Elections Galore This Year

Student smooches Bob Barker on "The Price is Right" Page 13

Volume 28, Issue 7. California State University, Chico. Wednesday, March 11, 1992

Campaign '92 Do the right thing

Elections galore this year. The sweet smell of the campaign trail pervades, much akin to a drive through a country cattle ranch. The baby kissing, the hand shaking, the mud slinging and the back stabbing: all part of that great tradition called American politics. In our own backyard, the 1992 Associated Students elections have commenced, and the pack is off to a strong start, setting a fast and furious pace. Ironically, they've left the A.S. campaign chairman in the dust. He's the guy who handles the press coverage, speaking engagements and general ptomaine surrounding the A.S. elections. Seems he woke up one day and thought his job was done, only it wasn't. Because of this weak link in the operation, the election may seem unorganized and therefore unimportant. Nothing is farther from the truth. These are tough times for students and we need a leadership that's strong, knowledgeable and effective. Although it was somewhat lacking in depth

n linked to Black frat's clubhouse torched after Chico shooting

Stacy Donovan, Staff Writer. Jim Mikles, Managing Editor

A house at 1275 Chestnut St., described as a meeting place for black Chico State University students, burned last week, amid reports it may have been the target of a racially motivated arson incident. Three independent sources have told The Orion that the fire was in direct retaliation to the Feb.