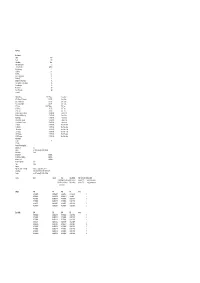

MPEG-1 Layer 3 音訊解碼器於DSP晶片之即時軟體實現 (Real-Time Implementation of MPEG-1 Layer 3 Audio Decoder on a DSP Chip)

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

CD/DVD Player

4-277-895-11(1) CD/DVD Player Operating Instructions DVP-NS638P DVP-NS648P © 2011 Sony Corporation. WARNING To reduce the risk of fire or electric shock, do not expose this apparatus to rain or moisture. To avoid electrical shock, do not open the cabinet. Refer servicing to qualified personnel only. The mains lead must only be changed at a qualified service shop. Batteries or batteries installed apparatus shall not be exposed to excessive heat such as sunshine, fire or the like. CAUTION The use of optical instruments with this product will increase eye hazard. As the laser beam used in Precautions Safety To prevent fire or shock hazard, do not place objects filled with liquids, such as vases, on the apparatus. Installing • Do not install the unit in an inclined position. It is designed to be operated in a horizontal position only. • Keep the unit and discs away from equipment with strong magnets, such as microwave ovens, or large loudspeakers. • Do not place heavy objects on the unit. Lightning For added protection for this set during a lightning storm, or when it is left unattended and unused for long periods of time, unplug it from the wall outlet. This will prevent damage to the set due to Notes about the discs • To keep the disc clean, handle the disc by its edge. Do not touch the surface. Dust, fingerprints, or scratches on the disc may cause it to malfunction. • Do not expose the disc to direct sunlight or heat sources such as hot air ducts, or leave it in a car parked in direct sunlight as the temperature may rise considerably inside the car. -

C-Based Hardware Design of Imdct Accelerator for Ogg Vorbis Decoder

C-BASED HARDWARE DESIGN OF IMDCT ACCELERATOR FOR OGG VORBIS DECODER Shinichi Maeta1, Atsushi Kosaka1, Akihisa Yamada1,2, Takao Onoye1, Tohru Chiba1,2, and Isao Shirakawa1. 1 Department of Information Systems Engineering, Graduate School of Information Science and Technology, Osaka University, 2-1 Yamada-oka, Suita, Osaka, 565-0871 Japan, phone: +81 6 6879 7808, fax: +81 6 6875 5902, email: {maeta, kosaka, onoye, sirakawa}@ist.osaka-u.ac.jp. 2 Sharp Corporation, 2613-1 Ichinomoto, Tenri, Nara, 632-8567 Japan, phone: +81 743 65 2531, fax: +81 743 65 3963, email: [email protected], [email protected]. ABSTRACT This paper presents hardware design of an IMDCT accelerator for an Ogg Vorbis decoder using a C-based design system. Low power implementation of audio codec is important in order to achieve long battery life of portable audio devices. Through the computational cost analysis of the whole decoding process, it is found that Ogg Vorbis requires higher operation frequency of an embedded processor than MPEG Audio. In order to reduce the CPU load, an accelerator is designed as specific hardware for IMDCT, which is detected as the most computation-intensive functional block. Real-time decoding of Ogg Vorbis is achieved with the accelerator and an embedded processor both run at 36MHz. ARM7TDMI is used as the embedded processor since it has come into wide use recently. 2. OGG VORBIS CODEC 2.1 Ogg Vorbis Overview Figure 1 shows a block diagram of the Ogg Vorbis codec processes outlined below. [Figure 1: block diagram of the Ogg Vorbis codec; block labels: Audio Signal, MDCT, FFT, Psycho Acoustic Model, Remove Floor, Channel Coupling, VQ, Ogg Vorbis] -

File Name Benchmark Width 1024 Height 768 Anti-Aliasing None

File Name Benchmark Width 1024 Height 768 Anti-Aliasing None Anti-Aliasing Quality 0 Texture Filtering Optimal Max Anisotropy 4 VS Profile 3_0 PS Profile 3_0 Force Full Precision No Disable DST No Disable Post-Processing No Force Software Vertex Shader No Color Mipmaps No Repeat Tests Off Fixed Framerate Off Comment 3DMark Score 4580 3DMarks Game Tests GT1 - Return To Proxycon 18.5 FPS Game Tests GT2 - Firefly Forest 11.7 FPS Game Tests GT3 - Canyon Flight 28.4 FPS Game Tests CPU Score 2184 CPUMarks CPU Tests CPU Test 1 1.1 FPS CPU Tests CPU Test 2 1.9 FPS CPU Tests Fill Rate - Single-Texturing 0.0 FPS N/A Feature Tests Fill Rate - Multi-Texturing 0.0 FPS N/A Feature Tests Pixel Shader 0.0 FPS N/A Feature Tests Vertex Shader - Simple 0.0 FPS N/A Feature Tests Vertex Shader - Complex 0.0 FPS N/A Feature Tests 8 Triangles 0.0 FPS N/A Batch Size Tests 32 Triangles 0.0 FPS N/A Batch Size Tests 128 Triangles 0.0 FPS N/A Batch Size Tests 512 Triangles 0.0 FPS N/A Batch Size Tests 2048 Triangles 0.0 FPS N/A Batch Size Tests 32768 Triangles 0.0 FPS N/A Batch Size Tests System Info Version 3.5 CPU Info Central Processing Unit Manufacturer Intel Family Intel(R) Pentium(R) 4 CPU 3.40GHz Architecture 32-bit Internal Clock 3400 MHz Internal Clock Maximum 3400 MHz External Clock 800 MHz Socket Designation CPU 1 Type Central Upgrade HyperThreadingTechnology Available - 2 Logical Processors Capabilities MMX, CMov, RDTSC, SSE, SSE2, PAE Version Intel(R) Pentium(R) 4 CPU 3.40GHz Caches Level Capacity Type Type Details Error Correction Type Associativity 1 -

6/8/2018, 16:56:57 Machine Name: LAPTOP

------------------ System Information ------------------ Time of this report: 6/8/2018, 16:56:57 Machine name: LAPTOP-DL519BUJ Machine Id: {6A4BAB40-8615-4F88-B9C6-14E85D02B1B3} Operating System: Windows 10 Famille 64-bit (10.0, Build 17134) (17134.rs4_release.180410-1804) Language: French (Regional Setting: French) System Manufacturer: Acer System Model: Predator G9-791 BIOS: V1.11 (type: UEFI) Processor: Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz (8 CPUs), ~2.6GHz Memory: 16384MB RAM Available OS Memory: 16266MB RAM Page File: 5726MB used, 12970MB available Windows Dir: C:\WINDOWS DirectX Version: DirectX 12 DX Setup Parameters: Not found User DPI Setting: 96 DPI (100 percent) System DPI Setting: 96 DPI (100 percent) DWM DPI Scaling: Disabled Miracast: Available, with HDCP Microsoft Graphics Hybrid: Supported DxDiag Version: 10.00.17134.0001 64bit Unicode ------------ DxDiag Notes ------------ Display Tab 1: No problems found. Display Tab 2: No problems found. Sound Tab 1: No problems found. Input Tab: No problems found. -------------------- DirectX Debug Levels -------------------- Direct3D: 0/4 (retail) DirectDraw: 0/4 (retail) DirectInput: 0/5 (retail) DirectMusic: 0/5 (retail) DirectPlay: 0/9 (retail) DirectSound: 0/5 (retail) DirectShow: 0/6 (retail) --------------- Display Devices --------------- Card name: Intel(R) HD Graphics 530 Manufacturer: Intel Corporation Chip type: Intel(R) HD Graphics Family DAC type: Internal Device Type: Full Device (POST) Device Key: Enum\PCI\VEN_8086&DEV_191B&SUBSYS_105B1025&REV_06 Device Status: 0180200A -

Directshow Codecs On

DirectShow Codecs (Reported) Version RVG 0.5754 Windows Vista X86 Windows Vista X64 Codec Company Description Reported Windows XP Pro WindowsXP Starter Business N Business HomeBasic N HomeBasic HomePremium Ultimate Business N Business HomeBasic N HomeBasic HomePremium Ultimate AVI Decompressor Microsoft Corporation DirectShow Runtime AVI Draw Microsoft Corporation DirectShow Runtime Cinepak Codec by Radius Radius Inc. Cinepak® Codec DV Splitter Microsoft Corporation DirectShow Runtime DV Video Decoder Microsoft Corporation DirectShow Runtime DV Video Encoder Microsoft Corporation DirectShow Runtime Indeo® video 4.4 Decompression Filter Intel Corporation Intel Indeo® Video 4.5 Indeo® video 5.10 Intel Corporation Intel Indeo® video 5.10 Indeo® video 5.10 Compression Filter Intel Corporation Intel Indeo® video 5.10 Indeo® video 5.10 Decompression Filter Intel Corporation Intel Indeo® video 5.10 Intel 4:2:0 Video V2.50 Intel Corporation Microsoft H.263 ICM Driver Intel Indeo(R) Video R3.2 Intel Corporation N/A Intel Indeo® Video 4.5 Intel Corporation Intel Indeo® Video 4.5 Intel Indeo(R) Video YUV Intel IYUV codec Intel Corporation Codec Microsoft H.261 Video Codec Microsoft Corporation Microsoft H.261 ICM Driver Microsoft H.263 Video Codec Microsoft Corporation Microsoft H.263 ICM Driver Microsoft MPEG-4 Video Microsoft MPEG-4 Video Decompressor Microsoft Corporation Decompressor Microsoft RLE Microsoft Corporation Microsoft RLE Compressor Microsoft Screen Video Microsoft Screen Video Decompressor Microsoft Corporation Decompressor Video -

System Information ---Time of This Report: 7/30/2013, 15:28:13 Machine Name

Downloaded from: justpaste.it/39ad ------------------ System Information ------------------ Time of this report: 7/30/2013, 15:28:13 Machine name: ERIKDC-HP Operating System: Windows 7 Home Premium 64-bit (6.1, Build 7601) Service Pack 1 (7601.win7sp1_gdr.130318-1533) Language: English (Regional Setting: English) System Manufacturer: Hewlett-Packard System Model: HP Pavilion g6 Notebook PC BIOS: InsydeH2O Version CCB.03.61.17F.46 Processor: AMD A4-3300M APU with Radeon(tm) HD Graphics (2 CPUs), ~1.9GHz Memory: 4096MB RAM Available OS Memory: 3562MB RAM Page File: 3257MB used, 3866MB available Windows Dir: C:\Windows DirectX Version: DirectX 11 DX Setup Parameters: Not found User DPI Setting: Using System DPI System DPI Setting: 96 DPI (100 percent) DWM DPI Scaling: Disabled DxDiag Version: 6.01.7601.17514 32bit Unicode ------------ DxDiag Notes ------------ Display Tab 1: No problems found. Sound Tab 1: No problems found. Sound Tab 2: No problems found. Input Tab: No problems found. -------------------- DirectX Debug Levels -------------------- Direct3D: 0/4 (retail) DirectDraw: 0/4 (retail) DirectInput: 0/5 (retail) DirectMusic: 0/5 (retail) DirectPlay: 0/9 (retail) DirectSound: 0/5 (retail) DirectShow: 0/6 (retail) --------------- Display Devices --------------- Card name: AMD Radeon(TM) HD 6480G Manufacturer: ATI Technologies Inc. Chip type: ATI display adapter (0x9648) DAC type: Internal DAC(400MHz) Device Key: Enum\PCI\VEN_1002&DEV_9648&SUBSYS_169B103C&REV_00 Display Memory: 2022 MB Dedicated Memory: 497 MB Shared Memory: 1525 MB -

ARM MPEG-2 Audio Layer III Decoder Version 1

ARM MPEG-2 Audio Layer III Decoder Version 1 Programmer’s Guide Copyright © 1999 ARM Limited. All rights reserved. ARM DUI 0121B Copyright © 1999 ARM Limited. All rights reserved. Release Information The following changes have been made to this document. Change history Date Issue Change May 1999 A First release June 1999 B Second release, minor changes Proprietary Notice ARM, the ARM Powered logo, Thumb, and StrongARM are registered trademarks of ARM Limited. The ARM logo, AMBA, Angel, ARMulator, EmbeddedICE, ModelGen, Multi-ICE, ARM7TDMI, ARM9TDMI, TDMI, and STRONG are trademarks of ARM Limited. All other products or services mentioned herein may be trademarks of their respective owners. Neither the whole nor any part of the information contained in, or the product described in, this document may be adapted or reproduced in any material form except with the prior written permission of the copyright holder. The product described in this document is subject to continuous developments and improvements. All particulars of the product and its use contained in this document are given by ARM in good faith. However, all warranties implied or expressed, including but not limited to implied warranties of merchantability, or fitness for purpose, are excluded. This document is intended only to assist the reader in the use of the product. ARM Limited shall not be liable for any loss or damage arising from the use of any information in this document, or any error or omission in such information, or any incorrect use of the product. ii Copyright © 1999 ARM Limited. All rights reserved. ARM DUI 0121B Preface This preface introduces the ARM Moving Pictures Experts Group (MPEG)-2 Audio Layer III (MP3) Decoder. -

System Information ---Time of This Report: 11/23/2010, 00:57:15

------------------ System Information ------------------ Time of this report: 11/23/2010, 00:57:15 Machine name: WANTEDPC Operating System: Windows XP Professional (5.1, Build 2600) Service Pack 2 (2600.xpsp_sp2_rtm.0408032158) Language: English (Regional Setting: English) System Manufacturer: ECS System Model: 945GCTM2 BIOS: Default System BIOS Processor: Intel(R) Pentium(R) Dual CPU E2200 @ 2.20GHz (2 CPUs) Memory: 2040MB RAM Page File: 228MB used, 3707MB available Windows Dir: C:\WINDOWS DirectX Version: DirectX 9.0c (4.09.0000.0904) DX Setup Parameters: Not found DxDiag Version: 5.03.2600.2180 32bit Unicode ------------ DxDiag Notes ------------ DirectX Files Tab: No problems found. Display Tab 1: No problems found. DirectDraw test results: All tests were successful. Direct3D 7 test results: All tests were successful. Direct3D 8 test results: All tests were successful. Direct3D 9 test results: All tests were successful. Sound Tab 1: DirectSound test results: All tests were successful. Music Tab: DirectMusic test results: All tests were successful. Input Tab: No problems found. Network Tab: No problems found. DirectPlay test results: The tests were cancelled before completing. -------------------- DirectX Debug Levels -------------------- Direct3D: 0/4 (n/a) DirectDraw: 0/4 (retail) DirectInput: 0/5 (n/a) DirectMusic: 0/5 (n/a) DirectPlay: 0/9 (retail) DirectSound: 0/5 (retail) DirectShow: 0/6 (retail) --------------- Display Devices --------------- Card name: Intel(R) 82945G Express Chipset Family Manufacturer: Intel Corporation -

Video Quality Measurement for 3G Handset

University of Plymouth PEARL https://pearl.plymouth.ac.uk 04 University of Plymouth Research Theses 01 Research Theses Main Collection 2007 Video Quality Measurement for 3G Handset Zeeshan http://hdl.handle.net/10026.2/509 University of Plymouth All content in PEARL is protected by copyright law. Author manuscripts are made available in accordance with publisher policies. Please cite only the published version using the details provided on the item record or document. In the absence of an open licence (e.g. Creative Commons), permissions for further reuse of content should be sought from the publisher or author. Video Quality Measurement for 3G Handset by Zeeshan Dissertation submitted in partial fulfilment of the requirements for the award of Master of Research in Communications Engineering and Signal Processing in School of Computing, Communication and Electronics University of Plymouth January 2007 Supervisors Professor Emmanuel C. Ifeachor Dr. Lingfen Sun Mr. Zhuoqun Li © Zeeshan 2007 Declaration This is to certify that the candidate, Mr. Zeeshan, carried out the work submitted herewith. Candidate's Signature: Mr. Zeeshan Date: 25/01/2007 Supervisor's Signature: Dr. Lingfen Sun Date: 25/01/2007 Second Supervisor's Signature: Mr. Zhuoqun Li Date: 25/01/2007 Copyright & Legal Notice This copy of the dissertation has been supplied on the condition that anyone who consults it is understood to recognize that its copyright rests with its author and that no part of this dissertation and information derived from it may be published without the author's prior written consent. -

System Information ---Time of This Report: 10/15/2014, 12:07:56 Machine Name: FU2-PC Operatin

------------------ System Information ------------------ Time of this report: 10/15/2014, 12:07:56 Machine name: FU2-PC Operating System: Windows Vista Home Premium (6.0, Build 6002) Service Pack 2 (6002.vistasp2_gdr.130308-1436) Language: English (Regional Setting: English) System Manufacturer: alienware System Model: M17x BIOS: Ver A00 1.00PARTTBL Processor: Intel(R) Core(TM)2 Duo CPU T9600 @ 2.80GHz (2 CPUs), ~2.8GHz Memory: 7934MB RAM Page File: 5194MB used, 10847MB available Windows Dir: C:\Windows DirectX Version: DirectX 11 DX Setup Parameters: Not found DxDiag Version: 7.00.6002.18107 32bit Unicode ------------ DxDiag Notes ------------ Display Tab 1: No problems found. Sound Tab 1: No problems found. Input Tab: No problems found. -------------------- DirectX Debug Levels -------------------- Direct3D: 0/4 (retail) DirectDraw: 0/4 (retail) DirectInput: 0/5 (retail) DirectMusic: 0/5 (retail) DirectPlay: 0/9 (retail) DirectSound: 0/5 (retail) DirectShow: 0/6 (retail) --------------- Display Devices --------------- Card name: NVIDIA GeForce GTX 280M Manufacturer: NVIDIA Chip type: GeForce GTX 280M DAC type: Integrated RAMDAC Device Key: Enum\PCI\VEN_10DE&DEV_060A&SUBSYS_02A11028&REV_A2 Display Memory: 4077 MB Dedicated Memory: 1005 MB Shared Memory: 3071 MB Current Mode: 1176 x 664 (32 bit) (59Hz) Monitor: Generic PnP Monitor Driver Name: nvd3dumx.dll,nvwgf2umx.dll,nvwgf2umx.dll,nvd3dum,nvwgf2um,nvwgf2um Driver Version: 8.17.0012.5738 (English) DDI Version: 10 BGRA Supported: Yes Driver Attributes: Final Retail Driver Date/Size: -

System Information ---Time of This Report: 8/22/2018, 17:04:09 Machine Name: DESKTOP-STEVE Ma

------------------ System Information ------------------ Time of this report: 8/22/2018, 17:04:09 Machine name: DESKTOP-STEVE Machine Id: {37478566-514C-4678-A48F-FDF573367108} Operating System: Windows 10 Home 64-bit (10.0, Build 17134) (17134.rs4_release.180410-1804) Language: English (Regional Setting: English) System Manufacturer: Dell Inc. System Model: Inspiron 3650 BIOS: 3.5.2 (type: UEFI) Processor: Intel(R) Core(TM) i3-6100 CPU @ 3.70GHz (4 CPUs), ~3.7GHz Memory: 8192MB RAM Available OS Memory: 8096MB RAM Page File: 9435MB used, 3114MB available Windows Dir: C:\WINDOWS DirectX Version: DirectX 12 DX Setup Parameters: Not found User DPI Setting: 96 DPI (100 percent) System DPI Setting: 96 DPI (100 percent) DWM DPI Scaling: Disabled Miracast: Available, with HDCP Microsoft Graphics Hybrid: Not Supported DxDiag Version: 10.00.17134.0001 64bit Unicode ------------ DxDiag Notes ------------ Display Tab 1: No problems found. Sound Tab 1: No problems found. Sound Tab 2: No problems found. Input Tab: No problems found. -------------------- DirectX Debug Levels -------------------- Direct3D: 0/4 (retail) DirectDraw: 0/4 (retail) DirectInput: 0/5 (retail) DirectMusic: 0/5 (retail) DirectPlay: 0/9 (retail) DirectSound: 0/5 (retail) DirectShow: 0/6 (retail) --------------- Display Devices --------------- Card name: Intel(R) HD Graphics 530 Manufacturer: Intel Corporation Chip type: Intel(R) HD Graphics Family DAC type: Internal Device Type: Full Device (POST) Device Key: Enum\PCI\VEN_8086&DEV_1912&SUBSYS_06F71028&REV_06 Device Status: 0180200A -

Rozpoznávač Hudebního Stylu Z MP3 Prohlášení

BRNO UNIVERSITY OF TECHNOLOGY (VYSOKÉ UČENÍ TECHNICKÉ V BRNĚ), FACULTY OF INFORMATION TECHNOLOGY, DEPARTMENT OF COMPUTER GRAPHICS AND MULTIMEDIA. MP3 MUSIC STYLE RECOGNITION (ROZPOZNÁVAČ HUDEBNÍHO STYLU Z MP3). MASTER'S THESIS. AUTHOR: Bc. Michael Deutscher. SUPERVISOR: Ing. František Grézl, Ph.D. BRNO 2009. Abstract This document describes the design of a music style recognizer. It briefly covers the digitization of music data and how such data is stored in computers. It also mentions the features used for classification and outlines their extraction. The main part compares the success rate of music genre recognition using features extracted directly from music data in mp3 format with that of features obtained by conventional analysis. Keywords Music style recognizer, music style, mp3, feature extraction, music. Citation Deutscher Michael: Rozpoznávač hudebního stylu z mp3. Brno, 2009, master's thesis, FIT VUT v Brně. Declaration I declare that I wrote this master's thesis independently under the supervision of Ing. František Grézl, Ph.D. I have listed all literary sources and publications from which I drew. Michael Deutscher 26.5.2009 Acknowledgements I would like to thank, for expert help in creating this document, Ing.