Transforming the Arχiv to XML

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

TUGBOAT Volume 39, Number 2 / 2018 TUG 2018 Conference

TUGBOAT Volume 39, Number 2 / 2018 TUG 2018 Conference Proceedings TUG 2018 98 Conference sponsors, participants, program 100 Joseph Wright / TUG goes to Rio 104 Joseph Wright / TEX Users Group 2018 Annual Meeting notes Graphics 105 Susanne Raab / The tikzducks package A L TEX 107 Frank Mittelbach / A rollback concept for packages and classes 113 Will Robertson / Font loading in LATEX using the fontspec package: Recent updates 117 Joseph Wright / Supporting color and graphics in expl3 119 Joseph Wright / siunitx: Past, present and future Software & Tools 122 Paulo Cereda / Arara — TEX automation made easy 126 Will Robertson / The Canvas learning management system and LATEXML 131 Ross Moore / Implementing PDF standards for mathematical publishing Fonts 136 Jaeyoung Choi, Ammar Ul Hassan, Geunho Jeong / FreeType MF Module: A module for using METAFONT directly inside the FreeType rasterizer Methods 143 S.K. Venkatesan / WeTEX and Hegelian contradictions in classical mathematics Abstracts 147 TUG 2018 abstracts (Behrendt, Coriasco et al., Heinze, Hejda, Loretan, Mittelbach, Moore, Ochs, Veytsman, Wright) 149 MAPS: Contents of issue 48 (2018) 150 Die TEXnische Kom¨odie: Contents of issues 2–3/2018 151 Eutypon: Contents of issue 38–39 (October 2017) General Delivery 151 Bart Childs and Rick Furuta / Don Knuth awarded Trotter Prize 152 Barbara Beeton / Hyphenation exception log Book Reviews 153 Boris Veytsman / Book review: W. A. Dwiggins: A Life in Design by Bruce Kennett Hints & Tricks 155 Karl Berry / The treasure chest Cartoon 156 John Atkinson / Hyphe-nation; Clumsy Advertisements 157 TEX consulting and production services TUG Business 158 TUG institutional members 159 TUG 2019 election News 160 Calendar TEX Users Group Board of Directors † TUGboat (ISSN 0896-3207) is published by the Donald Knuth, Grand Wizard of TEX-arcana ∗ TEX Users Group. -

Semi-Automated Correction Tools for Mathematics-Based Exercises in MOOC Environments

International Journal of Artificial Intelligence and Interactive Multimedia, Vol. 3, Nº 3 Semi-Automated Correction Tools for Mathematics-Based Exercises in MOOC Environments Alberto Corbí and Daniel Burgos Universidad Internacional de La Rioja the accuracy of the result but also in the correctness of the Abstract — Massive Open Online Courses (MOOCs) allow the resolution process, which might turn out to be as important as participation of hundreds of students who are interested in a –or sometimes even more important than– the final outcome wide range of areas. Given the huge attainable enrollment rate, it itself. Corrections performed by a human (a teacher/assistant) is almost impossible to suggest complex homework to students and have it carefully corrected and reviewed by a tutor or can also add value to the teacher’s view on how his/her assistant professor. In this paper, we present a software students learn and progress. The teacher’s feedback on a framework that aims at assisting teachers in MOOCs during correction sheet always entails a unique opportunity to correction tasks related to exercises in mathematics and topics improve the learner’s knowledge and build a more robust with some degree of mathematical content. In this spirit, our awareness on the matter they are currently working on. proposal might suit not only maths, but also physics and Exercises in physics deepen this reviewing philosophy and technical subjects. As a test experience, we apply it to 300+ physics homework bulletins from 80+ students. Results show our student-teacher interaction. Keeping an organized and solution can prove very useful in guiding assistant teachers coherent resolution flow is as relevant to the understanding of during correction shifts and is able to mitigate the time devoted the underlying physical phenomena as the final output itself. -

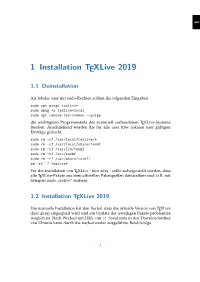

1 Installation Texlive 2019

1 1 Installation TEXLive 2019 1.1 Deinstallation Als lokaler user mit sudo-Rechten sollten die folgenden Eingaben sudo apt purge texlive* sudo dpkg -r texlive-local sudo apt remove tex-common --purge die wichtigsten Programmteile des eventuell vorhandenen TEXLive-Systems loschen.¨ Anschließend werden die fur¨ alle user bzw. lokalen user gultigen¨ Eintrage¨ geloscht.¨ sudo rm -rf /usr/local/texlive/* sudo rm -rf /usr/local/share/texmf sudo rm -rf /var/lib/texmf sudo rm -rf /etc/texmf sudo rm -rf /usr/share/texmf/ rm -rf ~/.texlive* Vor der Installation von TEXLive - hier 2019 - sollte sichergestellt werden, dass alle TEXLive-Pakete aus dem offiziellen Paketquellen deinstalliert sind (z.B. mit Synaptic nach texlive“ suchen). ” 1.2 Installation TEXLive 2019 Die manuelle Installation hat den Vorteil, dass die aktuelle Version von TEXLive (hier 2019) eingespielt wird und ein Update der jeweiligen Pakete problemlos moglich¨ ist. Nach Wechsel mit Hilfe von cd Downloads in den Downloadordner von Ubuntu kann durch die nacheinander ausgefuhrte¨ Befehlsfolge 1 1 1 Installation TEXLive 2019 wget http://mirror.ctan.org/systems/texlive/tlnet/ c ,! install-tl-unx.tar.gz tar -zxvf install-tl-unx.tar.gz cd install-tl-20190503/ sudo ./install-tl -gui der Installationsprozess in die Wege geleitet werden. Zu beachten ist, dass der Wechsel in das Unterverzeichnis mit Hilfe von cd install-tl-20190503/ auch eine andere Nummer (Datumsfolge?) haben kann und dies mit Hilfe der (unvollstandigen)¨ Eingabe von cd install-tl- und anschließender Betatigung¨ der Tabulatortaste automatisch erganzt¨ wird. Die Ubernahme¨ der Voreinstellungen lasst¨ den Installationsvorgang - welcher je nach Internetverbindung von geschatzt¨ einer halben bis zu mehreren Stunden dauern kann - anlaufen. -

Forms of Plagiarism in Digital Mathematical Libraries

Preprint from https://www.gipp.com/pub/ M. Schubotz et al. \Forms of Plagiarism in Digital Mathematical Li- braries". In: Proc. CICM. Czech Republic, July 2019 Forms of Plagiarism in Digital Mathematical Libraries Moritz Schubotz1;2, Olaf Teschke2, Vincent Stange1, Norman Meuschke1, and Bela Gipp1 1 University of Wuppertal, Wuppertal, Germany [email protected] 2 FIZ Karlsruhe / zbMATH, Berlin, Germany [email protected] Abstract. We report on an exploratory analysis of the forms of pla- giarism observable in mathematical publications, which we identified by investigating editorial notes from zbMATH. While most cases we encoun- tered were simple copies of earlier work, we also identified several forms of disguised plagiarism. We investigated 11 cases in detail and evaluate how current plagiarism detection systems perform in identifying these cases. Moreover, we describe the steps required to discover these and potentially undiscovered cases in the future. 1 Introduction Plagiarism is `the use of ideas, concepts, words, or structures without appro- priately acknowledging the source to benefit in a setting where originality is ex- pected' [5, 7]. Plagiarism represents severe research misconduct and has strongly negative impacts on academia and the public. Plagiarized research papers com- promise the scientific process and the mechanisms for tracing and correcting results. If researchers expand or revise earlier findings in subsequent research, papers that plagiarized the original paper remain unaffected. Wrong findings can spread and affect later research or practical applications. Furthermore, academic plagiarism causes a significant waste of resources [6]. Reviewing plagiarized research papers and grant applications causes unnecessary work. For example, Wager [29] quotes a journal editor stating that 10% of the papers submitted to the respective journal suffered from plagiarism of an unac- arXiv:1905.03322v2 [cs.DL] 9 Sep 2019 ceptable extent. -

Augmenting Mathematical Formulae for More Effective Querying & Efficient Presentation

Augmenting Mathematical Formulae for More Effective Querying & Efficient Presentation vorgelegt von Diplom-Physiker Moritz Schubotz geb. in Offenbach am Main von der Fakult¨atIV { Elektrotechnik und Informatik der Technischen Universit¨atBerlin zur Erlangung des akademischen Grades Doktor der Naturwissenschaften { Dr. rer. nat. { genehmigte Dissertation Promotionsausschuss: Vorsitzender: Prof. Dr. Odej Kao Gutachter: Prof. Dr. Volker Markl Gutachter: Prof. Abdou Youssef, PhD Gutachter: Prof. James Pitman, PhD Tag der wissenschaftlichen Aussprache: 31. M¨arz2017 Berlin 2017 ii Abstract Mathematical Information Retrieval (MIR) is a research area that focuses on the Information Need (IN) of the Science, Technology, Engineering and Mathematics (STEM) domain. Unlike traditional Information Retrieval (IR) research, that extracts information from textual data sources, MIR takes mathematical formulae into account as well. This thesis makes three main contributions: 1. It analyses the strengths and weaknesses of current MIR systems and establishes a new MIR task for future evaluations; 2. Based on the analysis, it augments mathematical notation as a foundation for future MIR systems to better fit the IN from the STEM domain; and 3. It presents a solution on how large web publishers can efficiently present math- ematics to satisfy the INs of each individual visitor. With regard to evaluation of MIR systems, it analyses the first international MIR task and proposes the Math Wikipedia Task (WMC). In contrast to other tasks, which evaluate the overall performance of MIR systems based on an IN, that is described by a combination of textual keywords and formulae, WMC was designed to gain insights about the math-specific aspects of MIR systems. In addition to that, this thesis investigates how different factors of similarity measures for mathematical expressions influence the effectiveness of MIR results. -

Mathoid: Robust, Scalable, Fast and Accessible Math Rendering for Wikipedia

Mathoid: Robust, Scalable, Fast and Accessible Math Rendering for Wikipedia Moritz Schubotz1, Gabriel Wicke2 1 Database Systems and Information Management Group, Technische Universit¨atBerlin, Einsteinufer 17, 10587 Berlin, Germany [email protected] 2 Wikimedia Foundation, San Francisco, California, U.S.A. [email protected] Abstract. Wikipedia is the first address for scientists who want to recap basic mathematical and physical laws and concepts. Today, formulae in those pages are displayed as Portable Network Graphics images. Those images do not integrate well into the text, can not be edited after copy- ing, are inaccessible to screen readers for people with special needs, do not support line breaks for small screens and do not scale for high res- olution devices. Mathoid improves this situation and converts formulae specified by Wikipedia editors in a TEX-like input format to MathML, with Scalable Vector Graphics images as a fallback solution. 1 Introduction: browsers are becoming smarter Wikipedia has supported mathematical content since 2003. Formulae are entered in a TEX-like notation and rendered by a program called texvc. One of the first versions of texvc announced the future of MathML support as follows: \As of January 2003, we have TeX markup for mathematical formulas on Wikipedia. It generates either PNG images or simple HTML markup, depending on user prefs and the complexity of the expression. In the future, as more browsers are smarter, it will be able to generate enhanced HTML or even MathML in many cases." [11] Today, more then 10 years later, less than 20% of people visiting the English arXiv:1404.6179v1 [cs.DL] 24 Apr 2014 Wikipedia, currently use browsers that support MathML (e.g., Firefox) [27]. -

Using NLG for Speech Synthesis of Mathematical Sentences

Using NLG for speech synthesis of mathematical sentences Alessandro Mazzei Universita` degli Studi di Torino [email protected] Michele Monticone Cristian Bernareggi Universita` degli Studi di Torino Universita` degli Studi di Torino [email protected] [email protected] Abstract and so it represents both the function application of f to x, and the multiplication of the constant f People with sight impairments can access to for the constant x surrounded by parenthesis. a mathematical expression by using its LATEX source. However, this mechanisms have sev- In this paper we study the design of a natu- eral drawbacks: (1) it assumes the knowledge ral language generation (NLG) system for pro- of the LATEX, (2) it is slow, since LATEX is ver- ducing a mathematical sentence, that is a natural bose and (3) it is error-prone since LATEX is language sentence containing the semantics of a a typographical language. In this paper we mathematical expression. Indeed, humans, when study the design of a natural language genera- have to orally communicate mathematical expres- tion system for producing a mathematical sen- sions, use their most sophisticated communication tence, i.e. a natural language sentence express- ing the semantics of a mathematical expres- technology, that is natural language. However, sion. Moreover, we describe the main results with respect to other domains, the mathematical of a first human based evaluation experiment domain has a number of peculiarities for speech of the system for Italian language. that needed to be accounted for (see Section4). We have three main research goals in this pa- 1 Introduction per. -

Workingwiki: a Mediawiki-Based Platform for Collaborative Research

WorkingWiki: a MediaWiki-based platform for collaborative research Lee Worden McMaster University Hamilton, Ontario, Canada University of California, Berkeley Berkeley, California, United States [email protected] Abstract WorkingWiki is a software extension for the popular MediaWiki platform that makes a wiki into a powerful environment for collaborating on publication-quality manuscripts and software projects. Developed in Jonathan Dushoff’s theoretical biology lab at McMaster University and available as free software, it allows wiki users to work together on anything that can be done by using UNIX commands to transform textual “source code” into output. Researchers can use it to collaborate on programs written in R, python, C, or any other language, and there are special features to support easy work on LATEX documents. It develops the potential of the wiki medium to serve as a combination collaborative text editor, development environment, revision control system, and publishing platform. Its potential uses are open-ended — its processing is controlled by makefiles that are straightforward to customize — and its modular design is intended to allow parts of it to be adapted to other purposes. Copyright c 2011 by Lee Worden ([email protected]). This work is licensed under the Creative Commons Attribution 3.0 license. The human readable license can be found here: http: //creativecommons.org/licenses/by/3.0. 1 Introduction The remarkable success of Wikipedia as a collaboratively constructed repository of human knowledge is strong testimony to the power of the wiki as a medium for online collaboration. Wikis — websites whose content can be edited by readers — have been adopted by great numbers of diverse groups around the world hoping to compile and present their shared knowledge. -

Latex to EPUB a Poor Man's Guide to Publishing Math Ebooks

LATEX to EPUB A poor man’s guide to publishing math ebooks Alberto Pettarin [email protected] A Talk Title, Explained LATEX to EPUB A poor man’s guide to publishing math ebooks A Talk Title, Explained LATEX to EPUB A poor man’s guide to publishing math ebooks I Easy-to-use interface to TEX high-quality typesetter I Semantic-oriented markup language ( chapter Introduction , n f g begin quote ... end quote ) n f g n f g I Widely used in science and engineering A Talk Title, Explained LATEX to EPUB A poor man’s guide to publishing math ebooks I Open ebook standard by IDPF I EPUB 3.0 published on Oct 11 2011 I EPUB file = ZIP[ (X)HTML + CSS + metadata ] I Main format on the market (except Amazon Kindle) A Talk Title, Explained LATEX to EPUB A poor man’s guide to publishing math ebooks I Scientific Technical Medical (STM) contents I Notes, pre-prints, journals, magazines, books, . S I Math notation: single symbols (z, A, Φ, , , ), short expressions 2 ; (y = ax 2 + bx + c), complex formulas: S 1 0 F(r0) Π F(r) = r × dV 0 4πr × jr - r j ZV 0 I BTW, massive business opportunity here. A Talk Title, Explained LATEX to EPUB A poor man’s guide to publishing math ebooks I Goal: LAT X document EPUB ebook E ) I No manual editing of LATEX source files I Easy-to-use, free software tool(chain) A Talk Title, Explained LATEX to EPUB A poor man’s guide to publishing math ebooks I Goal: LAT X document EPUB ebook E ) I No manual editing of LATEX source files I Easy-to-use, free software tool(chain) I BTW, massive business opportunity here. -

Latexml 2012 – a Year of Latexml

LATExml 2012 { A Year of LATExml Deyan Ginev1;2 and Bruce R. Miller2 1 Computer Science, Jacobs University Bremen, Germany 2 National Institute of Standards and Technology Abstract. LATExml, a TEX to XML converter, is being used in a wide range of MKM applications. In this paper, we present a progress report for the 2012 calendar year. Noteworthy enhancements include: increased coverage such as Wikipedia syntax; enhanced capabilities such as embed- dable JavaScript and CSS resources and RDFa support; a web service for remote processing via web-sockets; along with general accuracy and reliability improvements. The outlook for an 0.8.0 release in mid-2013 is also discussed. 1 Introduction LATExml [Mil] is a TEX to XML converter, bringing the well-known author- ing syntax of TEX and LATEX to the world of XML. Not a new face in the MKM crowd, LaTeXML has been adopted in a wide range of MKM applica- tions. Originally designed to support the development of NIST's Digital Library of Mathematical Functions (DLMF), it is now employed in publishing frame- works, authoring suites and for the preparation of a number of large-scale TEX corpora. In this paper, we present a progress report for the 2012 calendar year of LATExml's master and development branches. In 2012, the LATExml Subversion repository saw 30% of the total project commits since 2006. Currently, the two authors maintain a developer and master branch of LATExml, respectively. The main branch contains all mature features of LATExml. 2 Main Development Trunk LATExml's processing model can be broken down into two phases: the basic conversion transforms the TEX/LATEX markup into a LATEX-like XML schema; a post-processing phase converts that XML into the target format, usually some format in the HTML family. -

LATEXML the Manual ALATEX to XML/HTML/MATHML Converter; Version 0.8.5

LATEXML The Manual ALATEX to XML/HTML/MATHML Converter; Version 0.8.5 Bruce R. Miller November 17, 2020 ii Contents Contents iii List of Figures vii 1 Introduction1 2 Using LATEXML 5 2.1 Conversion...............................6 2.2 Postprocessing.............................7 2.3 Splitting................................. 11 2.4 Sites................................... 11 2.5 Individual Formula........................... 13 3 Architecture 15 3.1 latexml architecture........................... 15 3.2 latexmlpost architecture......................... 18 4 Customization 19 4.1 LaTeXML Customization........................ 20 4.1.1 Expansion............................ 20 4.1.2 Digestion............................ 22 4.1.3 Construction.......................... 24 4.1.4 Document Model........................ 27 4.1.5 Rewriting............................ 28 4.1.6 Packages and Options..................... 28 4.1.7 Miscellaneous......................... 29 4.2 latexmlpost Customization....................... 29 4.2.1 XSLT.............................. 30 4.2.2 CSS............................... 30 5 Mathematics 33 5.1 Math Details............................... 34 5.1.1 Internal Math Representation.................. 34 5.1.2 Grammatical Roles....................... 36 iii iv CONTENTS 6 Localization 39 6.1 Numbering............................... 39 6.2 Input Encodings............................. 40 6.3 Output Encodings............................ 40 6.4 Babel.................................. 40 7 Alignments 41 7.1 TEX Alignments............................ -

Omdoc.Sty/Cls: Semantic Markup for Open Mathematical Documents in LATEX∗

omdoc.sty/cls: Semantic Markup for Open Mathematical Documents in LATEX∗ Michael Kohlhase Jacobs University, Bremen http://kwarc.info/kohlhase January 28, 2012 Abstract The omdoc package is part of the STEX collection, a version of TEX/LATEX that allows to markup TEX/LATEX documents semantically without leaving the document format, essentially turning TEX/LATEX into a document format for mathematical knowledge management (MKM). This package supplies an infrastructure for writing OMDoc documents in LATEX. This includes a simple structure sharing mechanism for STEX that allows to to move from a copy-and-paste document development model to a copy-and-reference model, which conserves space and simplifies document management. The augmented structure can be used by MKM systems for added-value services, either directly from the STEX sources, or after transla- tion. ∗Version v1.0 (last revised 2012/01/28) 1 omdoc.dtx 1999 2012-01-28 07:32:11Z kohlhase Contents 1 Introduction 3 2 The User Interface 3 2.1 Package and Class Options . .3 2.2 Document Structure . .3 2.3 Ignoring Inputs . .4 2.4 Structure Sharing . .4 2.5 Colors . .5 3 Miscellaneous 5 4 Limitations 5 5 Implementation: The OMDoc Class 6 5.1 Class Options . .6 5.2 Setting up Namespaces and Schemata for LaTeXML . .7 5.3 Beefing up the document environment . .7 6 Implementation: OMDoc Package 8 6.1 Package Options . .8 6.2 Document Structure . .9 6.3 Front and Backmatter . 10 6.4 Ignoring Inputs . 11 6.5 Structure Sharing . 11 6.6 Colors . 12 6.7 LATEX Commands we interpret differently .