A. Additional Notation for the Appendix; B. Proof of Proposition 4.1; C. Results from Section 5

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Unicode Request for Cyrillic Modifier Letters Superscript Modifiers

Unicode request for Cyrillic modifier letters L2/21-107 Kirk Miller, [email protected] 2021 June 07 This is a request for spacing superscript and subscript Cyrillic characters. It has been favorably reviewed by Sebastian Kempgen (University of Bamberg) and others at the Commission for Computer Supported Processing of Medieval Slavonic Manuscripts and Early Printed Books. Cyrillic-based phonetic transcription uses superscript modifier letters in a manner analogous to the IPA. This convention is widespread, found in both academic publication and standard dictionaries. Transcription of pronunciations into Cyrillic is the norm for monolingual dictionaries, and Cyrillic rather than IPA is often found in linguistic descriptions as well, as seen in the illustrations below for Slavic dialectology, Yugur (Yellow Uyghur) and Evenki. The Great Russian Encyclopedia states that Cyrillic notation is more common in Russian studies than is IPA (‘Transkripcija’, Bol’šaja rossijskaja ènciklopedija, Russian Ministry of Culture, 2005–2019). Unicode currently encodes only three modifier Cyrillic letters: U+A69C ⟨ꚜ⟩ and U+A69D ⟨ꚝ⟩, intended for descriptions of Baltic languages in Latin script but ubiquitous for Slavic languages in Cyrillic script, and U+1D78 ⟨ᵸ⟩, used for nasalized vowels, for example in descriptions of Chechen. The requested spacing modifier letters cannot be substituted by the encoded combining diacritics because (a) some authors contrast them, and (b) they themselves need to be able to take combining diacritics, including diacritics that go under the modifier letter, as in ⟨ᶟ̭̈⟩BA. (See next section and e.g. Figure 18.) In addition, some linguists make a distinction between spacing superscript letters, used for phonetic detail as in the IPA tradition, and spacing subscript letters, used to denote phonological concepts such as archiphonemes.

E and Throat C Entury E S Urgery Head a Ull, M.D

PAGE 03111 02/10/2010 15:50 7084500005 t TURY Et'-lT e and Throat c entury E s urgery Head a Ull, M.D. , MD. er, M.D. ,vf.D. •, M. D. venue j.I 6lJ46i 60·0007 o·noo5

MYRINGOTOMY AND INSERTION OF PE TUBES: Post-Op Instructions

Diet: Children or adults who have received anesthesia may experience some nausea and occasionally vomiting. It is therefore pr[…] to eat a bland, light me[…] or a liquid diet on the first day after the surgery.

Keep ears dry: 1. Keep the ears dry. Do not allow […] the ear after surgery. Plug the ears with a cotton ball and Vaseline […] showering or washing the hair. 2. Although ear plugs and swim […] are reliable, they are not foolproof. These are available in all drugstores. Custom swim mo[…] can be purchased in our office. 3. If accidentally the head is submerged […] or water enters the ear during a shower, use the antibiotic eardrops that were prescribed immediately after the surgery or use a hairdryer to […] the ear.

Medications: Eardrops are usually prescribed for 3 d[…] after the surgery. Do not refrigerate eardrops. Hold bottle in your hand for a few minutes to bring eardrops to body temperature. Cold eardrops cause a brief, but unpleasant vertigo. Following the insertion of PE tubes, there is not much pain; Tylenol should suffice to control any discomfort. Please note the following: If you have eardrops from your pediatr[…] pain such as Auralgan, Tympagesic.

Old Cyrillic in Unicode*

Old Cyrillic in Unicode* Ivan A Derzhanski Institute for Mathematics and Computer Science, Bulgarian Academy of Sciences [email protected] The current version of the Unicode Standard acknowledges the existence of a pre-modern version of the Cyrillic script, but its support thereof is limited to assigning code points to several obsolete letters. Meanwhile mediæval Cyrillic manuscripts and some early printed books feature a plethora of letter shapes, ligatures, diacritic and punctuation marks that want proper representation. (In addition, contemporary editions of mediæval texts employ a variety of annotation signs.) As generally with scripts that predate printing, an obvious problem is the abundance of functional, chronological, regional and decorative variant shapes, the precise details of whose distribution are often unknown. The present contents of the block will need to be interpreted with Old Cyrillic in mind, and decisions to be made as to which remaining characters should be implemented via Unicode’s mechanism of variation selection, as ligatures in the typeface, or as code points in the Private space or the standard Cyrillic block. I discuss the initial stage of this work. The Unicode Standard (Unicode 4.0.1) makes a controversial statement: The historical form of the Cyrillic alphabet is treated as a font style variation of modern Cyrillic because the historical forms are relatively close to the modern appearance, and because some of them are still in modern use in languages other than Russian (for example, U+0406 “I” CYRILLIC CAPITAL LETTER I is used in modern Ukrainian and Byelorussian). Some of the letters in this range were used in modern typefaces in Russian and Bulgarian.

Neurological Soft Signs in Mainstream Pupils Arch Dis Child: First Published As 10.1136/Adc.85.5.371 on 1 November 2001

Arch Dis Child 2001;85:371–374. Neurological soft signs in mainstream pupils. Arch Dis Child: first published as 10.1136/adc.85.5.371 on 1 November 2001. J M Fellick, A P J Thomson, J Sills, C A Hart

Abstract. Aims: (1) To examine the relation between neurological soft signs and measures of cognition, coordination, and behaviour in mainstream schoolchildren. (2) To determine whether high soft sign scores may predict children with significant problems in other areas. Methods: A total of 169 children aged between 8 and 13 years from mainstream schools were assessed. They form part of a larger study into the outcome of meningococcal disease in childhood. Half had previous meningococcal disease and half were controls. Assessment involved measurement of six soft signs followed by assessment of motor skills (movement ABC), cognitive function (WISC-III), and behaviour (Conners’ Rating Scales).

[…] psychiatry. Are there any tests that a paediatrician may use to predict which children have significant problems? Neurological soft signs (NSS) may be defined as minor abnormalities in the neurological examination in the absence of other features of fixed or transient neurological disorder.1 They have been associated with behaviour,12 coordination,3 and learning difficulties.4 Other authors believe they represent a developmental lag rather than a fixed abnormality.5 Studies have found a high incidence of soft signs in children following premature6 or low birthweight7 birth, meningitis,8 and malnutrition.910 There are a number of soft sign batteries published that include tests of sensory function, coordination, motor speed, and abnormal […]

Sacred Concerto No. 6 1 Dmitri Bortniansky Lively Div

Sacred Concerto No. 6 1 Dmitri Bortniansky Lively div. Sla va vo vysh nikh bo gu, sla va vo vysh nikh bo gu, sla va vo Sla va vo vysh nikh bo gu, sla va vo vysh nikh bo gu, 8 Sla va vo vysh nikh bo gu, sla va, Sla va vo vysh nikh bo gu, sla va, 6 vysh nikh bo gu, sla va vovysh nikh bo gu, sla va vovysh nikh sla va vo vysh nikh bo gu, sla va vovysh nikh bo gu, sla va vovysh nikh 8 sla va vovysh nikh bo gu, sla va vovysh nikh bo gu sla va vovysh nikh bo gu, sla va vovysh nikh bo gu 11 bo gu, i na zem li mir, vo vysh nikh bo gu, bo gu, i na zem li mir, sla va vo vysh nikh, vo vysh nikh bo gu, i na zem 8 i na zem li mir, i na zem li mir, sla va vo vysh nikh, vo vysh nikh bo gu, i na zem i na zem li mir, i na zem li mir 2 16 inazem li mir, sla va vo vysh nikh, vo vysh nikh bo gu, inazem li mir, i na zem li li, i na zem li mir, sla va vo vysh nikh bo gu, i na zem li 8 li, inazem li mir, sla va vo vysh nikh, vo vysh nikh bo gu, i na zem li, ina zem li mir, vo vysh nikh bo gu, i na zem li 21 mir, vo vysh nikh bo gu, vo vysh nikh bo gu, i na zem li mir, i na zem li mir, vo vysh nikh bo gu, vo vysh nikh bo gu, i na zem li mir, i na zem li 8 mir, i na zem li mir, i na zem li mir, i na zem li, i na zem li mir,mir, i na zem li mir, i na zem li mir, inazem li, i na zem li 26 mir, vo vysh nikh bo gu, i na zem li mir. -

HYMNS CURRICULUM LEVEL 2 – First Edition – June 2017

HYMNS CURRICULUM LEVEL 2 – First Edition – June 2017 Under the Auspices of H.G. Bishop David, Bishop of New York and New England Curriculum for Learning Coptic Hymns Drafted by the Diocesan Subcommittee for Hymns in the Coptic Orthodox Diocese of New York and New England Hymns Syllabus - Level 2 Vespers/Matins • Tenouwst • Amwini • Wounia] • Tenjoust Long • Amyn Kurie eleycon • Allylouia (Psalm Response) and Tawwaf Liturgy • Je `vmeui • Tai soury • }soury • Pihmot gar • <ere ne Maria • Tenjoust (Gregorian) • Anavora (Gregorian) • Kurie eleycon (Gregorian) • Bwl ebol • Amyn Fraction (both ways) • Ic o panagioc Patyr Praises • Pihwc `nhouit • Qen ouswt • Marenouwnh • Ari'alin • Tenoueh `ncwk • Pihwc `mmahftoou • Loipon (Short) • };eotokia Sunday Seasonal • }par;enoc/Ariprecbeuin • Eferanaf • "alia Batoc ie Adam <oiak • Pijinmici • Je peniwt • Apen[oic Holy Week & Holy 50 Days • Ebolqen • Ouka],ycic • Eulogite • Ke upertou (Short) • <rictoc Anecty • Ton cunanar,on Glorification • Kcmarwout • <ere :eotoke • Agioc ictin • Cena `tso • Vai pe `vlumen • Qen `Vran Papal • Kalwc Deacon Responses • Twbh `ejen nenio] (Litany of the Departed) • Twbh `ejen nenio] (Litany of the Sick) • Twbh `ejen nenio] (Litany of the Travelers) • Twbh `ejen ny`etfi (Litany of the Oblations) Hymns for Vespers and Matins Introduction to the Verses of Cymbals for Adam Days يارب ارحم: @Lord have mercy: Kurie ele`ycon تعالوا فلنسجد، للثالوث @O come let us worship, the `Amwini marenouwst القدوس، الذي هو ال اب Holy Trinity, the Father and `n}`triac e;ouab@ `ete `Viwt والبن، والروح القدس.

Language Specific Peculiarities Document for Halh Mongolian As Spoken in MONGOLIA

Language Specific Peculiarities Document for Halh Mongolian as Spoken in MONGOLIA Halh Mongolian, also known as Khalkha (or Xalxa) Mongolian, is a Mongolic language spoken in Mongolia. It has approximately 3 million speakers. 1. Special handling of dialects There are several Mongolic languages or dialects which are mutually intelligible. These include Chakhar and Ordos Mongol, both spoken in the Inner Mongolia region of China. Their status as separate languages is a matter of dispute (Rybatzki 2003). Halh Mongolian is the only Mongolian dialect spoken by the ethnic Mongolian majority in Mongolia. Mongolian speakers from outside Mongolia were not included in this data collection; only Halh Mongolian was collected. 2. Deviation from native-speaker principle No deviation: only native speakers of Halh Mongolian in Mongolia were collected. 3. Special handling of spelling None. 4. Description of character set used for orthographic transcription Mongolian has historically been written in a large variety of scripts. A Latin alphabet was introduced in 1941, but is no longer current (Grenoble 2003). Today, the classic Mongolian script is still used in Inner Mongolia, but the official standard spelling of Halh Mongolian uses Mongolian Cyrillic. This is also the script used for all educational purposes in Mongolia, and therefore the script which was used for this project. It consists of the standard Cyrillic range (Ux0410-Ux044F, Ux0401, and Ux0451) plus two extra characters, Ux04E8/Ux04E9 and Ux04AE/Ux04AF (see also the table in Section 5.1). 5. Description of Romanization scheme The table in Section 5.1 shows Appen's Mongolian Romanization scheme, which is fully reversible.

Once, a Rather Long Time Ago, a Very Good Doctor Told Us, "Never Go

Once, a rather long time ago, a very good doctor told us, "Never go to sleep tired. Exercise until you have released tensions, cleansing muscles and tissues of all fatigue poisons. Otherwise you probably will wake still tired." Many times we have had an opportunity to verify the truth of this statement that if you fall asleep tense and tired you usually wake much the same way. But this doctor's advice always seemed too stern for us to follow. When we are tired we simply do not exercise! Many years later - just the other day in fact - we found a solution to the problem, at ECKERTS' of all places! Solution is the Rx Lounge, contour chair with a patented construction that enables you, with a minimum of effort, to exercise […]

[…] muscles in the very process of stretching and relaxing, we miss our guess! We haven't had a chance to prove this theory but a woman who has tells us she took off two inches in a very short time. Another advantage offered by the Rx Lounge is the so-called beauty or body slant, known to all fashionable salons where complete beauty culture is practiced. This is a very simple way of relaxing the whole body by placing the head lower than the feet at the proper angle. The beauty slant straightens the spine ... frees feet and legs from the continual pull of gravity, releasing congestions in tissues and blood stream ... gives abdominal muscles a lift and allows the blood to flow easily, without strain on the heart, to face, throat, chin and shoulders.

1 Phün Tsok Ge Lek Che Wai Trün Pey Ku Thar

SONGS OF SPIRITUAL EXPERIENCE - Condensed Points of the Stages of the Path - lam rim nyams mgur - by Je Tsongkapa 1 PHÜN TSOK GE LEK CHE WAI TRÜN PEY KU THAR YE DRO WAI RE WA KONG WEY SUNG MA LÜ SHE JA JI ZHIN ZIK PEY THUK SHA KYEY TSO WO DE LA GO CHAK TSEL Your body is created from a billion perfect factors of goodness; Your speech satisfies the yearnings of countless sentient beings; Your mind perceives all objects of knowledge exactly as they are – I bow my head to you O chief of the Shakya clan. 2 DA ME TÖN PA DE YI SE KYI CHOK GYAL WAI DZE PA KÜN GYI KUR NAM NE DRANG ME ZHING DU TRÜL WAI NAM RÖL PA MI PAM JAM PAI YANG LA CHAK TSEL LO You’re the most excellent sons of such peerless teacher; You carry the burden of the enlightened activities of all conquerors, And in countless realms you engage in ecstatic display of emanations – I pay homage to you O Maitreya and Manjushri. 3 SHIN TU PAK PAR KAR WA GYAL WAI YUM JI ZHIN GONG PA DREL DZE DZAM LING GYEN LU DRUB THOK ME CHE NI SA SUM NA YONG SU TRAK PEY ZHAB LA DAG CHAK TSEL So difficult to fathom is the mother of all conquerors, You who unravel its contents as it is are the jewels of the world; You’re hailed with great fame in all three spheres of the world – I pay homage to you O Nagarjuna and Asanga. -

Marking the Grave of Lincoln's Mother

Bulletin of the Lincoln National Life Foundation. Dr. Louis A. Warren, Editor. Published each week by The Lincoln National Life Insurance Company, of Fort Wayne, Indiana. No. 218, Fort Wayne, Indiana, June 12, 1933.

MARKING THE GRAVE OF LINCOLN'S MOTHER

The annual observance of Memorial Day with appropriate exercises at the grave of Nancy Hanks Lincoln invites a continually increasing number of people to attend the ceremonies each year. This fact suggests that the marking of the burial place of Lincoln's mother is a story which should be preserved. While it is difficult to verify some of the early traditions mentioning markers used at the grave, the accounts of the more formal attempts to honor the president's mother are available.

Original Markers

[…] A letter which Mr. P. E. Studebaker of South Bend wrote to Governor Mount on June 11, 1897, states that he read of the neglected condition of the grave in a newspaper, and, at the suggestion of Schuyler Colfax, "I caused a modest slab to be placed over the grave, and at the same time friends placed an iron fence around the lot ... I have never myself visited the spot." Truman S. Gilkey, the postmaster at Rockport, acted as agent for Mr. Studebaker in purchasing the marker. Alfred H. Yates, the local monument worker, secured the stone from W.

TLD: Сайт Script Identifier: Cyrillic Script Description: Cyrillic Unicode (Basic, Extended-A and Extended-B) Version: 1.0 Effective Date: 02 April 2012

TLD: сайт
Script Identifier: Cyrillic
Script Description: Cyrillic Unicode (Basic, Extended-A and Extended-B)
Version: 1.0
Effective Date: 02 April 2012
Registry: сайт Registry Contact: Iliya Bazlyankov <[email protected]>
Tel: +359 8 9999 1690
Website: http://www.corenic.org

This document presents a character table used by сайт Registry for IDN registrations in Cyrillic script. The policy disallows IDN variants, but prevents registration of names with potentially similar characters to avoid any user confusion.

U+002D;U+002D # HYPHEN-MINUS -;-
U+0030;U+0030 # DIGIT ZERO 0;0
U+0031;U+0031 # DIGIT ONE 1;1
U+0032;U+0032 # DIGIT TWO 2;2
U+0033;U+0033 # DIGIT THREE 3;3
U+0034;U+0034 # DIGIT FOUR 4;4
U+0035;U+0035 # DIGIT FIVE 5;5
U+0036;U+0036 # DIGIT SIX 6;6
U+0037;U+0037 # DIGIT SEVEN 7;7
U+0038;U+0038 # DIGIT EIGHT 8;8
U+0039;U+0039 # DIGIT NINE 9;9
U+0430;U+0430 # CYRILLIC SMALL LETTER A а;а
U+0431;U+0431 # CYRILLIC SMALL LETTER BE б;б
U+0432;U+0432 # CYRILLIC SMALL LETTER VE в;в
U+0433;U+0433 # CYRILLIC SMALL LETTER GHE г;г
U+0434;U+0434 # CYRILLIC SMALL LETTER DE д;д
U+0435;U+0435 # CYRILLIC SMALL LETTER IE е;е
U+0436;U+0436 # CYRILLIC SMALL LETTER ZHE ж;ж
U+0437;U+0437 # CYRILLIC SMALL LETTER ZE з;з
U+0438;U+0438 # CYRILLIC SMALL LETTER I и;и
U+0439;U+0439 # CYRILLIC SMALL LETTER SHORT I й;й
U+043A;U+043A # CYRILLIC SMALL LETTER KA к;к
U+043B;U+043B # CYRILLIC SMALL LETTER EL л;л
U+043C;U+043C # CYRILLIC SMALL LETTER EM м;м
U+043D;U+043D # CYRILLIC SMALL LETTER EN н;н
U+043E;U+043E # CYRILLIC SMALL LETTER O о;о
U+043F;U+043F

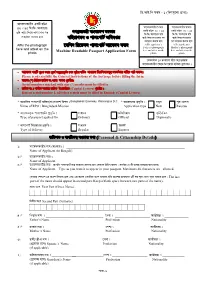

MRP Application Form

¢X.BC.¢f glj - 1 (¢he¡j¨−mÉ fÊ¡fÉ) A¡−hceL¡l£l HL¢V l¢Pe 55 ^ 45 ¢jx¢jx A¡L¡−ll A¡−hceL¡l£l ¢fa¡l A¡−hceL¡l£l j¡a¡l NZfÊS¡a¿»£ h¡wm¡−cn plL¡l HL¢V l¢Pe 30 ^ 25 HL¢V l¢Pe 30 ^ 25 R¢h A¡W¡ ¢c−u m¡N¡−e¡l fl ¢jx¢jx A¡L¡−ll R¢h ¢jx¢jx A¡L¡−ll R¢h paÉ¡ue Ll−a q−h h¢ql¡Nje J f¡p−f¡VÑ A¢dcçl A¡W¡ ¢c−u m¡N¡−e¡l fl A¡W¡ ¢c−u m¡N¡−e¡l paÉ¡ue Ll−a q−h fl paÉ¡ue Ll−a q−h Affix the photograph Affix applicant’s Affix applicant’s −j¢ne ¢l−Xhm f¡p−f¡VÑ A¡−hce glj Father’s photograph Mother’s photograph here and attest on the Machine Readable Passport Application Form here and attest on the here and attest on the photo photo photo −Lhmj¡œ 15 hvp−ll e£−Q AfË¡çhuú A¡−hceL¡l£l −r−œ Ef−l¡š² R¢hàu fË−u¡Se z • A¡−hce fœ¢V f¨lZ Ll¡l f¨−hÑ Ae¤NÊqf§hÑL −no fªù¡u h¢ZÑa p¡d¡le ¢e−cÑne¡pj§q paLÑa¡l p¢qa f¡W Ll²ez Please read carefully the General Instructions at the last page before filling the form. • a¡lL¡ (*) ¢Q¢q²a œ²¢jL ew …−m¡ AhnÉ f¨lZ£uz Serial numbers marked with star (*) marks must be filled in.