Interaction Techniques for Finger Sensing Input Devices

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

Using Action-Level Metrics to Report the Performance of Multi-Step Keyboards

Using Action-Level Metrics to Report the Performance of Multi-Step Keyboards. Gulnar Rakhmetulla and Ahmed Sabbir Arif (Human-Computer Interaction Group, University of California, Merced), Steven J. Castellucci (Independent Researcher, Toronto), I. Scott MacKenzie (Electrical Engineering and Computer Science, York University), Caitlyn E. Seim (Mechanical Engineering, Stanford University).

Abstract: Computer users commonly use multi-step text entry methods on handheld devices and as alternative input methods for accessibility. These methods are also commonly used to enter text in languages with large alphabets or complex writing systems. These methods require performing multiple actions simultaneously (chorded) or in a specific sequence (constructive) to produce input. However, evaluating these methods is difficult since traditional performance metrics were designed explicitly for non-ambiguous, uni-step methods (e.g., QWERTY). They fail to capture the actual performance of

Table 1: Conventional error rate (ER) and action-level unit error rate (UnitER) for a constructive method (Morse code). This table illustrates the phenomenon of using Morse code to enter “quickly” with one character error (“l”) in each attempt. ER is 14.28% for each attempt. One of our proposed action-level metrics, UnitER, gives a deeper insight by accounting for the entered input sequence. It yields an error rate of 7.14% for the first attempt (with two erroneous dashes), and an improved error rate of 3.57% for the second attempt (with only one erroneous dash). The action-level metric shows that learning has occurred with a minor improvement in error rate, but this phenomenon is omitted in the ER metric, which is the same for both attempts.
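The percentages quoted in the excerpt above can be checked with a few lines of arithmetic. The sketch below is not the authors' implementation: it assumes UnitER is the mean, over the characters of the word, of the fraction of wrong actions within each character, and it counts each erroneous dash in "l" as one wrong action out of that letter's four Morse symbols. The function names `error_rate` and `unit_error_rate` are hypothetical. Under those assumptions the numbers match the excerpt.

```python
# Standard International Morse code for the letters of "quickly".
MORSE = {"q": "--.-", "u": "..-", "i": "..", "c": "-.-.",
         "k": "-.-", "l": ".-..", "y": "-.--"}


def error_rate(wrong_chars: int, total_chars: int) -> float:
    """Conventional character-level error rate (ER), in percent."""
    return 100.0 * wrong_chars / total_chars


def unit_error_rate(word: str, wrong_actions: dict[str, int]) -> float:
    """Assumed UnitER, in percent: the mean over characters of
    (wrong actions in the character / actions in the character)."""
    per_unit = [wrong_actions.get(ch, 0) / len(MORSE[ch]) for ch in word]
    return 100.0 * sum(per_unit) / len(per_unit)


word = "quickly"
er = error_rate(1, len(word))              # one wrong character out of seven
first = unit_error_rate(word, {"l": 2})    # two wrong actions in "l"
second = unit_error_rate(word, {"l": 1})   # one wrong action in "l"
print(f"ER: {er:.2f}%  UnitER attempt 1: {first:.2f}%  attempt 2: {second:.2f}%")
```

ER is 100/7 for both attempts (the excerpt quotes it as 14.28%), while the assumed UnitER comes out at 50/7, about 7.14%, for the first attempt and 25/7, about 3.57%, for the second. The character-level metric cannot tell the two attempts apart, whereas the action-level one can.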

Senorita: a Chorded Keyboard for Sighted, Low Vision, and Blind

Senorita: A Chorded Keyboard for Sighted, Low Vision, and Blind Mobile Users. Gulnar Rakhmetulla and Ahmed Sabbir Arif (Human-Computer Interaction Group, University of California, Merced, Merced, CA, United States).

Figure 1: With Senorita, the left four keys are tapped with the left thumb and the right four keys are tapped with the right thumb. Tapping a key enters the letter in the top label. Tapping two keys simultaneously (chording) enters the common letter between the keys in the bottom label. The chord keys are arranged on opposite sides. Novices can tap a key to see the chords available for that key. This figure illustrates a novice user typing the word “we”. She scans the keyboard from the left and finds ‘w’ on the ‘I’ key. She taps on it with her left thumb to see all chords for that key on the other side, from the edge: ‘C’, ‘F’, ‘W’, and ‘X’ (the same letters in the bottom label of the ‘I’ key). She taps on ‘W’ with her right thumb to form a chord to enter the letter. The next letter ‘e’ is one of the most frequent letters in English, thus has a dedicated key. She taps on it with her left thumb to complete the word.

Abstract: Senorita is a novel two-thumb virtual chorded keyboard for mobile devices. It arranges the letters on eight keys in a single

Introduction: Virtual Qwerty augmented with linguistic and behavioral models has become the dominant input method for mobile devices.

Gesture Typing on Smartwatches Using a Segmented QWERTY Around the Bezel

SwipeRing: Gesture Typing on Smartwatches Using a Segmented QWERTY Around the Bezel. Gulnar Rakhmetulla and Ahmed Sabbir Arif (Human-Computer Interaction Group, University of California, Merced).

Figure 1: SwipeRing arranges the standard QWERTY layout around the edge of a smartwatch in seven zones. To enter a word, the user connects the zones containing the target letters by drawing gestures on the screen, like gesture typing on a virtual QWERTY. A statistical decoder interprets the input and enters the most probable word. A suggestion bar appears to display other possible words. The user could stroke right or left on the suggestion bar to see additional suggestions. Tapping on a suggestion replaces the last entered word. One-letter and out-of-vocabulary words are entered by repeated strokes from/to the zones containing the target letters, in which case the keyboard first enters the two one-letter words in the English language (see the second last image from the left), then the other letters in the sequence in which they appear in the zones (like multi-tap). Users could also repeatedly tap (instead of stroke) on the zones to enter the letters. The keyboard highlights the zones when the finger enters them and traces all finger movements. This figure illustrates the process of entering the phrase “the world is a stage” on the SwipeRing keyboard (upper sequence) and on a smartphone keyboard (bottom sequence). We can clearly see the resemblance of the gestures.

Abstract: Most text entry techniques for smartwatches require repeated taps to enter one word, occupy most of the screen, or use layouts that are

Introduction (fragment): player or rejecting a phone call), checking notifications on incoming text messages and social media posts, and using them as fitness trackers to record daily physical activity.

Clavier DUCK

Clavier DUCK: Using a Word Deduction System to Facilitate Text Entry on Touchscreens for Blind Users. Philippe Roussille, Mathieu Raynal, Christophe Jouffrais (Université de Toulouse & CNRS; IRIT; F31 062 Toulouse, France).

To cite this version: Philippe Roussille, Mathieu Raynal, Christophe Jouffrais. Clavier DUCK : Utilisation d'un système de déduction de mots pour faciliter la saisie de texte sur écran tactile pour les non-voyants. 27ème conférence francophone sur l'Interaction Homme-Machine, Oct 2015, Toulouse, France. ACM, IHM-2015, pp.a19, 2015, <10.1145/2820619.2820638>. <hal-01218748>

HAL Id: hal-01218748. https://hal.archives-ouvertes.fr/hal-01218748. Submitted on 21 Oct 2015.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Abstract (translated from French, truncated): The use of touchscreens and in particular

Introduction (translated from French, fragment): providing software keyboards that are displayed on these touch surfaces.

Designing a Better Touch Keyboard

Designing a Better Touch Keyboard. Joel Besada. DD143X Degree Project in Computer Science, First Level. KTH Royal Institute of Technology, School of Computer Science and Communication. Supervisor: Anders Askenfelt. Examiner: Mårten Björkman.

Abstract: The growing market of smartphone and tablet devices has made touch screens a big part of how people interact with technology today. The standard virtual keyboard used on these devices is based on the computer keyboard, a design that may not be very well suited for touch screens. This study sought to answer whether there are alternative ways to design virtual touch keyboards. An alternative design may require training to use, but the user should be able to use it viably after a reasonable amount of practice. Optimally, the user should also have the potential to reach a higher level of efficiency than what is possible on the standard touch keyboard design. Analysis was done by researching current and previous attempts at alternative keyboard designs, and by implementing a prototype based on analysis of the researched designs. The thesis concludes that there is an interest in solving issues encountered in this area, and that there is good potential in building viable virtual keyboards using alternative design paradigms.

Referat (Swedish abstract, translated): Designing a better touch keyboard. The growing market for smartphones and tablets has made the touch screen a big part of how people interact with today's technology. The standard virtual keyboard used on these devices is based on the computer keyboard, a design that may not be particularly well suited to touch screens.

Mobile Devices Developers Guide

Mobile Devices Developers Guide. 8000271-001 Rev. A, April 2015.

No part of this publication may be reproduced or used in any form, or by any electrical or mechanical means, without permission in writing from Zebra. This includes electronic or mechanical means, such as photocopying, recording, or information storage and retrieval systems. The material in this manual is subject to change without notice. The software is provided strictly on an “as is” basis. All software, including firmware, furnished to the user is on a licensed basis. Zebra grants to the user a non-transferable and non-exclusive license to use each software or firmware program delivered hereunder (licensed program). Except as noted below, such license may not be assigned, sublicensed, or otherwise transferred by the user without prior written consent of Zebra. No right to copy a licensed program in whole or in part is granted, except as permitted under copyright law. The user shall not modify, merge, or incorporate any form or portion of a licensed program with other program material, create a derivative work from a licensed program, or use a licensed program in a network without written permission from Zebra. The user agrees to maintain Zebra’s copyright notice on the licensed programs delivered hereunder, and to include the same on any authorized copies it makes, in whole or in part. The user agrees not to decompile, disassemble, decode, or reverse engineer any licensed program delivered to the user or any portion thereof. Zebra reserves the right to make changes to any software or product to improve reliability, function, or design.

Predicting and Reducing the Impact of Errors in Character-Based Text Entry

Predicting and Reducing the Impact of Errors in Character-Based Text Entry. Ahmed Sabbir Arif. A dissertation submitted to the Faculty of Graduate Studies in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Graduate Program in Computer Science and Engineering, York University, Toronto, Ontario, April 2014. © Ahmed Sabbir Arif, 2014.

Abstract: This dissertation focuses on the effect of errors in character-based text entry techniques. The effect of errors is targeted from theoretical, behavioral, and practical standpoints. This document starts with a review of the existing literature. It then presents results of a user study that investigated the effect of different error correction conditions on popular text entry performance metrics. Results showed that the way errors are handled has a significant effect on all frequently used error metrics. The outcomes also provided an understanding of how users notice and correct errors. Building on this, the dissertation then presents a new high-level and method-agnostic model for predicting the cost of error correction with a given text entry technique. Unlike the existing models, it accounts for both human and system factors and is general enough to be used with most character-based techniques. A user study verified the model through measuring the effects of a faulty keyboard on text entry performance. The work then explores the potential user adaptation to a gesture recognizer’s misrecognitions in two user studies. Results revealed that users gradually adapt to misrecognition errors by replacing the erroneous gestures with alternative ones, if available. Also, users adapt to a frequently misrecognized gesture faster if it occurs more frequently than the other error-prone gestures.

Me310a 2003-2004

ME310 2003-2004: Optimum Human Machine Interface for the IT Generation. "all great design firms started in someone’s garage". Department of Mechanical Engineering, School of Engineering, Stanford University, Stanford CA 94305. Tori L. Bailey, David P. Fries, Philipp L. Skogstad. ME 310 – YTPD Garage, June 7, 2004. Toyota Info Technology Center U.S.A.

1 Front Matter

1.1 Executive Summary

Driving the vehicle has been the primary task of the driver since the automobile was invented over 100 years ago. Yet as technology increasingly invades the car, the driver is required to divert attention to managing secondary (non-driving) tasks created by these technologies. The goal of the Toyota project is to investigate the types of secondary tasks that might be available to the IT Generation in future vehicles, and to design an interface that will allow the driver to accomplish these secondary tasks safely. The design team, a collaboration between Stanford University and the Tokyo Metropolitan Institute of Technology (TMIT), has explored many ideas for future vehicle functions and determined that most involve the idea of “connectedness.” The teams are focusing on improving this in-car “connectedness” by designing an interface system that allows drivers to create text while driving safely. The system consists of four components: a text input device, a logic core (software), an output device, and a test vehicle for collecting user data.

Fig. 1: The Optimum Human Machine Interface for text entry while driving.

Computer-Enhanced and Mobile-Assisted Language Learning: Emerging Issues and Trends

Computer-Enhanced and Mobile-Assisted Language Learning: Emerging Issues and Trends. Felicia Zhang, University of Canberra, Australia.

Senior Editorial Director: Kristin Klinger. Director of Book Publications: Julia Mosemann. Editorial Director: Lindsay Johnston. Acquisitions Editor: Erika Carter. Development Editor: Hannah Abelbeck. Production Editor: Sean Woznicki. Typesetters: Lisandro Gonzalez, Chris Shearer, Mackenzie Snader, Milan Vracarich. Print Coordinator: Jamie Snavely. Cover Design: Nick Newcomer.

Published in the United States of America by Information Science Reference (an imprint of IGI Global), 701 E. Chocolate Avenue, Hershey PA 17033. Tel: 717-533-8845. Fax: 717-533-8661. Web site: http://www.igi-global.com

Copyright © 2012 by IGI Global. All rights reserved. No part of this publication may be reproduced, stored or distributed in any form or by any means, electronic or mechanical, including photocopying, without written permission from the publisher. Product or company names used in this set are for identification purposes only. Inclusion of the names of the products or companies does not indicate a claim of ownership by IGI Global of the trademark or registered trademark.

Library of Congress Cataloging-in-Publication Data: Computer-enhanced and mobile-assisted language learning: emerging issues and trends / Felicia Zhang, editor. p. cm. Includes bibliographical references and index. Summary: “This book presents the latest research into computer-enhanced language learning as well as the integration of mobile devices into new language acquisition” (provided by publisher). ISBN 978-1-61350-065-1; ISBN 978-1-61350-066-8 (ebook); ISBN 978-1-61350-067-5 (print & perpetual access). 1. Language and languages--Computer-assisted instruction.

MIDI in Samplitude: MIDI Options; Import, Record, Edit; MIDI Object Editor (Ctrl + O)

Copyright

This documentation is protected by copyright law. All rights, especially rights to reproduction, distribution, and to the translation, are reserved. No part of this publication may be reproduced in form of copies, microfilms or other processes, or transmitted into a language used for machines, especially data processing machines, without the express written consent of the publisher. MAGIX® and Samplitude® are registered trademarks of MAGIX AG. ASIO & VST are registered trademarks of Steinberg Media Technologies GmbH. All other mentioned product names are trademarks of their respective owners. Errors and changes to the contents as well as program modifications reserved. This product uses MAGIX patented technology (USP 6518492) and MAGIX patent pending technology. Copyright © MAGIX AG, 1990-2009. All rights reserved.

Welcome

Thank you for choosing Samplitude! You now have in your possession one of the most successful all-around solutions for professional audio editing. As a PC-based Digital Audio Workstation (DAW), this software supplies comprehensive application options for recording, editing, mixing, media authoring, and mastering. The current version was developed in close collaboration with musicians, sound engineers, producers, and users. It has lots of innovative functions and incorporates the comprehensive and advanced development of tried and tested performance features: unique functionality and sound neutrality, outstanding cutting and editing options, perfect CD/DVD mastering, and the flexible customization of individual workflows. There are various tools available to you for every work step, all completely in 32-bit and available at up to 384 kHz. Outstanding sound based on highly-developed digital algorithms, absolute phase stability, and the universal use of floating-point calculations belong to the professional standard boasted by Samplitude.

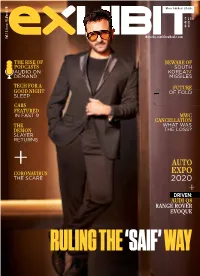

Audi Q8 Range Rover Evoque Ruling the ‘Saif’ Way

Where Tech Meets Lifestyle. Vol. 15, Issue 10, March 2020. ₹ 150, € 2, $ 2.

Cover stories: The Rise of Podcasts, Audio on Demand; Beware of South Korea‘n’ Missiles; Tech for a Good Night Sleep; Future of Fold; Cars Featured in Fast 9; MWC Cancellation, What Was the Loss?; The Demon Slayer Returns; Auto Expo 2020; Coronavirus, the Scare; Driven: Audi Q8, Range Rover Evoque; Ruling the ‘Saif’ Way.