Download the Transcript


Recommended publications

Traditional Institutions, Social Change, and Same-Sex Marriage

WAX.DOC 10/5/2005 1:41 PM The Conservative’s Dilemma: Traditional Institutions, Social Change, and Same-Sex Marriage AMY L. WAX* I. INTRODUCTION What is the meaning of marriage? The political fault lines that have emerged in the last election on the question of same-sex marriage suggest that there is no consensus on this issue. This article looks at the meaning of marriage against the backdrop of the same-sex marriage debate. Its focus is on the opposition to same-sex marriage. Drawing on the work of some leading conservative thinkers, it investigates whether a coherent, secular case can be made against the legalization of same-sex marriage and whether that case reflects how opponents of same-sex marriage think about the issue. In examining these questions, the article seeks more broadly to achieve a deeper understanding of the place of marriage in social life and to explore the implications of the recent controversy surrounding its reform. * Professor of Law, University of Pennsylvania Law School. One striking aspect of the debate over the legal status of gay relationships is the contrast between public opinion, which is sharply divided, and what is written about the issue, which is more one-sided. A prominent legal journalist stated to me recently, with grave certainty, that there exists not a single respectable argument against the legal recognition of gay marriage. The opponents’ position is, in her word, a “nonstarter.” That viewpoint is reflected in discussions of the issue that appear in the academic literature. -

President Biden Appeals for Unity He Faces a Confluence of Crises Stemming from Pandemic, Insurrection & Race by BRIAN A

V26, N21 Thursday, Jan. 21, 2021 President Biden appeals for unity He faces a confluence of crises stemming from pandemic, insurrection & race By BRIAN A. HOWEY INDIANAPOLIS – In what remains a crime scene from the insurrection on Jan. 6, President Joe Biden took the oath of office at the U.S. Capitol Wednesday, appealing to all Americans for “unity” and the survival of the planet’s oldest democracy. “We’ve learned again that democracy is precious,” Biden said shortly before noon Wednesday after taking the oath of office from Chief Justice John Roberts. “Democracy is fragile. And at this hour, my friends, democracy has prevailed.” His words of assurance came four years to the day since President Trump delivered his dystopian “American carnage” address, coming on the heels of his Republican National Convention speech in Cleveland in July 2016 when he declared in strongman fashion, “I alone can fix it.” When Trump fitfully turned the reins over to Biden without ever acknowledging the latter’s victory, it came after the Capitol insurrection on Jan. 6 that Senate Minority Leader Mitch McConnell said he had “provoked,” leading to an unprecedented second impeachment. It came with (Continued on page 3) Biden’s critical challenge By BRIAN A. HOWEY INDIANAPOLIS – Here is the most critical challenge facing President Biden: Vaccinate as many of the 320 million Americans as soon as possible. While the Trump administration’s Operation Warp Speed helped develop the COVID-19 vaccine in record time, most of the manufactured doses haven’t been injected into the arms of Americans. “Hoosiers have risen to meet these unprecedented challenges. The state of our state is resilient -

Deception, Disinformation, and Strategic Communications: How One Interagency Group Made a Major Difference by Fletcher Schoen and Christopher J

STRATEGIC PERSPECTIVES 11 Deception, Disinformation, and Strategic Communications: How One Interagency Group Made a Major Difference by Fletcher Schoen and Christopher J. Lamb Center for Strategic Research Institute for National Strategic Studies National Defense University The Institute for National Strategic Studies (INSS) is National Defense University’s (NDU’s) dedicated research arm. INSS includes the Center for Strategic Research, Center for Complex Operations, Center for the Study of Chinese Military Affairs, Center for Technology and National Security Policy, Center for Transatlantic Security Studies, and Conflict Records Research Center. The military and civilian analysts and staff who comprise INSS and its subcomponents execute their mission by conducting research and analysis, publishing, and participating in conferences, policy support, and outreach. The mission of INSS is to conduct strategic studies for the Secretary of Defense, Chairman of the Joint Chiefs of Staff, and the Unified Combatant Commands in support of the academic programs at NDU and to perform outreach to other U.S. Government agencies and the broader national security community. Cover: Kathleen Bailey presents evidence of forgeries to the press corps. Credit: The Washington Times Deception, Disinformation, and Strategic Communications: How One Interagency Group Made a Major Difference By Fletcher Schoen and Christopher J. Lamb Institute for National Strategic Studies Strategic Perspectives, No. 11 Series Editor: Nicholas Rostow National Defense University Press Washington, D.C. June 2012 Opinions, conclusions, and recommendations expressed or implied within are solely those of the contributors and do not necessarily represent the views of the Defense Department or any other agency of the Federal Government. -

McCarthyism and the Big Lie

University of Central Florida STARS PRISM: Political & Rights Issues & Social Movements 1-1-1953 McCarthyism and the big lie Milton Howard Find similar works at: https://stars.library.ucf.edu/prism University of Central Florida Libraries http://library.ucf.edu This Book is brought to you for free and open access by STARS. It has been accepted for inclusion in PRISM: Political & Rights Issues & Social Movements by an authorized administrator of STARS. For more information, please contact [email protected]. Recommended Citation Howard, Milton, "McCarthyism and the big lie" (1953). PRISM: Political & Rights Issues & Social Movements. 45. https://stars.library.ucf.edu/prism/45 McCARTHYISM AND THE BIG LIE By MILTON HOWARD A man is standing on the steps of the U.S. Treasury Building in downtown New York. He is shouting his contempt for any American who believes we can have peace in this world. He says such Americans are "Kremlin dupes." He derides them as "egg-heads and appeasers." The speaker is Joe McCarthy, U.S. Senator from Wisconsin. He holds the crowd's attention. He has studied the trade of lashing hysteria into an audience. Five years ago, he was a little known politician. Today, his name has become known throughout the world. He has baptized the political fact known as McCarthyism. Americans began to get a whiff of this new fact when millions suddenly faced what is now known as "The Reign of Fear." Three years ago, the President of the U.S.A. startled the world. He said that the American citizens of the state of Wisconsin were afraid. -

Marx and Mendacity: Can There Be a Politics Without Hypocrisy?

Analyse & Kritik 01+02/2015 (© Lucius & Lucius, Stuttgart) S. 521 Martin Jay Marx and Mendacity: Can There Be a Politics without Hypocrisy? Abstract: As demonstrated by Marx's fierce defence of his integrity when anonymously accused of lying in 1872, he was a principled believer in both personal honesty and the value of truth in politics. Whether understood as enabling an accurate, ‘scientific’ depiction of the contradictions of the present society or a normative image of a truly just society to come, truth-telling was privileged by Marx over hypocrisy as a political virtue. Contemporary Marxists like Alain Badiou continue this tradition, arguing that revolutionary politics should be understood as a ‘truth procedure’. Drawing on the alternative position of political theorists such as Hannah Arendt, who distrusted the monologic and absolutist implications of a strong notion of truth in politics, this paper defends the role that hypocrisy and mendacity, understood in terms of lots of little lies rather than one big one, can play in a pluralist politics, in which, pace Marx, rhetoric, opinion and the clash of values resist being subsumed under a singular notion of the truth. 1. Introduction In 1872, an anonymous attack was launched in the Berlin Concordia: Zeitschrift für die Arbeiterfrage against Karl Marx for having allegedly falsified a quotation from an 1863 parliamentary speech by the British Liberal politician, and future Prime Minister, William Gladstone in his own Inaugural Address to the First International in 1864. The polemic was written, so it was later disclosed, by the eminent liberal political economist Lujo Brentano.1 Marx vigorously defended himself in a response published later that year in Der Volksstaat, launching a bitter debate that would drag on for two decades, involving Marx's daughter Eleanor, an obscure Cambridge don named Sedley Taylor, and even Gladstone himself, who backed Brentano's version. -

Why Competition in the Politics Industry Is Failing America: A Strategy for Reinvigorating Our Democracy

SEPTEMBER 2017 WHY COMPETITION IN THE POLITICS INDUSTRY IS FAILING AMERICA A strategy for reinvigorating our democracy Katherine M. Gehl and Michael E. Porter ABOUT THE AUTHORS Katherine M. Gehl, a business leader and former CEO with experience in government, began, in the last decade, to participate actively in politics—first in traditional partisan politics. As she deepened her understanding of how politics actually worked—and didn’t work—for the public interest, she realized that even the best candidates and elected officials were severely limited by a dysfunctional system, and that the political system was the single greatest challenge facing our country. She turned her focus to political system reform and innovation and has made this her mission. Michael E. Porter, an expert on competition and strategy in industries and nations, encountered politics in trying to advise governments and advocate sensible and proven reforms. As co-chair of the multiyear, non-partisan U.S. Competitiveness Project at Harvard Business School over the past five years, it became clear to him that the political system was actually the major constraint in America’s inability to restore economic prosperity and address many of the other problems our nation faces. Working with Katherine to understand the root causes of the failure of political competition, and what to do about it, has become an obsession. DISCLOSURE This work was funded by Harvard Business School, including the Institute for Strategy and Competitiveness and the Division of Research and Faculty Development. No external funding was received. Katherine and Michael are both involved in supporting the work they advocate in this report. -

Political Speech, Doublespeak, and Critical-Thinking Skills in American Education by Doris E. Minin-White a Capstone Project

POLITICAL SPEECH, DOUBLESPEAK, AND CRITICAL-THINKING SKILLS IN AMERICAN EDUCATION by Doris E. Minin-White A capstone project submitted in partial fulfillment of the requirements for the degree of Masters of Arts in English as a Second Language. Hamline University Saint Paul, Minnesota December, 2017 Capstone Project Trish Harvey Content Expert: Joseph White My Project In this project, I will attempt to identify and analyze common cases of doublespeak in selected samples of political discourse, delivered by the two most recent U.S. presidents: Barack Obama and Donald Trump. The sample will include the transcripts from two speeches and two debates for each of the speakers. The speeches chosen will be their inaugural addresses and their presidential nomination acceptance speeches. The debates will be the first and second debates held, as part of the presidential campaign, in which the selected speakers participated. I chose to include speeches from the presidential inaugural address because they represent, perhaps, the greatest opportunity for presidents to speak with impact to the people they represent and to their fellow lawmakers who are generally present at the inauguration. I also chose the speeches they made when accepting the presidential nominations of their respective parties, because it is their opportunity to outline what their party’s platform is, what they feel are the important issues of the day, and how they intend to pursue those issues. Finally, I chose presidential debates because political discourse in this context is more spontaneous. According to the Commission on Presidential Debates (2017), a non-partisan entity that has organized presidential debates in the United States since 1988, political debates are carefully prepared and organized. -

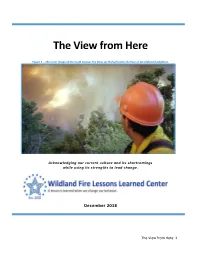

The View from Here

The View from Here Figure 1 -- The iconic image of the South Canyon Fire blow-up that will claim the lives of 14 wildland firefighters. Acknowledging our current culture and its shortcomings while using its strengths to lead change. December 2018 This collection represents collective insight into how we operate and why we must alter some of our most ingrained practices and perspectives. Contents: Introduction; I Risk: 1. The Illusion of Control; 2. It’s Going to Happen Again; 3. The Big Lie – Honor the Fallen; 4. The Problem with Zero; 5. RISK, GAIN, and LOSS – What are We Willing to Accept?; 6. How Do We Know This Job is Dangerous?; II Culture -

Jonathan Rauch

Jonathan Rauch Jonathan Rauch, a contributing editor of National Journal and The Atlantic, is the author of several books and many articles on public policy, culture, and economics. He is also a guest scholar at the Brookings Institution, a leading Washington think-tank. He is winner of the 2005 National Magazine Award for columns and commentary and the 2010 National Headliner Award for magazine columns. His latest book is Gay Marriage: Why It Is Good for Gays, Good for Straights, and Good for America, published in 2004 by Times Books (Henry Holt). It makes the case that same-sex marriage would benefit not only gay people but society and the institution of marriage itself. Although much of his writing has been on public policy, he has also written on topics as widely varied as adultery, agriculture, economics, gay marriage, height discrimination, biological rhythms, number inflation, and animal rights. His multiple-award-winning column, “Social Studies,” was published in National Journal (a Washington-based weekly on government, politics, and public policy) from 1998 to 2010, and his features appeared regularly both there and in The Atlantic. Among the many other publications for which he has written are The New Republic, The Economist, Reason, Harper’s, Fortune, Reader’s Digest, U.S. News & World Report, The New York Times newspaper and magazine, The Wall Street Journal, The Washington Post, The Los Angeles Times, The New York Post, Slate, The Chronicle of Higher Education, The Public Interest, The Advocate, The Daily, and others. Rauch was born and raised in Phoenix, Arizona, and graduated in 1982 from Yale University. -

Commonwealth of Pennsylvania House of Representatives

COMMONWEALTH OF PENNSYLVANIA HOUSE OF REPRESENTATIVES STATE GOVERNMENT COMMITTEE PUBLIC HEARING STATE CAPITOL HARRISBURG, PA IRVIS OFFICE BUILDING ROOM G-50 THURSDAY, APRIL 1, 2021 12:30 P.M. PRESENTATION ON ELECTION INTEGRITY AND ACCESSIBILITY POLICY BEFORE: HONORABLE SETH M. GROVE, MAJORITY CHAIRMAN HONORABLE RUSS DIAMOND HONORABLE MATTHEW D. DOWLING HONORABLE DAWN W. KEEFER HONORABLE ANDREW LEWIS HONORABLE RYAN E. MACKENZIE HONORABLE BRETT R. MILLER HONORABLE ERIC R. NELSON HONORABLE JASON ORTITAY HONORABLE CLINT OWLETT HONORABLE FRANCIS X. RYAN HONORABLE PAUL SCHEMEL HONORABLE LOUIS C. SCHMITT, JR. HONORABLE CRAIG T. STAATS HONORABLE JEFF C. WHEELAND Debra B. Miller dbmreporting@msn.com BEFORE (continued): HONORABLE MARGO L. DAVIDSON, DEMOCRATIC CHAIRWOMAN HONORABLE ISABELLA V. FITZGERALD HONORABLE KRISTINE C. HOWARD HONORABLE MAUREEN E. MADDEN HONORABLE BENJAMIN V. SANCHEZ HONORABLE JOE WEBSTER HONORABLE REGINA G. YOUNG COMMITTEE STAFF PRESENT: MICHAELE TOTINO MAJORITY EXECUTIVE DIRECTOR MICHAEL HECKMANN MAJORITY RESEARCH ANALYST SHERRY EBERLY MAJORITY LEGISLATIVE ADMINISTRATIVE ASSISTANT NICHOLAS HIMEBAUGH DEMOCRATIC EXECUTIVE DIRECTOR INDEX TESTIFIERS * * * DR. B. CLIFFORD NEUMAN DIRECTOR, USC CENTER FOR COMPUTER SYSTEMS SECURITY; ASSOCIATE PROFESSOR OF COMPUTER SCIENCE PRACTICE, INFORMATION SCIENCES INSTITUTE, VITERBI SCHOOL OF ENGINEERING, UNIVERSITY OF SOUTHERN CALIFORNIA DR. WILLIAM T. ADLER SENIOR TECHNOLOGIST FOR ELECTIONS & DEMOCRACY, CENTER FOR DEMOCRACY & TECHNOLOGY -

Building Better Care: Improving the System for Delivering Health Care to Older Adults and Their Families

Building Better Care: Improving the System for Delivering Health Care To Older Adults and Their Families A Special Forum Hosted by the Campaign for Better Care July 28, 2010 • National Press Club Speaker Biographies DEBRA L. NESS, MS President National Partnership for Women & Families For over two decades, Debra Ness has been an ardent advocate for the principles of fairness and social justice. Drawing on an extensive background in health and public policy, Ness possesses a unique understanding of the issues that women and families face at home, in the workplace, and in the health care arena. Before assuming her current role as President, she served as Executive Vice President of the National Partnership for 13 years. Ness has played a leading role in positioning the organization as a powerful and effective advocate for today’s women and families. Ness serves on the boards of some of the nation’s most influential organizations working to improve health care. She sits on the Boards of the National Committee for Quality Assurance (NCQA), the National Quality Forum (NQF), and recently completed serving on the Board of Trustees of the American Board of Internal Medicine Foundation. She co-chairs the Consumer-Purchaser Disclosure Group and sits on the Steering Committee of the AQA and on the Quality Alliance Steering Committee. Ness also serves on the board of the Economic Policy Institute (EPI) and on the Executive Committee of the Leadership Conference on Civil Rights (LCCR), co-chairing LCCR’s Health Task Force. THE HONORABLE SHELDON WHITEHOUSE United States Senator For more than 20 years, Sheldon Whitehouse has served the people of Rhode Island: championing health care reform, standing up for our environment, helping solve fiscal crises, and investigating public corruption. -

Three Explanatory Essays Giving Context and Analysis to Submitted Evidence

Three Explanatory Essays Giving Context and Analysis to Submitted Evidence Part 1: Cambridge Analytica, the Artificial Enemy and Trump's 'Big Lie' By Emma L. Briant, University of Essex Last week, whistleblowers, including former Cambridge Analytica research director Chris Wylie, exposed much of the hidden workings behind the Cambridge Analytica digital strategy funded by the Mercers which empowered the US far right and their Republican apologists, and revealed CA’s involvement in the “Brexit” campaign in the UK. Amid Cambridge Analytica CEO Alexander Nix’s gaslighting and deflection after Trump’s election victory, few questions about this powerful company have been answered. As a propaganda scholar, I have spent a decade researching SCL Group, a conglomerate of companies including Cambridge Analytica who did work for the Trump campaign. Following the US election, I used the substantial contacts I had developed to research an upcoming book. What I discovered was alarming. In this and two other linked explanatory essays, I discuss my findings concerning the involvement of these parties in Brexit (See Part 2) and Cambridge Analytica’s grossly unethical conduct enacted for profit (See Part 3). I draw on my exclusive interviews conducted for my upcoming book What’s Wrong with the Democrats? Media Bias, Inequality and the rise of Donald Trump (co-authored with George Washington University professor Robert M. Entman) and academic publications on the EU referendum, and my counter-terrorism research. Due to my expertise on this topic, I was compelled by the UK Electoral Commission, Information Commissioner’s Office and the Chair of the Digital, Culture, Media and Sport Committee's Fake News Inquiry Damian Collins MP to submit information and research relating to campaigns by SCL, Cambridge Analytica and other actors.