CUDA Hardware / Software CUDA Code Walkthrough

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

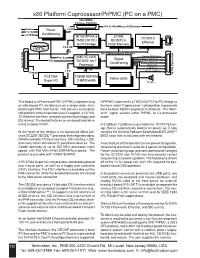

x86 Platform Coprocessor/PrPMC (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC)

[Block diagram labels: AMD SC2200 "GEODE (tm)" processor; 128MB SDRAM (16MWx64b); 1K100 FPGA; 512KB BIOS/PLA Flash memory; TMS2250 PCI-to-PCI bridge; 32b/33MHz PCI bus (PN1/PN2); 32b/33MHz internal PCI bus; 10/100TX Ethernet (RJ45); Compact Flash site; PC87364 Super I/O; analog SVGA video; COM 1 and COM 2 (RXD/TXD only); USB ports 1 and 2; keyboard/mouse; floppy; IDE; LPC; status LEDs; PC speaker; rear I/O (PN4); power conditioning and sequencing]

This board is a Processor PMC (PrPMC) implementing an x86-based PC architecture on a single-wide, standard height PMC form factor. This delivers a complete x86 platform with comprehensive I/O support, a 10/100-TX Ethernet interface, and disk access (both floppy and IDE drives). The board features an on-board hard drive using Compact Flash.

At the heart of the design is an Advanced Micro Devices SC2200 GEODE™ processor that integrates video, ...

A PrPMC implements a TMS2250 PCI-to-PCI bridge to the host, and a "Coprocessor" configuration implements back-to-back 16550 compatible COM ports. The "Monarch" signal selects either PrPMC or Co-processor mode.

A 512Kbyte FLASH memory holds the 1K100 PLA image that is automatically loaded on power up. It also contains the General Software Embedded BIOS 2000™ BIOS code that is included with each board.

Convey Overview

THE WORLD'S FIRST HYBRID-CORE COMPUTER.

CONVEY HYBRID-CORE COMPUTING

Hybrid-core Computing (1/22/2010)
[Chart: Performance/Power efficiency (Low to High) against Ease of Deployment (Difficult to Easy)]
• Convey HC-1: performance of application-specific hardware; programmability and deployment ease of an x86 server
• Heterogeneous solutions: can be much more efficient; still hard to program
• Multicore solutions: don't always scale well; parallel programming is hard

Hybrid-Core Computing: Application-Specific Personalities (7/12/2010)
• Extend the x86 instruction set
• Implement key operations in hardware
[Diagram: Applications (Life Sciences, CAE, Custom, Financial, Oil & Gas); Convey Compilers; x86-64 ISA and Custom ISA; Shared Virtual Memory]
Cache-coherent, shared memory
• Both ISAs address common memory
*ISA: Instruction Set Architecture

HC-1 Hardware
[Diagram: PCI I/O; Intel Chipset; four FPGAs (Personalities); 8 GB/s; 80 GB/s; Memory; Cache Coherent, Shared Virtual Memory]

Using Personalities
• Personalities are reloadable instruction sets
• Compiler generates x86 and coprocessor instructions from ANSI standard C/C++ & Fortran
• Executable can run on x86 nodes or Convey Hybrid-Core nodes
[Diagram: C/C++ and Fortran sources; user specifies personality at compile time; Convey Software Development Suite; personality instruction descriptions; Hybrid-Core Executable with x86-64 and Coprocessor Instructions; FPGA bitfiles; Convey HC-1 with Intel x86 and Coprocessor; personality loaded at runtime by OS]

SYSTEM ARCHITECTURE

HC-1 Architecture
"Commodity" Intel Server; Convey FPGA-based coprocessor; Direct ...

Parallel Rendering on Hybrid Multi-GPU Clusters

Eurographics Symposium on Parallel Graphics and Visualization (2012), H. Childs and T. Kuhlen (Editors)

Parallel Rendering on Hybrid Multi-GPU Clusters

S. Eilemann (1), A. Bilgili (1), M. Abdellah (1), J. Hernando (2), M. Makhinya (3), R. Pajarola (3), F. Schürmann (1)
(1) Blue Brain Project, EPFL; (2) CeSViMa, UPM; (3) Visualization and MultiMedia Lab, University of Zürich

Abstract
Achieving efficient scalable parallel rendering for interactive visualization applications on medium-sized graphics clusters remains a challenging problem. Framerates of up to 60 Hz require a carefully designed and fine-tuned parallel rendering implementation that fits all required operations into the 16 ms time budget available for each rendered frame. Furthermore, modern commodity hardware increasingly embraces a NUMA architecture, where multiple processor sockets each have their locally attached memory and where auxiliary devices such as GPUs and network interfaces are directly attached to one of the processors. Such so-called fat NUMA processing and graphics nodes are increasingly used to build cost-effective hybrid shared/distributed memory visualization clusters. In this paper we present a thorough analysis of the asynchronous parallelization of the rendering stages and we derive and implement important optimizations to achieve highly interactive framerates on such hybrid multi-GPU clusters. We use both a benchmark program and a real-world scientific application used to visualize, navigate and interact with simulations of cortical neuron circuit models.

Categories and Subject Descriptors (according to ACM CCS): I.3.2 [Computer Graphics]: Graphics Systems - Distributed Graphics; I.3.m [Computer Graphics]: Miscellaneous - Parallel Rendering

1. Introduction
The decomposition of parallel rendering systems across mul- ...

Hybrid GPU clusters are often built from so-called fat render nodes using a NUMA architecture with multiple CPUs and GPUs per machine.

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems

Peng Li, Seagate Technology, Shakopee, MN, 55379
Kevin Gomez, Seagate Technology, Shakopee, MN, 55379
David J. Lilja, University of Minnesota, Twin Cities, Minneapolis, MN, 55455

Abstract: Energy consumption is a fundamental issue in today's data centers as data continue growing dramatically. How to process these data in an energy-efficient way becomes more and more important. Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the performance gap between CPUs and main memory) by moving computing closer to the data. However, these methods have not been widely adopted due to high cost and limited performance improvements. In this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state drive (SSD) cost. By pre-processing the data using the SPU, the data that needs to be transferred to host CPUs for further processing ...

A well-known solution to the memory wall issue is moving computing closer to the data. For example, Gokhale et al. [2] proposed a processor-in-memory (PIM) chip by adding a processor into the main memory for computing. Riedel et al. [9] proposed an active disk by using the processor inside the hard disk drive (HDD) for computing. With the evolution of other non-volatile memories (NVMs), such as phase-change memory (PCM) and spin-transfer torque (STT)-RAM, researchers also proposed to use these NVMs as the main memory for data-intensive applications [10] to improve the system energy-efficiency.

Parallel Texture-Based Vector Field Visualization on Curved Surfaces Using GPU Cluster Computers

Eurographics Symposium on Parallel Graphics and Visualization (2006), Alan Heirich, Bruno Raffin, and Luis Paulo dos Santos (Editors)

Parallel Texture-Based Vector Field Visualization on Curved Surfaces Using GPU Cluster Computers

S. Bachthaler (1), M. Strengert (1), D. Weiskopf (2), and T. Ertl (1)
(1) Institute of Visualization and Interactive Systems, University of Stuttgart, Germany; (2) School of Computing Science, Simon Fraser University, Canada

Abstract
We adopt a technique for texture-based visualization of flow fields on curved surfaces for parallel computation on a GPU cluster. The underlying LIC method relies on image-space calculations and allows the user to visualize a full 3D vector field on arbitrary and changing hypersurfaces. By using parallelization, both the visualization speed and the maximum data set size are scaled with the number of cluster nodes. A sort-first strategy with image-space decomposition is employed to distribute the workload for the LIC computation, while a sort-last approach with an object-space partitioning of the vector field is used to increase the total amount of available GPU memory. We specifically address issues for parallel GPU-based vector field visualization, such as reduced locality of memory accesses caused by particle tracing, dynamic load balancing for changing camera parameters, and the combination of image-space and object-space decomposition in a hybrid approach. Performance measurements document the behavior of our implementation on a GPU cluster with AMD Opteron CPUs, NVIDIA GeForce 6800 Ultra GPUs, and Infiniband network connection.

Categories and Subject Descriptors (according to ACM CCS): I.3.3 [Computer Graphics]: Viewing algorithms; I.3.3 [Three-Dimensional Graphics and Realism]: Color, shading, shadowing, and texture

CUDA What Is GPGPU

CUDA
Slides by David Kirk

What is GPGPU?
• General Purpose computation using GPU in applications other than 3D graphics
  – GPU accelerates critical path of application
• Data parallel algorithms leverage GPU attributes
  – Large data arrays, streaming throughput
  – Fine-grain SIMD parallelism
  – Low-latency floating point (FP) computation
• Applications – see //GPGPU.org
  – Game effects (FX) physics, image processing
  – Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting

Previous GPGPU Constraints
• Dealing with graphics API
  – Working with the corner cases of the graphics API
• Addressing modes
  – Limited texture size/dimension
• Shader capabilities
  – Limited outputs
• Instruction sets
  – Lack of Integer & bit ops
• Communication limited
  – Between pixels
  – Scatter a[i] = p
[Diagram labels: per thread, per Shader, per Context; Input Registers; Fragment Program; Texture; Constants; Temp Registers; Output Registers; FB Memory]

CUDA
• "Compute Unified Device Architecture"
• General purpose programming model
  – User kicks off batches of threads on the GPU
  – GPU = dedicated super-threaded, massively data parallel co-processor
• Targeted software stack
  – Compute oriented drivers, language, and tools
• Driver for loading computation programs into GPU
  – Standalone Driver - Optimized for computation
  – Interface designed for compute - graphics free API
  – Data sharing with OpenGL buffer objects
  – Guaranteed maximum download & readback speeds
  – Explicit GPU memory management

Parallel Computing on a GPU
• NVIDIA GPU Computing Architecture
  – Via a separate HW interface
  – In laptops, desktops, workstations, servers
• 8-series GPUs deliver 50 to 200 GFLOPS on compiled parallel C applications
[Image label: GeForce 8800]

Extended C
• Declspecs
  – global, device, shared, local, constant
• Keywords
  – threadIdx, blockIdx
• Intrinsics
  – __syncthreads
Code fragment on the slide (truncated): `__device__ float filter[N];` `__global__ void convolve (float *image) {` `__shared__ float region[M];` `...` `region[threadIdx] = image[i];` `__syncthreads()` `..`
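The Extended C fragment in this excerpt is cut off mid-kernel. As a minimal sketch of how its pieces might fit together (the `__device__`, `__global__`, and `__shared__` declspecs, the `threadIdx`/`blockIdx` keywords, and the `__syncthreads()` intrinsic), the following complete program runs a 1D convolution. The 3-tap filter, tile size, zero padding, separate `out` buffer, and the `convolve_on_gpu` helper are all assumptions made for illustration and do not come from the original slides; error checking of CUDA calls is omitted for brevity.

```cuda
#include <cassert>
#include <cmath>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

constexpr int RADIUS = 1;                   // filter half-width (assumed)
constexpr int TAPS   = 2 * RADIUS + 1;      // plays the role of N on the slide
constexpr int BLOCK  = 128;                 // threads per block (assumed)
constexpr int TILE   = BLOCK + 2 * RADIUS;  // plays the role of M on the slide

// Declspec: __device__ places the filter taps in GPU global memory.
__device__ float filter[TAPS];

// Declspec: __global__ marks a kernel that the host launches.
__global__ void convolve(const float *in, float *out, int n) {
    // Declspec: __shared__ gives each thread block its own on-chip tile.
    __shared__ float region[TILE];

    int i = blockIdx.x * BLOCK + threadIdx.x;  // global element index
    int t = threadIdx.x + RADIUS;              // index inside the tile

    // Each thread stages one element; out-of-range reads become zero.
    region[t] = (i < n) ? in[i] : 0.0f;
    // The first RADIUS threads also stage the left and right halo cells.
    if (threadIdx.x < RADIUS) {
        int left  = i - RADIUS;
        int right = i + BLOCK;
        region[t - RADIUS] = (left >= 0 && left < n) ? in[left] : 0.0f;
        region[t + BLOCK]  = (right < n) ? in[right] : 0.0f;
    }
    // Intrinsic: barrier so the whole tile is written before anyone reads it.
    __syncthreads();

    if (i < n) {
        float acc = 0.0f;
        for (int k = -RADIUS; k <= RADIUS; ++k)
            acc += filter[k + RADIUS] * region[t + k];
        out[i] = acc;
    }
}

// Hypothetical host helper: copies data to the GPU, runs the kernel, copies back.
std::vector<float> convolve_on_gpu(const std::vector<float> &image,
                                   const float (&taps)[TAPS]) {
    int n = static_cast<int>(image.size());
    std::vector<float> result(n);
    if (n == 0) return result;

    float *d_in = nullptr, *d_out = nullptr;
    cudaMalloc(&d_in, n * sizeof(float));
    cudaMalloc(&d_out, n * sizeof(float));
    cudaMemcpy(d_in, image.data(), n * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemcpyToSymbol(filter, taps, sizeof(taps));

    int blocks = (n + BLOCK - 1) / BLOCK;  // enough blocks to cover n elements
    convolve<<<blocks, BLOCK>>>(d_in, d_out, n);

    // This blocking copy also waits for the kernel to finish.
    cudaMemcpy(result.data(), d_out, n * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_out);
    return result;
}

int main() {
    std::vector<float> image(1000);
    for (size_t i = 0; i < image.size(); ++i) image[i] = static_cast<float>(i % 7);
    const float taps[TAPS] = {0.25f, 0.5f, 0.25f};

    std::vector<float> gpu = convolve_on_gpu(image, taps);
    assert(gpu.size() == image.size());

    // CPU reference with the same zero padding at both ends.
    int n = static_cast<int>(image.size());
    float max_err = 0.0f;
    for (int i = 0; i < n; ++i) {
        float ref = 0.0f;
        for (int k = -RADIUS; k <= RADIUS; ++k) {
            int j = i + k;
            if (j >= 0 && j < n) ref += taps[k + RADIUS] * image[j];
        }
        max_err = std::fmax(max_err, std::fabs(ref - gpu[i]));
    }
    std::printf("max abs error vs CPU reference: %g\n", max_err);
    return max_err < 1e-5f ? 0 : 1;
}
```

Compiled with `nvcc`, the program reports the largest difference between the GPU result and a CPU reference. Writing to a separate `out` buffer, rather than back into `image` as the slide fragment appears to, avoids a race in which one block overwrites elements that a neighbouring block still needs for its halo.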

Evolution of the Graphical Processing Unit

University of Nevada Reno

Evolution of the Graphical Processing Unit

A professional paper submitted in partial fulfillment of the requirements for the degree of Master of Science with a major in Computer Science by Thomas Scott Crow. Dr. Frederick C. Harris, Jr., Advisor. December 2004

Dedication
To my wife Windee, thank you for all of your patience, intelligence and love.

Acknowledgements
I would like to thank my advisor Dr. Harris for his patience and the help he has provided me. The field of Computer Science needs more individuals like Dr. Harris. I would like to thank Dr. Mensing for unknowingly giving me an excellent model of what a Man can be and for his confidence in my work. I am very grateful to Dr. Egbert and Dr. Mensing for agreeing to be committee members and for their valuable time. Thank you jeffs.

Abstract
In this paper we discuss some major contributions to the field of computer graphics that have led to the implementation of the modern graphical processing unit. We also compare the performance of matrix-matrix multiplication on the GPU to the same computation on the CPU. Although the CPU performs better in this comparison, modern GPUs have a lot of potential since their rate of growth far exceeds that of the CPU. The history of the rate of growth of the GPU shows that the transistor count doubles every 6 months where that of the CPU is only every 18 months. There is currently much research going on regarding general purpose computing on GPUs and although there has been moderate success, there are several issues that keep the commodity GPU from expanding out from pure graphics computing with limited cache bandwidth being one.

Comparing the Power and Performance of Intel's SCC to State

Comparing the Power and Performance of Intel's SCC to State-of-the-Art CPUs and GPUs

Ehsan Totoni, Babak Behzad, Swapnil Ghike, Josep Torrellas
Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA

Abstract: Power dissipation and energy consumption are becoming increasingly important architectural design constraints in different types of computers, from embedded systems to large-scale supercomputers. To continue the scaling of performance, it is essential that we build parallel processor chips that make the best use of exponentially increasing numbers of transistors within the power and energy budgets. Intel SCC is an appealing option for future many-core architectures. In this paper, we use various scalable applications to quantitatively compare and analyze the performance, power consumption and energy efficiency of different cutting-edge platforms that differ in architectural build. These platforms include the Intel Single-Chip Cloud Computer (SCC) many-core, the Intel Core i7 general-purpose multi-core, the Intel Atom low-power processor, and the Nvidia ION2 ...

A key architectural challenge now is how to support increasing parallelism and scale performance, while being power and energy efficient. There are multiple options on the table, namely "heavy-weight" multi-cores (such as general purpose processors), "light-weight" many-cores (such as Intel's Single-Chip Cloud Computer (SCC) [1]), low-power processors (such as embedded processors), and SIMD-like highly-parallel architectures (such as General-Purpose Graphics Processing Units (GPGPUs)).

The Intel SCC [1] is a research chip made by Intel Labs to explore future many-core architectures. It has 48 Pentium (P54C) cores in 24 tiles of two cores each.

World's First High-Performance x86 with

World's First High-Performance x86 with Integrated AI Coprocessor
Linley Spring Processor Conference 2020, April 8, 2020
Glenn Henry, Chief AI Architect; Dr. Parviz Palangpour, AI Software

Deep Dive into Centaur's New x86 AI Coprocessor (Ncore)
• Background
• Motivations
• Constraints
• Architecture
• Software
• Benchmarks
• Conclusion
Demonstrated Working Silicon For Video-Analytics Edge Server Nov, 2019
©2020 Centaur Technology. All Rights Reserved

Centaur Technology Background
• 25-year-old startup in Austin, owned by Via Technologies
• We design, from scratch, low-cost x86 processors
• Everything to produce a custom x86 SoC with ~100 people
• Architecture, logic design and microcode
• Design, verification, and layout
• Physical build, fab interface, and tape-out
• Shipped by IBM, HP, Dell, Samsung, Lenovo…

Genesis of the AI Coprocessor (Ncore)
• Centaur was developing SoC (CHA) with new x86 cores
• Targeted at edge/cloud server market (high-end x86 features)
• Huge inference markets beyond hyperscale cloud, IOT and mobile
• Video analytics, edge computing, on-premise servers
• However, x86 isn't efficient at inference
• High-performance inference requires external accelerator
• CHA has 44x PCIe to support GPUs, etc.
• But adds cost, power, another point of failure, etc.

Why not integrate a coprocessor?
• Very low cost
• Many components already on SoC ("free" to Ncore): caches, memory, clock, power, package, pins, busses, etc.
• There often is "free" space on complex SOCs due to I/O & pins
• Having many high-performance x86 cores allows flexibility
• The x86 cores can do some of the work, in parallel
• Didn't have to implement all strange/new functions
• Allows fast prototyping of new things
• For customer: nothing extra to buy

Literature Review and Implementation Overview: High Performance

LITERATURE REVIEW AND IMPLEMENTATION OVERVIEW: HIGH PERFORMANCE COMPUTING WITH GRAPHICS PROCESSING UNITS FOR CLASSROOM AND RESEARCH USE

A PREPRINT (arXiv:2005.07598v1 [cs.DC], 13 May 2020)

Nathan George, Department of Data Sciences, Regis University, Denver, CO 80221

May 18, 2020

ABSTRACT
In this report, I discuss the history and current state of GPU HPC systems. Although high-power GPUs have only existed a short time, they have found rapid adoption in deep learning applications. I also discuss an implementation of a commodity-hardware NVIDIA GPU HPC cluster for deep learning research and academic teaching use.

Keywords: GPU · Neural Networks · Deep Learning · HPC

1 Introduction, Background, and GPU HPC History
High performance computing (HPC) is typically characterized by large amounts of memory and processing power. HPC, sometimes also called supercomputing, has been around since the 1960s with the introduction of the CDC STAR-100, and continues to push the limits of computing power and capabilities for large-scale problems [1, 2]. However, the use of graphics processing units (GPUs) in HPC supercomputers only started in the mid to late 2000s [3, 4]. Although graphics processing chips have been around since the 1970s, GPUs were not widely used for computations until the 2000s. During the early 2000s, GPU clusters began to appear for HPC applications. Most of these clusters were designed to run large calculations requiring vast computing power, and many clusters are still designed for that purpose [5]. GPUs have been increasingly used for computations due to their commodification, following Moore's Law (demonstrated in Figure 1), and usage in specific applications like neural networks.

Introduction to CPU

microprocessors and microcontrollers - sadri

INTRODUCTION TO CPU
Mohammad Sadegh Sadri
Session 2, Microprocessor Course, Isfahan University of Technology, Sep., Oct., 2010

Agenda
• Review of the first session
• A tour of silicon world!
• Basic definition of CPU
• Von Neumann Architecture
• Example: Basic ARM7 Architecture
• A brief detailed explanation of ARM7 Architecture
• Harvard Architecture
• Example: TMS320C25 DSP

Agenda (2)
• History of CPUs
• 4004
• TMS1000
• 8080
• Z80
• Am2901
• 8051
• PIC16

Von Neumann Architecture
• Same Memory
• Program
• Data
• Single Bus

Sample: ARM7T CPU

Harvard Architecture
• Separate memories for program and data

TMS320C25 DSP

Silicon Market

| Rank 2009 | Rank 2008 | Company | Country of origin | Revenue (million $ USD) | 2009/2008 changes | Market share |
|---|---|---|---|---|---|---|
| 1 | 1 | Intel Corporation | USA | 32 410 | -4.0% | 14.1% |
| 2 | 2 | Samsung Electronics | South Korea | 17 496 | +3.5% | 7.6% |
| 3 | 3 | Toshiba Semiconductors | Japan | 10 319 | -6.9% | 4.5% |
| 4 | 4 | Texas Instruments | USA | 9 617 | -12.6% | 4.2% |
| 5 | 5 | STMicroelectronics | France/Italy | 8 510 | -17.6% | 3.7% |
| 6 | 8 | Qualcomm | USA | 6 409 | -1.1% | 2.8% |
| 7 | 9 | Hynix | South Korea | 6 246 | +3.7% | 2.7% |
| 8 | 12 | AMD | USA | 5 207 | -4.6% | 2.3% |
| 9 | 6 | Renesas Technology | Japan | 5 153 | -26.6% | 2.2% |
| 10 | 7 | Sony | Japan | 4 468 | -35.7% | 1.9% |

AI Chips: What They Are and Why They Matter

APRIL 2020

AI Chips: What They Are and Why They Matter
An AI Chips Reference

AUTHORS: Saif M. Khan, Alexander Mann

Table of Contents
• Introduction and Summary
• The Laws of Chip Innovation
• Transistor Shrinkage: Moore's Law
• Efficiency and Speed Improvements
• Increasing Transistor Density Unlocks Improved Designs for Efficiency and Speed
• Transistor Design is Reaching Fundamental Size Limits
• The Slowing of Moore's Law and the Decline of General-Purpose Chips
• The Economies of Scale of General-Purpose Chips
• Costs are Increasing Faster than the Semiconductor Market
• The Semiconductor Industry's Growth Rate is Unlikely to Increase
• Chip Improvements as Moore's Law Slows
• Transistor Improvements Continue, but are Slowing
• Improved Transistor Density Enables Specialization
• The AI Chip Zoo
• AI Chip Types
• AI Chip Benchmarks
• The Value of State-of-the-Art AI Chips
• The Efficiency of State-of-the-Art AI Chips Translates into Cost-Effectiveness
• Compute-Intensive AI Algorithms are Bottlenecked by Chip Costs and Speed
• U.S. and Chinese AI Chips and Implications for National Competitiveness
• Appendix A: Basics of Semiconductors and Chips
• Appendix B: How AI Chips Work
• Parallel Computing
• Low-Precision Computing
• Memory Optimization
• Domain-Specific Languages
• Appendix C: AI Chip Benchmarking Studies
• Appendix D: Chip Economics Model
• Chip Transistor Density, Design Costs, and Energy Costs
• Foundry, Assembly, Test and Packaging Costs
• Acknowledgments

Center for Security and Emerging Technology

Introduction and Summary
Artificial intelligence will play an important role in national and international security in the years to come.