IRAM Microprocessor Talk

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

MIPSpro C++ Programmer's Guide

MIPSpro™ C++ Programmer’s Guide 007–0704–150 CONTRIBUTORS Rewritten in 2002 by Jean Wilson with engineering support from John Wilkinson and editing support from Susan Wilkening. COPYRIGHT Copyright © 1995, 1999, 2002 - 2003 Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The electronic (software) version of this document was developed at private expense; if acquired under an agreement with the USA government or any contractor thereto, it is acquired as "commercial computer software" subject to the provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto. Contractor/manufacturer is Silicon Graphics, Inc., 1600 Amphitheatre Pkwy 2E, Mountain View, CA 94043-1351. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, IRIX, O2, Octane, and Origin are registered trademarks and OpenMP and ProDev are trademarks of Silicon Graphics, Inc. in the United States and/or other countries worldwide. MIPS, MIPS I, MIPS II, MIPS III, MIPS IV, R2000, R3000, R4000, R4400, R4600, R5000, and R8000 are registered or unregistered trademarks and MIPSpro, R10000, R12000, R1400 are trademarks of MIPS Technologies, Inc., used under license by Silicon Graphics, Inc. Portions of this publication may have been derived from the OpenMP Language Application Program Interface Specification. -

MIPS® Architecture for Programmers Volume I-B: Introduction to the microMIPS32™ Architecture, Revision 5.03

MIPS® Architecture For Programmers Volume I-B: Introduction to the microMIPS32™ Architecture Document Number: MD00741 Revision 5.03 Sept. 9, 2013 Unpublished rights (if any) reserved under the copyright laws of the United States of America and other countries. This document contains information that is proprietary to MIPS Tech, LLC, a Wave Computing company (“MIPS”) and MIPS’ affiliates as applicable. Any copying, reproducing, modifying or use of this information (in whole or in part) that is not expressly permitted in writing by MIPS or MIPS’ affiliates as applicable or an authorized third party is strictly prohibited. At a minimum, this information is protected under unfair competition and copyright laws. Violations thereof may result in criminal penalties and fines. Any document provided in source format (i.e., in a modifiable form such as in FrameMaker or Microsoft Word format) is subject to use and distribution restrictions that are independent of and supplemental to any and all confidentiality restrictions. UNDER NO CIRCUMSTANCES MAY A DOCUMENT PROVIDED IN SOURCE FORMAT BE DISTRIBUTED TO A THIRD PARTY IN SOURCE FORMAT WITHOUT THE EXPRESS WRITTEN PERMISSION OF MIPS (AND MIPS’ AFFILIATES AS APPLICABLE). MIPS and MIPS’ affiliates reserve the right to change the information contained in this document to improve function, design or otherwise. MIPS and MIPS’ affiliates do not assume any liability arising out of the application or use of this information, or of any error or omission in such information. Any warranties, whether express, statutory, implied or otherwise, including but not limited to the implied warranties of merchantability or fitness for a particular purpose, are excluded. Except as expressly provided in any written license agreement from MIPS or an authorized third party, the furnishing of this document does not give recipient any license to any intellectual property rights, including any patent rights, that cover the information in this document. -

MIPSpro™ Compiling and Performance Tuning Guide

MIPSpro™ Compiling and Performance Tuning Guide Document Number 007-2360-006 Contributors Written by Arthur Evans, Wendy Ferguson, Jed Hartman, Jackie Neider Edited by Christina Cary Production by Lorrie Williams Engineering contributions by Dave Anderson, Zaineb Asaf, Dave Babcock, Greg Boyd, Jack Carter, Ann Mei Chang, Wei-Chau Chang, David Ciemiewicz, Rune Dahl, Jim Dehnert, David Frederick, Sanjoy Ghosh, Jay Gischer, Bob Green, Seema Hiranandani, W. Wilson Ho, Marty Itzkowitz, Bhaskar Janakiraman, Woody Lichtenstein, Dror Maydan, Ajit Mayya, Ray Milkey, Michael Murphy, Bron Nelson, Andy Palay, Ron Price, John Wilkinson © Copyright 1996 Silicon Graphics, Inc.— All Rights Reserved This document contains proprietary and confidential information of Silicon Graphics, Inc. The contents of this document may not be disclosed to third parties, copied, or duplicated in any form, in whole or in part, without the prior written permission of Silicon Graphics, Inc. Restricted Rights Legend Use, duplication, or disclosure of the technical data contained in this document by the Government is subject to restrictions as set forth in subdivision (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 52.227-7013 and/or in similar or successor clauses in the FAR, or in the DOD or NASA FAR Supplement. Unpublished rights reserved under the Copyright Laws of the United States. Contractor/manufacturer is Silicon Graphics, Inc., 2011 N. Shoreline Blvd., Mountain View, CA 94039-7311. Silicon Graphics, the Silicon Graphics logo, and IRIS are registered trademarks and IRIX, CASEVision, IRIS IM, IRIS Showcase, Impressario, Indigo Magic, Inventor, IRIS-4D, POWER Series, RealityEngine, CHALLENGE, Onyx, Origin2000, and WorkShop are trademarks of Silicon Graphics, Inc. -

Pluggable Interface Relays CR-M Miniature Relays

Data sheet Pluggable interface relays CR-M Miniature relays

Pluggable interface relays are used for electrical isolation, amplification and signal matching between the electronic controlling, e.g. PLC (programmable logic controller), PC or field bus systems and the sensor / actuator level. They don’t use additional internal protective circuits and thus are overload-proof against short-time variations like current or voltage peaks.

Characteristics
– Standard miniature relays with mechanical status indication
– 13 different rated control supply voltages: DC versions: 12 V, 24 V, 48 V, 60 V, 110 V, 125 V, 220 V; AC versions: 24 V, 48 V, 60 V, 110 V, 120 V, 230 V
– Output: 2 c/o (SPDT) contacts (12 A), 3 c/o (SPDT) contacts (10 A) or 4 c/o (SPDT) contacts (6 A)
– Available with or without LED
– 4 c/o (SPDT) contact version optionally equipped with gold contacts, LED and free wheeling diode
– Integrated test button for manual actuation and locking of output contacts (DC coil = blue, AC coil = orange) that can be removed if necessary
– Cadmium-free contact material
– Suited for logical and standard sockets
– Width on socket: 27 mm (1.063 in)
– Pluggable function modules: reverse polarity protection / free wheeling diode, LED indication, RC elements, overvoltage protection

Approvals
– ANSI/UL 508, CAN/CSA C22.2 No.14
– CAN/CSA C22.2 No.14
– VDE (except 125 V DC devices)
– EAC
– Lloyds Register (only devices with 4 c/o (SPDT) contacts)
– CCC
– RMRS (except 60 V and 125 V devices)

Marks
– CE

Order data: Packing unit = 10 pieces

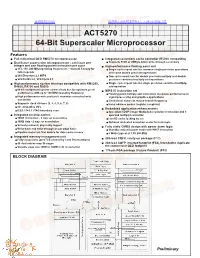

64-Bit Superscaler Microprocessor ACT5270

ACT5270 64-Bit Superscaler Microprocessor

Features
■ Full militarized QED RM5270 microprocessor
■ Dual Issue superscalar microprocessor - can issue one integer and one floating-point instruction per cycle
  ● 133, 150, 200 MHz operating frequencies – Consult Factory for latest speeds
  ● 260 Dhrystone2.1 MIPS
  ● SPECInt95 5.0, SPECfp95 5.3
■ High performance system interface compatible with RM5260, R4600, R4700 and R5000
  ● 64-bit multiplexed system address/data bus for optimum price/performance with up to 100 MHz operating frequency
  ● High performance write protocols maximize uncached write bandwidth
  ● Supports clock divisors (2, 3, 4, 5, 6, 7, 8)
  ● 5V compatible I/O’s
  ● IEEE 1149.1 JTAG boundary scan
■ Integrated on-chip caches
■ Integrated secondary cache controller (R5000 compatible)
  ● Supports 512K or 2MByte block write-through secondary
■ High-performance floating point unit
  ● Single cycle repeat rate for common single precision operations and some double precision operations
  ● Two cycle repeat rate for double precision multiply and double precision combined multiply-add operations
  ● Single cycle repeat rate for single precision combined multiply-add operation
■ MIPS IV instruction set
  ● Floating point multiply-add instruction increases performance in signal processing and graphics applications
  ● Conditional moves to reduce branch frequency
  ● Index address modes (register + register)
■ Embedded application enhancements
  ● Specialized DSP integer Multiply-Accumulate instruction and 3 operand multiply instruction
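The ACT5270 excerpt lists clock divisors of 2 through 8 and a system bus of up to 100 MHz. The Python sketch below shows what those figures would imply if the divisor is the ratio of the processor clock to the system interface clock. That relationship is an assumption made here for illustration, not something the excerpt states, and the function name `bus_clocks` is hypothetical.

```python
def bus_clocks(core_mhz, divisors=(2, 3, 4, 5, 6, 7, 8), bus_limit_mhz=100.0):
    """Map each divisor to the resulting bus clock in MHz.

    Assumption (not from the data sheet excerpt): bus clock = core clock / divisor.
    Divisors that would push the bus above bus_limit_mhz are left out.
    """
    result = {}
    for divisor in divisors:
        bus_mhz = core_mhz / divisor
        if bus_mhz <= bus_limit_mhz:
            result[divisor] = round(bus_mhz, 2)
    return result


if __name__ == "__main__":
    # The three core frequencies quoted in the excerpt.
    for core in (133, 150, 200):
        print(core, bus_clocks(core))
```

Under this assumption, a 200 MHz part with divisor 2 sits exactly at the 100 MHz bus limit, and every larger divisor gives a slower bus.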

Computer Architectures: An Overview

Computer Architectures: An Overview. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC. Contents. Articles: Microarchitecture; x86; PowerPC; IBM POWER; MIPS architecture; SPARC; ARM architecture; DEC Alpha; AlphaStation; AlphaServer; Very long instruction word; Instruction-level parallelism; Explicitly parallel instruction computing. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License. Microarchitecture: In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design. Relation to instruction set architecture: The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. (Figure caption: Intel Core microarchitecture.) The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALUs) and even larger elements. -

Electronic Products and Relays Selection Table Interface Relays CR-Range and R600 / R500 Range Pluggable Interface Relays

Electronic Products and Relays Selection Table Interface Relays CR-Range and R600 / R500 Range Pluggable Interface Relays CR-M Range Order number number Order 1SVR 405 611 R4000 1SVR 405 611 R1000 1SVR 405 611 R6000 1SVR 405 611 R4200 1SVR 405 611 R8000 1SVR 405 611 R8200 1SVR 405 611 R9000 1SVR 405 611 R0000 1SVR 405 611 R5000 1SVR 405 611 R7000 1SVR 405 611 R2000 1SVR 405 611 R3000 1SVR 405 612 R4000 1SVR 405 612 R1000 1SVR 405 612 R6000 1SVR 405 612 R4200 1SVR 405 612 R8000 1SVR 405 612 R8200 1SVR 405 612 R9000 1SVR 405 612 R0000 1SVR 405 612 R5000 1SVR 405 612 R5200 1SVR 405 612 R7000 1SVR 405 612 R2000 1SVR 405 612 R3000 1SVR 405 613 R4000 1SVR 405 613 R1000 1SVR 405 613 R6000 1SVR 405 613 R4200 1SVR 405 613 R8000 1SVR 405 613 R8200 1SVR 405 613 R9000 1SVR 405 613 R0000 1SVR 405 613 R5000 1SVR 405 613 R7000 1SVR 405 613 R2000 1SVR 405 613 R3000 1SVR 405 611 R4100 1SVR 405 611 R1100 1SVR 405 611 R6100 1SVR 405 611 R4300 1SVR 405 611 R8100 1SVR 405 611 R8300 1SVR 405 611 R9100 1SVR 405 611 R0100 1SVR 405 611 R5100 1SVR 405 611 R7100 1SVR 405 611 R2100 1SVR 405 611 R3100 1SVR 405 612 R4100 1SVR 405 612 R1100 1SVR 405 612 R6100 1SVR 405 612 R4300 1SVR 405 612 R8100 1SVR 405 612 R8300 1SVR 405 612 R9100 1SVR 405 612 R0100 1SVR 405 612 R5100 1SVR 405 612 R7100 1SVR 405 612 R2100 1SVR 405 612 R3100 1SVR 405 613 R4100 1SVR 405 613 R1100 1SVR 405 613 R6100 1SVR 405 613 R4300 1SVR 405 613 R8100 1SVR 405 613 R8300 1SVR 405 613 R9100 1SVR 405 613 R0100 1SVR 405 613 R5100 1SVR 405 613 R7100 1SVR 405 613 R2100 1SVR 405 613 R3100 1SVR 405 614 R1100 -

Sony's Emotionally Charged Chip

VOLUME 13, NUMBER 5 APRIL 19, 1999 MICROPROCESSOR REPORT THE INSIDERS’ GUIDE TO MICROPROCESSOR HARDWARE Sony’s Emotionally Charged Chip Killer Floating-Point “Emotion Engine” To Power PlayStation 2000 by Keith Diefendorff

While Intel and the PC industry stumble around in search of some need for the processing power they already have, Sony has been busy trying to figure out how to get more of it—lots more. The company has apparently succeeded: at the recent International Solid-State Circuits Conference (see MPR 4/19/99, p. 20), Sony Computer Entertainment (SCE) and Toshiba described a multimedia processor that will be the heart of the next-generation PlayStation, which—lacking an official name—we refer to as PlayStation 2000, or PSX2. Called the Emotion Engine (EE), the new chip upsets the traditional notion of a game processor.

[...] rate of two million units per month, making it the most successful single product (in units) Sony has ever built. Although SCE has cornered more than 60% of the $6 billion game-console market, it was beginning to feel the heat from Sega’s Dreamcast (see MPR 6/1/98, p. 8), which has sold over a million units since its debut last November. With a 200-MHz Hitachi SH-4 and NEC’s PowerVR graphics chip, Dreamcast delivers 3 to 10 times as many 3D polygons as PlayStation’s 34-MHz MIPS processor (see MPR 7/11/94, p. 9). To maintain king-of-the-mountain status, SCE had to do something spectacular. And it has: the PSX2 will deliver more than 10 times the polygon throughput of Dreamcast,

Pluggable Interface Relays CR-M Miniature Relays Data Sheet

Pluggable interface relays CR-M Miniature relays Data sheet

Features
– Standard miniature relays with mechanical status indication
– 12 different supply voltages: DC versions: 12 V, 24 V, 48 V, 60 V, 110 V, 125 V, 220 V; AC versions: 24 V, 48 V, 110 V, 120 V, 230 V
– Output contacts: 2 c/o (12 A), 3 c/o (10 A) or 4 c/o (6 A)
– Available with or without LED
– 4-c/o version optionally equipped with gold contacts
– Integrated test button for manual actuation and locking of output contacts (blue = DC, orange = AC)
– Cadmium-free contact material
– Width on socket: 27 mm
– Suited for logical and standard sockets
– Pluggable function modules: reverse polarity protection, LED indication, RC elements, overvoltage protection

Figure (CR-M, 2CDC 293 035 F0004): 1 Interface relay, 2 Pluggable function module, 3 Socket, 4 Holder, 5 Marker

Approvals: n, l (not 125 V DC versions), j, e (only 4-c/o contact version). Marks: g

Ordering data: Interface relays without LED, 2 c/o contacts: 250 V, 12 A

| Type | Supply voltage | Order code |
|---|---|---|
| CR-M012DC2 | 12 V DC | 1SVR 405 611 R4000 |
| CR-M024DC2 | 24 V DC | 1SVR 405 611 R1000 |
| CR-M048DC2 | 48 V DC | 1SVR 405 611 R6000 |
| CR-M060DC2 | 60 V DC | 1SVR 405 611 R4200 |
| CR-M110DC2 | 110 V DC | 1SVR 405 611 R8000 |
| CR-M125DC2 | 125 V DC | 1SVR 405 611 R8200 |
| CR-M220DC2 | 220 V DC | 1SVR 405 611 R9000 |
| CR-M024AC2 | 24 V AC | 1SVR 405 611 R0000 |
| CR-M048AC2 | 48 V AC | 1SVR 405 611 R5000 |
| CR-M110AC2 | 110 V AC | 1SVR 405 611 R7000 |
| CR-M120AC2 | 120 V AC | 1SVR 405 611 R2000 |
| CR-M230AC2 | 230 V AC | 1SVR 405 611 R3000 |

Bold printed products = stocked products.

MIPS R5000 Microprocessor Technical Backgrounder

MIPS R5000 Microprocessor Technical Backgrounder

- Performance: SPECint95 5.5, SPECfp95 5.5
- Instruction set: MIPS-IV
- ISA compatibility: MIPS-I, MIPS-II, and MIPS-III
- Pipeline clock: 200 MHz
- System interface clock: up to 100 MHz
- Caches: 32 kB I-cache and 32 kB D-cache, each 2-way set associative
- TLB: 48 dual entries; variable page size (4 kB to 16 MB in 4x increments)
- Power dissipation: 10 watts (peak) at maximum operating frequency
- Supply voltage: min. 3.0 V, typ. 3.3 V, max. 3.6 V
- Packaging: 272-pin cavity-down Ball Grid Array (BGA); 223-pin ceramic Pin Grid Array (PGA)
- Fabrication technology: vendor specific process including 0.35 micron
- Die size: 80-90 mm² (vendor dependent)
- Number of transistors: 3.6 million (4-transistor SRAM cell), 5.0 million (6-transistor SRAM cell); of these totals, logic transistors number 800,000

Overview: This backgrounder introduces the R5000 microprocessor from MIPS Technologies, Inc. The information presented in this paper discusses new features in the R5000, i.e. how the R5000 differs from previous microprocessors from MIPS. This section provides general information on the R5000, including: Introduction; The R5000 microprocessor; Packaging; Future upgrades; Block Diagram.

Introduction to RISC: Reduced instruction-set computing (RISC) architectures differ from older complex instruction-set computing (CISC) architectures by streamlining instruction execution. The MIPS architecture, developed by MIPS Technologies, is firmly established as the leading RISC architecture today. On introduction, RISC microprocessors were used for high performance computing applications. Lately, these processors have found their way into the consumer electronics and embedded systems markets as well. -
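The TLB entry in the R5000 summary above gives a variable page size of 4 kB to 16 MB in 4x increments. As a quick arithmetic check, the Python sketch below enumerates the sizes that range implies. The function names `page_sizes` and `human` are illustrative only and do not come from the backgrounder.

```python
def page_sizes(smallest=4 * 1024, largest=16 * 1024 * 1024, step=4):
    """List page sizes in bytes from smallest to largest, multiplying by step."""
    sizes = []
    size = smallest
    while size <= largest:
        sizes.append(size)
        size *= step
    return sizes


def human(size):
    """Format a byte count as kB or MB, using binary units as in the excerpt."""
    if size >= 1024 * 1024:
        return f"{size // (1024 * 1024)} MB"
    return f"{size // 1024} kB"


if __name__ == "__main__":
    print([human(s) for s in page_sizes()])
```

The range works out to seven sizes: 4 kB, 16 kB, 64 kB, 256 kB, 1 MB, 4 MB and 16 MB.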

Selection Guides and Order References for Pluggable Interface Relays CR-P

Pluggable interface relays CR-P, CR-M, CR-U range Selection guides and order references for pluggable interface relays CR-P, CR-M, CR-U range Pluggable pcb relays CR-P Pluggable universal relays CR-U Type Rated control Order code Type Rated control Order code supply voltage supply voltage Interface relays without LED 1 c/o contact: 250 V, 16 A Pluggable miniature relays CR-M without LED Pluggable miniature relays CR-M with LED 2 c/o contacts: 250 V, 10 A CR-P012DC1 12 V DC 1SVR 405 600 R4000 2CDC 291 047 F0004 2CDC 291 045 F0004 CR-U012DC2 12 V DC 1SVR 405 621 R4000 Type Rated control Order code Type Rated control Order code CR-P024DC1 24 V DC 1SVR 405 600 R1000 supply voltage supply voltage CR-U024DC2 24 V DC 1SVR 405 621 R1000 CR-P048DC1 48 V DC 1SVR 405 600 R6000 Interface relays without LED Interface relays with LED CR-U048DC2 48 V DC 1SVR 405 621 R6000 2 c/o contacts : 250 V, 12 A 2 c/o contacts: 250 V, 12 A CR-U110DC2 110 V DC 1SVR 405 621 R8000 CR-P110DC1 110 V DC 1SVR 405 600 R8000 2CDC 291 046 F0004 CR-M012DC2 12 V DC 1SVR 405 611 R4000 CR-M012DC2L 12 V DC 1SVR 405 611 R4100 Accessories for miniature relays CR-M CR-U220DC2 220 V DC 1SVR 405 621 R9000 CR-P024AC1 24 V AC 1SVR 405 600 R0000 CR-M024DC2 24 V DC 1SVR 405 611 R1000 CR-M024DC2L 24 V DC 1SVR 405 611 R1100 CR-U024AC2 24 V AC 1SVR 405 621 R0000 CR-P048AC1 48 V AC 1SVR 405 600 R5000 CR-M048DC2 48 V DC 1SVR 405 611 R6000 CR-M048DC2L 48 V DC 1SVR 405 611 R6100 Type Version Connection Order code CR-U048AC2 48 V AC 1SVR 405 621 R5000 CR-U110AC2 110 V AC 1SVR 405 621 R7000 CR-P110AC1 -

iLORE: Discovering a Lineage of Microprocessors

iLORE: Discovering a Lineage of Microprocessors Samuel Lewis Furman Thesis submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Science in Computer Science & Applications Kirk Cameron, Chair Godmar Back Margaret Ellis May 24, 2021 Blacksburg, Virginia Keywords: Computer history, systems, computer architecture, microprocessors Copyright 2021, Samuel Lewis Furman iLORE: Discovering a Lineage of Microprocessors Samuel Lewis Furman (ABSTRACT) Researchers, benchmarking organizations, and hardware manufacturers maintain repositories of computer component and performance information. However, this data is split across many isolated sources and is stored in a form that is not conducive to analysis. A centralized repository of said data would arm stakeholders across industry and academia with a tool to more quantitatively understand the history of computing. We propose iLORE, a data model designed to represent intricate relationships between computer system benchmarks and computer components. We detail the methods we used to implement and populate the iLORE data model using data harvested from publicly available sources. Finally, we demonstrate the validity and utility of our iLORE implementation through an analysis of the characteristics and lineage of commercial microprocessors. We encourage the research community to interact with our data and visualizations at csgenome.org. iLORE: Discovering a Lineage of Microprocessors Samuel Lewis Furman (GENERAL AUDIENCE ABSTRACT) Researchers, benchmarking organizations, and hardware manufacturers maintain repositories of computer component and performance information. However, this data is split across many isolated sources and is stored in a form that is not conducive to analysis. 
A centralized repository of said data would arm stakeholders across industry and academia with a tool to more quantitatively understand the history of computing.