See Mips Run.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Mipspro C++ Programmer's Guide

MIPSproTM C++ Programmer’s Guide 007–0704–150 CONTRIBUTORS Rewritten in 2002 by Jean Wilson with engineering support from John Wilkinson and editing support from Susan Wilkening. COPYRIGHT Copyright © 1995, 1999, 2002 - 2003 Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The electronic (software) version of this document was developed at private expense; if acquired under an agreement with the USA government or any contractor thereto, it is acquired as "commercial computer software" subject to the provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto. Contractor/manufacturer is Silicon Graphics, Inc., 1600 Amphitheatre Pkwy 2E, Mountain View, CA 94043-1351. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, IRIX, O2, Octane, and Origin are registered trademarks and OpenMP and ProDev are trademarks of Silicon Graphics, Inc. in the United States and/or other countries worldwide. MIPS, MIPS I, MIPS II, MIPS III, MIPS IV, R2000, R3000, R4000, R4400, R4600, R5000, and R8000 are registered or unregistered trademarks and MIPSpro, R10000, R12000, R1400 are trademarks of MIPS Technologies, Inc., used under license by Silicon Graphics, Inc. Portions of this publication may have been derived from the OpenMP Language Application Program Interface Specification. -

Microprocessors History of Computing Nouf Assaid

MICROPROCESSORS HISTORY OF COMPUTING NOUF ASSAID 1 Table of Contents Introduction 2 Brief History 2 Microprocessors 7 Instruction Set Architectures 8 Von Neumann Machine 9 Microprocessor Design 12 Superscalar 13 RISC 16 CISC 20 VLIW 23 Multiprocessor 24 Future Trends in Microprocessor Design 25 2 Introduction If we take a look around us, we would be sure to find a device that uses a microprocessor in some form or the other. Microprocessors have become a part of our daily lives and it would be difficult to imagine life without them today. From digital wrist watches, to pocket calculators, from microwaves, to cars, toys, security systems, navigation, to credit cards, microprocessors are ubiquitous. All this has been made possible by remarkable developments in semiconductor technology enabling in the last 30 years, enabling the implementation of ideas that were previously beyond the average computer architect’s grasp. In this paper, we discuss the various microprocessor technologies, starting with a brief history of computing. This is followed by an in-depth look at processor architecture, design philosophies, current design trends, RISC processors and CISC processors. Finally we discuss trends and directions in microprocessor design. Brief Historical Overview Mechanical Computers A French engineer by the name of Blaise Pascal built the first working mechanical computer. This device was made completely from gears and was operated using hand cranks. This machine was capable of simple addition and subtraction, but a few years later, a German mathematician by the name of Leibniz made a similar machine that could multiply and divide as well. After about 150 years, a mathematician at Cambridge, Charles Babbage made his Difference Engine. -

MIPS® Architecture for Programmers Volume I-B: Introduction to the Micromips32™ Architecture, Revision 5.03

MIPS® Architecture For Programmers Volume I-B: Introduction to the microMIPS32™ Architecture Document Number: MD00741 Revision 5.03 Sept. 9, 2013 Unpublished rights (if any) reserved under the copyright laws of the United States of America and other countries. This document contains information that is proprietary to MIPS Tech, LLC, a Wave Computing company (“MIPS”) and MIPS’ affiliates as applicable. Any copying, reproducing, modifying or use of this information (in whole or in part) that is not expressly permitted in writing by MIPS or MIPS’ affiliates as applicable or an authorized third party is strictly prohibited. At a minimum, this information is protected under unfair competition and copyright laws. Violations thereof may result in criminal penalties and fines. Any document provided in source format (i.e., in a modifiable form such as in FrameMaker or Microsoft Word format) is subject to use and distribution restrictions that are independent of and supplemental to any and all confidentiality restrictions. UNDER NO CIRCUMSTANCES MAY A DOCUMENT PROVIDED IN SOURCE FORMAT BE DISTRIBUTED TO A THIRD PARTY IN SOURCE FORMAT WITHOUT THE EXPRESS WRITTEN PERMISSION OF MIPS (AND MIPS’ AFFILIATES AS APPLICABLE) reserve the right to change the information contained in this document to improve function, design or otherwise. MIPS and MIPS’ affiliates do not assume any liability arising out of the application or use of this information, or of any error or omission in such information. Any warranties, whether express, statutory, implied or otherwise, including but not limited to the implied warranties of merchantability or fitness for a particular purpose, are excluded. Except as expressly provided in any written license agreement from MIPS or an authorized third party, the furnishing of this document does not give recipient any license to any intellectual property rights, including any patent rights, that cover the information in this document. -

Mipspro™ Compiling and Performance Tuning Guide

MIPSpro™ Compiling and Performance Tuning Guide Document Number 007-2360-006 Contributors Written by Arthur Evans, Wendy Ferguson, Jed Hartman, Jackie Neider Edited by Christina Cary Production by Lorrie Williams Engineering contributions by Dave Anderson, Zaineb Asaf, Dave Babcock, Greg Boyd, Jack Carter, Ann Mei Chang, Wei-Chau Chang, David Ciemiewicz, Rune Dahl, Jim Dehnert, David Frederick, Sanjoy Ghosh, Jay Gischer, Bob Green, Seema Hiranandani, W. Wilson Ho, Marty Itzkowitz, Bhaskar Janakiraman, Woody Lichtenstein, Dror Maydan, Ajit Mayya, Ray Milkey, Michael Murphy, Bron Nelson, Andy Palay, Ron Price, John Wilkinson © Copyright 1996 Silicon Graphics, Inc.— All Rights Reserved This document contains proprietary and confidential information of Silicon Graphics, Inc. The contents of this document may not be disclosed to third parties, copied, or duplicated in any form, in whole or in part, without the prior written permission of Silicon Graphics, Inc. Restricted Rights Legend Use, duplication, or disclosure of the technical data contained in this document by the Government is subject to restrictions as set forth in subdivision (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 52.227-7013 and/or in similar or successor clauses in the FAR, or in the DOD or NASA FAR Supplement. Unpublished rights reserved under the Copyright Laws of the United States. Contractor/manufacturer is Silicon Graphics, Inc., 2011 N. Shoreline Blvd., Mountain View, CA 94039-7311. Silicon Graphics, the Silicon Graphics logo, and IRIS are registered trademarks and IRIX, CASEVision, IRIS IM, IRIS Showcase, Impressario, Indigo Magic, Inventor, IRIS-4D, POWER Series, RealityEngine, CHALLENGE, Onyx, Origin2000, and WorkShop are trademarks of Silicon Graphics, Inc. -

VAX MACRO and Instruction Set Reference Manual

VAX MACRO and Instruction Set Reference Manual Order Number: AA-LA89A-TE April 1988 This document describes the features of the VAX MACRO instruction set and assembler. It includes a detailed description of MACRO directives and instructions, as well as information about MACRO source program syntax. Revision/Update Information: This manual supersedes the VAX MACRO and Instruction Set Reference Manual, Version 4.0. Software Version: VMS Version 5.0 digital equipment corporation maynard, massachusetts April 1988 The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that may appear in this document. The software described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. No responsibility is assumed for the use or reliability of software on equipment that is not supplied by Digital Equipment Corporation or its affiliated companies. Copyright © 1988 by Digital Equipment Corporation All Rights Reserved. Printed in U.S.A. The postpaid READER'S COMMENTS form on the last page of this document requests the user's critical evaluation to assist in preparing future documentation. The following are trademarks of Digital Equipment Corporation: DEC DIBOL UNIBUS DEC/CMS EduSystem VAX DEC/MMS IAS VAXcluster DECnet MASSBUS VMS DECsystem-10 PDP VT DECSYSTEM-20 PDT DECUS RSTS DECwriter RSX t:JD~DD5lD TM ZK4515 HOW TO ORDER ADDITIONAL DOCUMENTATION DIRECT MAIL ORDERS USA 8t PUERTO Rico* CANADA INTERNATIONAL Digital Equipment Corporation Digital Equipment Digital Equipment Corporation P.O. -

MIPS IV Instruction Set

MIPS IV Instruction Set Revision 3.2 September, 1995 Charles Price MIPS Technologies, Inc. All Right Reserved RESTRICTED RIGHTS LEGEND Use, duplication, or disclosure of the technical data contained in this document by the Government is subject to restrictions as set forth in subdivision (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 52.227-7013 and / or in similar or successor clauses in the FAR, or in the DOD or NASA FAR Supplement. Unpublished rights reserved under the Copyright Laws of the United States. Contractor / manufacturer is MIPS Technologies, Inc., 2011 N. Shoreline Blvd., Mountain View, CA 94039-7311. R2000, R3000, R6000, R4000, R4400, R4200, R8000, R4300 and R10000 are trademarks of MIPS Technologies, Inc. MIPS and R3000 are registered trademarks of MIPS Technologies, Inc. The information in this document is preliminary and subject to change without notice. MIPS Technologies, Inc. (MTI) reserves the right to change any portion of the product described herein to improve function or design. MTI does not assume liability arising out of the application or use of any product or circuit described herein. Information on MIPS products is available electronically: (a) Through the World Wide Web. Point your WWW client to: http://www.mips.com (b) Through ftp from the internet site “sgigate.sgi.com”. Login as “ftp” or “anonymous” and then cd to the directory “pub/doc”. (c) Through an automated FAX service: Inside the USA toll free: (800) 446-6477 (800-IGO-MIPS) Outside the USA: (415) 688-4321 (call from a FAX machine) MIPS Technologies, Inc. -

2 MIPS Architecture 29

See MIPS® Run Second Edition ThisPageIntentionallyLeftBlank See MIPS® Run Second Edition Dominic Sweetman AMSTERDAM • BOSTON • HEIDELBERG • LONDON NEW YORK • OXFORD • PARIS • SAN DIEGO SAN FRANCISCO • SINGAPORE • SYDNEY • TOKYO Morgan Kaufmann Publishers is an imprint of Elsevier Publisher: Denise E. M. Penrose Publishing Services Manager: George Morrison Senior Project Manager: Brandy Lilly Editorial Assistant: Kimberlee Honjo Cover Design: Alisa Andreola and Hannus Design Composition: diacriTech Technical Illustration: diacriTech Copyeditor: Denise Moore Proofreader: Katherine Antonsen Indexer: Steve Rath Interior Printer: The Maple-Vail Book Manufacturing Group, Inc. Cover Printer: Phoenix Color Morgan Kaufmann Publishers is an imprint of Elsevier. 500 Sansome Street, Suite 400, San Francisco, CA 94111 This book is printed on acid-free paper. © 2007 by Elsevier Inc. All rights reserved. MIPS, MIPS I, MIPS II, MIPS III, MIPS IV, MIPS V, MIPS16, MIPS16e, MIPS-3D, MIPS32, MIPS64, 4K, 4KE, 4KEc, 4KSc, 4KSd, M4K, 5K, 20Kc, 24K, 24KE, 24Kf, 25Kf, 34K, R3000, R4000, R5000, R10000, CorExtend, MDMX, PDtrace and SmartMIPS are trademarks or registered trademarks of MIPS Technologies, Inc. in the United States and other countries, and used herein under license from MIPS Technologies, Inc. MIPS, MIPS16, MIPS32, MIPS64, MIPS-3D and SmartMIPS, among others, are registered in the U.S. Patent and Trademark Office. Linux® is the registered trademark of Linus Torvalds in the U.S. and other countries. Designations used by companies to distinguish their products are often claimed as trademarks or registered trade- marks. In all instances in which Morgan Kaufmann Publishers is aware of a claim, the product names appear in initial capital or all capital letters. -

Pluggable Interface Relays CR-M Miniature Relays

Data sheet Pluggable interface relays CR-M Miniature relays Pluggable interface relays are used for electrical isolation, amplification and signal matching between the electronic controlling, e.g. PLC (programmable logic controller), PC or field bus systems and the sensor / actuator level. They don’t use additional internal protective circuits and thus are overload-proof against short-time variations like current or voltage peaks. 2CDC 291 002 S0015 Characteristics Approvals – Standard miniature relays with mechanical status indication H ANSI/UL 508, CAN/CSA C22.2 No.14 – 13 different rated control supply voltages: F CAN/CSA C22.2 No.14 DC versions: 12 V, 24 V, 48 V, 60 V, 110 V, 125 V, 220 V J VDE (except 125 V DC devices) AC versions: 24 V, 48 V, 60 V, 110 V, 120 V, 230 V EAC – Output: 2 c/o (SPDT) contacts (12 A), 3 c/o (SPDT) R contacts (10 A) or 4 c/o (SPDT) contacts (6 A) P Lloyds Register (only devices with 4 c/o (SPDT) – Available with or without LED contacts) CCC – 4 c/o (SPDT) contact version optionally equipped with E gold contacts, LED and free wheeling diode L RMRS (except 60 V and 125 V devices) – Integrated test button for manual actuation and locking of output contacts (DC coil = blue, AC coil = orange) that Marks can be removed if necessary a CE – Cadmium-free contact material – Suited for logical and standard sockets – Width on socket: 27 mm (1.063 in) – Pluggable function modules: reverse polarity protection/ free wheeling diode, LED indication, RC elements, overvoltage protection Order data Packing unit = 10 pieces -

IDT79R4600 and IDT79R4700 RISC Processor Hardware User's Manual

IDT79R4600™ and IDT79R4700™ RISC Processor Hardware User’s Manual Revision 2.0 April 1995 Integrated Device Technology, Inc. Integrated Device Technology, Inc. reserves the right to make changes to its products or specifications at any time, without notice, in order to improve design or performance and to supply the best possible product. IDT does not assume any respon- sibility for use of any circuitry described other than the circuitry embodied in an IDT product. ITD makes no representations that circuitry described herein is free from patent infringement or other rights of third parties which may result from its use. No license is granted by implication or otherwise under any patent, patent rights, or other rights of Integrated Device Technology, Inc. LIFE SUPPORT POLICY Integrated Device Technology’s products are not authorized for use as critical components in life sup- port devices or systems unless a specific written agreement pertaining to such intended use is executed between the manufacturer and an officer of IDT. 1. Life support devices or systems are devices or systems that (a) are intended for surgical implant into the body, or (b) support or sustain life, and whose failure to perform, when properly used in accor- dance with instructions for use provided in the labeling, can be reasonably expected to result in a sig- nificant injury to the user. 2. A critical component is any component of a life support device or system whose failure to perform can be reasonably expected to cause the failure of the life support device or system, or to affect its safety or effectiveness. -

Multi-Method Dispatch Using Single-Receiver Projections

Multi-Method Dispatch Using Single-Receiver Projections Wade Holst Duane Szafron Candy Pang Yuri Leontiev g fwade,duane,candy,yuri @cs.ualberta.ca Department of Computing Science University of Alberta Edmonton, Canada Abstract A new technique for multi-method dispatch, called Single-Receiver Projections and abbre- viated SRP, is presented. It operates by performing projections onto single-receiver dispatch tables, and thus benefits from existing research into single-receiver dispatch techniques. It pro- k vides method dispatch in O(k ), where is the arity of the method. Unlike existing multi-method dispatch techniques, it also maintains the set of all applicable methods. This extra information allows SRP to support languages like CLOS that use next-method. SRP is also highly incremen- tal in nature, so it is well-suited for languages that require separate compilation and languages that allow methods and classes to be added at run-time. SRP uses less data-structure memory than all existing multi-method techniques and has the second fastest dispatch time. Furthermore, if the other techniques are extended to support all applicable methods, SRP becomes the fastest dispatch techniques. RESEARCH PAPER Subject Areas: language design and implementation, programming environments Keywords: multi-method, dispatch, object-oriented, implementation, compiler Word Count: 8722 Contact Author: Duane Szafron Department of Computing Science University of Alberta Edmonton, Canada, T6G 2H1 Phone: (780) 492-5468 Fax: (780) 492-1071 Email: [email protected] 1 1 Introduction In object-oriented languages, the method to invoke is determined not only by the method (selector) name, but also by the dynamic types of its arguments. -

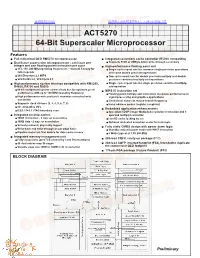

64-Bit Superscaler Microprocessor ACT5270

查询5270供应商 捷多邦,专业PCB打样工厂,24小时加急出货 ACT5270 64-Bit Superscaler Microprocessor Features ■ Full militarized QED RM5270 microprocessor ■ Integrated secondary cache controller (R5000 compatible) ■ Dual Issue superscalar microprocessor - can issue one ● Supports 512K or 2MByte block write-through secondary integer and one floating-point instruction per cycle ■ High-performance floating point unit ● 133, 150, 200 MHz operating frequencies – Consult Factory for ● Single cycle repeat rate for common single precision operations latest speeds and some double precision operations ● 260 Dhrystone2.1 MIPS ● Two cycle repeat rate for double precision multiply and double ● SPECInt95 5.0, SPECfp95 5.3 precision combined multiply-add operations ■ High performance system interface compatible with RM5260, ● Single cycle repeat rate for single precision combined multiply- R4600, R4700 and R5000 add operation ● 64-bit multiplexed system address/data bus for optimum price/ ■ MIPS IV instruction set performance with up to 100 MHz operating frequency ● Floating point multiply-add instruction increases performance in ● High performance write protocols maximize uncached write signal processing and graphics applications bandwidth ● Conditional moves to reduce branch frequency ● Supports clock divisors (2, 3, 4, 5, 6, 7, 8) ● Index address modes (register + register) ● 5V compatible I/O’s ■ Embedded application enhancements ● IEEE 1149.1 JTAG boundary scan ● Specialized DSP integer Multiply-Accumulate instruction and 3 ■ Integrated on-chip caches operand multiply instruction -

On the Efficacy of Source Code Optimizations for Cache-Based Systems

On the efficacy of source code optimizations for cache-based systems Rob F. Van der Wijngaart, MRJ Technology Solutions, NASA Ames Research Center, Moffett Field, CA 94035 William C. Saphir, Lawrence Berkeley National Laboratory, Berkeley, CA 94720 Abstract. Obtaining high performance without machine-specific tuning is an important goal of scientific application programmers. Since most scientific processing is done on commodity microprocessors with hierarchical memory systems, this goal of "portable performance" can be achieved if a common set of optimization principles is effective for all such systems. It is widely believed, or at least hoped, that portable performance can be realized. The rule of thumb for optimization on hierarchical memory systems is to maximize tem- poral and spatial locality of memory references by reusing data. and minimizing memory access stride. We investigate the effects of a number of optimizations on the performance of three related kernels taken from a computational fluid dynamics application. Timing the kernels on a range of processors, we observe an inconsistent and often counterintuitive im- pact of the optimizations on performance. In particular, code variations that have a positive impact on one architecture can have a negative impact on another, and variations expected to be unimportant can produce large effects. Moreover, we find that cache miss rates--as reported by a cache simulation tool, and con- firmed by hardware counters--only partially explain the results. By contrast, the compiler- generated assembly code provides more insight by revealing the importance of processor- specific instructions and of compiler maturity, both of which strongly, and sometimes unex- pectedly, influence performance.