GPUs and GPU Programming

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


PowerVR SGX Series5XT IP Core Family

The PowerVR™ SGX Series5XT Graphics Processing Unit (GPU) IP core family is a series of highly efficient graphics acceleration IP cores that meet the multimedia requirements of the next generation of consumer, communications and computing applications.

PowerVR SGX Series5XT architecture is fully scalable for a wide range of area and performance requirements, enabling it to target markets from low cost feature-rich mobile multimedia products to very high performance consoles and computing devices.

The family incorporates the second-generation Universal Scalable Shader Engine (USSE2™), with a feature set that exceeds the requirements of OpenGL 2.0 and Microsoft Shader Model 3, enabling 2D, 3D and general purpose (GP-GPU) processing in a single core.

Features

• Most comprehensive IP core family and roadmap in the industry
• USSE2 delivers twice the peak floating point and instruction throughput of Series5 USSE
• YUV and colour space accelerators for improved performance
• Upgraded PowerVR Series5XT shader-driven tile-based deferred rendering (TBDR) architecture
• Multi-processor options enable scalability to higher performance
• Support for all industry standard mobile and desktop graphics APIs and operating systems
• Fully backwards compatible with PowerVR MBX and SGX Series5

PowerVR SGX Family

• Series5XT: SGX543MP1-16, SGX544MP1-16, SGX554MP1-16
• Series5: SGX520, SGX530, SGX531, SGX535, SGX540, SGX545

Benefits

• Extensive product line supports all area/performance requirements

Multi-standard API and OS: OpenGL -

In re Graphics Processing Units Antitrust Litigation: Third Consolidated and Amended Class Action Complaint

Case M:07-cv-01826-WHA Document 249 Filed 11/08/2007 Page 1 of 34

BOIES, SCHILLER & FLEXNER LLP
WILLIAM A. ISAACSON (pro hac vice)
5301 Wisconsin Ave. NW, Suite 800
Washington, D.C. 20015
Telephone: (202) 237-2727
Facsimile: (202) 237-6131
Email: [email protected]

BOIES, SCHILLER & FLEXNER LLP
JOHN F. COVE, JR. (CA Bar No. 212213)
DAVID W. SHAPIRO (CA Bar No. 219265)
KEVIN J. BARRY (CA Bar No. 229748)
1999 Harrison St., Suite 900
Oakland, CA 94612
Telephone: (510) 874-1000
Facsimile: (510) 874-1460
Email: [email protected] [email protected] [email protected]

BOIES, SCHILLER & FLEXNER LLP
PHILIP J. IOVIENO (pro hac vice)
ANNE M. NARDACCI (pro hac vice)
10 North Pearl Street, 4th Floor
Albany, NY 12207
Telephone: (518) 434-0600
Facsimile: (518) 434-0665
Email: [email protected] [email protected]

Attorneys for Plaintiff Jordan Walker
Interim Class Counsel for Direct Purchaser Plaintiffs

UNITED STATES DISTRICT COURT
NORTHERN DISTRICT OF CALIFORNIA

IN RE GRAPHICS PROCESSING UNITS ANTITRUST LITIGATION
Case No.: M:07-CV-01826-WHA
MDL No. 1826

This Document Relates to: ALL DIRECT PURCHASER ACTIONS

THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT FOR VIOLATION OF SECTION 1 OF THE SHERMAN ACT, 15 U.S.C. § 1

JURY TRIAL DEMANDED

Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike’s Computer Services, Fred Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the United States, bring this action for damages and injunctive relief under the federal antitrust laws against Defendants named herein, demanding trial by jury, and complaining and alleging as follows:

NATURE OF THE CASE

1. -

Driver Riva TNT2 64

The following products are supported by the drivers: TNT2, TNT2 Pro, TNT2 Ultra, TNT2 Model 64 (M64), TNT2 Model 64 (M64) Pro, Vanta, Vanta LT, GeForce. The NVIDIA TNT2™ was the first chipset to offer a bit frame buffer for better quality visuals at higher resolutions, bit color for TNT2 M64 Memory Speed. NVIDIA no longer provides hardware or software support for the NVIDIA Riva TNT GPU. The last Forceware unified display driver which. version now. NVIDIA RIVA TNT2 Model 64/Model 64 Pro is the first family of high performance. Drivers > Video & Graphic Cards. Feedback. NVIDIA RIVA TNT2 Model 64/Model 64 Pro: The first chipset to offer a bit frame buffer for better quality visuals Subcategory, Video Drivers. Update your computer's drivers using DriverMax, the free driver update tool - Display Adapters - NVIDIA - NVIDIA RIVA TNT2 Model 64/Model 64 Pro Computer. (In Windows 7 RC1 there were built-in TNT2 drivers). http://kemovitra. www.doorway.ru Use the links on this page to download the latest version of NVIDIA RIVA TNT2 Model 64/Model 64 Pro (Microsoft Corporation) drivers. All drivers available for. NVIDIA RIVA TNT2 Model 64/Model 64 Pro - Driver Download. Updating your drivers with Driver Alert can help your computer in a number of ways. From adding. Nvidia RIVA TNT2 M64 specs and specifications. Price comparisons for the Nvidia RIVA TNT2 M64 and also where to download RIVA TNT2 M64 drivers. Windows 7 and Windows Vista both fail to recognize the Nvidia Riva TNT2 (Model 64/Model 64 Pro) which means you are restricted to a low. -

Graphics Processing Unit (GPU) Computing

This section describes the graphics processing unit (GPU) computing feature of OptiSystem.

Note: The GPU computing feature is only configurable with OptiSystem Version 11 (or higher).

What is GPU computing?

GPU computing, or GPGPU, takes advantage of a computer’s graphics processing card to speed up general purpose scientific and engineering computing tasks.

Compute Unified Device Architecture (CUDA) implementation for OptiSystem

NVIDIA revolutionized GPGPU and accelerated computing when it introduced a new parallel computing architecture: Compute Unified Device Architecture (CUDA). CUDA is both a hardware and software architecture for issuing and managing computations within the GPU, thus allowing it to operate as a generic data-parallel computing device. CUDA allows the programmer to take advantage of the parallel computing power of an NVIDIA graphics card to perform general purpose computations.

OptiSystem CUDA implementation

The OptiSystem model for GPU computing involves using a central processing unit (CPU) and GPU together in a heterogeneous co-processing computing model. The sequential part of the application runs on the CPU and the computationally-intensive part is accelerated by the GPU. In the OptiSystem GPU programming model, the application has been modified to map the compute-intensive kernels to the GPU. The remainder of the application remains within the CPU.

CUDA parallel computing architecture

The NVIDIA CUDA parallel computing architecture is enabled on GeForce®, Quadro®, and Tesla™ products. Whereas GeForce and Quadro are designed for consumer graphics and professional visualization respectively, the Tesla product family is designed ground-up for parallel computing and offers exclusive computing features, and is the recommended choice for the OptiSystem GPU. -
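The co-processing split described in this excerpt (sequential part on the CPU, compute-intensive kernels on the GPU) bounds how much the whole application can gain. A minimal sketch using Amdahl's law follows. The function name and the example figures (90% of runtime in kernels, 20x kernel speedup) are hypothetical and do not come from OptiSystem, and the model ignores host-to-device transfer time.

```python
def overall_speedup(parallel_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: whole-application speedup when only the
    compute-intensive fraction of the runtime is accelerated."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if kernel_speedup <= 0.0:
        raise ValueError("kernel_speedup must be positive")
    sequential_fraction = 1.0 - parallel_fraction  # part that stays on the CPU
    return 1.0 / (sequential_fraction + parallel_fraction / kernel_speedup)


# Hypothetical workload: 90% of the runtime sits in kernels mapped to the GPU,
# and those kernels run 20x faster there than on the CPU.
example = overall_speedup(0.9, 20.0)
print(f"overall speedup: {example:.2f}x")
```

Under these assumed figures the application as a whole runs a little under 7x faster, not 20x, because the 10% that remains on the CPU dominates once the kernels are fast.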

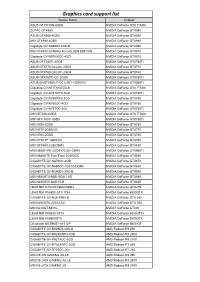

Graphics Card Support List

| Device Name | Chipset |
| --- | --- |
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |

GIGABYTE GV-N26-896H-B -

NVIDIA Quadro RTX for V-Ray Next

Image courtesy of © Dabarti Studio, rendered with V-Ray GPU

Quadro RTX Accelerates V-Ray Next GPU Rendering

V-Ray Next GPU taps into the power of NVIDIA® Quadro® RTX™ to speed up production rendering with dedicated RT Cores for ray tracing and Tensor Cores for AI-accelerated denoising.¹ With up to 18X faster rendering than CPU-based solutions and enhanced performance with NVIDIA NVLink™, V-Ray Next GPU with RTX support provides incredible performance improvements for your rendering workloads.

Solutions for V-Ray Next GPU

NVIDIA Quadro® provides a wide range of RTX-enabled solutions for desktop, mobile, server-based rendering, and virtual workstations with NVIDIA Quadro Virtual Data Center Workstation (Quadro vDWS) software.² With up to 96 gigabytes (GB) of GPU memory available,³ Quadro RTX provides the power you need for the largest professional graphics and rendering workloads.

“Accelerating artist productivity is always our top priority, so we’re quick to take advantage of the latest ray-tracing hardware breakthroughs. By supporting NVIDIA RTX™ in V-Ray GPU, we’re bringing our customers an exciting new boost in their GPU production rendering speeds.” – Phillip Miller, Vice President, Product Management, Chaos Group

[Figure: Benchmark: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs. Relative performance: Quadro RTX 6000 x2, 18.85; Quadro RTX 6000, 10.4; Quadro RTX 4000, 7.83; CPU, 1.]

Desktop performance: Tests run on 1x Xeon Gold 6154 3 GHz (3.7 GHz Turbo), 64 GB DDR4 RAM, Win10 x64, Driver version 441.28. Performance results may vary depending on the scene.

NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group. -

NVIDIA Launches Tegra X1 Mobile Super Chip

NVIDIA Launches Tegra X1 Mobile Super Chip Maxwell GPU Architecture Delivers First Teraflops Mobile Processor, Powering Deep Learning and Computer Vision Applications NVIDIA today unveiled Tegra® X1, its next-generation mobile super chip with over one teraflops of processing power – delivering capabilities that open the door to unprecedented graphics and sophisticated deep learning and computer vision applications. Tegra X1 is built on the same NVIDIA Maxwell™ GPU architecture rolled out only months ago for the world's top-performing gaming graphics card, the GeForce® GTX 980. The 256-core Tegra X1 provides twice the performance of its predecessor, the Tegra K1, which is based on the previous-generation Kepler™ architecture and debuted at last year's Consumer Electronics Show. Tegra processors are built for embedded products, mobile devices, autonomous machines and automotive applications. Tegra X1 will begin appearing in the first half of the year. It will be featured in the newly announced NVIDIA DRIVE™ car computers. DRIVE PX is an auto-pilot computing platform that can process video from up to 12 onboard cameras to run capabilities providing Surround-Vision, for a seamless 360-degree view around the car, and Auto-Valet, for true self-parking. DRIVE CX is a complete cockpit platform designed to power the advanced graphics required across the increasing number of screens used for digital clusters, infotainment, head-up displays, virtual mirrors and rear-seat entertainment. "We see a future of autonomous cars, robots and drones that see and learn, with seeming intelligence that is hard to imagine," said Jen-Hsun Huang, CEO and co-founder, NVIDIA. -

GPU-Based Deep Learning Inference

Whitepaper. GPU-Based Deep Learning Inference: A Performance and Power Analysis. November 2015

Contents

• Abstract
• Introduction
• Inference versus Training
• GPUs Excel at Neural Network Inference
  • Inference Optimizations in Caffe and cuDNN 4
  • Experimental Setup and Testing Methodology
  • Inference on Small and Large GPUs
• Conclusion
• References

Abstract

Deep learning methods are revolutionizing various areas of machine perception. On a -

A Configurable General Purpose Graphics Processing Unit for Power, Performance, and Area Analysis

Iowa State University Capstones, Theses and Dissertations. Creative Components. Summer 2019

A configurable general purpose graphics processing unit for power, performance, and area analysis

Garrett Lies

Follow this and additional works at: https://lib.dr.iastate.edu/creativecomponents

Part of the Digital Circuits Commons

Recommended Citation: Lies, Garrett, "A configurable general purpose graphics processing unit for power, performance, and area analysis" (2019). Creative Components. 329. https://lib.dr.iastate.edu/creativecomponents/329

This Creative Component is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Creative Components by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected].

A Configurable General Purpose Graphics Processing Unit for Power, Performance, and Area Analysis, by Garrett Joseph Lies

A Creative Component submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE

Major: Computer Engineering (Very-Large Scale Integration)

Program of Study Committee: Joseph Zambreno, Major Professor

The student author, whose presentation of the scholarship herein was approved by the program of study committee, is solely responsible for the content of this dissertation/thesis. The Graduate College will ensure this dissertation/thesis is globally accessible and will not permit alterations after a degree is conferred.

Iowa State University, Ames, Iowa, 2019

Copyright © Garrett Joseph Lies, 2019. All rights reserved.

TABLE OF CONTENTS

• LIST OF TABLES
• LIST OF FIGURES
• ACKNOWLEDGMENTS
• ABSTRACT
• CHAPTER 1. -

NVIDIA CUDA Getting Started Guide for Linux

DU-05347-001_v7.0 | March 2015

Installation and Verification on Linux Systems

TABLE OF CONTENTS

• Chapter 1. Introduction
  • 1.1. System Requirements
    • 1.1.1. x86 32-bit Support
  • 1.2. About This Document
• Chapter 2. Pre-installation Actions
  • 2.1. Verify You Have a CUDA-Capable GPU
  • 2.2. Verify You Have a Supported Version of Linux
  • 2.3. Verify the System Has gcc Installed
  • 2.4. Choose an Installation Method
  • 2.5. Download the NVIDIA CUDA Toolkit
  • 2.6. Handle Conflicting Installation Methods
• Chapter 3. Package Manager Installation
  • 3.1. Overview -

On the Energy Efficiency of Graphics Processing Units for Scientific Computing

S. Huang, S. Xiao, W. Feng. Department of Computer Science, Virginia Tech. {huangs, shucai, wfeng}@vt.edu

Abstract

The graphics processing unit (GPU) has emerged as a computational accelerator that dramatically reduces the time to discovery in high-end computing (HEC). However, while today’s state-of-the-art GPU can easily reduce the execution time of a parallel code by many orders of magnitude, it arguably comes at the expense of significant power and energy consumption. For example, the NVIDIA GTX 280 video card is rated at 236 watts, which is as much as the rest of a compute node, thus requiring a 500-W power supply. As a consequence, the GPU has been viewed as a “non-green” computing solution.

This paper seeks to characterize, and perhaps debunk, the notion of a “power-hungry GPU” via an empirical study of the performance, power, and energy characteristics of GPUs for scientific computing.

... massively parallel, multi-threaded multiprocessor design, combined with an appropriate data-parallel mapping of an application to it.

The emergence of more general-purpose programming environments, such as Brook+ [1] and CUDA [2], has made it easier to implement large-scale applications on GPUs. The CUDA programming model from NVIDIA was created for developing applications on NVIDIA’s GPU cards, such as the GeForce 8 series. Similarly, Brook+ supports stream processing on the AMD/ATi GPU cards. For the purposes of this paper, we use CUDA atop an NVIDIA GTX 280 video card for our scientific computation.

GPGPU research has focused predominantly on accelerating scientific applications. This paper not only accelerates scientific applications via GPU computing, but it also does so in the context of characterizing -
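The trade-off raised in this abstract, higher power draw against shorter runtime, comes down to energy = power × time. The sketch below works through that arithmetic. Only the 236 W GPU rating is taken from the abstract. Treating the rest of the node as also drawing 236 W follows the abstract's "as much as the rest of a compute node"; the 100 s runtime, the 10x speedup, and the assumption that every component draws its rated power for the whole run are hypothetical simplifications, not measurements from the paper.

```python
def energy_joules(power_watts: float, runtime_seconds: float) -> float:
    """Energy consumed at a constant power draw."""
    return power_watts * runtime_seconds


def break_even_speedup(cpu_node_watts: float, gpu_watts: float) -> float:
    """Speedup above which the CPU+GPU node uses less energy than the CPU-only node."""
    return (cpu_node_watts + gpu_watts) / cpu_node_watts


GPU_WATTS = 236.0        # rated power of the GTX 280, from the abstract
CPU_NODE_WATTS = 236.0   # assumption: the rest of the node draws as much as the GPU
CPU_RUNTIME_S = 100.0    # hypothetical CPU-only runtime
SPEEDUP = 10.0           # hypothetical GPU speedup

cpu_only = energy_joules(CPU_NODE_WATTS, CPU_RUNTIME_S)
with_gpu = energy_joules(CPU_NODE_WATTS + GPU_WATTS, CPU_RUNTIME_S / SPEEDUP)

print(f"CPU only: {cpu_only:.0f} J, CPU+GPU: {with_gpu:.0f} J")
print(f"break-even speedup: {break_even_speedup(CPU_NODE_WATTS, GPU_WATTS):.1f}x")
```

Under these assumptions the GPU doubles the node's power draw, so any speedup above 2x lowers the total energy for the run; real results depend on measured rather than rated power.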

GPU Programming Using C++ AMP

Petrika Manika, Elda Xhumari, Julian Fejzaj. Dept. of Informatics, University of Tirana. [email protected] [email protected] [email protected]

Abstract

Nowadays, a challenge for programmers is to make their programs better. The word "better" means simpler, more portable and much faster in execution. Heterogeneous computing is a new methodology in the computer science field. GPGPU programming is a new and challenging technique which is used for solving problems with a data-parallel nature. In this paper we describe this new programming methodology with a focus on GPU programming using the C++ AMP language, and what kinds of problems are suitable for acceleration using these parallel techniques. Finally we describe the solution for a simple problem using C++ AMP and the advantages of this solution.

... perform computation in applications traditionally handled by the central processing unit (CPU).¹ The architecture of graphics processing units (GPUs) is very well suited for data-parallel problems. They support extremely high throughput through many parallel processing units and very high memory bandwidth. For problems that match the GPU architecture well, it is common to easily achieve a 2× speedup over a CPU implementation of the same problem, and tuned implementations can outperform the CPU by a factor of 10 to 100. Programming these processors, however, remains a challenge because the architecture differs so significantly from the CPU. This paper describes the benefits of GPU programming using the C++ AMP language, and what kinds of problems are suitable for acceleration using these parallel techniques.
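To make "data-parallel" concrete, here is a minimal CPU-only sketch in Python rather than C++ AMP. The names `add_kernel` and `run_over_index_space` are hypothetical, and vector addition is an assumed example; the excerpt does not say which simple problem the paper solves. The point is that each index is processed independently, so the order of execution does not affect the result, which is the property that lets a GPU run all indices at once.

```python
import random


def add_kernel(i, a, b, out):
    """Per-element work: reads and writes index i only, so there is
    no dependency between different indices."""
    out[i] = a[i] + b[i]


def run_over_index_space(kernel, n, *buffers, order=None):
    """Apply the kernel to every index in 0..n-1, in the given order.
    A GPU runtime would dispatch these indices in parallel instead."""
    indices = list(range(n)) if order is None else order
    for i in indices:
        kernel(i, *buffers)


a = [1, 2, 3, 4, 5]
b = [10, 20, 30, 40, 50]

# Run once in natural order.
in_order = [0] * len(a)
run_over_index_space(add_kernel, len(a), a, b, in_order)

# Run again in a shuffled order; a data-parallel kernel gives the same answer.
shuffled = list(range(len(a)))
random.Random(0).shuffle(shuffled)
any_order = [0] * len(a)
run_over_index_space(add_kernel, len(a), a, b, any_order, order=shuffled)

print(in_order == any_order)
```

C++ AMP expresses the same shape with `parallel_for_each` over an extent, where the lambda body plays the role of `add_kernel`. A loop whose iteration i reads the result of iteration i-1 would not pass this order test and is a poor fit for the GPU without restructuring.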