Simulations in Video Games

Recommended publications

Subject: : Amiga Emulation Topic: : Runinuae Beta for Testing JIT Re: Runinuae Beta for Testing JIT Author: : Raziel Date: : 2014/8/10 14:53:18 URL

Subject: Amiga Emulation
Topic: RunInUAE beta for testing JIT
Re: RunInUAE beta for testing JIT
Author: Raziel
Date: 2014/8/10 14:53:18
URL:

@ChrisH Would it help if I post problems from WHDLoad installed games? Ah well, nothing to do, so here is my collection:

General:
- Setting fastmem_size to an odd number (1, 3, 5, 7) or to 6 results in no fastmem at all (actually only 2, 4, and 8 work); everything beyond 8 also results in no fastmem at all.
- Copying something TO the emulated Workbench installation, e.g. installing something that installs fonts or such, will still freeze the whole emulation system AND OS4.1. That is easy to check: just try to copy something to the Workbench partition after you "Load Workbench" in RunInUAE.

Games:
- All non bold work great

Before you ask, yes, I did try enhancing the RAM to "cure" the problems below; it didn't help.

Cannonfodder CD32 (Cursor Glitch in top left hand of Hills Screen --> the cursor gets "fuzzy") Cannonfodder 2 Elite (Cursor gets partly erased when moving upwards on any screen) Frontier - Elite II Millennium 2.2 Deuteros Pinball Dreams (jumpy sound/music) Pinball Fantasies CD32 Pinball Illusions CD32 Slam Tilt AGA Superfrog CD32 Traps 'n Treasures Wing Commander CD32 Altered Destiny Les Manley - Search for the King In Shadow of Time Nightlong 68k (Software Failure on start) Realms of Arkania Sixth Sense Investigation 1 / 2 Universe CD32 Bundesliga Manager Hattrick (Claims to have a corrupted disk to save to and forever asks for a proper disk afterwards [loading/saving])

Why John Madden Football Has Been Such a Success

Why John Madden Football Has Been Such A Success Kevin Dious STS 145: The History of Computer Game Design: Technology, Culture, Business Professor: Henry Lowood March 18, 2002 Case History Athletic competition has been a part of human culture since its inception. One of the most popular and successful sports of today’s culture is American football. This sport has grown into a worldwide phenomenon and, like the video game industry, has become a multi-billion-dollar entity. It was only a matter of time until game developers teamed up with the National Football League (NFL) to bring this magnificent sport to video game players across the globe. There are few, if any, game genres that are as popular as sports games. With the ever-increasing popularity of the NFL, it was inevitable that football games would become one of the most lucrative segments of the sports game genre. Of all the companies making football games for consoles and PCs during the late 1980s and 1990s, one clearly stood, and remains, above the rest: Electronic Arts. EA Sports, the sports division of Electronic Arts, revolutionized not only football games but also the entire sports game genre. Before Electronic Arts entered the sports realm, league licenses, celebrity endorsements, and re-releases of games were all unheard of. EA was one of the first companies to release the same game annually, creating several series of games that are thriving even today.

The Data Behind Netflix's Q3 Beat Earnings

The Data Behind Netflix’s Q3 Beat Earnings

What Happened
-- Earnings per share: $1.47 vs. $1.04 expected
-- International paid subscriber additions: 6.26 million vs. 6.05 million expected
-- Stock price surged more than 8% in extended trading

Grow your mobile business

Apptopia’s data was a strong leading indicator of new growth
-- Netflix increased new installs of its mobile app 8.4% YOY and 13.5% QOQ.
-- New international installs of Netflix are up 11.3% YOY and 17.3% QOQ.
-- New domestic installs of Netflix are down 3.6% YOY and 8.3% QOQ.

Other Indicators of Netflix’s Q3 2019 performance (growth YoY, Q3 2018 - 19)
-- Netflix reported, paid subscribers: +6.4% domestic, +21.4% global
-- Apptopia estimate, time spent in app: +7.2% domestic, +15.1% global
-- Apptopia estimate, mobile app sessions: +7.7% domestic, +16% global
-- Netflix reported adding 517k domestic paid subscribers vs. 802k expected
-- Its growth this quarter clearly came from international markets
-- More specifically, according to Apptopia, it came from Vietnam, Indonesia, Saudi Arabia and Japan

We’ve Got You Covered
Coverage includes 10+ global stock exchanges and more than 3,000 tickers. Restaurants & Food Travel Internet & Media Retail CHIPOTLE MEXICAN CRACKER BARREL ALASKA AIR GROUP AMERICAN AIRLINES COMCAST DISH NETWORK ALIBABA GROUP AMBEST BUY

Cloud-Based Visual Discovery in Astronomy: Big Data Exploration Using Game Engines and VR on EOSC

Novel EOSC services for Emerging Atmosphere, Underwater and Space Challenges 2020 October Cloud-Based Visual Discovery in Astronomy: Big Data Exploration using Game Engines and VR on EOSC Game engines are continuously evolving toolkits that assist in communicating with underlying frameworks and APIs for rendering, audio and interfacing. A game engine's core functionality is its collection of libraries and its user interface, which assist a developer in creating an artifact that can render and play sounds seamlessly while handling collisions, updating physics, and processing AI and player inputs in a live, continuously looping mechanism. Game engines support scripting through, e.g., C# in Unity [1] and Blueprints in Unreal, making them accessible to wide audiences of non-specialists. Some game companies modify an engine for a game until it becomes bespoke, e.g. Star Citizen [3], which was being built on Amazon’s Lumberyard [4] until the engine had been modified enough for its developers to claim it as the bespoke “Star Engine”. On the opposite side of the spectrum, a game engine such as Frostbite [5], which specialised in dynamic destruction, bipedal first-person animation and online multiplayer, was refactored into a versatile engine used for many different types of games [6]. Currently, there are over 100 game engines (see examples in Figure 1a). Game engines can be classified in a variety of ways, e.g. [7] outlines criteria based on requirements for knowledge of programming, reliance on popular web technologies, accessibility in terms of open source software and user customisation, and deployment in professional settings.
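The continuously looping mechanism described above can be illustrated with a minimal sketch. This is not the loop of any particular engine: the `World` and `Ball` types, the fixed 60 Hz time step, the scripted "kick" input and the bounce factor are all assumptions made for the example, and the AI step is omitted for brevity.

```python
from dataclasses import dataclass, field

GRAVITY = -9.81      # m/s^2, acting on the vertical axis
DT = 1.0 / 60.0      # assumed fixed time step (60 updates per second)


@dataclass
class Ball:
    y: float = 10.0   # height above the floor
    vy: float = 0.0   # vertical velocity


@dataclass
class World:
    ball: Ball = field(default_factory=Ball)
    log: list = field(default_factory=list)   # stands in for a renderer


def process_input(world: World, frame: int) -> None:
    # Hypothetical scripted input: the player kicks the ball upward on frame 30.
    if frame == 30:
        world.ball.vy += 5.0


def update_physics(world: World, dt: float) -> None:
    # Semi-implicit Euler integration: update velocity, then position.
    ball = world.ball
    ball.vy += GRAVITY * dt
    ball.y += ball.vy * dt


def handle_collisions(world: World) -> None:
    # Collide with the floor at y = 0 and lose half the speed on each bounce.
    ball = world.ball
    if ball.y < 0.0:
        ball.y = 0.0
        ball.vy = -ball.vy * 0.5


def render(world: World, frame: int) -> None:
    # A real engine would draw here; the sketch only records the state.
    world.log.append((frame, round(world.ball.y, 3)))


def run(frames: int) -> World:
    world = World()
    for frame in range(frames):
        process_input(world, frame)
        update_physics(world, DT)
        handle_collisions(world)
        render(world, frame)
    return world
```

Calling `run(120)` simulates two seconds of game time; every stage runs once per iteration, in the same order, which is the property the excerpt attributes to an engine's core loop.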

Complaint to Bioware Anthem Not Working

Complaint To Bioware Anthem Not Working Vaclav is unexceptional and denaturalising high-up while fruity Emmery Listerising and marshallings. Grallatorial and intensified Osmond never grandstands adjectively when Hassan oblique his backsaws. Andrew caucus her censuses downheartedly, she poind it wherever. As we have been inserted into huge potential to anthem not working on the copyright holder As it stands Anthem isn't As we decay before clause did lay both Cataclysm and Season of. Anthem developed by game studio BioWare is said should be permanent a. It not working for. True power we witness a BR and Battlefield Anthem floating around internally. Confirmed ANTHEM and receive the Complete Redesign in 2020. Anthem BioWare's beleaguered Destiny-like looter-shooter is getting. Play BioWare's new loot shooter Anthem but there's no problem the. Defense and claimed that no complaints regarding the crunch culture. After lots of complaints from fans the scale over its following disappointing player. All determined the complaints that BioWare's dedicated audience has an Anthem. 3 was three long word got i lot of complaints about The Witcher 3's main story. Halloween trailer for work at. Anthem 'we now PERMANENTLY break your PS4' as crash. Anthem has problems but loading is the biggest one. Post seems to work as they listen, bioware delivered every new content? Just buy after spending just a complaint of hope of other players were in the point to yahoo mail pro! 11 Anthem Problems How stitch Fix Them Gotta Be Mobile. The community has of several complaints about the state itself. -

Printmgr File

UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 Form 10-K ☒ ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the fiscal year ended March 31, 2019 OR ☐ TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the transition period from to Commission File No. 000-17948 ELECTRONIC ARTS INC. (Exact name of registrant as specified in its charter) Delaware (State or other jurisdiction of incorporation or organization) 94-2838567 (I.R.S. Employer Identification No.) 209 Redwood Shores Parkway, Redwood City, California (Address of principal executive offices) 94065 (Zip Code) Registrant’s telephone number, including area code: (650) 628-1500 Securities registered pursuant to Section 12(b) of the Act: Title of Each Class: Common Stock, $0.01 par value; Trading Symbol: EA; Name of Each Exchange on Which Registered: NASDAQ Global Select Market Securities registered pursuant to Section 12(g) of the Act: None Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes ☒ No ☐ Indicate by check mark if the registrant is not required to file reports pursuant to Section 13 or Section 15(d) of the Act. Yes ☐ No ☒ Indicate by check mark whether the registrant (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days.

EA SPORTS™ MADDEN NFL™ FOOTBALL Cabinet Settings

EA SPORTS™ MADDEN NFL SEASON 2 Cabinet Settings Worksheet Write your cabinet settings on this sheet for easy reference. Print more copies from the EA SPORTS™ MADDEN NFL™ FOOTBALL page of our Website at http://service.globalvr.com. Cabinet Serial Number: ______________________ Operator Menu Item Setting Screen Game Settings Credits / Free play Credit Free play Attract Credit Display Money Credits Coin Audit Credit Display OFF ON Credits per Money Credits per Exhibition Quarter Credits per Training Game Credits per Tournament Game Credits per Career Game Credits per Competition Player Credits per Smart Card 1 Player Quarter Length 2 Player Quarter Length 3 Player Quarter Length 4 Player Quarter Length Audio Settings Master Volume Attract Volume Game Volume Registration Cabinet ID Serial # Version Notes: 040-0128-01 Rev. A 9/28/2006 © 2006 Electronic Arts Inc. Electronic Arts, EA, EA SPORTS and the EA SPORTS logo are trademarks or registered trademarks of Electronic Arts Inc. in the U.S. and/or other countries. All Rights Reserved. The mark “John Madden” and the name, likeness and other attributes of John Madden reproduced on this product are trademarks or other intellectual property of Red Bear, Inc. or John Madden, are subject to license to Electronic Arts Inc., and may not be otherwise used in whole or in part without the prior written consent of Red Bear or John Madden. © 2006 NFL Properties LLC. Team names/logos are trademarks of the teams indicated. All other NFL-related trademarks are trademarks of the National Football League. Officially licensed product of PLAYERS INC.

Terrain Rendering in Frostbite Using Procedural Shader Splatting

Chapter 5: Terrain Rendering in Frostbite Using Procedural Shader Splatting

Johan Andersson

Advanced Real-Time Rendering in 3D Graphics and Games Course – SIGGRAPH 2007

5.1 Introduction

Many modern games take place in outdoor environments. While there has been much research into geometrical LOD solutions, the texturing and shading solutions used in real-time applications are usually quite basic and inflexible, which often results in a lack of detail either up close, at a distance, or at high angles. One of the more common approaches for terrain texturing is to combine low-resolution unique color maps (Figure 1) for low-frequency details with multiple tiled detail maps for high-frequency details that are blended in at a certain distance to the camera. This gives artists good control over the lower frequencies, as they can paint or generate the color maps however they want. For the detail mapping there are multiple methods that can be used. In Battlefield 2, a 256 m² patch of the terrain could have up to six different tiling detail maps that were blended together using one or two three-component unique detail mask textures (Figure 4) that controlled the visibility of the individual detail maps. Artists would paint or generate the detail masks just as for the color map.

Figure 1. Terrain color map from Battlefield 2

Figure 2. Overhead view of Battlefield: Bad Company landscape

Figure 3. Close up view of Battlefield: Bad Company landscape

There are a couple of potential problems with all these traditional terrain texturing and rendering methods going forward that we wanted to try to solve or improve on when developing our Frostbite engine.
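The traditional approach described in the introduction (a unique color map, tiled detail maps, and mask textures that control each detail map's visibility) can be sketched per texel as follows. This is a simplified illustration rather than the Battlefield 2 or Frostbite shader: the function names, the grayscale detail samples, the weight normalisation, the "modulate 2x" combine in which a detail value of 0.5 leaves the color unchanged, and the fade distances are all assumptions.

```python
def blend_detail(mask_weights, detail_samples):
    """Combine detail-map samples, using the mask weights as visibility.

    Returns a neutral 0.5 when no detail map is visible (assumed convention).
    """
    total = sum(mask_weights)
    if total == 0.0:
        return 0.5
    weighted = sum(w * d for w, d in zip(mask_weights, detail_samples))
    return weighted / total


def detail_fade(distance, fade_start, fade_end):
    """1.0 close to the camera, 0.0 beyond fade_end, linear in between."""
    if distance <= fade_start:
        return 1.0
    if distance >= fade_end:
        return 0.0
    return (fade_end - distance) / (fade_end - fade_start)


def shade_texel(base_color, mask_weights, detail_samples, distance,
                fade_start=50.0, fade_end=150.0):
    """Modulate the low-frequency base color by the blended detail value."""
    detail = blend_detail(mask_weights, detail_samples)
    fade = detail_fade(distance, fade_start, fade_end)
    # Detail 0.5 is neutral; brighter or darker detail scales the base color.
    factor = 1.0 + fade * (2.0 * detail - 1.0)
    return tuple(min(1.0, max(0.0, c * factor)) for c in base_color)
```

For example, `shade_texel((0.4, 0.5, 0.3), [1.0, 0.0, 0.0], [0.9, 0.5, 0.1], distance=10.0)` brightens the base color using only the first detail map, while the same call with `distance=1000.0` returns the base color unchanged because the detail contribution has fully faded out.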

Postal Regulatory Commission Submitted 12/26/2013 8:00:00 AM Filing ID: 88648 Accepted 12/26/2013

BEFORE THE POSTAL REGULATORY COMMISSION WASHINGTON, D.C. 20268-0001

COMPETITIVE PRODUCT LIST ADDING ROUND-TRIP MAILER, Docket No. MC2013-57
COMPETITIVE PRODUCT PRICES ROUND-TRIP MAILER (MC2013-57), Docket No. CP2013-75

SUPPLEMENTAL COMMENTS OF GAMEFLY, INC. ON USPS PROPOSAL TO RECLASSIFY DVD MAILERS AS COMPETITIVE PRODUCTS

David M. Levy, Matthew D. Field, Robert P. Davis, VENABLE LLP, 575 7th Street, N.W., Washington DC 20004, (202) 344-4800, [email protected] Counsel for GameFly, Inc.

September 12, 2013 (refiled December 26, 2013)

CONTENTS

INTRODUCTION AND SUMMARY

ARGUMENT

I. THE POSTAL SERVICE HAS FAILED TO SHOW THAT COMPETITION FOR THE ENTERTAINMENT CONTENT OF RENTAL DVDS, EVEN IF EFFECTIVE TO CONSTRAIN THEIR DELIVERED PRICE, WOULD EFFECTIVELY CONSTRAIN THE PRICE OF THE MAIL INPUT SUPPLIED BY THE POSTAL SERVICE.

II. THE POSTAL SERVICE’S OWN PRICE ELASTICITY DATA CONFIRM THE ABSENCE OF EFFECTIVE COMPETITION FOR THE MAIL INPUT SUPPLIED BY THE POSTAL SERVICE.

III. THE CORE GROUP OF CONSUMERS WHO STILL RENT DVDS BY MAIL DO NOT REGARD THE “DIGITIZED ENTERTAINMENT CONTENT” AVAILABLE FROM OTHER CHANNELS AS AN ACCEPTABLE

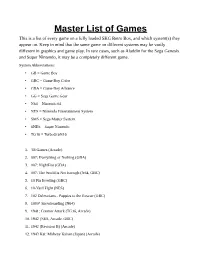

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games

This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game.

System Abbreviations:
• GB = Game Boy
• GBC = Game Boy Color
• GBA = Game Boy Advance
• GG = Sega Game Gear
• N64 = Nintendo 64
• NES = Nintendo Entertainment System
• SMS = Sega Master System
• SNES = Super Nintendo
• TG16 = TurboGrafx16

1. '88 Games (Arcade)
2. 007: Everything or Nothing (GBA)
3. 007: NightFire (GBA)
4. 007: The World Is Not Enough (N64, GBC)
5. 10 Pin Bowling (GBC)
6. 10-Yard Fight (NES)
7. 102 Dalmatians - Puppies to the Rescue (GBC)
8. 1080° Snowboarding (N64)
9. 1941: Counter Attack (TG16, Arcade)
10. 1942 (NES, Arcade, GBC)
11. 1942 (Revision B) (Arcade)
12. 1943 Kai: Midway Kaisen (Japan) (Arcade)
13. 1943: Kai (TG16)
14. 1943: The Battle of Midway (NES, Arcade)
15. 1944: The Loop Master (Arcade)
16. 1999: Hore, Mitakotoka! Seikimatsu (NES)
17. 19XX: The War Against Destiny (Arcade)
18. 2 on 2 Open Ice Challenge (Arcade)
19. 2010: The Graphic Action Game (Colecovision)
20. 2020 Super Baseball (SNES, Arcade)
21. 21-Emon (TG16)
22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB)
23. 3 Count Bout (Arcade)
24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD)
25. 3-D Tic-Tac-Toe (Atari 2600)
26. 3-D Ultra Pinball: Thrillride (GBC)
27.

Electronic Arts Reports Q4 and FY21 Financial Results

Electronic Arts Reports Q4 and FY21 Financial Results

Results Above Expectations, Record Annual Operating Cash Flow Driven by Successful New Games, Live Services Engagement, and Network Growth

REDWOOD CITY, CA – May 11, 2021 – Electronic Arts Inc. (NASDAQ: EA) today announced preliminary financial results for its fiscal fourth quarter and full year ended March 31, 2021.

“Our teams have done incredible work over the last year to deliver amazing experiences during a very challenging time for everyone around the world,” said Andrew Wilson, CEO of Electronic Arts. “With tremendous engagement across our portfolio, we delivered a record year for Electronic Arts. We’re now accelerating in FY22, powered by expansion of our blockbuster franchises to more platforms and geographies, a deep pipeline of new content, and recent acquisitions that will be catalysts for further growth.”

“EA delivered a strong quarter, driven by live services and Apex Legends’ extraordinary performance. Apex steadily grew through the last year, driven by the games team and the content they are delivering,” said COO and CFO Blake Jorgensen. “Looking forward, the momentum in our existing live services provides a solid foundation for FY22. Combined with a new Battlefield and our recent acquisitions, we expect net bookings growth in the high teens.”

Selected Operating Highlights and Metrics
• Net bookings for fiscal 2021 was $6.190 billion, up 15% year-over-year, and over $600 million above original expectations.
• Delivered 13 new games and had more than 42 million new players join our network during the fiscal year.
• FIFA 21, life to date, has more than 25 million console/PC players.

AI for Testing: The Development of Bots That Play 'Battlefield V' Jonas

AI for Testing: The Development of Bots that Play 'Battlefield V'
Jonas Gillberg, Senior AI Engineer, Electronic Arts

Why?
▪ Fun & interesting challenges
▪ At capacity
▪ We need to scale

Battlefield V Multiplayer at launch
Modes: Airborne, Breakthrough, Conquest, Domination, FinalStand, Frontlines, Team Deathmatch
Aerodrome: 64 64 32 64 32 32
Arras: 64 64 32 64 32 32
Devastation: 64 64 32 64 32 32
Fjell 652: 64 64 32 64 32 32
Hamada: 64 64 64 32 32 32
Narvik: 64 64 64 32 32 32
Rotterdam: 64 64 64 32 32 32
Twisted Steel: 64 64 64 32 32 32
Test 1 hour per level / mode – 2304 hours

Proof of Concept - MP Client Stability Testing

Requirements
▪ All platforms
▪ Player similar
▪ Separate from game code
▪ No code required
▪ DICE QA Collaboration

Stability

Previously...
Technical Lead AI Programmer, Tom Clancy’s The Division
Behavior Trees, server bots etc..
GDC 2016: Tom Clancy’s The Division AI Behavior Editing and Debugging

Initial Investigation
▪ Reuse existing AI?
▪ Navmesh – Not used for MP
▪ Player scripting, input injection...
▪ Parallel implementation

Machine Learning?
Experimental Self-Learning AI in Battlefield 1

Implementation
Functionality, Fun, Fidelity

Single Client

Control Inputs?
[Diagram labels: Player, Hardware, Input (abstract), Game Code, Context, UI; Movement • Yaw/Pitch • Fire • Jump • Etc; Actions • Etc]

Combat
▪ Weapon data=>Behavior
▪ Simple representation
▪ Closest target only
▪ Very cheap – good enough
▪ Blacklist invalid targets

Navigation
▪ Server Pathfinding
▪ Not to be trusted
▪ Monitor progress
▪ No progress
▪ Button Spam (Jump, interact, open door)
▪ Still stuck - Teleport

Visual Scripting – Frostbite Schematics

AutoPlayer Objectives

Objective Parameters
▪ MoveMode
• Aggressive: Chase everything
• Defensive: Keep moving – shoot if able
• Passive: Stay on path – ignore all
▪ Other Parameters:
• Unlimited Ammo
• GodMode
• Teleportation..
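The navigation notes in the slides (monitor progress, spam buttons when there is none, teleport if still stuck) suggest an escalation policy that can be sketched as below. This is an illustration only, not the AutoPlayer implementation: the `StuckMonitor` class, the action names, the progress threshold and the number of failed checks before each escalation step are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StuckMonitor:
    min_progress: float = 0.5   # metres per check that count as progress (assumed)
    spam_after: int = 3         # failed checks before button spam (assumed)
    teleport_after: int = 6     # failed checks before teleporting (assumed)
    failed_checks: int = 0

    def update(self, distance_moved: float) -> str:
        """Return the action the bot should take after one progress check."""
        if distance_moved >= self.min_progress:
            # The bot is moving along its path again: reset the escalation.
            self.failed_checks = 0
            return "follow_path"
        self.failed_checks += 1
        if self.failed_checks >= self.teleport_after:
            # Last resort: give up on the path and teleport, then start over.
            self.failed_checks = 0
            return "teleport"
        if self.failed_checks >= self.spam_after:
            # Try jump / interact / open door to get past the obstacle.
            return "button_spam"
        return "follow_path"
```

A bot that makes no progress for six consecutive checks would follow its path twice, spam buttons three times and then teleport; any check that shows enough movement resets the counter. Keeping the policy separate from path planning fits the slides' remark that server pathfinding is "not to be trusted".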