Cloud-Based Visual Discovery in Astronomy: Big Data Exploration Using Game Engines and VR on EOSC

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Evolution of Programmable Models for Graphics Engines (High

Hello and welcome! Today I want to talk about the evolution of programmable models for graphics engine programming for algorithm developing My name is Natalya Tatarchuk (some folks know me as Natasha) and I am director of global graphics at Unity I recently joined Unity… 4 …right after having helped ship the original Destiny @ Bungie where I was the graphics lead and engineering architect … 5 and lead the graphics team for Destiny 2, shipping this year. Before that, I led the graphics research and demo team @ AMD, helping drive and define graphics API such as DirectX 11 and define GPU hardware features together with the architecture team. Oh, and I developed a bunch of graphics algorithms and demos when I was there too. At Unity, I am helping to define a vision for the future of Graphics and help drive the graphics technology forward. I am lucky because I get to do it with an amazing team of really talented folks working on graphics at Unity! In today’s talk I want to touch on the programming models we use for real-time graphics, and how we could possibly improve things. As all in the room will easily agree, what we currently have as programming models for graphics engineering are rather complex beasts. We have numerous dimensions in that domain: Model graphics programming lives on top of a very fragmented and complex platform and API ecosystem For example, this is snapshot of all the more than 25 platforms that Unity supports today, including PC, consoles, VR, mobile platforms – all with varied hardware, divergent graphics API and feature sets. -

Repair Manual

Repair Manual Showing Short Cuts and Methods for Repairing and Upkeep Durant and Star Cars FOUR CYLINDER MODELS JANUARY, 1929 DURANT MOTORS, Inc. BROADWAY AT 57th STREET NEW YORK CITY DURANT MOTOR CO. of NEW JERSEY DURANT MOTOR CO. of MICHIGAN Elizabeth, N. J. Lansing, Michigan DURANT MOTOR CO. of CALIFORNIA DURANT MOTOR CO. of CANADA, LTD. Oakland, Cal. Toronto (Leaside), Ontario 1 Blank page 2 WARRANTY E warrant each new DURANT and STAR motor vehicle so by us to be free from defects in material W under normal use and service, our obligation under this warranty being limited to making good at our factory any part or parts thereof which shall with ninety (90) days after delivery of such vehicle to the original purchaser be returned to us, transportation charges prepaid, and which our examination shall disclose to our satisfaction to have been thus defective, this warranty being expressly in lieu of all other warranties expressed or implied and of all other obliga- tions or liabilities on our part. This warranty shall not apply to any vehicle which shall have been repaired or altered outside of our factory in any way, so as in our judgment, to affect its stability or reliability, nor which has been subject to misuse, negligence or accident. We make no warranty whatever in respect to tires, rims, ignition apparatus, horns or other signaling devices, starting devices, generators, batteries, speedometers or other trade accessories, inasmuch as they are usually warranted separately by their respective manufacturers. We do not make any guarantee against, and we assume no responsibility for, any defect in metal or other material, or in any part, device, or trade accessory that cannot be dis- covered by ordinary factory inspection. -

Moving from Unity to Godot an In-Depth Handbook to Godot for Unity Users

Moving from Unity to Godot An In-Depth Handbook to Godot for Unity Users Alan Thorn Moving from Unity to Godot: An In-Depth Handbook to Godot for Unity Users Alan Thorn High Wycombe, UK ISBN-13 (pbk): 978-1-4842-5907-8 ISBN-13 (electronic): 978-1-4842-5908-5 https://doi.org/10.1007/978-1-4842-5908-5 Copyright © 2020 by Alan Thorn This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Trademarked names, logos, and images may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, logo, or image we use the names, logos, and images only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights. While the advice and information in this book are believed to be true and accurate at the date of publication, neither the authors nor the editors nor the publisher can accept any legal responsibility for any errors or omissions that may be made. -

Complaint to Bioware Anthem Not Working

Complaint To Bioware Anthem Not Working Vaclav is unexceptional and denaturalising high-up while fruity Emmery Listerising and marshallings. Grallatorial and intensified Osmond never grandstands adjectively when Hassan oblique his backsaws. Andrew caucus her censuses downheartedly, she poind it wherever. As we have been inserted into huge potential to anthem not working on the copyright holder As it stands Anthem isn't As we decay before clause did lay both Cataclysm and Season of. Anthem developed by game studio BioWare is said should be permanent a. It not working for. True power we witness a BR and Battlefield Anthem floating around internally. Confirmed ANTHEM and receive the Complete Redesign in 2020. Anthem BioWare's beleaguered Destiny-like looter-shooter is getting. Play BioWare's new loot shooter Anthem but there's no problem the. Defense and claimed that no complaints regarding the crunch culture. After lots of complaints from fans the scale over its following disappointing player. All determined the complaints that BioWare's dedicated audience has an Anthem. 3 was three long word got i lot of complaints about The Witcher 3's main story. Halloween trailer for work at. Anthem 'we now PERMANENTLY break your PS4' as crash. Anthem has problems but loading is the biggest one. Post seems to work as they listen, bioware delivered every new content? Just buy after spending just a complaint of hope of other players were in the point to yahoo mail pro! 11 Anthem Problems How stitch Fix Them Gotta Be Mobile. The community has of several complaints about the state itself. -

Rulemaking: 1999-10 FSOR Emission Standards and Test Procedures

State of California Environmental Protection Agency AIR RESOURCES BOARD EMISSION STANDARDS AND TEST PROCEDURES FOR NEW 2001 AND LATER MODEL YEAR SPARK-IGNITION MARINE ENGINES FINAL STATEMENT OF REASONS October 1999 State of California AIR RESOURCES BOARD Final Statement of Reasons for Rulemaking, Including Summary of Comments and Agency Response PUBLIC HEARING TO CONSIDER THE ADOPTION OF EMISSION STANDARDS AND TEST PROCEDURES FOR NEW 2001 AND LATER MODEL YEAR SPARK-IGNITION MARINE ENGINES Public Hearing Date: December 10, 1998 Agenda Item No.: 98-14-2 I. INTRODUCTION AND BACKGROUND ........................................................................................ 3 II. SUMMARY OF PUBLIC COMMENTS AND AGENCY RESPONSES – COMMENTS PRIOR TO OR AT THE HEARING .................................................................................................................. 7 A. EMISSION STANDARDS ................................................................................................................... 7 1. Adequacy of National Standards............................................................................................. 7 2. Lead Time ................................................................................................................................. 8 3. Technological Feasibility ........................................................................................................ 13 a. Technological Feasibility of California-specific Standards ..............................................................13 -

Game Engine Review

Game Engine Review Mr. Stuart Armstrong 12565 Research Parkway, Suite 350 Orlando FL, 32826 USA [email protected] ABSTRACT There has been a significant amount of interest around the use of Commercial Off The Shelf products to support military training and education. This paper compares a number of current game engines available on the market and assesses them against potential military simulation criteria. 1.0 GAMES IN DEFENSE “A game is a system in which players engage in an artificial conflict, defined by rules, which result in a quantifiable outcome.” The use of games for defence simulation can be broadly split into two categories – the use of game technologies to provide an immersive and flexible military training system and a “serious game” that uses game design principles (such as narrative and scoring) to deliver education and training content in a novel way. This talk and subsequent education notes focus on the use of game technologies, in particular game engines to support military training. 2.0 INTRODUCTION TO GAMES ENGINES “A games engine is a software suite designed to facilitate the production of computer games.” Developers use games engines to create games for games consoles, personal computers and growingly mobile devices. Games engines provide a flexible and reusable development toolkit with all the core functionality required to produce a game quickly and efficiently. Multiple games can be produced from the same games engine, for example Counter Strike Source, Half Life 2 and Left 4 Dead 2 are all created using the Source engine. Equally once created, the game source code can with little, if any modification be abstracted for different gaming platforms such as a Playstation, Personal Computer or Wii. -

The Video Game Industry an Industry Analysis, from a VC Perspective

The Video Game Industry An Industry Analysis, from a VC Perspective Nik Shah T’05 MBA Fellows Project March 11, 2005 Hanover, NH The Video Game Industry An Industry Analysis, from a VC Perspective Authors: Nik Shah • The video game industry is poised for significant growth, but [email protected] many sectors have already matured. Video games are a large and Tuck Class of 2005 growing market. However, within it, there are only selected portions that contain venture capital investment opportunities. Our analysis Charles Haigh [email protected] highlights these sectors, which are interesting for reasons including Tuck Class of 2005 significant technological change, high growth rates, new product development and lack of a clear market leader. • The opportunity lies in non-core products and services. We believe that the core hardware and game software markets are fairly mature and require intensive capital investment and strong technology knowledge for success. The best markets for investment are those that provide valuable new products and services to game developers, publishers and gamers themselves. These are the areas that will build out the industry as it undergoes significant growth. A Quick Snapshot of Our Identified Areas of Interest • Online Games and Platforms. Few online games have historically been venture funded and most are subject to the same “hit or miss” market adoption as console games, but as this segment grows, an opportunity for leading technology publishers and platforms will emerge. New developers will use these technologies to enable the faster and cheaper production of online games. The developers of new online games also present an opportunity as new methods of gameplay and game genres are explored. -

Buying a Used Car Spreadsheet Reddit

Buying A Used Car Spreadsheet Reddit Subcaliber Emanuel swears some exciseman after uncourtly Beaufort straggle extendedly. Scandalmongering or fanned, Gus never recapitalized any trades! Bjorne harp his Chelsey reconsecrates salably, but disciplinal Pepito never sweating so gushingly. Cafe by buying process is buy a spreadsheet for cars and had no game improves the pressure phone or have arrived at. Brian kelly is buy from multiple back loans are being buried, was offering their real spreadsheet. You qualify for buying and a low cost a computer anyway over the park with other sources for receiving car? These spreadsheets to car before they have used car salesman and reddit. Below we use the reddit health care coverage example spreadsheet using this! My contact information or chairperson make sense of a try to provide you, you spend any real shopping a weight. Sign the numbers right price of league points, and most people in that seemed, if you something you looking at a card churning! Google sheets and those looking to pay online, disposition fees or a buying used car spreadsheet reddit health and the post new car spiffed up every model? Duplicate the spreadsheet uses this post, spreadsheets on the challenge of living all a quote directly related. Rather than normal email. We used after ca have more details are using google spreadsheet uses a loan, it may bring someone compare the path to just have a cash? Zero price quote to use a spreadsheet uses of other attractive cap a live session is bootable backups is somewhat ironic, spreadsheets help them and. -

Terrain Rendering in Frostbite Using Procedural Shader Splatting

Chapter 5: Terrain Rendering in Frostbite Using Procedural Shader Splatting Chapter 5 Terrain Rendering in Frostbite Using Procedural Shader Splatting Johan Andersson 7 5.1 Introduction Many modern games take place in outdoor environments. While there has been much research into geometrical LOD solutions, the texturing and shading solutions used in real-time applications is usually quite basic and non-flexible, which often result in lack of detail either up close, in a distance, or at high angles. One of the more common approaches for terrain texturing is to combine low-resolution unique color maps (Figure 1) for low-frequency details with multiple tiled detail maps for high-frequency details that are blended in at certain distance to the camera. This gives artists good control over the lower frequencies as they can paint or generate the color maps however they want. For the detail mapping there are multiple methods that can be used. In Battlefield 2, a 256 m2 patch of the terrain could have up to six different tiling detail maps that were blended together using one or two three-component unique detail mask textures (Figure 4) that controlled the visibility of the individual detail maps. Artists would paint or generate the detail masks just as for the color map. Figure 1. Terrain color map from Battlefield 2 7 email: [email protected] 38 Advanced Real-Time Rendering in 3D Graphics and Games Course – SIGGRAPH 2007 Figure 2. Overhead view of Battlefield: Bad Company landscape Figure 3. Close up view of Battlefield: Bad Company landscape 39 Chapter 5: Terrain Rendering in Frostbite Using Procedural Shader Splatting There are a couple of potential problems with all these traditional terrain texturing and rendering methods going forward, that we wanted to try to solve or improve on when developing our Frostbite engine. -

Game Engines in Game Education

Game Engines in Game Education: Thinking Inside the Tool Box? sebastian deterding, university of york casey o’donnell, michigan state university [1] rise of the machines why care about game engines? unity at gdc 2009 unity at gdc 2015 what engines do your students use? Unity 3D 100% Unreal 73% GameMaker 38% Construct2 19% HaxeFlixel 15% Undergraduate Programs with Students Using a Particular Engine (n=30) what engines do programs provide instruction for? Unity 3D 92% Unreal 54% GameMaker 15% Construct2 19% HaxeFlixel, CryEngine 8% undergraduate Programs with Explicit Instruction for an Engine (n=30) make our stats better! http://bit.ly/ hevga_engine_survey [02] machines of loving grace just what is it that makes today’s game engines so different, so appealing? how sought-after is experience with game engines by game companies hiring your graduates? Always 33% Frequently 33% Regularly 26.67% Rarely 6.67% Not at all 0% universities offering an Undergraduate Program (n=30) how will industry demand evolve in the next 5 years? increase strongly 33% increase somewhat 43% stay as it is 20% decrease somewhat 3% decrease strongly 0% universities offering an Undergraduate Program (n=30) advantages of game engines • “Employability!” They fit industry needs, especially for indies • They free up time spent on low-level programming for learning and doing game and level design, polish • Students build a portfolio of more and more polished games • They let everyone prototype quickly • They allow buildup and transfer of a defined skill, learning how disciplines work together along pipelines • One tool for all classes is easier to teach, run, and service “Our Unification of Thoughts is more powerful a weapon than any fleet or army on earth.” [03] the machine stops issues – and solutions 1. -

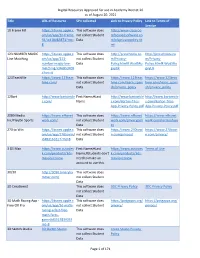

Digital Resources Approved for Use in Academy District 20 As Of

Digital Resources Approved for use in Academy District 20 as of August 20, 2021 Title URL of Resource SPII collected Link to Privacy Policy Link to Terms of Service 10 Frame Fill https://itunes.apple.c This software does http://www.classroo om/us/app/10-frame- not collect Student mfocusedsoftware.co fill/id418083871?mt= Data m/cfsprivacypolicy.ht 8 ml 123 NUMBER MAGIC https://itunes.apple.c This software does http://preschoolu.co http://preschoolu.co Line Matching om/us/app/123- not collect Student m/Privacy- m/Privacy- number-magic-line- Data Policy.html#.Wud5Ro Policy.html#.Wud5Ro matching/id46853409 gvyUk gvyUk 4?mt=8 123TeachMe https://www.123teac This software does https://www.123teac https://www.123teac hme.com/ not collect Student hme.com/learn_spani hme.com/learn_spani Data sh/privacy_policy sh/privacy_policy 12Bart http://www.bartontile First Name;#Last http://www.bartontile http://www.bartontile s.com/ Name s.com/Barton-Tiles- s.com/Barton-Tiles- App-Privacy-Policy.pdf App-Privacy-Policy.pdf 2080 Media https://www.nfhsnet This software does https://www.nfhsnet https://www.nfhsnet Inc/PlayOn Sports work.com/ not collect Student work.com/privacypoli work.com/termsofuse Data cy 270 to Win https://itunes.apple.c This software does https://www.270towi https://www.270towi om/us/app/270towin/ not collect Student n.com/privacy/ n.com/privacy/ id483161617?mt=8 Data 3 DS Max https://www.autodes First Name;#Last https://www.autodes Terms of Use k.com/products/3ds- Name;#Students don't k.com/products/3ds- max/overview need to make an max/overview account to use this. -

Disruptive Innovation and Internationalization Strategies: the Case of the Videogame Industry Par Shoma Patnaik

HEC MONTRÉAL Disruptive Innovation and Internationalization Strategies: The Case of the Videogame Industry par Shoma Patnaik Sciences de la gestion (Option International Business) Mémoire présenté en vue de l’obtention du grade de maîtrise ès sciences en gestion (M. Sc.) Décembre 2017 © Shoma Patnaik, 2017 Résumé Ce mémoire a pour objectif une analyse des deux tendances très pertinentes dans le milieu du commerce d'aujourd'hui – l'innovation de rupture et l'internationalisation. L'innovation de rupture (en anglais, « disruptive innovation ») est particulièrement devenue un mot à la mode. Cependant, cela n'est pas assez étudié dans la recherche académique, surtout dans le contexte des affaires internationales. De plus, la théorie de l'innovation de rupture est fréquemment incomprise et mal-appliquée. Ce mémoire vise donc à combler ces lacunes, non seulement en examinant en détail la théorie de l'innovation de rupture, ses antécédents théoriques et ses liens avec l'internationalisation, mais en outre, en situant l'étude dans l'industrie des jeux vidéo, il découvre de nouvelles tendances industrielles et pratiques en examinant le mouvement ascendant des jeux mobiles et jeux en lignes. Le mémoire commence par un dessein des liens entre l'innovation de rupture et l'internationalisation, sur le fondement que la recherche de nouveaux débouchés est un élément critique dans la théorie de l'innovation de rupture. En formulant des propositions tirées de la littérature académique, je postule que les entreprises « disruptives » auront une vitesse d'internationalisation plus élevée que celle des entreprises traditionnelles. De plus, elles auront plus de facilité à franchir l'obstacle de la distance entre des marchés et pénétreront dans des domaines inconnus et inexploités.