UNIVERSITY of CALIFORNIA RIVERSIDE Robust Passenger

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Penndot Fact Sheet

FACT SHEET: Van/Mini-Van Titling and Registration Procedures

PURPOSE
This fact sheet explains the titling and registration procedures for van and mini-van type vehicles being titled and registered in Pennsylvania.

DEFINITIONS
Motor home: A motor vehicle designed or adapted for use as mobile dwelling or office; except a motor vehicle equipped with a truck-camper.
Passenger Car: A motor vehicle, except a motorcycle, designed primarily for the transportation of persons and designed for carrying no more than 15 passengers including the driver and primarily used for the transportation of persons. The term includes motor vehicles which are designed with seats that may be readily removed and reinstalled, but does not include such vehicles if used primarily for the transportation of property.
Truck: A motor vehicle designed primarily for the transportation of property. The term includes motor vehicles designed with seats that may be readily removed and reinstalled if those vehicles are primarily used for the transportation of property.

GENERAL RULE
Van and mini-van type vehicles are designed by vehicle manufacturers to be used in a multitude of different ways. Many vans are designed with seats for the transportation of persons much like a normal passenger car or station wagon; however, some are manufactured for use as a motor home, while others are designed simply for the transportation of property. Therefore, the proper type of registration plate depends on how the vehicle is to be primarily used. The following rules should help clarify the proper procedures required to title and register a van/mini-van:

To register as a passenger car - The van/mini-van must be designed with seating for no more than 15 passengers including the driver, and used for non-commercial purposes.

Sportz Sizing Chart Rev

Sportz Truck Tent Sizing Chart Sportz III Tailgate 55011 55022 55044 55055 55077 55099 55890 Sportz III Tailgate Camo 56011 56022 56044 - - - - Sportz Mid Size Quad SPORTZ FULL SIZE CREW Truck Size Full Size Long Bed Full Size Short Bed Compact Short Bed Step/Flare Sport Mid Size Short Bed Cab CAB Bed Size 96-98" 72-79" 72-74" 78-82" 72-78" 65" 68-70" Double/Model # 30951 or Double/Model # 30951 or Double/Model # 30951 or Mid Air Mattress Mid Size/Model #32000SP Mid Size/Model #32000SP Twin/Model # 30954 Twin/Model # 30954 Twin/Model # 30954 Twin/Model # 30954 Size/Model #32000SP T Chevrolet C/K * Chevrolet C/K * Chevrolet S-10 * Chevrolet Silverado* Dodge Dakota Short Bed* Dodge Dakota Quad Cab* GMC Sierra 1500 Crew Cab* R Chevrolet Silverado * Chevrolet Silverado * Chevrolet Colorado 6' Ford F-150 '96 & older* Dodge Dakota SLT Short* Toyota Tacoma (Yr 2005 anChevrolet Crew Cab* U Dodge Dakota * Dodge Ram 1500 * Chevrolet Canyon 6' Ford F-250 '96 & older* Dodge Dakota Sport Short* up for 60 inch box)* Ford Super Crew* C Dodge Dakota SLT * Dodge Ram 2500 * Ford Ranger XL * GMC Sierra 1500* Toyota T-100 * Nissan Titan Crew* K Dodge Dakota Sport * Ford F-150 * Ford Ranger XLT * Toyota Tacoma (Yr 2004 and Dodge Ram 1500 * Ford F-250 * GMC S-15 * up for 73.5 inch box)* M Dodge Ram 2500 * Ford F-250 Super Duty* GMC Sonoma * O Dodge Ram 3500 (^DRW) Ford F-350 Super Duty* Isuzu Hombre * D Ford F-150 * GMC Sierra 2500 Series* Jeep Camanche * E Ford F-250 * Nissan Titan* Mazda B 2000* L Ford F-250 Super Duty* Toyota Tundra * Mazda B 2500* Ford F-350 Super Duty* Mazda B 3000* N Ford F-350SD (^DRW) Mazda B 4000* A GMC Sierra * Mitsubishi Pick-up * M Nissan Titan* Nissan Frontier * E Toyota T-100 * Toyota Hilux* Toyota Tundra * Toyota Tacoma(before2004)* Nissan Frontier King Cab ('02 and Newer)* Models Not Applicable: * Indicates truck must be measured to determine long or short bed. -

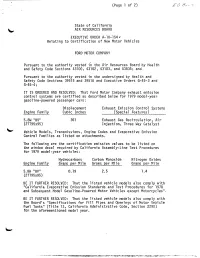

Page 1 Of.Tif

(Page 1 of 2) EO BEST State of California AIR RESOURCES BOARD EXECUTIVE ORDER A-10-154 Relating to Certification of New Motor Vehicles FORD MOTOR COMPANY

Pursuant to the authority vested in the Air Resources Board by Health and Safety Code Sections 43100, 43102, 43103, and 43835; and Pursuant to the authority vested in the undersigned by Health and Safety Code Sections 39515 and 39516 and Executive Orders G-45-3 and G-45-4; IT IS ORDERED AND RESOLVED: That Ford Motor Company exhaust emission control systems are certified as described below for 1979 model-year gasoline-powered passenger cars:

Engine Family: 5.8W "BV" (2TT95x95)
Displacement (Cubic Inches): 351
Exhaust Emission Control Systems (Special Features): Exhaust Gas Recirculation, Air Injection, Three Way Catalyst

Vehicle Models, Transmissions, Engine Codes and Evaporative Emission Control Families as listed on attachments.

The following are the certification emission values to be listed on the window decal required by California Assembly-Line Test Procedures for 1979 model-year vehicles:

Engine Family: 5.8W "BV" (2TT95x95)
Hydrocarbons (Grams per Mile): 0.19
Carbon Monoxide (Grams per Mile): 2.5
Nitrogen Oxides (Grams per Mile): 1.4

BE IT FURTHER RESOLVED: That the listed vehicle models also comply with "California Evaporative Emission Standards and Test Procedures for 1978 and Subsequent Model Gasoline-Powered Motor Vehicles except Motorcycles".

BE IT FURTHER RESOLVED: That the listed vehicle models also comply with the Board's "Specifications for Fill Pipes and Openings of Motor Vehicle Fuel Tanks" (Title 13, California Administrative Code, Section 2290) for the aforementioned model year.

Minivan Motoring, Or Why I Miss That Old Car Smell by Sam Patteson

e-Vision volume eight 1 Minivan Motoring, or Why I Miss that Old Car Smell by Sam Patteson It is very difficult to look “cool” while driving a minivan, and I never bothered to try. “Cool” is overrated anyway. What’s not overrated is the urban camouflage a minivan affords. “No one suspects the soccer mom,” Joe deadpanned as he rolled us a joint on the open door of the glove box. I had to agree as I pulled the van into the Shell station to gas up before our long trip to Charleston. I let the tank fill while I checked the various reservoir levels for brake fluid, antifreeze, power steering, and the like. As usual, I needed a quart of oil. Rednecks on last minute beer runs cruised through the parking lot as I returned from the store. Joe blasted Frampton Comes Alive through the open windows. Pete’s guitar had something to say, and the van was rocking. I poured the oil into the engine, slammed the hood home, and slid into the driver’s seat. I turned to quiz Joe on last minute preparations. We had planned this trip for months, and I didn’t want to forget anything. “Suitcases?” He turned to look back at the empty rear of the van. We had removed the back seats in favor of a twin-sized bed. Our suitcases nestled securely between the bed and the back door. “Check.” Pillows and blankets? “Check.” Wallet, money, keys? “Check.” Er… extras? “Check!” Joe coughed and handed me the joint. I took a deep drag and handed it back. -

2020 Truck 2

City of Waterville DEPARTMENT OF PUBLIC WORKS 6 Wentworth Court Waterville, Maine 04901-4892 TEL (207) 680-4744 FAX (207) 877-7532 REQUEST FOR BIDS One-Half Ton 4 x 4 DOUBLE CAB PICKUP TRUCK DATE: March 31, 2020 INSTRUCTIONS TO BIDDERS 1. GENERAL: The City of Waterville is accepting bids for a 1/2 Ton 4 Wheel Drive Double Cab Pickup Truck meeting the specifications accompanying this document. 2. BID SUBMITTAL: Sealed bids will be accepted by the Office of the Director of Public Works, 6 Wentworth Court, Waterville, Maine 04901 up to and including 11:00 A.M. local time, Tuesday, April 28, 2020 at which time they will be publicly opened and read. All bids will be placed in a sealed envelope clearly marked "Bid – 4 WD Double Cab Pickup Truck" in the center with the bidder's name and address in the upper left-hand corner. Bids not dated and time stamped by the Office of the Director of Public Works prior to the specified date and time stated above will be returned unopened. Facsimile or email bids will only be accepted by arrangement with the Office of the Director of Public Works. Arrangements may be made by contacting Frederick Dechaine at (207) 680-4746, [email protected] or by faxing him directly at (207) 877-7532. 3. WITHDRAWAL OR REVISION OF BID: A bidder may withdraw or revise a bid after it has been received by the Office of the Director of Public Works, provided the request is made in writing or in person before the time set for bid opening. -

Vehicle Identification Number (VIN) System

Vehicle Identification Number (VIN) System

Columns: Position, Definition, Character, Description (characters and descriptions are listed in matching order).

Position 1, Country of Origin. Characters: 1, 2, 3. Description: United States; Canada; Mexico.
Position 2, Manufacturer. Character: G. Description: General Motors.
Position 3, Make. Characters: B, C, D, T, N, K, Y. Description: Chevrolet; Incomplete Chevrolet Truck; GMC; Incomplete GMC Truck; Chevrolet Multi Purpose Vehicle; GMC Multi Purpose Vehicle; Cadillac Multi Purpose Vehicle.
Position 4, GVWR/Brake System. Characters: B, C, D, E, F, G, H, J, K. Description: 3001-4000/Hydraulic; 4001-5000/Hydraulic; 5001-6000/Hydraulic; 6001-7000/Hydraulic; 7001-8000/Hydraulic; 8001-9000/Hydraulic; 9001-10000/Hydraulic; 10001-14000/Hydraulic; 14001-16000/Hydraulic.
Position 5, Truck Line/Chassis Type. Characters: C, K. Description: Conventional Cab/4x2; Conventional Cab/4x4.
Position 6, Series. Characters: 1, 2, 3, 6, 7, 8. Description: Half Ton; 3/4 Ton; 1 Ton; 1/2 Ton Luxury; 3/4 Ton Luxury; 1 Ton Luxury.
Position 7, Body Type. Characters: 3, 4, 6, 9. Description: Four-Door Cab/Utility; Two-Door Cab; Suburban/Denali XL Two-Door Utility; Extended Cab/Extended Van.
Position 8, Engine Type. Characters: V, U, T, W, G, 1. Description: (LR4) 4.8L Gas; (LQ4) 6.0L Gas; (LM7) 5.3L Gas; (L35) 4.3L Gas; (L18) 8.1L Gas; (LB7) 6.6L Diesel.
Position 9, Check Digit. Character: --. Description: Check Digit.
Position 10, Model Year. Character: 1. Description: 2001.
Position 11, Plant Location. Characters: 1, E, Z, J, G, F, X. Description: Oshawa, Ontario; Pontiac, Michigan; Fort Wayne, Indiana; Janesville, Wisconsin; Silao, Mexico; Flint, Michigan; Experimental Engineering Manufacturing.
Positions 12-17, Plant Sequence Number. Character: --. Description: Plant Sequence Number.

Tips for understanding your VIN: Starting in model year 1954, American automobile manufacturers began stamping and casting identifying numbers on cars and their parts. The vehicle identification number has come to be referred to as the "VIN".
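The position layout in this excerpt maps naturally onto string slicing. The following is a minimal Python sketch, assuming a 17-character VIN laid out as in the excerpt; the names `VIN_FIELDS` and `split_vin` and the sample VIN are hypothetical, and the check digit is only extracted, not validated.

```python
# Field layout taken from the position table in the excerpt
# (positions are 1-indexed and inclusive). Names are illustrative only.
VIN_FIELDS = [
    ("country_of_origin", 1, 1),
    ("manufacturer", 2, 2),
    ("make", 3, 3),
    ("gvwr_brake_system", 4, 4),
    ("truck_line_chassis_type", 5, 5),
    ("series", 6, 6),
    ("body_type", 7, 7),
    ("engine_type", 8, 8),
    ("check_digit", 9, 9),
    ("model_year", 10, 10),
    ("plant_location", 11, 11),
    ("plant_sequence_number", 12, 17),
]


def split_vin(vin: str) -> dict:
    """Split a 17-character VIN into the positional fields listed above.

    This only slices the string; it does not look up what each character
    means and does not verify the check digit.
    """
    vin = vin.strip().upper()
    if len(vin) != 17:
        raise ValueError(f"expected 17 characters, got {len(vin)}")
    # Convert 1-indexed inclusive positions to Python slice bounds.
    return {name: vin[start - 1:end] for name, start, end in VIN_FIELDS}


if __name__ == "__main__":
    # Made-up VIN for illustration; it is not a real vehicle's number.
    for field, value in split_vin("1GCEK19T01E123456").items():
        print(f"{field}: {value}")
```

Keeping the layout in a single table of (name, start, end) rows means a different manufacturer's layout could be swapped in without touching the slicing logic.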

2020 Yaris Sedan/Hatchback MEX-Prod Pre-Delivery Service (PDS)

T-SB-0116-19 August 23, 2019 2020 Yaris Sedan/Hatchback MEX-Prod Pre-Delivery Service (PDS) Service Category General Section Pre-Delivery Service Market USA Applicability YEAR(S) MODEL(S) ADDITIONAL INFORMATION 2020 Yaris HB MEX-Prod, Yaris SD MEX-Prod Introduction Pre-Delivery Service (PDS) is a critical step in satisfying our new car customers. Customer feedback indicates the following areas deserve special attention when performing PDS: Careful inspection for paint chips/scratches and body dents/dings. Proper operation of electrical accessories. Interior cleanliness. Proper function of mechanical systems. Customer retention and proper maintenance of vehicles are and have always been a major focus for Toyota. To help remind customers that regular service is essential to the proper maintenance of the vehicle, dealers are required to install a service reminder sticker before delivery. By doing this, customers will be reminded to return to your dealership for service. Your current service reminder sticker may be used. (See PDS Check Sheet item 8 of “Final Inspection and Cleaning.”) A new PDS Check Sheet has been developed for the 2020 model year Yaris Sedan/Hatchback MEX-Prod. Some check points have been added, expanded, or clarified. Bulletins are available for items in bold type. Warranty Policy If the need for additional repairs or adjustment is noted during the PDS, the required service should be performed under warranty. Reimbursement will be managed under the warranty policy. The Warranty Policy and Procedures Manual requires that you maintain the completed PDS Check Sheet in the customer’s file. If you cannot produce a completed form for each retailed vehicle upon TMS and/or Region/Distributor audit, the PDS payment amount will be subject to debit. -

State Laws Impacting Altered-Height Vehicles

State Laws Impacting Altered-Height Vehicles

The following document is a collection of available state-specific vehicle height statutes and regulations. A standard system for regulating vehicle and frame height does not exist among the states, so bumper height and/or headlight height specifications are also included. The information has been organized by state and is in alphabetical order starting with Alabama. To quickly navigate through the document, use the 'Find' (Ctrl+F) function. Information contained herein is current as of October 2014, but these state laws and regulations are subject to change. Consult the current statutes and regulations in a particular state before raising or lowering a vehicle to be operated in that state. These materials have been prepared by SEMA to provide guidance on various state laws regarding altered height vehicles and are intended solely as an informational aid. SEMA disclaims responsibility and liability for any damages or claims arising out of the use of or reliance on the content of this informational resource.

Table columns: State; Bumpers; Headlights; Tail Lamps / Reflectors; Tires / Wheels; Frame / Body Modifications; Other.

Alabama: Height of head lamps must be at least 24" but no higher than 54". Height of tail lamps must be at least 20" but no higher than 60". Height of reflectors must be at least 24" but no higher than 60". Max. loaded vehicle height not to exceed 13' 6".

(Next row, truncated in this excerpt): Height of headlights must be at least 24". Height of taillights must be at least 20". Body floor may not be raised more than 4"

Quantitative Study to Define the Relevant Market in the Passenger Car Sector by Frank Verboven, K.U

17 September 2002. Final Report. Frank Verboven, Catholic University of Leuven.

This study has been commissioned by the Directorate General of Competition of the European Commission. Any views expressed in it are those of the author: they do not necessarily reflect the views of the Directorate General of Competition or the European Commission.

EXECUTIVE SUMMARY
1 INTRODUCTION
2 DEFINING THE RELEVANT MARKET
2.1 COMPETITIVE CONSTRAINTS
2.2 DEMAND SUBSTITUTION
2.3 PRODUCT VERSUS GEOGRAPHIC MARKET
3 THE RELEVANT GEOGRAPHIC MARKET
3.1 HISTORICAL EVIDENCE
3.2 OBSTACLES TO CROSS-BORDER TRADE
4 THE DEMAND FOR NEW PASSENGER CARS

2021 NEMPA Winter Drive Awards

Contact: Darren Jacobs, Trevor Dorchies, Ron Kiino

Ram 1500 TRX Captures Official Winter Pickup Truck of New England Crown; Chrysler Pacifica and Jeep® Gladiator Earn Class Honors at NEMPA Winter Vehicle Driving Event

2021 Ram 1500 TRX, in its first year of eligibility, named Official Winter Pickup Truck of New England at annual New England Motor Press Association (NEMPA) winter vehicle competition
2021 Chrysler Pacifica, now with all-wheel-drive capability, takes People Mover best-in-class honors
2021 Jeep® Gladiator named Mid-size Pickup Truck class winner
Winners chosen by jurors representing print, television, digital and radio media outlets from Connecticut, Maine, Massachusetts, New Hampshire, Rhode Island and Vermont

July 21, 2021, Auburn Hills, Mich. - The 2021 Ram 1500 TRX has been named Official Winter Pickup Truck of New England while the 2021 Chrysler Pacifica and 2021 Jeep® Gladiator earned class honors at the annual New England Motor Press Association (NEMPA) winter vehicle competition.

"The Ram 1500 TRX earned the top spot as the overall winner," said John Paul, President, New England Motor Press Association. "The mighty TRX is designed to handle the harshest conditions, including snow, and satisfies the New England motorist in every detail from its supercharged engine to its 35-inch tires. The Jeep Gladiator doesn’t just handle New England winters, it dominates them, which is why it’s a favorite and repeat winner in taking NEMPA honors. Chrysler Pacifica now offers true all-wheel-drive that can handle not just New England winters, but with its full suite of safety features, further adds to driver and passenger safety.

A Two-Point Vehicle Classification System

178 TRANSPORTATION RESEARCH RECORD 1215

A Two-Point Vehicle Classification System

BERNARD C. McCULLOUGH, JR., SIAMAK A. ARDEKANI, AND LI-REN HUANG

The counting and classification of vehicles is an important part of transportation engineering. In the past 20 years many automated systems have been developed to accomplish that labor-intensive task. Unfortunately, most of those systems are characterized by inaccurate detection systems and/or classification methods that result in many classification errors, thus limiting the accuracy of the system. This report describes the development of a new vehicle classification database and computer program, originally designed for use in the Two-Point-Time Ratio method of vehicle classification, which greatly improves the accuracy of automated classification systems. The program utilizes information provided by either vehicle detection sensors or the program user to determine the velocity, number of axles, and axle spacings of a passing vehicle. It then matches the axle numbers and spacings with one of thirty-one possible vehicle classifications and prints the vehicle class, speed, and

... hours required, however, the cost of such a count was often high. To offset such costs, many techniques for the automatic counting, length determination, and classification of vehicles have been developed within the past decade. One popular method, especially in Europe, is the Automatic Length Indication and Classification Equipment method, known as "ALICE," which was introduced by D. D. Nash in 1976 (1). This report covers a simpler, more accurate system of vehicle counting and classification and details the development of the classification software that will enable it to surpass previous systems in accuracy.

Although many articles on vehicle classification methods have appeared in transportation journals within the past decade, most have dealt solely with new types, or applications, of vehicle detection systems.
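The excerpt says the program derives velocity, number of axles, and axle spacings from sensor data, but does not give the arithmetic. The sketch below shows one generic way such quantities could be computed from two sensors a known distance apart. It is an assumption for illustration, not the authors' Two-Point-Time Ratio method or their thirty-one-class database; the function name, parameters, and sample timings are all hypothetical.

```python
from typing import List, Sequence, Tuple


def speed_and_axle_spacings(
    hits_a: Sequence[float],
    hits_b: Sequence[float],
    sensor_gap_m: float,
) -> Tuple[float, int, List[float]]:
    """Estimate speed, axle count, and axle spacings from two sensors.

    hits_a / hits_b: times in seconds at which each axle crosses the
    upstream sensor A and the downstream sensor B (same axle order).
    sensor_gap_m: distance between the sensors in metres.
    Assumes constant speed while the vehicle passes (an illustrative
    simplification, not taken from the excerpt).
    """
    if not hits_a or len(hits_a) != len(hits_b):
        raise ValueError("both sensors must record the same axles")
    travel_time = hits_b[0] - hits_a[0]
    if travel_time <= 0:
        raise ValueError("sensor B must be hit after sensor A")

    # Speed: the first axle covers the sensor gap in travel_time seconds.
    speed = sensor_gap_m / travel_time

    # Spacing: distance travelled between consecutive axle hits on sensor A.
    spacings = [speed * (t2 - t1) for t1, t2 in zip(hits_a, hits_a[1:])]
    return speed, len(hits_a), spacings


if __name__ == "__main__":
    # Hypothetical two-axle vehicle, sensors 2.5 m apart.
    speed, axles, spacings = speed_and_axle_spacings(
        [0.0, 0.25], [0.125, 0.375], 2.5
    )
    print(f"speed = {speed} m/s, axles = {axles}, spacings = {spacings} m")
```

A classifier along the lines the excerpt describes would then compare the axle count and spacings against a table of known vehicle classes; that lookup is omitted here because the excerpt does not list the classes.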

Report to Congress

REPORT TO CONGRESS

Effects of the Alternative Motor Fuels Act CAFE Incentives Policy

PREPARED BY: U.S. Department of Transportation, U.S. Department of Energy, U.S. Environmental Protection Agency

March 2002

Table of Contents
Highlights
Executive Summary
I. Introduction
II. Background
III. Availability of Alternative Fuel Vehicles
IV. Availability and Use of Alternative Fuels
V. Analysis of the Effects on Energy Conservation and the Environment
VI. Summary of Findings and Recommendations
Appendices
Appendix A: Summary of Federal Register Comments
Appendix B: Listing of CAFE Fines Paid by Vehicle Manufacturers
Appendix C: U.S. Refueling Site Counts by State