Amdahl's Law

Speedup due to enhancement E:

$$\text{Speedup}(E) = \frac{\text{ExTime without } E}{\text{ExTime with } E} = \frac{\text{Performance with } E}{\text{Performance without } E}$$
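The speedup ratio can be checked numerically. The following is a minimal Python sketch, not taken from the source: the function names and the timings are hypothetical, and performance is assumed to be the reciprocal of execution time.

```python
def speedup(extime_without: float, extime_with: float) -> float:
    """Speedup(E) = ExTime without E / ExTime with E."""
    if extime_without <= 0 or extime_with <= 0:
        raise ValueError("execution times must be positive")
    return extime_without / extime_with


def speedup_from_performance(perf_with: float, perf_without: float) -> float:
    """Speedup(E) = Performance with E / Performance without E."""
    return perf_with / perf_without


# Hypothetical timings (seconds), chosen only for illustration.
old_time = 12.0
new_time = 8.0

s_time = speedup(old_time, new_time)
# Assumption: performance = 1 / execution time.
s_perf = speedup_from_performance(1.0 / new_time, 1.0 / old_time)

print(f"speedup from times:       {s_time:.3f}")
print(f"speedup from performance: {s_perf:.3f}")
```

Both forms give the same ratio, which is the point of the two-sided definition.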

Recommended publications

Fill Your Boots: Enhanced Embedded Bootloader Exploits Via Fault Injection and Binary Analysis

IACR Transactions on Cryptographic Hardware and Embedded Systems, ISSN 2569-2925, Vol. 2021, No. 1, pp. 56–81. DOI:10.46586/tches.v2021.i1.56-81

Fill your Boots: Enhanced Embedded Bootloader Exploits via Fault Injection and Binary Analysis

Jan Van den Herrewegen (1), David Oswald (1), Flavio D. Garcia (1) and Qais Temeiza (2)

(1) School of Computer Science, University of Birmingham, UK, {jxv572,d.f.oswald,f.garcia}@cs.bham.ac.uk

(2) Independent Researcher, [email protected]

Abstract. The bootloader of an embedded microcontroller is responsible for guarding the device’s internal (flash) memory, enforcing read/write protection mechanisms. Fault injection techniques such as voltage or clock glitching have been proven successful in bypassing such protection for specific microcontrollers, but this often requires expensive equipment and/or exhaustive search of the fault parameters. When multiple glitches are required (e.g., when countermeasures are in place) this search becomes of exponential complexity and thus infeasible. Another challenge which makes embedded bootloaders notoriously hard to analyse is their lack of debugging capabilities. This paper proposes a grey-box approach that leverages binary analysis and advanced software exploitation techniques combined with voltage glitching to develop a powerful attack methodology against embedded bootloaders. We showcase our techniques with three real-world microcontrollers as case studies: 1) we combine static and on-chip dynamic analysis to enable a Return-Oriented Programming exploit on the bootloader of the NXP LPC microcontrollers; 2) we leverage on-chip dynamic analysis on the bootloader of the popular STM8 microcontrollers to constrain the glitch parameter search, achieving the first fully-documented multi-glitch attack on a real-world target; 3) we apply symbolic execution to precisely aim voltage glitches at target instructions based on the execution path in the bootloader of the Renesas 78K0 automotive microcontroller.

Computer Organization and Architecture Designing for Performance Ninth Edition

COMPUTER ORGANIZATION AND ARCHITECTURE: DESIGNING FOR PERFORMANCE, NINTH EDITION

William Stallings

Boston, Columbus, Indianapolis, New York, San Francisco, Upper Saddle River, Amsterdam, Cape Town, Dubai, London, Madrid, Milan, Munich, Paris, Montréal, Toronto, Delhi, Mexico City, São Paulo, Sydney, Hong Kong, Seoul, Singapore, Taipei, Tokyo

Editorial Director: Marcia Horton; Executive Editor: Tracy Dunkelberger; Associate Editor: Carole Snyder; Director of Marketing: Patrice Jones; Marketing Manager: Yez Alayan; Marketing Coordinator: Kathryn Ferranti; Marketing Assistant: Emma Snider; Director of Production: Vince O’Brien; Managing Editor: Jeff Holcomb; Production Project Manager: Kayla Smith-Tarbox; Production Editor: Pat Brown; Manufacturing Buyer: Pat Brown; Creative Director: Jayne Conte; Designer: Bruce Kenselaar; Manager, Visual Research: Karen Sanatar; Manager, Rights and Permissions: Mike Joyce; Text Permission Coordinator: Jen Roach; Cover Art: Charles Bowman/Robert Harding; Lead Media Project Manager: Daniel Sandin; Full-Service Project Management: Shiny Rajesh/Integra Software Services Pvt. Ltd.; Composition: Integra Software Services Pvt. Ltd.; Printer/Binder: Edward Brothers; Cover Printer: Lehigh-Phoenix Color/Hagerstown; Text Font: Times Ten-Roman.

Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey. Figure 17.11: Reprinted with permission from Ethernet Alliance. Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text.

Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America.

45-Year CPU Evolution: One Law and Two Equations

45-year CPU evolution: one law and two equations

Daniel Etiemble, LRI-CNRS, University Paris Sud, Orsay, France, [email protected]

Abstract: Moore’s law and two equations allow to explain the main trends of CPU evolution since MOS technologies have been used to implement microprocessors.

Keywords: Moore’s law, execution time, CMOS power dissipation.

I. INTRODUCTION

A new era started when MOS technologies were used to build microprocessors. After pMOS (Intel 4004 in 1971) and nMOS (Intel 8080 in 1974), CMOS became quickly the leading technology, used by Intel since 1985 with 80386 CPU. MOS technologies obey an empirical law, stated in 1965 and known as Moore’s law: the number of transistors integrated on a chip doubles every N months. Fig. 1 presents the evolution for DRAM memories, processors (MPU) and three types of read-only memories [1]. The growth rate decreases with years, from

a) IC is the instruction count.

b) CPI is the clock cycles per instruction and IPC = 1/CPI is the instruction count per clock cycle.

c) Tc is the clock cycle time and F = 1/Tc is the clock frequency.

The power dissipation of CMOS circuits is the second equation (2). CMOS power dissipation is decomposed into static and dynamic powers. For dynamic power, Vdd is the power supply, F is the clock frequency, ΣCi is the sum of gate and interconnection capacitances and α is the average percentage of switching capacitances: α is the activity factor of the overall circuit.

$$P_d = P_{d,\text{static}} + \alpha \times \Sigma C_i \times V_{dd}^{2} \times F \qquad (2)$$

II. CONSEQUENCES OF MOORE LAW

A.
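The two equations can be illustrated with a short Python sketch. The excerpt does not show the first equation; the code assumes it is the classic CPU performance equation (execution time = IC × CPI × Tc), which matches definitions a) to c). All function names and numeric values are hypothetical.

```python
def execution_time(ic: float, cpi: float, tc: float) -> float:
    """Assumed form of the first equation: time = IC * CPI * Tc."""
    return ic * cpi * tc


def cmos_power(p_static: float, alpha: float, sum_ci: float,
               vdd: float, f: float) -> float:
    """Equation (2): Pd = Pd_static + alpha * sum(Ci) * Vdd^2 * F."""
    return p_static + alpha * sum_ci * vdd ** 2 * f


# Hypothetical workload: 1e9 instructions, CPI of 1.5, 2 GHz clock.
f_hz = 2e9
t_exec = execution_time(ic=1e9, cpi=1.5, tc=1.0 / f_hz)

# Hypothetical circuit: 1 W static, activity factor 0.1, 10 nF switched.
p_nominal = cmos_power(p_static=1.0, alpha=0.1, sum_ci=1e-8, vdd=1.0, f=f_hz)
# Halving Vdd cuts the dynamic term by a factor of four.
p_low_vdd = cmos_power(p_static=1.0, alpha=0.1, sum_ci=1e-8, vdd=0.5, f=f_hz)

print(f"execution time: {t_exec:.3f} s")
print(f"power at Vdd=1.0 V: {p_nominal:.2f} W")
print(f"power at Vdd=0.5 V: {p_low_vdd:.2f} W")
```

The quadratic dependence on Vdd is why supply-voltage scaling has such a large effect on dynamic power in this model.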

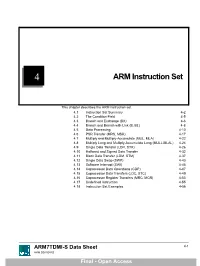

ARM Instruction Set

4 ARM Instruction Set

This chapter describes the ARM instruction set.

- 4.1 Instruction Set Summary
- 4.2 The Condition Field
- 4.3 Branch and Exchange (BX)
- 4.4 Branch and Branch with Link (B, BL)
- 4.5 Data Processing
- 4.6 PSR Transfer (MRS, MSR)
- 4.7 Multiply and Multiply-Accumulate (MUL, MLA)
- 4.8 Multiply Long and Multiply-Accumulate Long (MULL, MLAL)
- 4.9 Single Data Transfer (LDR, STR)
- 4.10 Halfword and Signed Data Transfer
- 4.11 Block Data Transfer (LDM, STM)
- 4.12 Single Data Swap (SWP)
- 4.13 Software Interrupt (SWI)
- 4.14 Coprocessor Data Operations (CDP)
- 4.15 Coprocessor Data Transfers (LDC, STC)
- 4.16 Coprocessor Register Transfers (MRC, MCR)
- 4.17 Undefined Instruction
- 4.18 Instruction Set Examples

ARM7TDMI-S Data Sheet, ARM DDI 0084D, Final - Open Access

4.1 Instruction Set Summary

4.1.1 Format summary

The ARM instruction set formats are shown below.

| Format | Fields, from bit 31 down to bit 0 |
| --- | --- |
| Data Processing / PSR Transfer | Cond 0 0 I Opcode S Rn Rd Operand 2 |
| Multiply | Cond 0 0 0 0 0 0 A S Rd Rn Rs 1 0 0 1 Rm |
| Multiply Long | Cond 0 0 0 0 1 U A S RdHi RdLo Rn 1 0 0 1 Rm |
| Single Data Swap | Cond 0 0 0 1 0 B 0 0 Rn Rd 0 0 0 0 1 0 0 1 Rm |
| Branch and Exchange | Cond 0 0 0 1 0 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 1 Rn |
| Halfword Data Transfer: register offset | Cond 0 0 0 P U 0 W L Rn Rd 0 0 0 0 1 S H 1 Rm |
| Halfword Data Transfer: immediate offset | Cond 0 0 0 P U 1 W L Rn Rd Offset 1 S H 1 Offset |
| | Cond 0 |
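To make the Data Processing format concrete, here is a small Python sketch that splits a 32-bit instruction word into its fields. The bit ruler in the excerpt is garbled, so the bit positions used here come from the standard 32-bit ARM encoding (Cond in bits 31:28, I in bit 25, Opcode in bits 24:21, S in bit 20, Rn in 19:16, Rd in 15:12, Operand 2 in 11:0). The function name and the sample word are illustrative choices.

```python
def decode_data_processing(word: int) -> dict:
    """Split a 32-bit ARM data-processing instruction into its fields."""
    if not 0 <= word <= 0xFFFFFFFF:
        raise ValueError("instruction word must fit in 32 bits")
    return {
        "cond": (word >> 28) & 0xF,        # bits 31:28, condition field
        "class_bits": (word >> 26) & 0x3,  # bits 27:26, 00 for this format
        "I": (word >> 25) & 0x1,           # bit 25, immediate operand flag
        "opcode": (word >> 21) & 0xF,      # bits 24:21, operation code
        "S": (word >> 20) & 0x1,           # bit 20, set condition codes
        "Rn": (word >> 16) & 0xF,          # bits 19:16, first operand register
        "Rd": (word >> 12) & 0xF,          # bits 15:12, destination register
        "operand2": word & 0xFFF,          # bits 11:0, second operand
    }


# 0xE0812003 encodes ADD r2, r1, r3 with the "always" condition.
fields = decode_data_processing(0xE0812003)
for name, value in fields.items():
    print(f"{name:10s} = {value:#x}")
```

Shifting and masking like this is the usual way to read fixed-width instruction fields; the other formats in the table differ only in which bits are fixed and which are fields.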

CUDA C Best Practices Guide

CUDA C BEST PRACTICES GUIDE

DG-05603-001_v9.0 | June 2018

Design Guide

TABLE OF CONTENTS

- Preface
  - What Is This Document?
  - Who Should Read This Guide?
  - Assess, Parallelize, Optimize, Deploy
    - Assess
    - Parallelize
    - Optimize
    - Deploy
  - Recommendations and Best Practices
- Chapter 1. Assessing Your Application
- Chapter 2. Heterogeneous Computing
  - 2.1. Differences between Host and Device
  - 2.2. What Runs on a CUDA-Enabled Device?
- Chapter 3. Application Profiling

3.2 the CORDIC Algorithm

UC San Diego Electronic Theses and Dissertations

Title: Improved VLSI architecture for attitude determination computations

Permalink: https://escholarship.org/uc/item/5jf926fv

Author: Arrigo, Jeanette Fay Freauf

Publication Date: 2006

Peer reviewed | Thesis/dissertation

eScholarship.org, powered by the California Digital Library, University of California

UNIVERSITY OF CALIFORNIA, SAN DIEGO

Improved VLSI Architecture for Attitude Determination Computations

A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Electrical and Computer Engineering (Electronic Circuits and Systems) by Jeanette Fay Freauf Arrigo

Committee in charge: Professor Paul M. Chau, Chair; Professor C.K. Cheng; Professor Sujit Dey; Professor Lawrence Larson; Professor Alan Schneider

2006

Copyright Jeanette Fay Freauf Arrigo, 2006. All rights reserved.

DEDICATION

This thesis is dedicated to my husband Dale Arrigo for his encouragement, support and model of perseverance, and to my father Eugene Freauf for his patience during my pursuit. In memory of my mother Fay Freauf and grandmother Fay Linton Thoreson, incredible mentors and great advocates of the quest for knowledge.

TABLE OF CONTENTS

- Signature Page
- Dedication
- Table of Contents

Consider an Instruction Cycle Consisting of Fetch, Operators Fetch (Immediate/Direct/Indirect), Execute and Interrupt Cycles

Module-2, Unit-3 Instruction Execution

Question 1: Consider an instruction cycle consisting of fetch, operators fetch (immediate/direct/indirect), execute and interrupt cycles. Explain the purpose of these four cycles.

Solution 1: The life of an instruction passes through four phases: (i) fetch, (ii) decode and operators fetch, (iii) execute and (iv) interrupt. The purposes of these phases are as follows.

1. Fetch

In the stored program concept, all instructions are present in memory along with the data. The first phase is therefore the "fetch", which begins with retrieving the address stored in the Program Counter (PC). The address stored in the PC refers to the memory location holding the instruction to be executed next. That address is placed on the address bus and the memory is set to read mode. The contents of the corresponding memory location (i.e., the instruction) are transferred via the data bus to a special register called the Instruction Register (IR), which holds the instruction to be executed. The PC is then incremented to point to the address from which the next instruction is to be fetched. So the fetch phase consists of four steps:

a) MAR <= PC (the address of the next instruction is copied from the program counter into the MAR)

b) MBR <= (MEMORY) (the contents of the data bus are copied into the MBR)

c) PC <= PC + 1 (the PC is incremented by the instruction length)

d) IR <= MBR (the instruction is transferred from the MBR to the IR, and the MBR is then free for future data fetches)
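The four fetch steps can be traced in a toy simulator. This is a minimal Python sketch under simplifying assumptions: memory is a plain list with one instruction per cell, so the instruction length is 1, and the class name and sample instruction words are hypothetical.

```python
class ToyCPU:
    """Toy model of the fetch phase only (no decode or execute)."""

    def __init__(self, memory):
        self.memory = memory  # list of instruction words, one per address
        self.pc = 0           # Program Counter
        self.mar = 0          # Memory Address Register
        self.mbr = 0          # Memory Buffer Register
        self.ir = 0           # Instruction Register

    def fetch(self):
        self.mar = self.pc                # a) MAR <= PC
        self.mbr = self.memory[self.mar]  # b) MBR <= (MEMORY)
        self.pc += 1                      # c) PC <= PC + 1
        self.ir = self.mbr                # d) IR <= MBR
        return self.ir


# Hypothetical instruction words, used only to show the register traffic.
cpu = ToyCPU(memory=[0x10, 0x20, 0x30])
first = cpu.fetch()
second = cpu.fetch()
print(f"first={first:#x} second={second:#x} pc={cpu.pc}")
```

After two fetches the PC points at address 2, and the IR holds the second instruction word.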

Akukwe Michael Lotachukwu 18/Sci01/014 Computer Science

AKUKWE MICHAEL LOTACHUKWU 18/SCI01/014 COMPUTER SCIENCE

The purpose of every computer is some form of data processing. The CPU supports data processing by performing the functions of fetch, decode and execute on programmed instructions. Taken together, these functions are frequently referred to as the instruction cycle. In addition to the instruction cycle functions, the CPU performs fetch and write functions on data.

When a program runs on a computer, its instructions are stored in computer memory until they are executed. The CPU uses a program counter to fetch the next instruction from memory, where it is stored as binary machine code. The CPU decodes the instruction to determine which operation it specifies. Once this is done, the CPU does what the instruction tells it to: performing an operation, fetching or storing data, or adjusting the program counter to jump to a different instruction.

The types of operations that typically can be performed by the CPU include simple math functions like addition, subtraction, multiplication and division. The CPU can also perform comparisons between data objects to determine if they are equal. All the amazing things that computers can do are performed with these and a few other basic operations. After an instruction is executed, the next instruction is fetched and the cycle continues.

While performing the execute function of the instruction cycle, the CPU may be asked to execute an instruction that requires data. For example, executing an arithmetic function requires the numbers that will be used for the calculation. To deliver the necessary data, there are instructions to fetch data from memory and write data that has been processed back to memory.

Multi-Cycle Datapath Operation

Book's Definition of Performance

• For some program running on machine X, PerformanceX = 1 / Execution timeX

• "X is n times faster than Y": PerformanceX / PerformanceY = n

• Problem:

– machine A runs a program in 20 seconds

– machine B runs the same program in 25 seconds

Example

• Our favorite program runs in 10 seconds on computer A, which has a 400 MHz clock. We are trying to help a computer designer build a new machine B that will run this program in 6 seconds. The designer can use new (or perhaps more expensive) technology to substantially increase the clock rate, but has informed us that this increase will affect the rest of the CPU design, causing machine B to require 1.2 times as many clock cycles as machine A for the same program. What clock rate should we tell the designer to target?

• Don't panic; this can easily be worked out from basic principles.

Now that we understand cycles

• A given program will require

– some number of instructions (machine instructions)

– some number of cycles

– some number of seconds

• We have a vocabulary that relates these quantities:

– cycle time (seconds per cycle)

– clock rate (cycles per second)

– CPI (cycles per instruction): a floating point intensive application might have a higher CPI

– MIPS (millions of instructions per second): this would be higher for a program using simple instructions

Performance

• Performance is determined by execution time

• Do any of the other variables equal performance?

– # of cycles to execute program?

– # of instructions in program?

– # of cycles per second?

– average # of cycles per instruction?

– average # of instructions per second?

• Common pitfall: thinking one of the variables is indicative of performance when it really isn’t.
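Both slide problems can be worked from the relation cycles = execution time × clock rate. The Python sketch below is one way to do the arithmetic; the function and variable names are illustrative, and the numbers are the ones given in the slides.

```python
def relative_performance(time_x: float, time_y: float) -> float:
    """How many times faster X is than Y: Perf_X / Perf_Y = time_Y / time_X."""
    return time_y / time_x


def required_clock_rate(cycles: float, target_time_s: float) -> float:
    """Clock rate (Hz) needed to run `cycles` cycles in `target_time_s` seconds."""
    return cycles / target_time_s


# Problem: machine A takes 20 s, machine B takes 25 s.
a_vs_b = relative_performance(20.0, 25.0)

# Example: A runs the program in 10 s at 400 MHz.
cycles_a = 10.0 * 400e6           # cycles = seconds * cycles per second
cycles_b = 1.2 * cycles_a         # B needs 1.2 times as many cycles
clock_b = required_clock_rate(cycles_b, 6.0)

print(f"A is {a_vs_b:.2f} times faster than B")
print(f"Machine B needs a clock of about {clock_b / 1e6:.0f} MHz")
```

Under these numbers machine B needs 4.8 billion cycles in 6 seconds, so the target is twice machine A's clock rate.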

Parallel Computing

Lecture 1: Computer Organization

Outline

• Overview of parallel computing

• Overview of computer organization

– Intel 8086 architecture

• Implicit parallelism

• von Neumann bottleneck

• Cache memory

– Writing cache-friendly code

Why parallel computing

• Solving an $n \times n$ linear system Ax=b by using Gaussian elimination takes $\approx \frac{1}{3}n^3$ flops.

• On a Core i7 975 @ 4.0 GHz, which is capable of about 60-70 Gigaflops:

| n | flops | time |
| --- | --- | --- |
| 1000 | $3.3\times10^{8}$ | 0.006 seconds |
| 1000000 | $3.3\times10^{17}$ | 57.9 days |

What is parallel computing?

• Serial computing

• Parallel computing

https://computing.llnl.gov/tutorials/parallel_comp

Milestones in Computer Architecture

• Analytical Engine (mechanical device), 1833

– Forerunner of the modern digital computer, Charles Babbage (1791-1871) at University of Cambridge

• Electronic Numerical Integrator and Computer (ENIAC), 1946

– Presper Eckert and John Mauchly at the University of Pennsylvania

– The first completely electronic, operational, general-purpose analytical calculator. 30 tons, 72 square meters, 200 kW.

– Read in 120 cards per minute; addition took 200 µs, division took 6 ms.

• IAS machine, 1952

– John von Neumann at Princeton’s Institute for Advanced Study (IAS)

– Program could be represented in digit form in the computer memory, along with data. Arithmetic could be implemented using binary numbers

– Most current machines use this design

• The transistor was invented at Bell Labs in 1948 by J. Bardeen, W. Brattain and W. Shockley.

• PDP-1, 1960, DEC

– First minicomputer (transistorized computer)

• PDP-8, 1965, DEC

– A single bus
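The flop counts in the table can be approximated with a few lines of Python. This sketch assumes the leading-order count of n³/3 flops, which agrees with the 3.3×10⁸ figure for n = 1000, and a sustained rate anywhere in the quoted 60-70 Gigaflops range. The slide does not say which rate produced its timings, so the results bracket the table values and are not meant to reproduce them exactly.

```python
def gaussian_elimination_flops(n: int) -> float:
    """Leading-order flop count assumed here: n^3 / 3."""
    return n ** 3 / 3


def run_time_seconds(n: int, flops_per_second: float) -> float:
    """Estimated time to solve an n x n system at a sustained flop rate."""
    return gaussian_elimination_flops(n) / flops_per_second


SECONDS_PER_DAY = 86_400

for n in (1_000, 1_000_000):
    flops = gaussian_elimination_flops(n)
    slow = run_time_seconds(n, 60e9)  # lower end of the quoted range
    fast = run_time_seconds(n, 70e9)  # upper end of the quoted range
    print(f"n={n}: {flops:.2e} flops, "
          f"{fast:.4g} to {slow:.4g} s "
          f"({fast / SECONDS_PER_DAY:.3g} to {slow / SECONDS_PER_DAY:.3g} days)")
```

Because the cost grows with the cube of n, a thousandfold increase in problem size multiplies the run time by a factor of one billion, which is the motivation for parallel computing in this slide.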

CS2504: Computer Organization

CS2504, Spring'2007 ©Dimitris Nikolopoulos

CS2504: Computer Organization

Lecture 4: Evaluating Performance

Instructor: Dimitris Nikolopoulos

Guest Lecturer: Matthew Curtis-Maury

Understanding Performance

Why do we study performance?

- Evaluate during design
- Evaluate before purchasing
- Key to understanding underlying organizational motivation

How can we (meaningfully) compare two machines? Performance, Cost, Value, etc.

Main issue: Need to understand what factors in the architecture contribute to overall system performance and the relative importance of these factors

- Effects of ISA on performance
- How will hardware change affect performance

Airplane Performance Analogy

| Airplane | Passengers | Range | Speed |
| --- | --- | --- | --- |
| Boeing 777 | 375 | 4630 | 610 |
| Boeing 747 | 470 | 4150 | 610 |
| Concorde | 132 | 4000 | 1250 |
| Douglas DC-8-50 | 146 | 8720 | 544 |
| Fighter Jet | 4 | 2000 | 1500 |

What metric do we use? Concorde is 2.05 times faster than the 747. The 747 has 1.74 times higher throughput. What about cost? And the winner is: It Depends!

Throughput vs. Response Time

Response Time: Execution time (e.g. seconds or clock ticks). How long does the program take to execute? How long do I have to wait for a result?

Throughput: Rate of completion (e.g. results per second/tick). What is the average execution time of the program? Measure of total work done.

Upgrading to a newer processor will improve: response time

Adding processors to the system will improve: throughput

Example: Throughput vs. Response Time

Suppose we know that an application that uses both a desktop client and a remote server is limited by network performance.
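The two ratios quoted for the airplane analogy can be reproduced from the table. In the Python sketch below, throughput is assumed to mean passengers × speed; the slide does not define it, but that definition yields the quoted 1.74 figure. The dictionary layout and variable names are illustrative.

```python
# (passengers, range, speed) taken from the table; units are not given there.
AIRPLANES = {
    "Boeing 777": (375, 4630, 610),
    "Boeing 747": (470, 4150, 610),
    "Concorde": (132, 4000, 1250),
    "Douglas DC-8-50": (146, 8720, 544),
    "Fighter Jet": (4, 2000, 1500),
}


def speed(name: str) -> int:
    return AIRPLANES[name][2]


def throughput(name: str) -> int:
    """Assumed definition: passengers * speed."""
    passengers, _range, spd = AIRPLANES[name]
    return passengers * spd


speed_ratio = speed("Concorde") / speed("Boeing 747")
throughput_ratio = throughput("Boeing 747") / throughput("Concorde")

print(f"Concorde is {speed_ratio:.2f} times faster than the 747")
print(f"747 has {throughput_ratio:.2f} times higher throughput")

# Which plane "wins" depends on the metric chosen.
fastest = max(AIRPLANES, key=speed)
highest_throughput = max(AIRPLANES, key=throughput)
print(f"fastest: {fastest}; highest throughput: {highest_throughput}")
```

Ranking by speed and ranking by throughput pick different airplanes, which is the slide's "It Depends!" point expressed in numbers.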

Analysis of Body Bias Control Using Overhead Conditions for Real Time Systems: a Practical Approach∗

IEICE TRANS. INF. & SYST., VOL.E101–D, NO.4 APRIL 2018, p. 1116

PAPER

Analysis of Body Bias Control Using Overhead Conditions for Real Time Systems: A Practical Approach∗

Carlos Cesar CORTES TORRES†a), Nonmember, Hayate OKUHARA†, Student Member, Nobuyuki YAMASAKI†, Member, and Hideharu AMANO†, Fellow

SUMMARY In the past decade, real-time systems (RTSs), which must maintain time constraints to avoid catastrophic consequences, have been widely introduced into various embedded systems and Internet of Things (IoTs). The RTSs are required to be energy efficient as they are used in embedded devices in which battery life is important. In this study, we investigated the RTS energy efficiency by analyzing the ability of body bias (BB) in providing a satisfying tradeoff between performance and energy. We propose a practical and realistic model that includes the BB energy and timing overhead in addition to idle region analysis. This study was conducted using accurate parameters extracted from a real chip using silicon on thin box (SOTB) technology. By using the BB control based on the proposed model, about 34% energy reduction was achieved.

in RTSs. These techniques can improve energy efficiency; however, they often require a large amount of power since they must control the supply voltages of the systems.

Body bias (BB) control is another solution that can improve RTS energy efficiency as it can manage the tradeoff between power leakage and performance without affecting the power supply [4], [5]. Its effect is further endorsed when systems are enabled with silicon on thin box (SOTB) technology [6], which is a novel and advanced fully depleted sili-