Neural Systems Analysis of Decision Making During Goal-Directed Navigation

Recommended publications

The Microscopical Structure of the Hippocampus in the Rat

Bratisl Lek Listy 2008; 109 (3): 106–110. EXPERIMENTAL STUDY. The microscopical structure of the hippocampus in the rat. El Falougy H, Kubikova E, Benuska J. Institute of Anatomy, Faculty of Medicine, Comenius University, Bratislava, Slovakia.

Abstract: The aim of this work was to study the rat's hippocampal formation by applying light microscopic methods. The histological methods used to explore this region of the rat's brain were the Nissl technique, the Bielschowsky block impregnation method, and the rapid Golgi technique. In the Nissl preparations, we identified only three fields of the hippocampus proprius (CA1, CA3 and CA4). CA2 was distinguished in the Bielschowsky-impregnated blocks. According to the available literature, the rapid Golgi technique gives the best results when fresh samples are used; in this study, we obtained good results using formalin-fixed sections. The layers of the hippocampal formation were differentiated. The pyramidal and granular cells were identified together with their axons and dendrites (Fig. 9, Ref. 22). Full Text (Free, PDF) www.bmj.sk.

Key words: archicortex, hippocampal formation, light microscopy, rat.

Abbreviations: CA1, regio I cornus ammonis; CA2, regio II cornus ammonis; CA3, regio III cornus ammonis; CA4, regio IV cornus ammonis.

… has been studied intensively since the time of Cajal (1911) and his student Lorente de Nó (1934).

… in the lateral border of the hippocampus. It continues under the corpus callosum as the fornix. The supracommissural hippocampus (the indusium griseum) extends over the corpus callosum to the anterior portion of the septum as a thin strip of gray cortex (5, 6).

The microscopical structure of the hippocampal formation
The hippocampal formation is subdivided into regions and fields according to the cell body location, cell body shape and size, …

Slicer 3 Tutorial: The SPL-PNL Brain Atlas

Slicer 3 Tutorial: The SPL-PNL Brain Atlas. Ion-Florin Talos, M.D., Surgical Planning Laboratory, Brigham and Women's Hospital, http://www.slicer.org. Acknowledgments: NIH P41RR013218 (Neuroimage Analysis Center); NIH U54EB005149 (NA-MIC). Disclaimer: It is the responsibility of the user of 3DSlicer to comply with both the terms of the license and with the applicable laws, regulations and rules. Material: Slicer 3 (http://www.slicer.org/pages/Special:Slicer_Downloads/Release); atlas data set (http://wiki.na-mic.org/Wiki/index.php/Slicer:Workshops:User_Training_101), comprising MRI, labels and 3D models. Learning objectives: loading the atlas data; creating and displaying customized 3D views of neuroanatomy. Prerequisites: Slicer training, Slicer 3 Training 1: Loading and Viewing Data (http://www.na-mic.org/Wiki/index.php/Slicer:Workshops:User_Training_101). Overview: Part 1: Loading the Brain Atlas Data; Part 2: Creating and Displaying Customized 3D Views of Neuroanatomy. Loading the Brain Atlas Data: Slicer can load anatomic grayscale data (CT, MRI) …

Julius-Kühn-Archiv 462: Hazards of Pesticides to Bees

ICP-PR Honey Bee Protection Group 1980–2017. The ICP-PR Bee Protection Group held its first meeting in Wageningen in 1980, and over the subsequent 38 years it has become the established expert forum for discussing the risks of pesticides to bees and for developing solutions to assess and manage this risk. In recent years, the Bee Protection Group has broadened its scope from honey bees to many other pollinating insects, such as wild bees including bumble bees. The group organizes international scientific symposia, usually once every three years. These are open to everyone interested. The group tries to involve as many countries as possible by holding each symposium in a different European country. It operates with working groups that study specific problems and propose solutions, which are subsequently discussed in plenary symposia. A wide range of international experts active in this field, drawn from regulatory authorities, industry, universities and research institutes, participate in the discussions. In the past decade the symposium has expanded considerably beyond Europe and is established as the international expert forum, with participants from several continents.

Hazards of pesticides to bees: 13th International Symposium of the ICP-PR Bee Protection Group, 18–20 October 2017, València (Spain). Proceedings. Pieter A. Oomen, Jens Pistorius (Editors). Julius-Kühn-Archiv 462, 2018.

Julius Kühn-Institut, Bundesforschungsinstitut für Kulturpflanzen (JKI; Federal Research Centre for Cultivated Plants). Publications of the JKI: The Julius Kühn-Institut is a higher federal authority and a federal research institute.

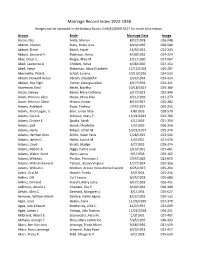

Marriage Record Index 1922-1938 Images Can Be Accessed in the Indiana Room

Marriage Record Index 1922-1938. Images can be accessed in the Indiana Room. Call (812)949-3527 for more information.

| Groom | Bride | Marriage Date | Image |
|---|---|---|---|
| Aaron, Elza | Antle, Marion | 8/12/1928 | 026-048 |
| Abbott, Charles | Ruby, Hallie June | 8/19/1935 | 030-580 |
| Abbott, Elmer | Beach, Hazel | 12/9/1922 | 022-243 |
| Abbott, Leonard H. | Robinson, Berta | 4/30/1926 | 024-324 |
| Abel, Oscar C. | Ringle, Alice M. | 1/11/1930 | 027-067 |
| Abell, Lawrence A. | Childers, Velva | 4/28/1930 | 027-154 |
| Abell, Steve | Blakeman, Mary Elizabeth | 12/12/1928 | 026-207 |
| Abernathy, Pete B. | Scholl, Lorena | 10/15/1926 | 024-533 |
| Abram, Howard Henry | Abram, Elizabeth F. | 3/24/1934 | 029-414 |
| Absher, Roy Elgin | Turner, Georgia Lillian | 4/17/1926 | 024-311 |
| Ackerman, Emil | Becht, Martha | 10/18/1927 | 025-380 |
| Acton, Dewey | Baker, Mary Cathrine | 3/17/1923 | 022-340 |
| Adam, Herman Glen | Harpe, Mary Allia | 4/11/1936 | 031-273 |
| Adam, Herman Glenn | Hinton, Esther | 8/13/1927 | 025-282 |
| Adams, Adelbert | Pope, Thelma | 7/14/1927 | 025-255 |
| Adams, Ancil Logan, Jr. | Eiler, Lillian Mae | 4/8/1933 | 028-570 |
| Adams, Cecil A. | Johnson, Mary E. | 12/21/1923 | 022-706 |
| Adams, Crozier E. | Sparks, Sarah | 4/1/1936 | 031-250 |
| Adams, Earl | Snook, Charlotte | 1/5/1935 | 030-250 |
| Adams, Harry | Meyer, Lillian M. | 10/21/1927 | 025-376 |
| Adams, Herman Glen | Smith, Hazel Irene | 2/28/1925 | 023-502 |
| Adams, James O. | Hallet, Louise M. | 4/3/1931 | 027-476 |
| Adams, Lloyd | Kirsch, Madge | 6/7/1932 | 028-274 |
| Adams, Robert A. | … | | |

HHS Public Access Author Manuscript

HHS Public Access author manuscript. Neuroscience. Author manuscript; available in PMC 2015 April 26. Published in final edited form as: Neuroscience. 2012 December 13; 226: 145–155. doi:10.1016/j.neuroscience.2012.09.011.

The Distribution of Phosphodiesterase 2a in the Rat Brain

D. T. Stephenson (a,†), T. M. Coskran (b), M. P. Kelly (a,‡), R. J. Kleiman (a,§), D. Morton (c), S. M. O'Neill (a), C. J. Schmidt (a), R. J. Weinberg (d), and F. S. Menniti (a,*)

(a) Neuroscience Biology, Pfizer Global Research & Development, Eastern Point Road, Groton, CT 06340, USA; (b) Investigative Pathology, Pfizer Global Research & Development, Eastern Point Road, Groton, CT 06340, USA; (c) Toxicologic Pathology, Pfizer Global Research & Development, Eastern Point Road, Groton, CT 06340, USA; (d) Department of Cell Biology & Physiology, Neuroscience Center, University of North Carolina, Chapel Hill, NC 27599, USA

Abstract: The phosphodiesterases (PDEs) are a superfamily of enzymes that regulate spatiotemporal signaling by the intracellular second messengers cAMP and cGMP. PDE2A is expressed at high levels in the mammalian brain. To advance our understanding of the role of this enzyme in the regulation of neuronal signaling, here we describe the distribution of PDE2A in the rat brain. PDE2A mRNA was prominently expressed in glutamatergic pyramidal cells in the cortex, and in pyramidal and dentate granule cells in the hippocampus. The protein was concentrated in the axons and nerve terminals of these neurons; staining was markedly weaker in the cell bodies and proximal dendrites.

Certain Olfactory Centers of the Forebrain of the Giant Panda (Ailuropoda Melanoleuca)

CERTAIN OLFACTORY CENTERS OF THE FOREBRAIN OF THE GIANT PANDA (AILUROPODA MELANOLEUCA)¹. EDWARD W. LAUER, Department of Anatomy, University of Michigan. THIRTEEN FIGURES.

INTRODUCTION. In the spring of 1946 the Laboratory of Comparative Neurology at the University of Michigan received from Professor Fred A. Mettler of Columbia University the brain of a giant panda (Ailuropoda melanoleuca) for histological study. This had been obtained from a mature female melanoleuca, Pan Dee, presented to the New York Zoological Society in 1941 through United China Relief by Mme. Chiang Kai-shek and Mme. H. H. Kung, and which had died in the fall of 1945 from acute paralytic enteritis and peritonitis. A report on the topographical anatomy of the brain was made by Mettler and Goss ('46), who concluded that externally it is identical with that of the bear. Unfortunately, practically no work has been done on the histological structure of the ursine brain, making it impossible to compare its microscopic structure with that of the panda. The panda brain was fixed in formalin, embedded in paraffin and sectioned at 30 µm. Alternate sections were stained with cresyl violet for cell study and by the Weil method for demonstration of fiber tracts. Another gross panda brain, also obtained from Professor Mettler, was available for orientation. The photomicrographs used for the illustrations were made with the assistance of Mr. …

¹ This investigation was aided by grants from the Horace H. Rackham School of Graduate Studies of the University of Michigan and from the A. B. Brower and E. R. Arn Medical Research and Scholarship Fund.

Theta Phase Precession Beyond the Hippocampus

Theta phase precession beyond the hippocampus. Authors: Sushant Malhotra (1,2,†), Robert W. A. Cross (1,†), Matthijs A. A. van der Meer (1,3,*). (1) Department of Biology, University of Waterloo, Ontario, Canada; (2) Systems Design Engineering, University of Waterloo; (3) Centre for Theoretical Neuroscience, University of Waterloo. †These authors contributed equally. *Correspondence should be addressed to MvdM, Department of Biology, University of Waterloo, 200 University Ave W, Waterloo, ON N2L 3G1, Canada. Running title: Phase precession beyond the hippocampus.

Abstract: The spike timing of spatially tuned cells throughout the rodent hippocampal formation displays a strikingly robust and precise organization. In individual place cells, spikes precess (occur at progressively earlier phases) relative to the theta local field potential (6–10 Hz) as an animal traverses a place field. At the population level, theta cycles shape repeated, compressed place cell sequences that correspond to coherent paths. The theta phase precession phenomenon has not only afforded insights into how multiple processing elements in the hippocampal formation interact; it is also believed to facilitate hippocampal contributions to rapid learning, navigation, and lookahead. However, theta phase precession is not unique to the hippocampus, suggesting that insights derived from hippocampal phase precession could elucidate processing in other structures. In this review we consider the implications of extrahippocampal phase precession in terms of mechanisms and functional relevance. We focus on phase precession in the ventral striatum, a prominent output structure of the hippocampus in which phase precession systematically appears in the firing of reward-anticipatory "ramp" neurons. We outline how ventral striatal phase precession can advance our understanding of behaviors thought to depend on interactions between the hippocampus and the ventral striatum, such as conditioned place preference and context-dependent reinstatement.

Clonal Architecture of the Mouse Hippocampus

The Journal of Neuroscience, May 1, 2002, 22(9):3520–3530. Clonal Architecture of the Mouse Hippocampus. Loren A. Martin (1), Seong-Seng Tan (2), and Dan Goldowitz (1). (1) Department of Anatomy and Neurobiology, University of Tennessee Health Science Center, Memphis, Tennessee 38163, and (2) Howard Florey Institute, University of Melbourne, Parkville VIC 3010, Australia.

Experimental mouse chimeras have proven useful in analyzing the cell lineages of various tissues. Here we use experimental mouse chimeras to study cell lineage of the hippocampus. We examined clonal architecture and lineage relationships of the hippocampal pyramidal cells, dentate granule cells, and GABAergic interneurons. We quantitatively analyzed like-genotype cohorts of these neuronal populations in the hippocampus of the most highly skewed chimeras to provide estimates of the size of the progenitor pool that gives rise to these neuronal groups. We also compared the percentage chimerism across various brain structures to gain insights into the origins of the hippocampus relative to other neighboring regions of the brain. Our qualitative analyses demonstrate that like-genotype cohorts of pyramidal … layer in a symmetrical manner. Clonally related populations of GABAergic interneurons are dispersed throughout the hippocampus and originate from progenitors that are separate from either pyramidal or granule cells. Granule and pyramidal cells, however, are closely linked in their lineages. Our quantitative analyses yielded estimates of the size of the progenitor pools that establish the pyramidal, granule, and GABAergic interneuronal populations as consisting of 7000, 400, and 40 progenitors, respectively, for each side of the hippocampus. Last, we found that the hippocampal pyramidal and granule cells share a lineage with cortical and diencephalic cells, pointing toward a common lineage that crosses the di-telencephalic boundaries.

The Hippocampus

Current Biology Magazine

Where next for the study of anhydrobiosis? Studies have largely relied on detecting gene upregulation upon exposure to desiccation. This approach will fail to identify constitutively expressed genes that are important for anhydrobiotic survival but whose expression does not change during desiccation and rehydration. We still do not understand why some nematodes, for example, do not survive desiccation, while others can survive immediate exposure to extreme desiccation, and yet others require preconditioning at a high relative humidity. A comparative approach is likely to be informative, as in a recently published comparison of the genomes of P. vanderplanki and its desiccation-intolerant relative P. nubifer.

Primer: The hippocampus. James J. Knierim.

The hippocampus is one of the most thoroughly investigated structures in the brain. Ever since the 1957 report of the case study H.M., who famously lost the ability to form new, declarative memories after surgical removal of the hippocampus and nearby temporal lobe structures to treat intractable epilepsy, the hippocampus has been at the forefront of research into the neurobiological bases of memory. This research led to the discovery in the hippocampus of long-term potentiation, the pre-eminent model …

[Figure 1: circuit diagram with labeled regions EC, DG, CA1, CA2 and CA3.] Figure 1. Coronal slice through the transverse axis of the hippocampus. The black lines trace the classic 'trisynaptic loop'. The red lines depict other important pathways in the hippocampus, including the direct projections from entorhinal cortex (EC) to all three CA fields, the feedback to the EC via the subiculum, the recurrent collateral circuitry of CA3, and the feedback projection from CA3 to DG.

Law Enforcement Agency Directory

Michigan Law Enforcement Agencies 08/16/2021

| Agency | Head | Address | TX | FAX |
|---|---|---|---|---|
| ADRIAN POLICE DEPARTMENT | CHIEF VINCENT P EMRICK | 155 E. MAUMEE STREET, ADRIAN MI 49221 | 517-264-4846 | 517-264-1927 |
| ADRIAN TOWNSHIP POLICE DEPARTMENT | CHIEF GARY HANSELMAN | 2907 TIPTON HWY, ADRIAN MI 49221 | 517-264-1000 | 517-265-6300 |
| ADRIAN-BLISSFIELD RAILROAD POLICE | CHIEF MARK W. DOBRONSKI | 38235 N. EXECUTIVE DR., WESTLAND MI 48185 | 734-641-2300 | 734-641-2323 |
| AKRON POLICE DEPARTMENT | CHIEF MATTHEW SIMERSON | 4380 BEACH STREET, P.O. BOX 295, AKRON MI 48701 | 989-691-5354 | 989-691-5423 |
| ALBION DPS | CHIEF SCOTT KIPP | 112 W CASS ST, ALBION MI 49224 | 517-629-3933 | 517-629-7828 |
| ALCONA COUNTY SHERIFFS OFFICE | SHERIFF SCOTT A. STEPHENSON | 214 WEST MAIN, HARRISVILLE MI 48740 | 989-724-6271 | 989-724-6181 |
| ALGER COUNTY SHERIFFS OFFICE | SHERIFF TODD BROCK | 101 EAST VARNUM STE B, MUNISING MI 49862 | 906-387-4444 | 906-387-1728 |
| ALLEGAN COUNTY SHERIFFS OFFICE | SHERIFF FRANK BAKER | 640 RIVER ST., ALLEGAN MI 49010 | 269-673-0500 | 269-673-0406 |
| ALLEGAN POLICE DEPARTMENT | CHIEF JAY GIBSON | 170 MONROE ST, ALLEGAN MI 49010 | 269-673-2115 | 269-673-5170 |
| ALLEN PARK POLICE DEPARTMENT | CHIEF CHRISTOPHER S EGAN | 15915 SOUTHFIELD RD, ALLEN PARK MI 48101 | 313-386-7800 | 313-386-4158 |
| ALLEN PARK POLICE DEPARTMENT | CHIEF CHRISTOPHER S EGAN | 15915 SOUTHFIELD RD, ALLEN PARK MI 48101 | 313-386-7800 | |
| ALMA DEPARTMENT OF PUBLIC SAFETY | CHIEF KENDRA OVERLA | 525 EAST SUPERIOR, ALMA MI 48801 | 989-463-8317 | 989-463-6233 |
| ALMONT POLICE DEPARTMENT | CHIEF ANDREW MARTIN | 817 NORTH MAIN, ALMONT MI 48003 | … | … |
| AMTRAK RAILROAD POLICE | DEPUTY CHIEF JOSEPH PATTERSON | 600 DEY ST. … | … | … |
| ARGENTINE TOWNSHIP POLICE DEPARTMENT | CHIEF DANIEL K ALLEN | … | … | … |

2012 Annual Report

INDIANAPOLIS ZOO Annual Report 2012: Changing the game for an endangered generation. Making a difference for the natural world.

The year kicked off with a long-awaited Indiana specialty license plate and concluded with a record-breaking Christmas celebration. In between, animal conservation was at the forefront in many ways, including the opening of the outstanding exotic bird exhibit Flights of Fancy, the noteworthy births of an elephant and a dolphin, and the arrivals of a rescued sea lion and a baby walrus. The traditional crowd-pleasing seasonal celebrations also filled the year. And don't forget White River Gardens, where orchids flourished in a salute to the natural world.

With the groundbreaking ceremony of the International Orangutan Center came a game-changing moment, this time for orangutans here and in the wild. The Center is a unique facility specifically designed to meet the physical, social, and intellectual needs of these endangered great apes. Its centerpiece, a 150-foot beacon that will be illuminated by lights the orangutans turn on, represents the hope that the species will not only survive but also thrive in a world-class environment.

The Indianapolis Prize Gala showcased our passion for preservation, as this fourth biennial award lauded a distinguished polar bear researcher. The honor is so prestigious that it has been called the Nobel Prize of the animal conservation world.

Your generous support is why we celebrate another successful, transformative year. Look inside as we remember some of the highlights and anticipate the challenges ahead. Was 2012 the best year ever for your Indianapolis Zoo? It certainly felt like it.

Dear Friends: We often hear experts speak of the "transformative" power of various entities or ideas. …

The Morphology of the Septum, Hippocampus, and Pallial Commissures in Reptiles and Mammals

THE MORPHOLOGY OF THE SEPTUM, HIPPOCAMPUS, AND PALLIAL COMMISSURES IN REPTILES AND MAMMALS¹. J. B. JOHNSTON, Institute of Anatomy, University of Minnesota. NINETY-THREE FIGURES.

In the mammalian brain the hippocampus extends from the base of the olfactory peduncle over the corpus callosum and bends down into the temporal lobe. Over the corpus callosum there is a well-developed hippocampus in monotremes and marsupials, while in higher mammals it is reduced to a slender vestige consisting of the stria longitudinalis and indusium. The telencephalic commissures in monotremes and marsupials form two transverse bundles in the rostral wall of the third ventricle. We owe our knowledge of the history of the pallial commissures in mammals chiefly to the work of Elliot Smith. This author states that these commissures are both contained in the lamina terminalis. The upper (dorsal) commissure represents the commissure hippocampi or psalterium; the lower (ventral) contains the commissura anterior and the fibers which serve the functions of the corpus callosum. In mammals, as the general pallium grows in extent, there is a corresponding increase in the number of corpus callosum fibers. These fibers are transferred from the lower to the upper bundle in the lamina terminalis, in which corpus callosum and psalterium then lie side by side. As the pallium grows, the corpus callosum becomes larger, rises up and bends on itself until it finally forms the great arched structure which we know in higher mammals and man. During all this process two changes have taken place in the lamina terminalis. First, it was invaded by cells from the neighboring medial portion of the olfactory lobe so that the paired …

¹ Neurological studies, University of Minnesota, no. …