Glossary of Computer Architecture Terms

Recommended publications

IBM Z Systems Introduction May 2017

IBM z Systems Introduction, May 2017. IBM z13s and IBM z13 Frequently Asked Questions, Worldwide. ZSQ03076-USEN-15.

Table of Contents: z13s Hardware; z13 Hardware; Performance; z13 Warranty; Hardware Management Console (HMC); Power requirements (including High Voltage DC Power option); Overhead Cabling and Power; z13 Water cooling option; Secure Service Container

The Intel Microprocessors: Architecture, Programming and Interfacing Introduction to the Microprocessor and Computer

Microprocessors (0630371), Fall 2010/2011, Lecture Notes #1. The Intel Microprocessors: Architecture, Programming and Interfacing. Introduction to the Microprocessor and Computer.

Outline of the lecture: evolution of programming languages; microcomputer architecture; instruction execution cycle.

Evolution of programming languages:

Machine language: the programmer had to remember the machine codes for the various operations, and the locations of the data in main memory, for example 0101 0011 0111…

Assembly language: each instruction is written in an easy-to-remember form called a mnemonic code. Example (assembly language, machine language): Load, 100100; ADD, 100101; SUB, 100011. A program called an assembler translates assembly language instructions into machine language.

High-level languages: Fortran, Cobol, Pascal, C++, C# and Java. A compiler translates instructions written in a high-level language into machine code.

Microprocessor-based system (microcomputer) architecture

[Figure: block diagram of a microprocessor-based system. Labels: Data Bus, I/O Bus, Address Bus, Memory Storage Unit, I/O Device #1, I/O Device #2, Central Processing Unit (CPU) containing registers, ALU, CU and clock.]

The figure shows the main components of a microprocessor-based system. The CPU (central processing unit) is where calculations and logic operations are done. It contains registers, a high-frequency clock, a control unit (CU) and an arithmetic logic unit (ALU).

Clock: synchronizes the internal operations of the CPU with other system components by pulsing at a constant rate. The basic unit of time for machine instructions is the machine cycle, or clock cycle. A machine instruction requires at least one clock cycle; some instructions require 50 clocks.

Control unit (CU): generates the control signals needed to coordinate the sequence of steps involved in executing machine instructions (it fetches data and instructions and decodes addresses for the ALU).
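
The assembler's job described in these notes can be sketched in a few lines. This is only an illustration: the three 6-bit codes come from the lecture's example table, while the function name `assemble` and the dictionary `OPCODES` are hypothetical and do not describe a real Intel instruction encoding.

```python
# Opcode table copied from the lecture's example. The codes are
# illustrative and are not a real Intel instruction encoding.
OPCODES = {"LOAD": "100100", "ADD": "100101", "SUB": "100011"}


def assemble(program):
    """Translate a list of mnemonics into machine-code bit strings."""
    machine_code = []
    for mnemonic in program:
        key = mnemonic.strip().upper()  # accept "Load" as well as "LOAD"
        if key not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic!r}")
        machine_code.append(OPCODES[key])
    return machine_code


if __name__ == "__main__":
    print(assemble(["Load", "ADD", "SUB"]))
```

A real assembler also handles operands, labels and addressing modes; the lookup table above covers only the mnemonic-to-opcode step.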

How Many Bits Are in a Byte in Computer Terms

How Many Bits Are in a Byte in Computer Terms. Large denominations of computer memory, such as the gigabyte and terabyte, are composed of bits. Any sixteen distinct values can be represented with a nibble. Bytes and bits are the starting point of the computer world: base-2, the ASCII character set, byte prefixes and binary math. The size of a byte can vary depending on the machine and the computing language. In most contexts a byte is equal to 8 bits, or 1 octet. In 1956 this unit was named by…

Bits and Bytes

BITS AND BYTES

To understand how a computer works, you need to understand the BINARY SYSTEM. The binary system is a numbering system that uses only two digits: 0 and 1. Although this may seem strange to humans, it fits the computer perfectly! A computer chip is made up of circuits. For each circuit, there are two possibilities: an electric current flows through the circuit (ON), or an electric current does not flow through the circuit (OFF). The number 1 represents an “on” circuit. The number 0 represents an “off” circuit. The two digits, 0 and 1, are called bits. The word bit comes from binary digit.

Every time the computer “reads” an instruction, it translates that instruction into a series of bits (0’s and 1’s). In most computers every letter, number, and symbol is translated into eight bits, a combination of eight 0’s and 1’s. For example, the letter A is translated into 01000001. The letter B is 01000010. Every single keystroke on the keyboard translates into a different combination of eight bits. A group of eight bits is called a byte: eight bits = 1 byte.

The capacity of computer memory, and of storage such as USB devices and DVDs, is measured in bytes. For example, a Word file might be 35 KB while a picture taken by a digital camera might be 4.5 MB. Hard drives are normally measured in GB or TB:

Kilobyte (KB): approximately 1,000 bytes
Megabyte (MB): approximately 1,000,000 (million) bytes
Gigabyte (GB): approximately 1,000,000,000 (billion) bytes
Terabyte (TB): approximately 1,000,000,000,000 (trillion) bytes

The binary code that computers use to represent characters is called ASCII (American Standard Code for Information Interchange).
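
The letter-to-bits translation above can be checked with a short sketch. The helper names `char_to_bits` and `bits_to_char` are hypothetical; the sketch assumes plain ASCII characters, each of which fits in one 8-bit byte.

```python
def char_to_bits(ch):
    """Return the 8-bit pattern for a single ASCII character."""
    code = ord(ch)
    if code > 127:
        raise ValueError("this sketch only handles ASCII characters")
    return format(code, "08b")  # zero-padded binary, eight digits wide


def bits_to_char(bits):
    """Return the character encoded by an 8-bit pattern."""
    return chr(int(bits, 2))


if __name__ == "__main__":
    print(char_to_bits("A"))         # 01000001
    print(char_to_bits("B"))         # 01000010
    print(bits_to_char("01000001"))  # A
```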

Unit 8 : Microprocessor Architecture

Unit 8: Microprocessor Architecture. Lesson 1: Microcomputer Structure

1.1. Learning Objectives

On completion of this lesson you will be able to:
♦ draw the block diagram of a simple computer
♦ understand the function of different units of a microcomputer
♦ learn the basic operation of the microcomputer bus system.

1.2. Digital Computer

A digital computer is a multipurpose, programmable machine that reads binary instructions from its memory, accepts binary data as input, processes the data according to those instructions, and provides results as output.

1.3. Basic Computer System Organization

Every computer contains five essential parts or units. They are:
i. the arithmetic logic unit (ALU)
ii. the control unit
iii. the memory unit
iv. the input unit
v. the output unit.

1.3.1. The Arithmetic and Logic Unit (ALU)

The arithmetic and logic unit (ALU) is the part of the computer that actually performs arithmetic and logical operations on data. All other elements of the computer system (control unit, registers, memory, I/O) are there mainly to bring data into the ALU to process and then to take the results back out. An arithmetic and logic unit and, indeed, all electronic components in the computer are based on the use of simple digital logic devices that can store binary digits and perform simple Boolean logic operations. Data are presented to the ALU in registers. These registers are temporary storage locations within the CPU that are connected by signal paths to the ALU.

HOW FAST? The Current Intel® Core™ Processor Has 43,000,000% More Transistors Than the 4004 Processor

40 years of Intel® microprocessor innovation: following Moore’s Law the whole way

Intel co-founder Gordon Moore once made a famous prediction that transistor count for computer chips would double every two years. The world’s first microprocessor, the Intel® 4004, was “born” in 1971, 10 years before the first PC came along. Using Moore’s Law as a guiding principle, Intel has provided ever-increasing functionality, performance and energy efficiency to its products. Just think: what if the world had followed this golden rule the last 40 years?

HOW FAST? The current Intel® Core™ processor has 43,000,000% more transistors than the 4004 processor. If a village with a 1971 population of 100 had grown as quickly, it would now be by far the largest city in the world.

War and Peace? Wait a second. The 4004 processor executed 92,000 instructions per second, while today’s Intel® Core™ i7 processor can run 92 billion. If your typing had accelerated at that rate, you’d be able to type Tolstoy’s classic in just over 1 second.

0101010101010101… You would need 25,000 years to turn a light switch on and off 1.5 trillion times, but today’s processors can do that in less than a second.

A PENNY SAVED… When released in 1981, the first well-equipped IBM PC cost about $11,250 in inflation-adjusted 2011 dollars. Today, much more powerful PCs are available in the $500 range (or even less).

Fly me to the moon. If space travel had come down in price as much as transistors have since 1971, the Apollo 11 mission, which cost around $355 million in 1969, would cost about as much as a latte.

Bit, Byte, and Binary

Bit, Byte, and Binary

| Number of bits | Number of values | 2 raised to the power | Number of bytes | Unit |
|---|---|---|---|---|
| 1 | 2 | 1 | | Bit (0 / 1) |
| 2 | 4 | 2 | | |
| 3 | 8 | 3 | | |
| 4 | 16 | 4 | | Nibble (hexadecimal unit) |
| 5 | 32 | 5 | | |
| 6 | 64 | 6 | | |
| 7 | 128 | 7 | | |
| 8 | 256 | 8 | 1 | Byte (one character) |
| 9 | 512 | 9 | | |
| 10 | 1024 | 10 | | |
| 16 | 65,536 | 16 | 2 | |

| Number of bytes | 2 raised to the power | Unit | Example |
|---|---|---|---|
| 1 | | Byte | One character |
| 1024 | 10 | Kilobyte (KB) | Small text |
| 1,048,576 | 20 | Megabyte (MB) | A book |
| 1,073,741,824 | 30 | Gigabyte (GB) | A large encyclopedia |
| 1,099,511,627,776 | 40 | Terabyte (TB) | |

bit: Short for binary digit, the smallest unit of information on a machine. John Tukey, a leading statistician and adviser to five presidents, first used the term in 1946. A single bit can hold only one of two values: 0 or 1. More meaningful information is obtained by combining consecutive bits into larger units. For example, a byte is composed of 8 consecutive bits.

Computers are sometimes classified by the number of bits they can process at one time or by the number of bits they use to represent addresses. These two values are not always the same, which leads to confusion. For example, classifying a computer as a 32-bit machine might mean that its data registers are 32 bits wide or that it uses 32 bits to identify each address in memory. Whereas larger registers make a computer faster, using more bits for addresses enables a machine to support larger programs.
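
The relationship between bit counts, value counts and address width can be sketched as follows. The function names are hypothetical, and `addressable_bytes` assumes a byte-addressable memory, which the text does not state explicitly.

```python
def distinct_values(bits):
    """Number of different values that `bits` binary digits can represent."""
    return 2 ** bits


def addressable_bytes(address_bits):
    """Memory reachable with addresses of the given width.

    Assumes each address names exactly one byte (byte-addressable memory).
    """
    return 2 ** address_bits


if __name__ == "__main__":
    for n in (1, 4, 8, 16):
        print(n, "bits ->", distinct_values(n), "values")
    # A machine with 32-bit addresses can reach 4 * 1024**3 bytes.
    print(addressable_bytes(32) // 1024 ** 3, "x 1,073,741,824 bytes")
```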

Performance of a Computer (Chapter 4) Vishwani D

ELEC 5200-001/6200-001 Computer Architecture and Design, Fall 2013. Performance of a Computer (Chapter 4). Vishwani D. Agrawal & Victor P. Nelson, Department of Electrical and Computer Engineering, Auburn University, Auburn, AL 36849.

What is Performance?

Response time: the time between the start and completion of a task.
Throughput: the total amount of work done in a given time.

Some performance measures: MIPS (million instructions per second). MFLOPS (million floating point operations per second), also GFLOPS, TFLOPS ($10^{12}$), etc. SPEC (Standard Performance Evaluation Corporation) benchmarks. LINPACK benchmarks, floating point computing, used for supercomputers. Synthetic benchmarks.

Small and Large Numbers

| Small | Prefix | Symbol | Large | Prefix | Symbol |
|---|---|---|---|---|---|
| $10^{-3}$ | milli | m | $10^{3}$ | kilo | k |
| $10^{-6}$ | micro | μ | $10^{6}$ | mega | M |
| $10^{-9}$ | nano | n | $10^{9}$ | giga | G |
| $10^{-12}$ | pico | p | $10^{12}$ | tera | T |
| $10^{-15}$ | femto | f | $10^{15}$ | peta | P |
| $10^{-18}$ | atto | | $10^{18}$ | exa | |
| $10^{-21}$ | zepto | | $10^{21}$ | zetta | |
| $10^{-24}$ | yocto | | $10^{24}$ | yotta | |

Computer Memory Size

| Power | Number | Prefix | bits | bytes |
|---|---|---|---|---|
| $2^{10}$ | 1,024 | K | Kb | KB |
| $2^{20}$ | 1,048,576 | M | Mb | MB |
| $2^{30}$ | 1,073,741,824 | G | Gb | GB |
| $2^{40}$ | 1,099,511,627,776 | T | Tb | TB |

Units for Measuring Performance

Time in seconds (s), microseconds (μs), nanoseconds (ns), or picoseconds (ps).
Clock cycle: the period of the hardware clock. Example: one clock cycle lasts 1 nanosecond for a 1 GHz clock frequency (or 1 GHz clock rate).
CPU time = (CPU clock cycles)/(clock rate)
Cycles per instruction (CPI): average number of clock cycles used to execute a computer instruction.
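
The units above combine as in the following sketch. The function names are hypothetical, and the instruction count and CPI used in the demonstration are made-up values, not figures from the course.

```python
def clock_period_seconds(clock_rate_hz):
    """Duration of one clock cycle, the reciprocal of the clock rate."""
    return 1.0 / clock_rate_hz


def clock_cycles(instruction_count, cpi):
    """Total cycles, given an average number of cycles per instruction."""
    return instruction_count * cpi


def cpu_time_seconds(cycles, clock_rate_hz):
    """CPU time = (CPU clock cycles) / (clock rate)."""
    return cycles / clock_rate_hz


if __name__ == "__main__":
    rate = 1e9  # 1 GHz, as in the example above
    print(clock_period_seconds(rate))  # about 1e-09 s, i.e. 1 ns
    # Hypothetical workload: one million instructions at an average CPI of 2.
    cycles = clock_cycles(1_000_000, 2.0)
    print(cpu_time_seconds(cycles, rate))  # about 0.002 s
```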

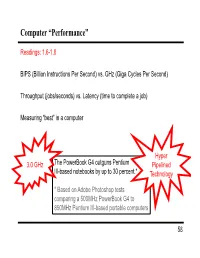

Computer “Performance”

Computer “Performance”. Readings: 1.6-1.8

BIPS (billion instructions per second) vs. GHz (giga cycles per second). Throughput (jobs/second) vs. latency (time to complete a job).

Measuring “best” in a computer: “Hyper Pipelined Technology, 3.0 GHz” versus “The PowerBook G4 outguns Pentium III-based notebooks by up to 30 percent.*” (* Based on Adobe Photoshop tests comparing a 500MHz PowerBook G4 to 850MHz Pentium III-based portable computers.)

Performance Example: Homebuilders

| Builder | Time per House | Houses Per Month | House Options | Dollars Per House |
|---|---|---|---|---|
| Self-build | 24 months | 1/24 | Infinite | $200,000 |
| Contractor | 3 months | 1 | 100 | $400,000 |
| Prefab | 6 months | 1,000 | 1 | $250,000 |

Which is the “best” home builder? Homeowner on a budget? Rebuilding Haiti? Moving to the wilds of Alaska? Which is the “speediest” builder? Latency: how fast is one house built? Throughput: how long will it take to build a large number of houses?

Computer Performance

Primary goal: execution time (time from program start to program completion).

$$\text{Performance} = \frac{1}{\text{ExecutionTime}}$$

To compare machines, we say “X is n times faster than Y”:

$$n = \frac{\text{Performance}_x}{\text{Performance}_y} = \frac{\text{ExecutionTime}_y}{\text{ExecutionTime}_x}$$

Example: machines Orange and Grape run a program. Orange takes 5 seconds, Grape takes 10 seconds. Orange is _____ times faster than Grape.

Execution Time

Elapsed time counts everything (disk and memory accesses, I/O, etc.). It is a useful number, but often not good for comparison purposes. CPU time doesn't count I/O or time spent running other programs; it can be broken up into system time and user time.

Example: Unix “time” command

linux15.ee.washington.edu> time javac CircuitViewer.java
3.370u 0.570s 0:12.44 31.6%

Our focus: user CPU time, the time spent executing the lines of code that are "in" our program.

CPU Time

$$\text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \text{Clock period}$$

$$\text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \frac{1}{\text{Clock rate}}$$

Application example: A program takes 10 seconds on computer Orange, with a 400MHz clock.
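
The "n times faster" ratio and the CPU time relation can be tried out with the numbers on the slides. This is a sketch only: the function names are hypothetical, and the cycle count is simply what the stated formula gives for 10 seconds at 400 MHz, not a continuation of the truncated example.

```python
def times_faster(execution_time_x, execution_time_y):
    """Return n such that machine X is n times faster than machine Y.

    Performance is 1 / execution time, so the ratio of performances is
    the inverse ratio of the execution times.
    """
    return execution_time_y / execution_time_x


def cycles_used(cpu_time_seconds, clock_rate_hz):
    """Clock cycles = CPU execution time * clock rate."""
    return cpu_time_seconds * clock_rate_hz


if __name__ == "__main__":
    # Orange takes 5 seconds, Grape takes 10 seconds.
    print(times_faster(5.0, 10.0))   # 2.0
    # 10 seconds on a 400 MHz clock.
    print(cycles_used(10.0, 400e6))  # 4000000000.0
```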

Trends in Processor Architecture

Trends in Processor Architecture. Antonio González, Universitat Politècnica de Catalunya, Barcelona, Spain

1. Past Trends

Processors have undergone a tremendous evolution throughout their history. A key milestone in this evolution was the introduction of the microprocessor, a term that refers to a processor implemented in a single chip. The first microprocessor was introduced by Intel under the name of Intel 4004 in 1971. It contained about 2,300 transistors, was clocked at 740 kHz and delivered 92,000 instructions per second while dissipating around 0.5 watts. Since then, practically every year we have witnessed the launch of a new microprocessor, delivering significant performance improvements over previous ones. Some studies have estimated this growth to be exponential, on the order of about 50% per year, which results in a cumulative growth of over three orders of magnitude in a time span of two decades [12].

These improvements have been fueled by advances in the manufacturing process and innovations in processor architecture. According to several studies [4][6], both aspects contributed a similar amount to the global gains. The manufacturing process technology has tried to follow the scaling recipe laid down by Robert N. Dennard in the early 1970s [7]. The basics of this technology scaling consist of reducing transistor dimensions by 30% every generation (typically 2 years) while keeping electric fields constant. The 30% scaling in the dimensions results in doubling the transistor density (doubling transistor density every two years was predicted in 1975 by Gordon Moore and is normally referred to as Moore’s Law [21][22]).
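
Both numerical claims in this passage follow from simple arithmetic, as the sketch below shows. The function names are hypothetical; the inputs (50% per year, 20 years, 30% shrink) are taken from the text.

```python
def cumulative_growth(annual_rate, years):
    """Total growth factor after compounding `annual_rate` for `years`."""
    return (1.0 + annual_rate) ** years


def density_gain(linear_shrink):
    """Transistor density gain when each linear dimension shrinks.

    A 30% shrink leaves each dimension at 0.7 of its size, so the area
    per transistor falls to 0.7 * 0.7 = 0.49 of the original.
    """
    scale = 1.0 - linear_shrink
    return 1.0 / (scale * scale)


if __name__ == "__main__":
    # 50% per year over two decades: a factor of roughly 3,300,
    # which is over three orders of magnitude.
    print(round(cumulative_growth(0.50, 20)))
    # 30% smaller dimensions: roughly double the density.
    print(round(density_gain(0.30), 2))
```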

What Is a Microprocessor?

Behavior Research Methods & Instrumentation 1978, Vol. 10 (2), 238-240. SESSION VII. MICROPROCESSORS IN PSYCHOLOGY: A SYMPOSIUM. MISRA PAVEL, New York University, Presider

What is a microprocessor? MISRA PAVEL, New York University, New York, New York 10003

A general introduction to microcomputer terminology and concepts is provided.

The purpose of this introduction is to provide an overview of this session and to introduce the terminology and some of the concepts that will be discussed in greater detail by the rest of the session papers.

A block diagram of a typical small computer system is shown in Figure 1. Four distinct blocks can be distinguished: (1) the central processing unit (CPU); (2) external memory; (3) peripherals: mass storage, standard input/output, and man-machine interface; (4) special purpose (experimental) interface.

Figure 1. Block diagram of a small computer system.

The different functional units in the system shown here are connected, more or less in parallel, by a number of lines commonly referred to as a bus. The bus mediates transfer of information among the units by carrying address information, data, and control signals. For example, when the CPU transfers data to memory, it activates appropriate control lines, asserts the desired destination memory address, and then outputs data on the bus.

Traditionally, the entire system was built from a multitude of relatively simple components mounted on interconnected printed circuit cards. With the advances of integrated circuit technology, large amounts of circuitry were squeezed into a single chip.

Chapter 1: Microprocessor Architecture

Chapter 1: Microprocessor architecture. ECE 3120, Fall 2013. Dr. Mohamed Mahmoud. http://iweb.tntech.edu/mmahmoud/ [email protected]

Outline
1.1 Computer hardware organization
1.1.1 Number System
1.1.2 Computer hardware organization
1.2 The processor
1.3 Memory system operation
1.4 Program Execution
1.5 HCS12 Microcontroller

1.1.1 Number System
- Computer hardware uses binary numbers to perform all operations.
- Human beings are used to the decimal number system. Conversion is often needed between the internal (binary) and external (decimal) representations.
- Octal and hexadecimal numbers have shorter representations than binary numbers.
- The binary number system has two digits, 0 and 1.
- The octal number system uses eight digits, 0 to 7.
- The hexadecimal number system uses 16 digits: 0, 1, .., 9, A, B, C, .., F.
- A prefix is used to indicate the base of a number.
- Converting %01000101 to hexadecimal gives $45, because 0100 = 4 and 0101 = 5.
- A computer needs to deal with signed and unsigned numbers.
- The two’s complement method is used to represent negative numbers.
- A number with its most significant bit set to 1 is negative; otherwise it is positive.

1- Unsigned number: %1111 = 1 + 2 + 4 + 8 = 15, and %0111 = 1 + 2 + 4 = 7. An unsigned N-bit number can range from 0 to $2^N - 1$.

2- Signed number: %1111 is a negative number. To convert it to decimal, calculate the two’s complement. The two’s complement = one’s complement + 1 = %0000 + 1 = %0001 = 1, so %1111 = -1. %0111 is a positive number = 1 + 2 + 4 = 7.
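
The conversions worked through above can be reproduced with a small sketch. The helper names are hypothetical, and the bit strings are written without the % prefix that the chapter uses to mark binary numbers.

```python
def unsigned_value(bits):
    """Interpret a bit string as an unsigned binary number."""
    return int(bits, 2)


def signed_value(bits):
    """Interpret a bit string as a two's complement signed number."""
    value = int(bits, 2)
    if bits[0] == "1":            # most significant bit set: negative
        value -= 1 << len(bits)   # subtract 2**N for an N-bit number
    return value


def to_hex(bits):
    """Convert a bit string to hexadecimal digits (4 bits per digit)."""
    return format(int(bits, 2), "X")


if __name__ == "__main__":
    print(unsigned_value("1111"))  # 15
    print(signed_value("1111"))    # -1
    print(signed_value("0111"))    # 7
    print(to_hex("01000101"))      # 45
```

Subtracting 2**N when the top bit is set gives the same result as the chapter's method of inverting the bits, adding 1 and negating.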