For Problems Sufficiently Hard ... AI Needs Cogsci

Recommended publications

-

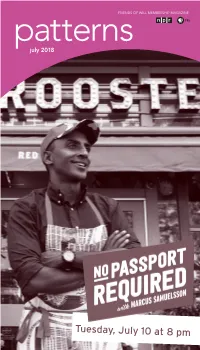

PDF Version of July 2018 Patterns

FRIENDS OF WILL MEMBERSHIP MAGAZINE patterns july 2018 Tuesday, July 10 at 8 pm WILL-TV TM patterns Membership Hotline: 800-898-1065 july 2018 Volume XLVI, Number 1 WILL AM-FM-TV: 217-333-7300 Campbell Hall 300 N. Goodwin Ave., Urbana, IL 61801-2316 Mailing List Exchange Donor records are proprietary and confidential. WILL does not sell, rent or trade its donor lists. Patterns Friends of WILL Membership Magazine Editor/Art Designer: Sarah Whittington Printed by Premier Print Group. Printed with SOY INK on RECYCLED, TM Trademark American Soybean Assoc. RECYCLABLE paper. Radio 90.9 FM: A mix of classical music and NPR information programs, including local news. (Also heard at 106.5 in Danville and with live streaming on will.illinois.edu.) See pages 4-5. 101.1 FM and 90.9 FM HD2: Locally produced music programs and classical music from C24. (101.1 The month of July means we’ve moved into a is available in the Champaign-Urbana area.) See page 6. new fiscal year here at Illinois Public Media. 580 AM: News and information, NPR, BBC, news, agriculture, talk shows. (Also heard on 90.9 FM HD3 First and foremost, I want to give a big thank with live streaming on will.illinois.edu.) See page 7. you to everyone who renewed or increased your gift to Illinois Public Media over the last Television 12 months. You continue to show your love and WILL-HD All your favorite PBS and local programming, in high support for what we do time and again. I am definition when available. -

Basic Administration

System Administration Guide: Basic Administration Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 817–6958–10 September 2004 Copyright 2004 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, docs.sun.com, AnswerBook, AnswerBook2, JumpStart, Sun Ray, Sun Blade, PatchPro, SunOS, Solstice, Solstice AdminSuite, Solstice DiskSuite, Solaris Solve, Java, JavaStation, OpenWindows, NFS, iPlanet, Netra and Solaris are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. DLT is claimed as a trademark of Quantum Corporation in the United States and other countries. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. -

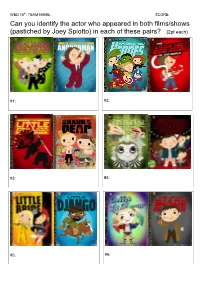

Can You Identify the Actor Who Appeared in Both Films/Shows (Pastiched by Joey Spiotto) in Each of These Pairs? (2Pt Each)

WED 15th: TEAM NAME: SCORE: Can you identify the actor who appeared in both films/shows (pastiched by Joey Spiotto) in each of these pairs? (2pt each) #1: #2: #3: #4: #5: #6: WED 15th: TEAM NAME: SCORE: #7: #8: #9: #10: #11: #12: #13: #14: WED 8th TEAM NAME: SCORE: Can you identify the movies whose posters inspired these 2017-8 Fringe photo shoots? (2pt each) #1. (5.6.4) #2. (7:7.2.3.6) #3. (3.8.6) #4. (3.4,3.3.3.3.4) #5. (10) #6. (4) #7 (4,5,4) #8. (7,2,3,5) #9. (7,4) #10: Whose face has Amy Annette replaced with her own for Q7? ACMS QUIZ – Aug-15 2018 Round # /20 pts Round # /20 pts 1. 1. 2. 2. 3. 3. 4. 4. 5. 5. 6. 6. 7. 7. 8. 8. 9. 9. 10. 10. Round # /20 pts Round # /20 pts 1. 1. 2. 2. 3. 3. 4. 4. 5. 5. 6. 6. 7. 7. 8. 8. 9. 9. 10. 10. ACMS QUIZ – Aug-15 2018 QUESTIONS: WED 15th AUGUST 2018 1) GENERAL KNOWLEDGE (A clue: YOU GOT AN OLOGY.) 1. M.Khan is what? 2. Who broke their hand in March, forcing a sold-out UK tour to be postponed? 3. Jonny Donahoe (new dad!) and Paddy Gervers are in what band together? 4. What is the name of the general manager of the Slough branch of Wernham-Hogg paper merchants? 5. Who plays Captain Martin Crieff in Cabin Pressure? 6. By what name are Ciaran Dowd, James McNicholas and Owen Roberts better known? 7. -

Creativity Support for Computational Literature by Daniel C. Howe A

Creativity Support for Computational Literature By Daniel C. Howe A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy Department of Computer Science New York University September 2009 __________________ Dr. Ken Perlin, Advisor “If I have said anything to the contrary I was mistaken. If I say anything to the contrary again I shall be mistaken again. Unless I am mistaken now. Into the dossier with it in any case, in support of whatever thesis you fancy.” - Samuel Beckett ACKNOWLEDGEMENTS I would like to thank my advisor, Ken Perlin, for his continual support and inspiration over the course of this research. I am also indebted to my committee members, Helen Nissenbaum, Katherine Isbister, Matthew Stone, and Alan Siegal for their ongoing support and generous contributions of time and input at every stage of the project. Additionally I wish to thank John Cayley, Bill Seaman, Braxton Soderman and Linnea Ogden, each of whom provided important contributions to the ideas presented here. While there are too many to mention individually here, I would like to thank my colleagues, collaborators, and friends who have been generous and tolerant enough to put up with me over the past five years. Finally, I would like to thank my parents and brother who have given me their unconditional support, however misguided or unintelligible my direction, since the beginning. ABSTRACT The creativity support community has a long history of providing valuable tools to artists and designers. Similarly, creative digital media practice has proven a valuable pedagogical strategy for teaching core computational ideas. -

2 October 2015

October 2 - October 18 Volume 32 - Issue 20 COBHAM HALL, WISEMAN FERRY Why not take a trip to Cobham Hall at Wisemans Ferry. This is the original home of Solomon Wiseman. Cobham Hall is within the Wisemans Inn Hotel. The current owner has restored much of the upstairs rooms and they are all open to the public with great information boards as to the history. “Can you keep my dog from Gentle Dental Care For Your Whole Family. getting out?” Two for the price of One MARK VINT Check-up and Cleans 9651 2182 Ph 9680 2400 When you mention this ad. Come & meet 270 New Line Road 432 Old Northern Rd vid Dural NSW 2158 Call for a Booking Now! Dr Da Glenhaven Ager [email protected] Opposite Flower Power It’s time for your Spring Clean ! ABN: 84 451 806 754 WWW.DURALAUTO.COM BRISTOL PAINT AND Hills Family Free Sandstone battery DECORATOR CENTRE Run Business backup when you mention Sales “We Certainly Can!” this ad Unit 7 No 6 Victoria Ave Castle Hill, NSW 2158 Buy Direct From the Quarry P 02 9680 2738 F 02 9894 1290 9652 1783 M 0413 109 051 Handsplit 1800 22 33 64 E [email protected] Joe 0416 104 660 Random Flagging $55m2 www.hiddenfence.com.au A BETTER FINISH BEGINS AT BRISTOL 113 Smallwood Rd Glenorie FEATURE ARTICLES “Safelink FM/DM digital Through conditioning offering a free Rechargeable signal” that’s transmitted and clear instructions, your Battery Backup for all through a tough wire installed dog becomes well aware installations. -

Filozofické Aspekty Technologií V Komediálním Sci-Fi Seriálu Červený Trpaslík

Masarykova univerzita Filozofická fakulta Ústav hudební vědy Teorie interaktivních médií Dominik Zaplatílek Bakalářská diplomová práce Filozofické aspekty technologií v komediálním sci-fi seriálu Červený trpaslík Vedoucí práce: PhDr. Martin Flašar, Ph.D. 2020 Prohlašuji, že jsem tuto práci vypracoval samostatně a použil jsem literárních a dalších pramenů a informací, které cituji a uvádím v seznamu použité literatury a zdrojů informací. V Brně dne ....................................... Dominik Zaplatílek Poděkování Tímto bych chtěl poděkovat panu PhDr. Martinu Flašarovi, Ph.D za odborné vedení této bakalářské práce a podnětné a cenné připomínky, které pomohly usměrnit tuto práci. Obsah Úvod ................................................................................................................................................. 5 1. Seriál Červený trpaslík ................................................................................................................... 6 2. Vyobrazené technologie ............................................................................................................... 7 2.1. Android Kryton ....................................................................................................................... 14 2.1.1. Teologická námitka ........................................................................................................ 15 2.1.2. Argument z vědomí ....................................................................................................... 18 2.1.3. Argument z -

The Metaphysical Possibility of Time Travel Fictions Effingham, Nikk

University of Birmingham The metaphysical possibility of time travel fictions Effingham, Nikk DOI: 10.1007/s10670-021-00403-y License: Creative Commons: Attribution (CC BY) Document Version Publisher's PDF, also known as Version of record Citation for published version (Harvard): Effingham, N 2021, 'The metaphysical possibility of time travel fictions', Erkenntnis. https://doi.org/10.1007/s10670-021-00403-y Link to publication on Research at Birmingham portal General rights Unless a licence is specified above, all rights (including copyright and moral rights) in this document are retained by the authors and/or the copyright holders. The express permission of the copyright holder must be obtained for any use of this material other than for purposes permitted by law. •Users may freely distribute the URL that is used to identify this publication. •Users may download and/or print one copy of the publication from the University of Birmingham research portal for the purpose of private study or non-commercial research. •User may use extracts from the document in line with the concept of ‘fair dealing’ under the Copyright, Designs and Patents Act 1988 (?) •Users may not further distribute the material nor use it for the purposes of commercial gain. Where a licence is displayed above, please note the terms and conditions of the licence govern your use of this document. When citing, please reference the published version. Take down policy While the University of Birmingham exercises care and attention in making items available there are rare occasions when an item has been uploaded in error or has been deemed to be commercially or otherwise sensitive. -

Falling in love with “Red Dwarf” - by Lee Russell

Falling in love with “Red Dwarf” - by Lee Russell It was around 2003 or 2004 when I fell in love with the British sci-fi comedy show “Red Dwarf”. I had been somewhat aware of the series when it launched in 1988 but the external model shots looked so unrealistic that I didn’t bother to try it… what a mistake! My epiphany came late one night after a long session of distance-studying for a degree with the Open University. Feeling very tired and just looking for something to relax with before going to bed, I was suddenly confronted with one of the funniest comedy scenes I had ever seen. That scene was in the Season VIII episode ‘Back in the Red, part 2’. I tuned in just at the moment that Rimmer was using a hammer to test the anaesthetic that he’d applied to his nether regions. I didn’t know the character or the back story that had brought him to that moment, but Chris Barrie’s wonderful acting sucked me in – I was laughing out loud and had suddenly become ‘a Dwarfer’. With one exception, I have loved every series of Red Dwarf, and in this blog I’ll be reflecting on what has made me come to love it so much over the 12 series that have been broadcast to date. For anyone who hasn’t seen Red Dwarf (and if you haven’t, get out there and find a copy now – seriously), the story begins with three of the series’ main characters who have either survived, or are descended from, a radiation accident that occurred three million years ago and killed all of the rest of the crew of the Jupiter Mining Corp ship ‘Red Dwarf’. -

The Man in the Rubber Mask: the Inside Smegging Story of Red Dwarf Pdf, Epub, Ebook

THE MAN IN THE RUBBER MASK: THE INSIDE SMEGGING STORY OF RED DWARF PDF, EPUB, EBOOK Robert Llewellyn | 352 pages | 14 May 2013 | Cornerstone | 9781908717788 | English | London, United Kingdom The Man In The Rubber Mask: The Inside Smegging Story of Red Dwarf PDF Book Sir David Attenborough. At 4 out of 5 stars I would still recommend The Man in the Rubber Mask for any diehard Dwarfer keen to further develop their knowledge of the show. Whether this is because the comedy level has increased from the previous book, or because I have become more comfortable or more at ease with the personalities of these characters, who can really say. Home 1 Books 2. While waiting in line for the ice-cream truck at around 3pm, the people ahead of him suddenly go berserk, violently attacking each other. Robert recalls the long arduous hours spent in makeup before filming could even begin and the various trials and tribulations of wearing a latex foam face mask and all over bodysuit, especially on things like his inability to eat lunch while on set. My review of book one, Dial G for Gravity can be found here. An irrelevant boop I must confess, however the lack of attention to detail rather grates on my nerves. All in all Dial G for Gravity is a pretty good space comedy, with well thought out characters and some interesting alien races in the Gloabons, Andelians and the Kreitians. Four Past Midnight Collection. Luca Rossi writes in a simple, easy to read prose that flows nicely as the characters develop and the story gradually unfolds. -

'Into the Gloop'

A Situation Comedy in Space 1 SERIES 6 /2 : Episode 0 ‘Into the Gloop’ BY ROB GRANT Director: Ed Bye Producer: Paul Jackson (RUNNING TIME: Zero Hours) ©Rob Grant 2021 The sending of this script does not constitute an offer of a part therein CAST LISTER Raphael Clarkson RIMMER Harmony Hewlett KRYTEN Ellie Griffiths CAT Nikola Skalova YOUNG RIMMER Loïc Baucherel Into the Gloop – 1 – Red Dwarf RED DWARF 1. OPENING TITLES 2. MODEL SHOT. STARBUG IN SPACE Zoom in on Starbug, battered and blasted, barely maintaining hull integrity. CAPTION The action takes place at the end of episode 6, season 6, Out of Time. Senselessly attacked by their future selves, the crew are dead at their posts … 3. INT. STARBUG COCKPIT Smoking, fizzing. The odd console fire. LISTER, KRYTEN and CAT are face down and lifeless at their stations. RIMMER – in his hard light form – is standing by the hatch, horrified by the devastation … A console beside him explodes, snapping him into action. He grabs a bazookoid and heads off into … 4. INT. STARBUG BOWELS Lots of smoke and little fires. Red flashing emergency lights and awooga siren. RIMMER staggers along, rocked by exploding panels and falling debris. He spots what he’s looking for – the Anywhen time machine. If he can destroy that, none of this nightmare will have happened. He raises the bazookoid. There is a metallic creaking above. RIMMER looks up. A huge girder crashes down on him, literally squashing him, concertina-style, like he’s Wile E. Coyote. He staggers clear, like a flattened cartoon, and lurches on, stretching back to his normal shape with each step. -

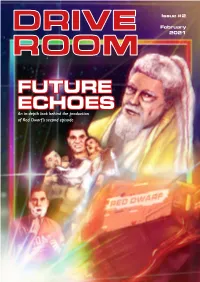

Drive Room Issue 2

Issue #2 February 2021 FUTURE ECHOES An in-depth look behind the production of Red Dwarf’s second episode Contents LEVEL 159 2 Editorial NIVELO 3 Synopsis Well, there was quite a lot to look at for the first ever episode 5 Crew & Other Info of a show, who knew? So this issue will be a little more brief 6 Guest Stars I suspect, a bit leaner, a bit more ‘Green Beret’... but hopefully still a good informative read. 7 Behind The Scenes 11 Adaptations/Other Media My first experience of ‘Future Echoes’ was via the comic-book 14 Character Spotlight version printed in the Red Dwarf Smegazines, and the version 23 Robot Claws used in the Red Dwarf novel. The TV version has therefore 29 always held a bit of a weird place in my love of the show - as Actor Spotlight much as I can recite it line for line, I’m forever comparing it to 31 Next Issue the other versions! Still, it’s easily one of the best episodes Click/tap on an item to jump to that article. from the first series, if not THE best, and its importance in Click/tap the red square at the end of each article to return here. guiding the style of the show cannot be overstated. And that’s simple enough that Lister can understand it. “So what is it?” “Thankski Verski Influenced by friends and professionals Muchski Budski!” within the Transformers fandom, I’ve opted to take a leaf out of their book and try and Many thanks to Jordan Hall and James Telfor for create a Red Dwarf fanzine in the vein of providing their own photographs, memories and other a partwork - where each issue will take a information about the studio filming of Future Echoes deep in-depth dive into a specific episode for this issue. -

Rochester Wins Slugfest County Humane Officer's Hours Increased

Serving our communities since 1889 — www.chronline.com $1 Mid-Week Edition Rochester Wins Slugfest Thursday, Warriors Top Tenino in 17-11 Offensive Affair / Sports 1 April 28, 2016 Billie’s Designer Fabrics State Medal of Honor Downtown Chehalis Business Serves Up Chehalis Officer Rick Silva to Be Honored at Sewing Supplies and Expertise / Main 3 Washington, National Memorials / Main 4 County Humane Officer’s Hours Increased Due to High Demand “Hope for Okanogan” is emblazoned on the back of a backhoe in this photograph provided by Restoration Hope volunteers from Bethel Church. Bethel Church Group Offers Helping Hands to Victims of State Wildfires RESTORATION HOPE: Church Behind Massive Effort to Help Afflicted Clean Up and Start Again By Jordan Nailon [email protected] Fire and brimstone are staples of impassioned preaching from the pulpit, but for the flock at Bethel Church south of Chehalis, those iconic images have taken on a very literal context. Over the past two years, the Pete Caster / [email protected] church has coordinated three trips Alishia Hornburg, the humane officer for Lewis County, looks at a healthy horse at a farm in Winlock on Wednesday morning. Hornburg visited the farm, which to Central Washington in order to was not in an open investigation, in order to show the characteristics of a well-fed, older horse. provide wildfire relief to citizens who were afflicted by the torrents GROWING CASELOAD: Alishia of wildfire that blazed across that parched landscape. Each relief Hornburg Up to a Full-Time group has consisted of about 60 people, with the most recent group undertaking their work during April’s Schedule With County A special spring break from school. blend of By Natalie Johnson