Uniblock: Scoring and Filtering Corpus with Unicode Block Information

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 a Study for the Library of Congress

1 Assessment of Options for Handling Full Unicode Character Encodings in MARC21 A Study for the Library of Congress Part 1: New Scripts Jack Cain Senior Consultant Trylus Computing, Toronto 1 Purpose This assessment intends to study the issues and make recommendations on the possible expansion of the character set repertoire for bibliographic records in MARC21 format. 1.1 “Encoding Scheme” vs. “Repertoire” An encoding scheme contains codes by which characters are represented in computer memory. These codes are organized according to a certain methodology called an encoding scheme. The list of all characters so encoded is referred to as the “repertoire” of characters in the given encoding schemes. For example, ASCII is one encoding scheme, perhaps the one best known to the average non-technical person in North America. “A”, “B”, & “C” are three characters in the repertoire of this encoding scheme. These three characters are assigned encodings 41, 42 & 43 in ASCII (expressed here in hexadecimal). 1.2 MARC8 "MARC8" is the term commonly used to refer both to the encoding scheme and its repertoire as used in MARC records up to 1998. The ‘8’ refers to the fact that, unlike Unicode which is a multi-byte per character code set, the MARC8 encoding scheme is principally made up of multiple one byte tables in which each character is encoded using a single 8 bit byte. (It also includes the EACC set which actually uses fixed length 3 bytes per character.) (For details on MARC8 and its specifications see: http://www.loc.gov/marc/.) MARC8 was introduced around 1968 and was initially limited to essentially Latin script only. -

UTR #25: Unicode and Mathematics

UTR #25: Unicode and Mathematics http://www.unicode.org/reports/tr25/tr25-5.html Technical Reports Draft Unicode Technical Report #25 UNICODE SUPPORT FOR MATHEMATICS Version 1.0 Authors Barbara Beeton ([email protected]), Asmus Freytag ([email protected]), Murray Sargent III ([email protected]) Date 2002-05-08 This Version http://www.unicode.org/unicode/reports/tr25/tr25-5.html Previous Version http://www.unicode.org/unicode/reports/tr25/tr25-4.html Latest Version http://www.unicode.org/unicode/reports/tr25 Tracking Number 5 Summary Starting with version 3.2, Unicode includes virtually all of the standard characters used in mathematics. This set supports a variety of math applications on computers, including document presentation languages like TeX, math markup languages like MathML, computer algebra languages like OpenMath, internal representations of mathematics in systems like Mathematica and MathCAD, computer programs, and plain text. This technical report describes the Unicode mathematics character groups and gives some of their default math properties. Status This document has been approved by the Unicode Technical Committee for public review as a Draft Unicode Technical Report. Publication does not imply endorsement by the Unicode Consortium. This is a draft document which may be updated, replaced, or superseded by other documents at any time. This is not a stable document; it is inappropriate to cite this document as other than a work in progress. Please send comments to the authors. A list of current Unicode Technical Reports is found on http://www.unicode.org/unicode/reports/. For more information about versions of the Unicode Standard, see http://www.unicode.org/unicode/standard/versions/. -

Unicode Characters in Proofpower Through Lualatex

Unicode Characters in ProofPower through Lualatex Roger Bishop Jones Abstract This document serves to establish what characters render like in utf8 ProofPower documents prepared using lualatex. Created 2019 http://www.rbjones.com/rbjpub/pp/doc/t055.pdf © Roger Bishop Jones; Licenced under Gnu LGPL Contents 1 Prelude 2 2 Changes 2 2.1 Recent Changes .......................................... 2 2.2 Changes Under Consideration ................................... 2 2.3 Issues ............................................... 2 3 Introduction 3 4 Mathematical operators and symbols in Unicode 3 5 Dedicated blocks 3 5.1 Mathematical Operators block .................................. 3 5.2 Supplemental Mathematical Operators block ........................... 4 5.3 Mathematical Alphanumeric Symbols block ........................... 4 5.4 Letterlike Symbols block ..................................... 6 5.5 Miscellaneous Mathematical Symbols-A block .......................... 7 5.6 Miscellaneous Mathematical Symbols-B block .......................... 7 5.7 Miscellaneous Technical block .................................. 7 5.8 Geometric Shapes block ...................................... 8 5.9 Miscellaneous Symbols and Arrows block ............................. 9 5.10 Arrows block ........................................... 9 5.11 Supplemental Arrows-A block .................................. 10 5.12 Supplemental Arrows-B block ................................... 10 5.13 Combining Diacritical Marks for Symbols block ......................... 11 5.14 -

Mathematical Symbols

List of mathematical symbols This is a list of symbols used in all branches ofmathematics to express a formula or to represent aconstant . A mathematical concept is independent of the symbol chosen to represent it. For many of the symbols below, the symbol is usually synonymous with the corresponding concept (ultimately an arbitrary choice made as a result of the cumulative history of mathematics), but in some situations, a different convention may be used. For example, depending on context, the triple bar "≡" may represent congruence or a definition. However, in mathematical logic, numerical equality is sometimes represented by "≡" instead of "=", with the latter representing equality of well-formed formulas. In short, convention dictates the meaning. Each symbol is shown both inHTML , whose display depends on the browser's access to an appropriate font installed on the particular device, and typeset as an image usingTeX . Contents Guide Basic symbols Symbols based on equality Symbols that point left or right Brackets Other non-letter symbols Letter-based symbols Letter modifiers Symbols based on Latin letters Symbols based on Hebrew or Greek letters Variations See also References External links Guide This list is organized by symbol type and is intended to facilitate finding an unfamiliar symbol by its visual appearance. For a related list organized by mathematical topic, see List of mathematical symbols by subject. That list also includes LaTeX and HTML markup, and Unicode code points for each symbol (note that this article doesn't -

Unicode Character 'AUTOMOBILE' (U+1F697)

(/index.htm) Search You are in (/index.dir) FileFormat.Info (/index.htm) » (/info/index.dir) Info (/info/index.htm) » (/info/unicode/index.dir) Unicode (/info/unicode/index.htm) » (/info/unicode/char/index.dir) Characters (/info/unicode/char/index.htm) » (/info/unicode/char/1F697/index.dir) U+1F697 (/info/unicode/char/1F697/index.htm) Best Online CRM 2 Exercises To Never Do Password protect folders Free for 3 Users. Track your Sales and Never do these waist widening exercises Password protect & hide your files in just 3 Marketing Online. if you want to look ripped clicks! It's dead simple Zoho.com/CRM http://www.adonisgoldenratio.com www.safeplicity.com Unicode Character 'AUTOMOBILE' (U+1F697) (../1f696/index.htm) (../1f698/index.htm) Browser Test Page (browsertest.htm) Outline (as SVG file) (/info/unicode/char/1f697/automobile.svg) Fonts that support U+1F697 (fontsupport.htm) (browsertest.htm) Unicode Data Name AUTOMOBILE Block Transport and Map Symbols (/info/unicode/block/transport_and_map_symbols/index.htm) Category Symbol, Other [So] (/info/unicode/category/So/index.htm) Combine 0 BIDI Other Neutrals [ON] Mirror N Version Unicode 6.0.0 (October 2010) (/info/unicode/version/6.0/index.htm) Encodings Emoji (/info/emoji/index.htm) (/info/emoji/red_car/index.htm) :red_car: (/info/emoji/red_car/index.htm) HTML Entity (decimal) 🚗 HTML Entity (hex) 🚗 How to type in Microsoft Windows (/tip/microsoft/enter_unicode.htm) Alt +1F697 UTF-8 (../../utf8.htm) (hex) 0xF0 0x9F 0x9A 0x97 (f09f9a97) UTF-8 (binary) 11110000:10011111:10011010:10010111 UTF-16 (hex) 0xD83D 0xDE97 (d83dde97) UTF-16 (decimal) 55,357 56,983 UTF-32 (hex) 0x0001F697 (1F697) UTF-32 (decimal) 128,663 C/C++/Java source code "\uD83D\uDE97" Python source code u"\U0001F697" More.. -

Oriya Range: 0B00–0B7F

Oriya Range: 0B00–0B7F This file contains an excerpt from the character code tables and list of character names for The Unicode Standard, Version 14.0 This file may be changed at any time without notice to reflect errata or other updates to the Unicode Standard. See https://www.unicode.org/errata/ for an up-to-date list of errata. See https://www.unicode.org/charts/ for access to a complete list of the latest character code charts. See https://www.unicode.org/charts/PDF/Unicode-14.0/ for charts showing only the characters added in Unicode 14.0. See https://www.unicode.org/Public/14.0.0/charts/ for a complete archived file of character code charts for Unicode 14.0. Disclaimer These charts are provided as the online reference to the character contents of the Unicode Standard, Version 14.0 but do not provide all the information needed to fully support individual scripts using the Unicode Standard. For a complete understanding of the use of the characters contained in this file, please consult the appropriate sections of The Unicode Standard, Version 14.0, online at https://www.unicode.org/versions/Unicode14.0.0/, as well as Unicode Standard Annexes #9, #11, #14, #15, #24, #29, #31, #34, #38, #41, #42, #44, #45, and #50, the other Unicode Technical Reports and Standards, and the Unicode Character Database, which are available online. See https://www.unicode.org/ucd/ and https://www.unicode.org/reports/ A thorough understanding of the information contained in these additional sources is required for a successful implementation. -

Unicodemath a Nearly Plain-Text Encoding of Mathematics Version 3.1 Murray Sargent III Microsoft Corporation 16-Nov-16

Unicode Nearly Plain Text Encoding of Mathematics UnicodeMath A Nearly Plain-Text Encoding of Mathematics Version 3.1 Murray Sargent III Microsoft Corporation 16-Nov-16 1. Introduction ............................................................................................................ 2 2. Encoding Simple Math Expressions ...................................................................... 3 2.1 Fractions .......................................................................................................... 4 2.2 Subscripts and Superscripts........................................................................... 6 2.3 Use of the Blank (Space) Character ............................................................... 8 3. Encoding Other Math Expressions ........................................................................ 8 3.1 Delimiters ........................................................................................................ 8 3.2 Literal Operators ........................................................................................... 11 3.3 Prescripts and Above/Below Scripts ........................................................... 11 3.4 n-ary Operators ............................................................................................. 12 3.5 Mathematical Functions ............................................................................... 13 3.6 Square Roots and Radicals ........................................................................... 14 3.7 Enclosures .................................................................................................... -

Fonts in Mpdf Version 5.X Mpdf Version 5 Supports Truetype Fonts, Reading and Embedding Directly from the .Ttf Font Files

mPDF Fonts in mPDF Version 5.x mPDF version 5 supports Truetype fonts, reading and embedding directly from the .ttf font files. Fonts must follow the Truetype specification and use Unicode mapping to the characters. Truetype collections (.ttc files) and Opentype files (.otf) in Truetype format are also supported. EASY TO ADD NEW FONTS 1. Upload the Truetype font file to the fonts directory (/ttfonts) 2. Define the font file details in the configuration file (config_fonts.php) 3. Access the font by specifying it in your HTML code as the CSS font-family These are some examples of Windows fonts: Arial - The quick, sly fox jumped over the lazy brown dog. Comic Sans MS - The quick, sly fox jumped over the lazy brown dog. Trebuchet - The quick, sly fox jumped over the lazy brown dog. Calibri - The quick, sly fox jumped over the lazy brown dog. QuillScript - The quick, sly fox jumped over the lazy brown dog. Lucidaconsole - The quick, sly fox jumped over the lazy brown dog. Tahoma - The quick, sly fox jumped over the lazy brown dog. AlbaSuper - The quick, sly fox jumped over the lazy brown dog. FULL UNICODE SUPPORT The DejaVu fonts distributed with mPDF contain an extensive set of characters, but it is easy to add fonts to access uncommon characters. Georgian (DejaVuSansCondensed) Ⴀ Ⴁ Ⴂ Ⴃ Ⴄ Ⴅ Ⴆ Ⴇ Ⴈ Ⴉ Ⴊ Ⴋ Ⴌ Ⴍ Ⴎ Ⴏ Ⴐ Ⴑ Ⴒ Ⴓ Cherokee (Quivira) Ꭰ Ꭱ Ꭲ Ꭳ Ꭴ Ꭵ Ꭶ Ꭷ Ꭸ Ꭹ Ꭺ Ꭻ Ꭼ Ꭽ Ꭾ Ꭿ Ꮀ Ꮁ Ꮂ Runic (Junicode) ᚠ ᚡ ᚢ ᚣ ᚤ ᚥ ᚦ ᚧ ᚨ ᚩ ᚪ ᚫ ᚬ ᚭ ᚮ ᚯ ᚰ ᚱ ᚲ ᚳ ᚴ ᚵ ᚶ ᚷ ᚸ ᚹ ᚺ ᚻ ᚼ Greek Extended (Quivira) ἀ ἁ ἂ ἃ ἄ ἅ ἆ ἇ Ἀ Ἁ Ἂ Ἃ Ἄ Ἅ Ἆ Ἇ ἐ ἑ ἒ ἓ ἔ ἕ IPA Extensions (Quivira) -

Pdflib Tutorial 9.0.1

ABC PDFlib, PDFlib+PDI, PPS A library for generating PDF on the fly PDFlib 9.0.1 Tutorial For use with C, C++, Cobol, COM, Java, .NET, Objective-C, Perl, PHP, Python, REALbasic/Xojo, RPG, Ruby Copyright © 1997–2013 PDFlib GmbH and Thomas Merz. All rights reserved. PDFlib users are granted permission to reproduce printed or digital copies of this manual for internal use. PDFlib GmbH Franziska-Bilek-Weg 9, 80339 München, Germany www.pdflib.com phone +49 • 89 • 452 33 84-0 fax +49 • 89 • 452 33 84-99 If you have questions check the PDFlib mailing list and archive at tech.groups.yahoo.com/group/pdflib Licensing contact: [email protected] Support for commercial PDFlib licensees: [email protected] (please include your license number) This publication and the information herein is furnished as is, is subject to change without notice, and should not be construed as a commitment by PDFlib GmbH. PDFlib GmbH assumes no responsibility or lia- bility for any errors or inaccuracies, makes no warranty of any kind (express, implied or statutory) with re- spect to this publication, and expressly disclaims any and all warranties of merchantability, fitness for par- ticular purposes and noninfringement of third party rights. PDFlib and the PDFlib logo are registered trademarks of PDFlib GmbH. PDFlib licensees are granted the right to use the PDFlib name and logo in their product documentation. However, this is not required. Adobe, Acrobat, PostScript, and XMP are trademarks of Adobe Systems Inc. AIX, IBM, OS/390, WebSphere, iSeries, and zSeries are trademarks of International Business Machines Corporation. -

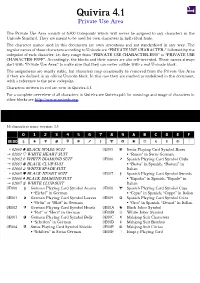

Quivira Private Use Area

Quivira 4.1 Private Use Area The Private Use Area consists of 6,400 Codepoints which will never be assigned to any characters in the Unicode Standard. They are meant to be used for own characters in individual fonts. The character names used in this documents are own inventions and not standardised in any way. The regular names of these characters according to Unicode are “PRIVATE USE CHARACTER-” followed by the codepoint of each character, i.e. they range from “PRIVATE USE CHARACTER-E000” to “PRIVATE USE CHARACTER-F8FF”. Accordingly, the blocks and their names are also self-invented. These names always start with “Private Use Area:” to make sure that they can never collide with a real Unicode block. The assignments are mostly stable, but characters may occasionally be removed from the Private Use Area if they are defined in an official Unicode block. In this case they are marked as undefined in this document, with a reference to the new codepoint. Characters written in red are new in Quivira 4.1. For a complete overview of all characters in Quivira see Quivira.pdf; for meanings and usage of characters in other blocks see http://www.unicode.org. Private Use Area: Playing Card Symbols 0E000 – 0E00F 16 characters since version 3.5 0 1 2 3 4 5 6 7 8 9 A B C D E F 0E00 → 02660 ♠ BLACK SPADE SUIT 0E005 Swiss Playing Card Symbol Roses → 02661 ♡ WHITE HEART SUIT • “Rosen” in Swiss German → 02662 ♢ WHITE DIAMOND SUIT 0E006 Spanish Playing Card Symbol Clubs → 02663 ♣ BLACK CLUB SUIT • “Bastos” in Spanish, “Bastoni” in → 02664 -

A Modular Architecture for Unicode Text Compression

A modular architecture for Unicode text compression Adam Gleave St John’s College A dissertation submitted to the University of Cambridge in partial fulfilment of the requirements for the degree of Master of Philosophy in Advanced Computer Science University of Cambridge Computer Laboratory William Gates Building 15 JJ Thomson Avenue Cambridge CB3 0FD United Kingdom Email: [email protected] 22nd July 2016 Declaration I Adam Gleave of St John’s College, being a candidate for the M.Phil in Advanced Computer Science, hereby declare that this report and the work described in it are my own work, unaided except as may be specified below, and that the report does not contain material that has already been used to any substantial extent for a comparable purpose. Total word count: 11503 Signed: Date: This dissertation is copyright c 2016 Adam Gleave. All trademarks used in this dissertation are hereby acknowledged. Acknowledgements I would like to thank Dr. Christian Steinruecken for his invaluable advice and encouragement throughout the project. I am also grateful to Prof. Zoubin Ghahramani for his guidance and suggestions for extensions to this work. I would further like to thank Olivia Wiles and Shashwat Silas for their comments on drafts of this dissertation. I would also like to express my gratitude to Maria Lomelí García for a fruitful discussion on the relationship between several stochastic processes considered in this dissertation. Abstract Conventional compressors operate on single bytes. This works well on ASCII text, where each character is one byte. However, it fares poorly on UTF-8 texts, where characters can span multiple bytes. -

The Apple Font Tool Suite Tutorial

The Apple Font Tool Suite Tutorial Version 1.0 Copyright © 2002 Apple Computer, Inc. All rights reserved. i Table of Contents Table of Contents...........................................................................................................2 Introduction ...................................................................................................................3 Lesson One: Filling Out the Glyph Repertoire..............................................................6 Lesson Two: Using Add Lists ...................................................................................... 19 Lesson Three: Completing the tables ........................................................................... 33 Lesson Four: Metamorphosis Input Files (MIFs) ....................................................... 51 2 Introduction This tutorial is a general introduction to the Mac OS X Apple Font Tool Suite, illustrating the various techniques needed to work with a real-life font. Further documentation on each of the tools can be found in the Apple Font Tool Suite document and the Quick Reference, both of which are installed by the installer. There is also a text file, ‘Tutorial Command Summary.txt’, which contains all the command lines found in this Tutorial and is handy for cutting and pasting into the terminal window. The tutorial includes a basic font, Apple Simple.ttf, which was created by Apple for demonstration purposes only. The tutorial can be usefully worked through in conjunction with a general font editor such as Fontographer,