Nested Parallelism on GPU: Exploring Parallelization Templates for Irregular Loops and Recursive Computations Da Li, Hancheng Wu, Michela Becchi Dept

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


High Performance Computing Through Parallel and Distributed Processing

Yadav S. et al., J. Harmoniz. Res. Eng., 2013, 1(2), 54-64. Journal Of Harmonized Research (JOHR), Journal Of Harmonized Research in Engineering 1(2), 2013, 54-64, ISSN 2347 – 7393. Original Research Article. High Performance Computing through Parallel and Distributed Processing. Shikha Yadav, Preeti Dhanda, Nisha Yadav, Department of Computer Science and Engineering, Dronacharya College of Engineering, Khentawas, Farukhnagar, Gurgaon, India. For Correspondence: preeti.dhanda01ATgmail.com. Received on: October 2013.

Abstract: There is a very high need of High Performance Computing (HPC) in many applications like space science to Artificial Intelligence. HPC shall be attained through Parallel and Distributed Computing. In this paper, Parallel and Distributed algorithms are discussed based on Parallel and Distributed Processors to achieve HPC. The Programming concepts like threads, fork and sockets are discussed with some simple examples for HPC. Keywords: High Performance Computing, Parallel and Distributed processing, Computer Architecture.

Introduction: Computer Architecture and Programming play a significant role for High Performance computing (HPC) in large applications Space science to Artificial Intelligence. The Algorithms are problem solving procedures and later these algorithms transform in to particular Programming language for HPC. There is need to study algorithms for High Performance Computing. These Algorithms are to be designed to computer in reasonable time to solve large problems like weather forecasting, Tsunami, Remote Sensing, National calamities, Defence, Mineral exploration, Finite-element, Cloud Computing, and Expert Systems etc. The Algorithms are Non-Recursive Algorithms, Recursive Algorithms, Parallel Algorithms and Distributed Algorithms. The Algorithms must be supported the Computer Architecture. The Computer Architecture is characterized with Flynn’s Classification SISD, SIMD, MIMD, and MISD. Most of the Computer Architectures are supported with SIMD (Single Instruction Multiple Data Streams).

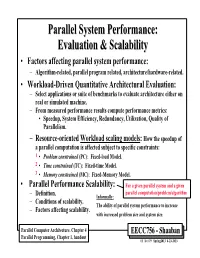

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability
• Factors affecting parallel system performance:
– Algorithm-related, parallel program related, architecture/hardware-related.
• Workload-Driven Quantitative Architectural Evaluation:
– Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine.
– From measured performance results compute performance metrics:
• Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism.
– Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints:
1. Problem constrained (PC): Fixed-load Model.
2. Time constrained (TC): Fixed-time Model.
3. Memory constrained (MC): Fixed-Memory Model.
• Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm
– Definition.
– Conditions of scalability.
– Factors affecting scalability.
Informally: The ability of parallel system performance to increase with increased problem size and system size.
(Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout #1.) EECC756 - Shaaban, lec #9, Spring 2013, 4-23-2013

Parallel Program Performance
• Parallel processing goal is to maximize speedup (fixed problem size speedup):

$$\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max\left(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work}\right)}$$

where the maximum is taken over all processors, and Synch Wait Time, Comm Cost, and Extra Work are the parallelizing overheads.
• By:
1 – Balancing computations/overheads (workload) on processors
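The speedup bound in the snippet above can be tried out numerically. The following is a minimal Python sketch, not code from the lecture: the names `ProcessorCost` and `speedup_bound` and all cost figures are hypothetical, chosen only to show how the slowest processor's total (work plus overheads) sets the bound.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ProcessorCost:
    """Per-processor time components (hypothetical units, e.g. seconds)."""
    work: float        # useful computation assigned to this processor
    sync_wait: float   # time spent waiting at synchronization points
    comm_cost: float   # time spent communicating
    extra_work: float  # redundant work introduced by parallelization

    def total(self) -> float:
        return self.work + self.sync_wait + self.comm_cost + self.extra_work


def speedup_bound(sequential_work: float, costs: Sequence[ProcessorCost]) -> float:
    """Sequential work divided by the total time of the slowest processor."""
    slowest = max(c.total() for c in costs)
    return sequential_work / slowest


# Four processors share 100 units of work evenly, but overheads differ.
costs = [
    ProcessorCost(25, 2, 3, 0),
    ProcessorCost(25, 0, 3, 2),
    ProcessorCost(25, 5, 5, 5),  # slowest processor: 40 units in total
    ProcessorCost(25, 0, 0, 0),
]
print(speedup_bound(100, costs))  # 100 / 40 = 2.5
```

Even with perfectly balanced work, the single processor carrying the most overhead limits the bound to 2.5 rather than the ideal 4, which is why the snippet lists balancing computations and overheads first.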

Scalable Task Parallel Programming in the Partitioned Global Address Space

Scalable Task Parallel Programming in the Partitioned Global Address Space. DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By James Scott Dinan, M.S. Graduate Program in Computer Science and Engineering, The Ohio State University, 2010. Dissertation Committee: P. Sadayappan, Advisor; Atanas Rountev; Paolo Sivilotti. © Copyright by James Scott Dinan 2010.

ABSTRACT: Applications that exhibit irregular, dynamic, and unbalanced parallelism are growing in number and importance in the computational science and engineering communities. These applications span many domains including computational chemistry, physics, biology, and data mining. In such applications, the units of computation are often irregular in size and the availability of work may depend on the dynamic, often recursive, behavior of the program. Because of these properties, it is challenging for these programs to achieve high levels of performance and scalability on modern high performance clusters. A new family of programming models, called the Partitioned Global Address Space (PGAS) family, provides the programmer with a global view of shared data and allows for asynchronous, one-sided access to data regardless of where it is physically stored. In this model, the global address space is distributed across the memories of multiple nodes and, for any given node, is partitioned into local patches that have high affinity and low access cost and remote patches that have a high access cost due to communication. The PGAS data model relaxes conventional two-sided communication semantics and allows the programmer to access remote data without the cooperation of the remote processor.

Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness

Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness. Dana Dachman-Soled (1,3), Chang Liu (2), Charalampos Papamanthou (1,3), Elaine Shi (4), Uzi Vishkin (1,3). 1: University of Maryland, Department of Electrical and Computer Engineering; 2: University of Maryland, Department of Computer Science; 3: University of Maryland Institute for Advanced Computer Studies (UMIACS); 4: Cornell University. January 12, 2017.

Abstract: Oblivious RAM (ORAM) is a cryptographic primitive that allows a trusted CPU to securely access untrusted memory, such that the access patterns reveal nothing about sensitive data. ORAM is known to have broad applications in secure processor design and secure multi-party computation for big data. Unfortunately, due to a logarithmic lower bound by Goldreich and Ostrovsky (Journal of the ACM, '96), ORAM is bound to incur a moderate cost in practice. In particular, with the latest developments in ORAM constructions, we are quickly approaching this limit, and the room for performance improvement is small. In this paper, we consider new models of computation in which the cost of obliviousness can be fundamentally reduced in comparison with the standard ORAM model. We propose the Oblivious Network RAM model of computation, where a CPU communicates with multiple memory banks, such that the adversary observes only which bank the CPU is communicating with, but not the address offset within each memory bank. In other words, obliviousness within each bank comes for free (either because the architecture prevents a malicious party from observing the address accessed within a bank, or because another solution is used to obfuscate memory accesses within each bank), and hence we only need to obfuscate communication patterns between the CPU and the memory banks.

Compiling for a Multithreaded Dataflow Architecture: Algorithms, Tools, and Experience. Feng Li

Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience. Feng Li. To cite this version: Feng Li. Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience. Other [cs.OH]. Université Pierre et Marie Curie - Paris VI, 2014. English. NNT: 2014PA066102. tel-00992753v2. HAL Id: tel-00992753, https://tel.archives-ouvertes.fr/tel-00992753v2. Submitted on 10 Sep 2014.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Université Pierre et Marie Curie, École Doctorale Informatique, Télécommunications et Électronique. Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience, by Feng LI. Doctoral thesis in Computer Science, supervised by Albert COHEN. Publicly presented and defended on 20 May 2014 before a jury composed of a President, two Rapporteurs, and three Examiners.

I would like to dedicate this thesis to my mother Shuxia Li, for her love.

Acknowledgements: I would like to thank my supervisor Albert Cohen, there's nothing more exciting than working with him. Albert, you are an extraordinary person, full of ideas and motivation. The discussions with you always enlightens me, helps me. I am so lucky to have you as my supervisor when I first come to research as a PhD student.

Massively Parallel Computers: Why Not Parallel Computers for the Masses?

Massively Parallel Computers: Why Not Parallel Computers for the Masses? Gordon Bell

Abstract: During the 1980s the computer engineering and science research community generally ignored parallel processing. With a focus on high performance computing embodied in the massive 1990s High Performance Computing and Communications (HPCC) program that has the short-term, teraflop peak performance goal using a network of thousands of computers, everyone with even passing interest in parallelism is involved with the massive parallelism "gold rush". Funding-wise the situation is bright; applications-wise massive parallelism is microscopic. While there are several programming models, the mainline is data parallel Fortran. However, new algorithms are required, negating the decades of progress in algorithms. Thus, utility will no doubt be the Achilles Heel of massive parallelism.

The Teraflop: 1992. In 1989 I described the situation in high performance computers including several parallel architectures that could deliver teraflop power by 1995, but with no price constraint. I felt SIMDs and multicomputers could achieve this goal. A shared memory multiprocessor looked infeasible then. Traditional, multiple vector processor supercomputers such as Crays would simply not evolve to a teraflop until 2000. Here's what happened.

1. During the first half of 1992, NEC's four processor SX3 is the fastest computer, delivering 90% of its peak 22 Gflops for the Linpeak benchmark, and Cray's 16 processor YMP C90 has the greatest throughput.
2. The SIMD hardware approach of Thinking Machines was abandoned because it was only suitable for a few, very large scale problems, barely multiprogrammed, and uneconomical for workloads. It's unclear whether large SIMDs are "generation" scalable, and they are clearly not "size" scalable.

Scheduling on Asymmetric Parallel Architectures

Scheduling on Asymmetric Parallel Architectures. Filip Blagojevic. Dissertation submitted to the faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science and Applications. Committee Members: Dimitrios S. Nikolopoulos (Chair), Kirk W. Cameron, Wu-chun Feng, David K. Lowenthal, Calvin J. Ribbens. May 30, 2008, Blacksburg, Virginia. Keywords: Multicore processors, Cell BE, process scheduling, high-performance computing, performance prediction, runtime adaptation. © Copyright 2008, Filip Blagojevic.

(ABSTRACT) We explore runtime mechanisms and policies for scheduling dynamic multi-grain parallelism on heterogeneous multi-core processors. Heterogeneous multi-core processors integrate conventional cores that run legacy codes with specialized cores that serve as computational accelerators. The term multi-grain parallelism refers to the exposure of multiple dimensions of parallelism from within the runtime system, so as to best exploit a parallel architecture with heterogeneous computational capabilities between its cores and execution units. To maximize performance on heterogeneous multi-core processors, programs need to expose multiple dimensions of parallelism simultaneously. Unfortunately, programming with multiple dimensions of parallelism is to date an ad hoc process, relying heavily on the intuition and skill of programmers. Formal techniques are needed to optimize multi-dimensional parallel program designs. We investigate user- and kernel-level schedulers that dynamically "rightsize" the dimensions and degrees of parallelism on the asymmetric parallel platforms. The schedulers address the problem of mapping application-specific concurrency to an architecture with multiple hardware layers of parallelism, without requiring programmer intervention or sophisticated compiler support.

CUDA C++ Programming Guide

CUDA C++ Programming Guide
Design Guide, PG-02829-001_v11.4 | September 2021
Changes from Version 11.3
‣ Added Graph Memory Nodes.
‣ Formalized Asynchronous SIMT Programming Model.
Table of Contents
Chapter 1. Introduction
1.1. The Benefits of Using GPUs
1.2. CUDA®: A General-Purpose Parallel Computing Platform and Programming Model
1.3. A Scalable Programming Model
1.4. Document Structure
Chapter 2. Programming Model
2.1. Kernels
2.2. Thread Hierarchy
2.3. Memory Hierarchy
2.4. Heterogeneous Programming
2.5. Asynchronous

14. Parallel Computing 14.1 Introduction 14.2 Independent

14. Parallel Computing

14.1 Introduction

This chapter describes approaches to problems to which multiple computing agents are applied simultaneously. By "parallel computing", we mean using several computing agents concurrently to achieve a common result. Another term used for this meaning is "concurrency". We will use the terms parallelism and concurrency synonymously in this book, although some authors differentiate them. Some of the issues to be addressed are:
• What is the role of parallelism in providing clear decomposition of problems into sub-problems?
• How is parallelism specified in computation?
• How is parallelism effected in computation?
• Is parallel processing worth the extra effort?

14.2 Independent Parallelism

Undoubtedly the simplest form of parallelism entails computing with totally independent tasks, i.e. there is no need for these tasks to communicate. Imagine that there is a large field to be plowed. It takes a certain amount of time to plow the field with one tractor. If two equal tractors are available, along with equally capable personnel to man them, then the field can be plowed in about half the time. The field can be divided in half initially and each tractor given half the field to plow. One tractor doesn't get into another's way if they are plowing disjoint halves of the field. Thus they don't need to communicate. Note however that there is some initial overhead that was not present with the one-tractor model, namely the need to divide the field. This takes some measurements and might not be that trivial. In fact, if the field is relatively small, the time to do the divisions might be more than the time saved by the second tractor.
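The tractor trade-off described above can be put into numbers with a small model. This is an illustrative Python sketch under stated assumptions, not something from the book: the function name `plow_time`, the fixed `divide_overhead`, and the perfectly even split of the field are all hypothetical simplifications.

```python
def plow_time(field_work: float, tractors: int, divide_overhead: float) -> float:
    """Time to plow a field, assuming fully independent, equal tractors.

    field_work:      time one tractor needs for the whole field
    tractors:        number of equal tractors working on disjoint parts
    divide_overhead: one-time cost of dividing the field (assumed fixed)
    """
    if tractors < 1:
        raise ValueError("need at least one tractor")
    if tractors == 1:
        return field_work  # no division is needed with a single tractor
    return divide_overhead + field_work / tractors


# Large field: the second tractor pays off (10 hours drops to 6).
print(plow_time(10.0, 1, 1.0), plow_time(10.0, 2, 1.0))

# Small field: dividing costs more than the second tractor saves.
print(plow_time(1.0, 1, 1.0), plow_time(1.0, 2, 1.0))
```

In this model the second tractor helps only when `divide_overhead` is smaller than half the single-tractor time, which matches the observation that a relatively small field may not be worth dividing.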

CS 211: Computer Architecture – Starting with Simple ILP Using Pipelining – Explicit ILP - EPIC – Key Concept: Issue Multiple Instructions/Cycle Instructor: Prof

CS 211: Computer Architecture
Instructor: Prof. Bhagi Narahari, Dept. of Computer Science
Course URL: www.seas.gwu.edu/~narahari/cs211/
Bhagi Narahari, Lab. For Embedded Systems (LEMS), CS, GWU

Computer Architecture
• Part I: Processor Architectures
– starting with simple ILP using pipelining
– explicit ILP - EPIC
– key concept: issue multiple instructions/cycle
• Part II: Multi Processor Architectures
– move from Processor to System level
– can utilize all the techniques covered thus far
– i.e., the processors used in a multi-processor can be EPIC
– move from fine grain to medium/coarse grain
– assume all processor issues are resolved when discussing system level Multiprocessor design

Moving from Fine grained to Coarser grained computations.

Multi-Processor Architectures
• Introduce Parallel Processing
– grains, mapping of s/w to h/w, issues
• Overview of Multiprocessor Architectures
– Shared-memory, distributed memory
– SIMD architectures
• Programming and Synchronization
– programming constructs, synch constructs, cache
• Interconnection Networks
• Parallel algorithm design and analysis

Hardware and Software Parallelism
Hardware Parallelism:
-- Defined by machine architecture and hardware multiplicity
-- Number of instruction issues per machine cycle
-- k issues per machine cycle: k-issue processor
Software parallelism:
-- Control and data dependence of programs
-- Compiler extensions
-- OS extensions (parallel scheduling, shared memory allocation, communication links)
Implicit Parallelism:
-- Conventional programming language
-- Parallelizing compiler
Explicit Parallelism:
-- Parallelising constructs in programming languages
-- Parallelising programs development tools
-- Debugging, validation, testing, etc.

Software vs. Hardware Parallelism (Example)
[Figure: software parallelism over three cycles. Cycle 1: L1, L2, L3, L4. Cycle 2: X1, X2. Cycle 3: +, -.]

Parallel Programming in OpenMP About the Authors

Parallel Programming in OpenMP

About the Authors

Rohit Chandra is a chief scientist at NARUS, Inc., a provider of internet business infrastructure solutions. He previously was a principal engineer in the Compiler Group at Silicon Graphics, where he helped design and implement OpenMP.

Leonardo Dagum works for Silicon Graphics in the Linux Server Platform Group, where he is responsible for the I/O infrastructure in SGI’s scalable Linux server systems. He helped define the OpenMP Fortran API. His research interests include parallel algorithms and performance modeling for parallel systems.

Dave Kohr is a member of the technical staff at NARUS, Inc. He previously was a member of the technical staff in the Compiler Group at Silicon Graphics, where he helped define and implement the OpenMP.

Dror Maydan is director of software at Tensilica, Inc., a provider of application-specific processor technology. He previously was an engineering department manager in the Compiler Group of Silicon Graphics, where he helped design and implement OpenMP.

Jeff McDonald owns SolidFX, a private software development company. As the engineering department manager at Silicon Graphics, he proposed the OpenMP API effort and helped develop it into the industry standard it is today.

Ramesh Menon is a staff engineer at NARUS, Inc. Prior to NARUS, Ramesh was a staff engineer at SGI, representing SGI in the OpenMP forum. He was the founding chairman of the OpenMP Architecture Review Board (ARB) and supervised the writing of the first OpenMP specifications.

Parallel Programming in OpenMP. Rohit Chandra, Leonardo Dagum, Dave Kohr, Dror Maydan, Jeff McDonald, Ramesh Menon. Senior Editor Denise E.

CUDA Dynamic Parallelism

CUDA Dynamic Parallelism
©Jin Wang and Sudhakar Yalamanchili unless otherwise noted

Objective
• To understand the CUDA Dynamic Parallelism (CDP) execution model, including synchronization and memory model
• To understand the benefits of CDP in terms of productivity, workload balance and memory regularity
• To understand the launching path of child kernels at the microarchitectural level
• To understand the overhead of CDP

Reading
• CUDA Programming Guide. Appendix C “CUDA Dynamic Parallelism”.
• S. Jones, “Introduction to Dynamic Parallelism”, GPU Technology Conference (presentation), Mar 2012.
• J. Wang and S. Yalamanchili. “Characterization and Analysis of Dynamic Parallelism in Unstructured GPU Applications.” 2014 IEEE International Symposium on Workload Characterization (IISWC). October 2014.
• J. Wang, A. Sidelnik, N. Rubin, and S. Yalamanchili, “Dynamic Thread Block Launch: A Lightweight Execution Mechanism to Support Irregular Applications on GPUs”, IEEE/ACM International Symposium on Computer Architecture (ISCA), June 2015.

Recap: CUDA Execution Model
• Kernels/Grids are launched by host (CPU)
• Grids are executed on GPU
• Results are returned to CPU
[Figure: the host launches Kernel 1 and Kernel 2; on the device, Grid 1 and Grid 2 each contain Blocks (0, 0), (0, 1), (1, 0), (1, 1).]

Launching from device
• Kernels/Grids can be launched by GPU
[Figure: the host launches Kernel 1 as Grid 1 on the device; Grid 2, with Blocks (0, 0), (0, 1), (1, 0), (1, 1), is launched from the device.]

Code Example