Generating a Qnoael with Confidence Levels from Disparate Literature

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Table 2 of Supporting Information

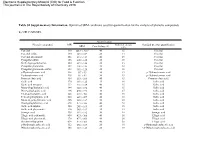

Electronic Supplementary Material (ESI) for Food & Function. This journal is © The Royal Society of Chemistry 2016 Table S1 Supplementary Information. Optimized SRM conditions used for quantification for the analysis of phenolic compounds by UPLC-MS/MS. Quantification Phenolic compound MW Collision energy Standard used for quantification SRM Cone voltage (v) (eV) Catechol 110 108.9 90.9 40 15 Catechol Catechol sulfate 190 189 109 20 15 Catechol Catechol glucuronide 286 285 123 40 15 Catechol Pyrogallol sulfate 206 205 125 20 15 Catechol Methyl pyrogallol sulfate 220 219 124 20 25 Catechol Pyrogallol glucuronide 302 301 125 20 10 Catechol Pyrogallol glucuronide-sulfate 382 381 125 20 10 Catechol p-Hydroxybenzoic acid 138 137 93 30 15 p-Hydroxybenzoic acid Hydroxybenzoic acid 138 137 93 30 15 p-Hydroxybenzoic acid Protocatechuic acid 154 153 109 40 15 Protocatechuic acid Gallic acid 170 169 125 35 10 Gallic acid Gallic acid hexoside 332 331 169 40 15 Gallic acid Mono-O-galloylquinic acid 344 343 191 40 15 Gallic acid Di-O-galloylquinic acid 496 495 191 40 25 Gallic acid Tri-O-galloylquinic acid 648 647 495 40 15 Gallic acid Tetra-O-galloylquinic acid 630 629 477 40 15 Gallic acid Mono-O-galloylshikimic acid 326 325 169 40 20 Gallic acid Di-O-galloylshikimic acid 478 477 325 40 20 Gallic acid Gallic acid sulphate 250 249 169 35 15 Gallic acid Gallic acid glucuronide 346 345 169 35 15 Gallic acid Syringic acid 198 197 182 30 10 Syringic acid Ellagic acid arabinoside 434 433 300 40 30 Ellagic acid Ellagic acid glucuronide -

Universidade Federal Do Rio De Janeiro Kim Ohanna

UNIVERSIDADE FEDERAL DO RIO DE JANEIRO KIM OHANNA PIMENTA INADA EFFECT OF TECHNOLOGICAL PROCESSES ON PHENOLIC COMPOUNDS CONTENTS OF JABUTICABA (MYRCIARIA JABOTICABA) PEEL AND SEED AND INVESTIGATION OF THEIR ELLAGITANNINS METABOLISM IN HUMANS. RIO DE JANEIRO 2018 Kim Ohanna Pimenta Inada EFFECT OF TECHNOLOGICAL PROCESSES ON PHENOLIC COMPOUNDS CONTENTS OF JABUTICABA (MYRCIARIA JABOTICABA) PEEL AND SEED AND INVESTIGATION OF THEIR ELLAGITANNINS METABOLISM IN HUMANS. Tese de Doutorado apresentada ao Programa de Pós-Graduação em Ciências de Alimentos, Universidade Federal do Rio de Janeiro, como requisito parcial à obtenção do título de Doutor em Ciências de Alimentos Orientadores: Profa. Dra. Mariana Costa Monteiro Prof. Dr. Daniel Perrone Moreira RIO DE JANEIRO 2018 DEDICATION À minha família e às pessoas maravilhosas que apareceram na minha vida. ACKNOWLEDGMENTS Primeiramente, gostaria de agradecer a Deus por ter me dado forças para não desistir e por ter colocado na minha vida “pessoas-anjo”, que me ajudaram e me apoiaram até nos momentos em que eu achava que ia dar tudo errado. Aos meus pais Beth e Miti. Eles não mediram esforços para que eu pudesse receber uma boa educação e para que eu fosse feliz. Logo no início da graduação, a situação financeira ficou bem apertada, mas eles continuaram fazendo de tudo para me ajudar. Foram milhares de favores prestados, marmitas e caronas. Meu pai diz que fez anos de curso de inglês e espanhol, porque passou anos acordando cedo no sábado só para me levar no curso que eu fazia no Fundão. Tinha dia que eu saía do curso morta de fome e quando eu entrava no carro, tinha uma marmita com almoço, com direito até a garrafa de suco. -

Berry Flavonoids and Phenolics: Bioavailability and Evidence of Protective Effects

Downloaded from British Journal of Nutrition (2010), 104, S67–S90 doi:10.1017/S0007114510003958 q The Authors 2010 https://www.cambridge.org/core Berry flavonoids and phenolics: bioavailability and evidence of protective effects Daniele Del Rio1, Gina Borges2 and Alan Crozier2* . IP address: 1Human Nutrition Unit, Department of Public Health, University of Parma, Via Volturno 39, 43100 Parma, Italy 2Plant Products and Human Nutrition Group, School of Medicine, College of Medical, Veterinary and Life Sciences, Graham Kerr 170.106.202.8 Building, University of Glasgow, Glasgow G12 8QQ, UK (Received 25 January 2010 – Accepted 24 February 2010) , on 24 Sep 2021 at 06:36:04 Berries contain vitamin C and are also a rich source of phytochemicals, especially anthocyanins which occur along with other classes of phenolic compounds, including ellagitannins, flavan-3-ols, procyanidins, flavonols and hydroxybenzoate derivatives. This review examines studies with both human subjects and animals on the absorption of these compounds, and their glucuronide, sulphate and methylated metabolites, into the cir- culatory system from the gastrointestinal tract and the evidence for their localisation within the body in organs such as the brain and eyes. The involvement of the colonic microflora in catabolising dietary flavonoids that pass from the small to the large intestine is discussed along with the potential fate and role of the resultant phenolic acids that can be produced in substantial quantities. The in vitro and in vivo bioactivities of these , subject to the Cambridge Core terms of use, available at polyphenol metabolites and catabolites are assessed, and the current evidence for their involvement in the protective effects of dietary polyphenols, within the gastrointestinal tract and other parts of the body to which they are transported by the circulatory system, is reviewed. -

The Use of Pomegranate (Punica Granatum L.) Phenolic Compounds As Potential Natural Prevention Against Ibds

15 The Use of Pomegranate (Punica granatum L.) Phenolic Compounds as Potential Natural Prevention Against IBDs Sylvie Hollebeeck, Yvan Larondelle, Yves-Jacques Schneider and Alexandrine During Institut des Sciences de la Vie, UCLouvain, Louvain-la-Neuve Belgium 1. Introduction Phenolic compounds (PCs) are plant secondary metabolites that are integral part of the “normal” human diet. The daily intake of PCs depends on the diet but is commonly evaluated at ca. 1g/day for people who eat several fruits and vegetables per day (Scalbert & Williamson, 2000). PCs may be interesting to prevent the development of inflammatory diseases, more particularly in the gastrointestinal tract, where their concentration may reach levels of up to several hundred µM (Scalbert & Williamson, 2000). Many studies have indeed reported on anti-inflammatory properties of different PCs (see (Calixto et al., 2004; Rahman et al., 2006; Romier et al., 2009; Shapiro et al., 2009), for reviews). Pomegranate (Punica granatum L.) belongs to the Punicaceae family, which includes only two species. More than 500 cultivars of Punica granatum exist with specific characteristics such as fruit size, exocarp and aril color, etc. Originating from the Middle East, pomegranate is now widely cultivated throughout the world, and also widely consumed. Pomegranate has been used for centuries in the folk medicine of many cultures. As described in the review of Lansky et al. (2007), the bark and the roots are believed to have anthelmintic and vermifuge properties, the fruit peel has been used as a cure for diarrhea, oral aphthae, and as a powerful astringent, the juice as a blood tonic, and the flowers as a cure for diabetes mellitus. -

Inhibitory Effect of Tannins from Galls of Carpinus Tschonoskii on the Degranulation of RBL-2H3 Cells

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Tsukuba Repository Inhibitory effect of tannins from galls of Carpinus tschonoskii on the degranulation of RBL-2H3 Cells 著者 Yamada Parida, Ono Takako, Shigemori Hideyuki, Han Junkyu, Isoda Hiroko journal or Cytotechnology publication title volume 64 number 3 page range 349-356 year 2012-05 権利 (C) Springer Science+Business Media B.V. 2012 The original publication is available at www.springerlink.com URL http://hdl.handle.net/2241/117707 doi: 10.1007/s10616-012-9457-y Manuscript Click here to download Manuscript: antiallergic effect of Tannin for CT 20111215 revision for reviewerClick here2.doc to view linked References Parida Yamada・Takako Ono・Hideyuki Shigemori・Junkyu Han・Hiroko Isoda Inhibitory Effect of Tannins from Galls of Carpinus tschonoskii on the Degranulation of RBL-2H3 Cells Parida Yamada・Junkyu Han・Hiroko Isoda (✉) Alliance for Research on North Africa, University of Tsukuba, 1-1-1 Tennodai, Tsukuba, Ibaraki 305-8572, Japan Takako Ono・Hideyuki Shigemori・Junkyu Han・Hiroko Isoda Graduate School of Life and Environmental Sciences, University of Tsukuba, 1-1-1 Tennodai, Tsukuba, Ibaraki 305-8572, Japan Corresponding author Hiroko ISODA, Professor, Ph.D. University of Tsukuba, 1-1-1 Tennodai, Tsukuba, Ibaraki 305-8572, Japan Tel: +81 29-853-5775, Fax: +81 29-853-5776. E-mail: [email protected] 1 Abstract In this study, the anti-allergy potency of thirteen tannins isolated from the galls on buds of Carpinus tschonoskii (including two tannin derivatives) was investigated. RBL-2H3 (rat basophilic leukemia) cells were incubated with these compounds, and the release of β-hexosaminidase and cytotoxicity were measured. -

Electronic Supplementary Information Supplemental Table 1. Fecal And

Electronic Supplementary Material (ESI) for Food & Function. This journal is © The Royal Society of Chemistry 2015 Electronic Supplementary Information Supplemental Table 1. Fecal and urine content of ellagic acid and urolithin A, B analyzed by HPLC. Baseline Week 4 Fecal Fecal Fecal Urine Fecal Fecal Fecal Urine EA UA UB UA EA UA UB UA µg/g µg/g µg/g µg/mg µg/g µg/g µg/g µg/mg stool stool stool creatinine stool stool stool creatinine Non- 0-6.4 0 0 0-2.9 0-174.2 0 0 0-0.6 Producers Producers 0-3.7 0-71 0-36 0.1-28 0-19 14-317 0-85 2.8-36 Supplemental Table 2. Fecal content of punicalagin A/B and punicalin analyzed by HPLC. Baseline Week 4 Fecal Fecal Fecal Fecal Pun A/B Punicalin Pun A/B Punicalin µg/g µg/g µg/g µg/g stool stool stool stool Non- 0 0 0-49 0-7.4 Producers Producers 0 0 0-27 0-5.7 LC-MS/MS analysis of pomegranate extract Five milligrams of pomegranate extract was dissolved in 5mL DMSO and further diluted 10 times in DMSO. The LC-MS/MS system consisted of a LCQ Advantage Finnigan instrument with ion-trap mass spectrometer equipped with an electrospray ionization (ESI) interface, and a Surveyor HPLC unit consisting of an autosampler/injector, quaternary pump, column heater, and diode array detector operated by Xcalibur software (Thermo Finnigan Corp). A Zorbax column (SB-C18 5µm 2.1x 150 mm column, Agilent) was used for the separation of analytes using a gradient elution condition by increasing the percentage of acetonitrile (with 1% acetic acid) in water (with 1% acetic acid) from 2% to 25% in 25 minutes at a flow rate of 0.2 mL/min. -

Acta Sci. Pol., Technol. Aliment. 13(3) 2014, 289-299 I M

M PO RU LO IA N T O N R E U Acta Sci. Pol., Technol. Aliment. 13(3) 2014, 289-299 I M C S ACTA pISSN 1644-0730 eISSN 1889-9594 www.food.actapol.net/ STRUCTURE, OCCURRENCE AND BIOLOGICAL ACTIVITY OF ELLAGITANNINS: A GENERAL REVIEW* Lidia Lipińska1, Elżbieta Klewicka1, Michał Sójka2 1Institute of Fermentation Technology and Microbiology, Lodz University of Technology Wółczańska 171/173, 90-924 Łódź, Poland 2Institute of Chemical Technology of Food, Lodz University of Technology Stefanowskiego 4/10, 90-924 Łódź, Poland ABSTRACT The present paper deals with the structure, occurrence and biological activity of ellagitannins. Ellagitannins belong to the class of hydrolysable tannins, they are esters of hexahydroxydiphenoic acid and monosac- charide (most commonly glucose). Ellagitannins are slowly hydrolysed in the digestive tract, releasing the ellagic acid molecule. Their chemical structure determines physical and chemical properties and biological activity. Ellagitannins occur naturally in some fruits (pomegranate, strawberry, blackberry, raspberry), nuts (walnuts, almonds), and seeds. They form a diverse group of bioactive polyphenols with anti-infl ammatory, anticancer, antioxidant and antimicrobial (antibacterial, antifungal and antiviral) activity. Furthermore, they improve the health of blood vessels. The paper discusses the metabolism and bioavailability of ellagitannins and ellagic acid. Ellagitannins are metabolized in the gastrointestinal tract by intestinal microbiota. They are stable in the stomach and undergo neither hydrolysis to free ellagic acid nor degradation. In turn, ellagic acid can be absorbed in the stomach. This paper shows the role of cancer cell lines in the studies of ellagitannins and ellagic acid metabolism. The biological activity of these compounds is broad and thus the focus is on their antimicrobial, anti-infl ammatory and antitumor properties. -

Phase II Conjugates of Urolithins Isolated from Human Urine and Potential Role of B-Glucuronidases in Their Disposition S

Supplemental material to this article can be found at: http://dmd.aspetjournals.org/content/suppl/2017/03/10/dmd.117.075200.DC1 1521-009X/45/6/657–665$25.00 https://doi.org/10.1124/dmd.117.075200 DRUG METABOLISM AND DISPOSITION Drug Metab Dispos 45:657–665, June 2017 Copyright ª 2017 by The American Society for Pharmacology and Experimental Therapeutics Phase II Conjugates of Urolithins Isolated from Human Urine and Potential Role of b-Glucuronidases in Their Disposition s Jakub P. Piwowarski, Iwona Stanisławska, Sebastian Granica, Joanna Stefanska, and Anna K. Kiss Department of Pharmacognosy and Molecular Basis of Phytotherapy, Faculty of Pharmacy (J.P.P., I.S., S.G., A.K.K.), and Department of Pharmaceutical Microbiology, Centre for Preclinical Research and Technology (CePT) (J.S.), Medical University of Warsaw, Warsaw, Poland; Primary Laboratory of Origin: Medical University of Warsaw, Faculty of Pharmacy Received January 24, 2017; accepted March 1, 2017 ABSTRACT In recent years, many xenobiotics derived from natural products tissue, and urine. The aim of this study was to isolate and Downloaded from have been shown to undergo extensive metabolism by gut micro- structurally characterize urolithin conjugates from the urine of a biota. Ellagitannins, which are high molecular polyphenols, are volunteer who ingested ellagitannin-rich natural products, and to metabolized to dibenzo[b,d]pyran-6-one derivatives—urolithins. evaluate the potential role of b-glucuronidase–triggered cleavage These compounds, in contrast with their parental compounds, have in urolithin disposition. Glucuronides of urolithin A, iso-urolithin A, good bioavailability and are found in plasma and urine at micromo- and urolithin B were isolated and shown to be cleaved by the lar concentrations. -

Health Benefits of Walnut Polyphenols: an Exploration Beyond Their Lipid Profile

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Diposit Digital de la Universitat de Barcelona Critical Reviews in Food Science and Nutrition ISSN: 1040-8398 (Print) 1549-7852 (Online) Journal homepage: http://www.tandfonline.com/loi/bfsn20 Health benefits of walnut polyphenols: An exploration beyond their lipid profile Claudia Sánchez-González, Carlos Ciudad, Véronique Noé & Maria Izquierdo- Pulido To cite this article: Claudia Sánchez-González, Carlos Ciudad, Véronique Noé & Maria Izquierdo-Pulido (2015): Health benefits of walnut polyphenols: An exploration beyond their lipid profile, Critical Reviews in Food Science and Nutrition, DOI: 10.1080/10408398.2015.1126218 To link to this article: http://dx.doi.org/10.1080/10408398.2015.1126218 Accepted author version posted online: 29 Dec 2015. Submit your article to this journal Article views: 61 View related articles View Crossmark data Full Terms & Conditions of access and use can be found at http://www.tandfonline.com/action/journalInformation?journalCode=bfsn20 Download by: [UNIVERSITAT DE BARCELONA] Date: 18 January 2016, At: 10:14 ACCEPTED MANUSCRIPT Health benefits of walnut polyphenols: An exploration beyond their lipid profile Claudia Sánchez-González1 Carlos Ciudad2, Véronique Noé2, & Maria Izquierdo-Pulido1,3*a 1 Departament of Food Science and Nutrition, Facultad de Farmacia y Ciencias de los Alimentos, Universidad de Barcelona, Av. Joan XXIII s/n, 08028 Barcelona, Spain; 2 Department of Biochemistry and Molecular Biology, Universidad de Barcelona, Barcelona, Spain; 3CIBER Physiopathology of Obesity and Nutrition (CIBEROBN), Instituto de Salud Carlos III, Madrid, Spain. * Corresponding author: Dr Maria Izquierdo-Pulido: [email protected] ABSTRACT Walnuts are commonly found in our diet and have been recognized for their nutritious properties for a long time. -

Colonic Metabolism of Phenolic Compounds: from in Vitro to in Vivo Approaches Juana Mosele

Nom/Logotip de la Universitat on s’ha llegit la tesi Colonic metabolism of phenolic compounds: from in vitro to in vivo approaches Juana Mosele http://hdl.handle.net/10803/378039 ADVERTIMENT. L'accés als continguts d'aquesta tesi doctoral i la seva utilització ha de respectar els drets de la persona autora. Pot ser utilitzada per a consulta o estudi personal, així com en activitats o materials d'investigació i docència en els termes establerts a l'art. 32 del Text Refós de la Llei de Propietat Intel·lectual (RDL 1/1996). Per altres utilitzacions es requereix l'autorització prèvia i expressa de la persona autora. En qualsevol cas, en la utilització dels seus continguts caldrà indicar de forma clara el nom i cognoms de la persona autora i el títol de la tesi doctoral. No s'autoritza la seva reproducció o altres formes d'explotació efectuades amb finalitats de lucre ni la seva comunicació pública des d'un lloc aliè al servei TDX. Tampoc s'autoritza la presentació del seu contingut en una finestra o marc aliè a TDX (framing). Aquesta reserva de drets afecta tant als continguts de la tesi com als seus resums i índexs. ADVERTENCIA. El acceso a los contenidos de esta tesis doctoral y su utilización debe respetar los derechos de la persona autora. Puede ser utilizada para consulta o estudio personal, así como en actividades o materiales de investigación y docencia en los términos establecidos en el art. 32 del Texto Refundido de la Ley de Propiedad Intelectual (RDL 1/1996). Para otros usos se requiere la autorización previa y expresa de la persona autora. -

Microbial Metabolite Urolithin B Inhibits Recombinant Human Monoamine Oxidase a Enzyme

H OH metabolites OH Communication Microbial Metabolite Urolithin B Inhibits Recombinant Human Monoamine Oxidase A Enzyme Rajbir Singh 1 , Sandeep Chandrashekharappa 2 , Praveen Kumar Vemula 2, Bodduluri Haribabu 1 and Venkatakrishna Rao Jala 1,* 1 Department of Microbiology and Immunology, James Graham Brown Cancer Center, University of Louisville, Louisville, KY 40202, USA; [email protected] (R.S.); [email protected] (B.H.) 2 Institute for Stem Cell Science and Regenerative Medicine (inStem), University of Agricultural Sciences-Gandhi Krishi Vignan Kendra (UAS-GKVK) Campus, Bellary Road, Bangalore, Karnataka 560065, India; [email protected] (S.C.); [email protected] (P.K.V.) * Correspondence: [email protected] Received: 7 May 2020; Accepted: 17 June 2020; Published: 19 June 2020 Abstract: Urolithins are gut microbial metabolites derived from ellagitannins (ET) and ellagic acid (EA), and shown to exhibit anticancer, anti-inflammatory, anti-microbial, anti-glycative and anti-oxidant activities. Similarly, the parent molecules, ET and EA are reported for their neuroprotection and antidepressant activities. Due to the poor bioavailability of ET and EA, the in vivo functional activities cannot be attributed exclusively to these compounds. Elevated monoamine oxidase (MAO) activities are responsible for the inactivation of monoamine neurotransmitters in neurological disorders, such as depression and Parkinson’s disease. In this study, we examined the inhibitory effects of urolithins (A, B and C) and EA on MAO activity using recombinant human MAO-A and MAO-B enzymes. Urolithin B was found to be a better MAO-A enzyme inhibitor among the tested urolithins and EA with an IC50 value of 0.88 µM, and displaying a mixed mode of inhibition. -

Dr. Duke's Phytochemical and Ethnobotanical Databases List of Chemicals for Tuberculosis

Dr. Duke's Phytochemical and Ethnobotanical Databases List of Chemicals for Tuberculosis Chemical Activity Count (+)-3-HYDROXY-9-METHOXYPTEROCARPAN 1 (+)-8HYDROXYCALAMENENE 1 (+)-ALLOMATRINE 1 (+)-ALPHA-VINIFERIN 3 (+)-AROMOLINE 1 (+)-CASSYTHICINE 1 (+)-CATECHIN 10 (+)-CATECHIN-7-O-GALLATE 1 (+)-CATECHOL 1 (+)-CEPHARANTHINE 1 (+)-CYANIDANOL-3 1 (+)-EPIPINORESINOL 1 (+)-EUDESMA-4(14),7(11)-DIENE-3-ONE 1 (+)-GALBACIN 2 (+)-GALLOCATECHIN 3 (+)-HERNANDEZINE 1 (+)-ISOCORYDINE 2 (+)-PSEUDOEPHEDRINE 1 (+)-SYRINGARESINOL 1 (+)-SYRINGARESINOL-DI-O-BETA-D-GLUCOSIDE 2 (+)-T-CADINOL 1 (+)-VESTITONE 1 (-)-16,17-DIHYDROXY-16BETA-KAURAN-19-OIC 1 (-)-3-HYDROXY-9-METHOXYPTEROCARPAN 1 (-)-ACANTHOCARPAN 1 (-)-ALPHA-BISABOLOL 2 (-)-ALPHA-HYDRASTINE 1 Chemical Activity Count (-)-APIOCARPIN 1 (-)-ARGEMONINE 1 (-)-BETONICINE 1 (-)-BISPARTHENOLIDINE 1 (-)-BORNYL-CAFFEATE 2 (-)-BORNYL-FERULATE 2 (-)-BORNYL-P-COUMARATE 2 (-)-CANESCACARPIN 1 (-)-CENTROLOBINE 1 (-)-CLANDESTACARPIN 1 (-)-CRISTACARPIN 1 (-)-DEMETHYLMEDICARPIN 1 (-)-DICENTRINE 1 (-)-DOLICHIN-A 1 (-)-DOLICHIN-B 1 (-)-EPIAFZELECHIN 2 (-)-EPICATECHIN 6 (-)-EPICATECHIN-3-O-GALLATE 2 (-)-EPICATECHIN-GALLATE 1 (-)-EPIGALLOCATECHIN 4 (-)-EPIGALLOCATECHIN-3-O-GALLATE 1 (-)-EPIGALLOCATECHIN-GALLATE 9 (-)-EUDESMIN 1 (-)-GLYCEOCARPIN 1 (-)-GLYCEOFURAN 1 (-)-GLYCEOLLIN-I 1 (-)-GLYCEOLLIN-II 1 2 Chemical Activity Count (-)-GLYCEOLLIN-III 1 (-)-GLYCEOLLIN-IV 1 (-)-GLYCINOL 1 (-)-HYDROXYJASMONIC-ACID 1 (-)-ISOSATIVAN 1 (-)-JASMONIC-ACID 1 (-)-KAUR-16-EN-19-OIC-ACID 1 (-)-MEDICARPIN 1 (-)-VESTITOL 1 (-)-VESTITONE 1