UCLA Electronic Theses and Dissertations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Sean Callery

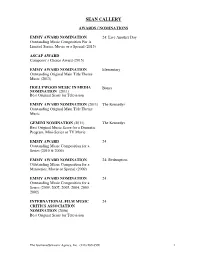

SEAN CALLERY

AWARDS / NOMINATIONS

EMMY AWARD NOMINATION: 24: Live Another Day, Outstanding Music Composition For A Limited Series, Movie or a Special (2015)
ASCAP AWARD: Composer’s Choice Award (2015)
EMMY AWARD NOMINATION: Elementary, Outstanding Original Main Title Theme Music (2013)
HOLLYWOOD MUSIC IN MEDIA NOMINATION (2011): Bones, Best Original Score for Television
EMMY AWARD NOMINATION (2011): The Kennedys, Outstanding Original Main Title Theme Music
GEMINI NOMINATION (2011): The Kennedys, Best Original Music Score for a Dramatic Program, Mini-Series or TV Movie
EMMY AWARD: 24, Outstanding Music Composition for a Series (2010 & 2006)
EMMY AWARD NOMINATION: 24: Redemption, Outstanding Music Composition for a Miniseries, Movie or Special (2009)
EMMY AWARD NOMINATION: 24, Outstanding Music Composition for a Series (2009, 2007, 2005, 2004, 2003, 2002)
INTERNATIONAL FILM MUSIC CRITICS ASSOCIATION NOMINATION (2006): 24, Best Original Score for Television

The Gorfaine/Schwartz Agency, Inc. (818) 260-8500

TELEVISION SERIES

JESSICA JONES (series), ABC / Marvel Entertainment / Tall Girls: Melissa Rosenberg, Jeph Loeb, exec. prods.; Melissa Rosenberg, showrunner
BONES (series), Fox / 20th Century Fox: Barry Josephson, Hart Hanson, Stephen Nathan, Steve Bees, exec. prods.
HOMELAND (pilot & series), Showtime / Fox 21: Alex Gansa, Howard Gordon, Avi Nir, Gideon Raff, Ran Telem, exec. prods.
ELEMENTARY (pilot & series), CBS / Timberman / Beverly Studios: Carl Beverly, Robert Doherty, Sarah Timberman, Craig Sweeny, exec. prods.
MINORITY REPORT (pilot & series), 20th Century Fox Television / Fox: Kevin Falls, Max Borenstein, Darryl Frank, Justin Falvey, exec. prods.; Kevin Falls, showrunner
MEDIUM (series), Paramount TV / CBS: Glenn Gordon Caron, Rene Echevarria, Kelsey Grammer, Ronald Schwary, exec. prods.
24: LIVE ANOTHER DAY (event-series), 20th Century Fox: Kiefer Sutherland, Howard Gordon, Brian Grazer, Jon Cassar, Evan Katz, Robert Cochran, David Fury, exec. -

STEPHEN MARK, ACE Editor

STEPHEN MARK, ACE Editor

Television

| PROJECT | DIRECTOR | STUDIO / PRODUCTION CO. |
| --- | --- | --- |
| DELILAH (series) | Various | OWN / Warner Bros. Television; EP: Craig Wright, Oprah Winfrey, Charles Randolph-Wright |
| NEXT (series) | Various | Fox / 20th Century Fox Television; EP: Manny Coto, Charlie Gogolak, Glenn Ficarra, John Requa |
| SNEAKY PETE (series) | Various | Amazon / Sony Pictures Television; EP: Blake Masters, Bryan Cranston, Jon Avnet, James Degus |
| GREENLEAF (series) | Various | OWN / Lionsgate Television; EP: Oprah Winfrey, Clement Virgo, Craig Wright |
| HELL ON WHEELS (series) | Various | AMC / Entertainment One Television; EP: Mark Richard, John Wirth, Jeremy Gold |
| BLACK SAILS (series) | Various | Starz / Platinum Dunes; EP: Michael Bay, Jonathan Steinberg, Robert Levine, Dan Shotz |
| LEGENDS (pilot)* | David Semel | TNT / Fox 21; Prod: Howard Gordon, Jonathan Levin, Cyrus Voris, Ethan Reiff |
| DEFIANCE (series) | Various | Syfy / Universal Cable Productions; Prod: Kevin Murphy |
| GAME OF THRONES** (season 2, ep.10) | Alan Taylor | HBO; EP: David Benioff, D.B. Weiss |
| BOSS (pilot* + series) | Various | Starz / Lionsgate Television; EP: Farhad Safinia, Gus Van Sant, |
| THE LINE (pilot) | Michael Dinner | CBS / CBS Television Studios; EP: Carl Beverly, Sarah Timberman |
| CANE (series) | Various | CBS / ABC Studios; Prod: Jimmy Smits, Cynthia Cidre, |
| MASTERS OF SCIENCE FICTION (anthology series) | Michael Tolkin, Jonathan Frakes | ABC / IDT Entertainment; Prod: Keith Addis, Sam Egan |
| 3 LBS. (series) | Various | CBS / The Levinson-Fontana Company; Prod: Peter Ocko |
| DEADWOOD (series); 2007 ACE Eddie Award Nominee, 2006 ACE Eddie Award Winner, 2005 Emmy Nomination | Various | HBO; Prod: David Milch, Gregg Fienberg, Davis Guggenheim |
| WITHOUT A TRACE (pilot) | David Nutter | CBS / Warner Bros. Television / Jerry Bruckheimer TV; Prod: Jerry Bruckheimer, Hank Steinberg |
| SMALLVILLE (pilot + series) | David Nutter (pilot) | CW / Warner Bros. - |

The Top 101 Inspirational Movies –

The Top 101 Inspirational Movies Ever Made – by David Riklan. Published by Self Improvement Online, Inc. http://www.SelfGrowth.com 20 Arie Drive, Marlboro, NJ 07746 ©Copyright by David Riklan. Manufactured in the United States.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without the prior written permission of the Publisher.

Limit of Liability / Disclaimer of Warranty: While the authors have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents and specifically disclaim any implied warranties. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. The author shall not be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages.

TABLE OF CONTENTS

Introduction
Spiritual Cinema
About SelfGrowth.com
Newer Inspirational Movies

Ranking / Movie Title

# 1 It’s a Wonderful Life
# 2 Forrest Gump
# 3 Field of Dreams
# 4 Rudy
# 5 Rocky
# 6 Chariots of -

Vehicle Crashes Into Business

The Westfield News. Serving Westfield, Southwick, and surrounding Hilltowns. www.thewestfieldnews.com Westfield350.com Westfield350.org

VOL. 87 NO. 190 FRIDAY, AUGUST 17, 2018 75 Cents. WEATHER TONIGHT: Scattered T-storms. Low of 70. “It is not love that is blind, but jealousy.” Lawrence Durrell

VOL. 86 NO. 151 TUESDAY, JUNE 27, 2017 75 cents. WEATHER TONIGHT: Partly Cloudy. Low of 55. “Time is the only critic without ambition.” John Steinbeck

Business continues as City Council returns from summer break

By AMY PORTER, Correspondent

WESTFIELD – After a six-week break, City Council members came back together Thursday, ready to ask questions and move some items forward and others to committee. The Council voted unanimously for a STEP (Sustained Traffic Enforcement Program) grant from the Executive Office of Public Safety and Security to the Westfield Police Department in the amount of $6,764, to be added to a previous grant award of $9,534. A second request from the Mayor for immediate consideration of an appropriation of $1,219 from the Personnel, Full-time

[Photo caption] Westfield firefighters Keith Lemon and Mike Albert use a chain saw to cut a hole in the roof of a Pequot Point Road house in order to bring a hose to bear on a smoldering fire in the attic. (Photo by Carl E. Hartdegen)

[Photo caption] John Oleksak spoke at a public hearing at City Council for a zoning

Lake house burns

By CARL E. -

2020 Young Adult Summer Reading Catalog

Mail-A-Book Young Adult Summer Reading Catalog 2020

Fiction *Non-Fiction begins on page 28*

The Absolutely True Diary of a Part-Time Indian by Sherman Alexie. A budding cartoonist growing up on the Spokane Indian Reservation leaves his school on the rez to attend an all-white farm town high school where the only other Indian is the school mascot.

An Abundance of Katherines by John Green. Colin Singleton always falls for girls named Katherine, and he's been dumped by all of them. Letting expectations go and allowing love in are part of Colin's hilarious quest to find his missing piece and avenge dumpees everywhere.

The Adventures of Spider-Man: Sinister Intentions. Spider-Man takes on some of his most fearsome foes--with a few surprises along the way! Also available: The Adventures of Spider-Man: Spectacular Foes

Airhead Novels by Meg Cabot

Airman by Eoin Colfer. Thrown into prison for a murder he didn't commit, Conor passes the time scratching designs for a flying machine and is finally able to build a glider for a daring escape.

Alice in Zombieland by Gena Showalter. Alice Bell must learn to fight the undead to avenge her family and learn to trust Cole Holland who has secrets of his own. Also available: Through the Zombie Looking Glass; The Queen of Zombie Hearts

All American Boys by Jason Reynolds. When sixteen-year-old Rashad is mistakenly accused of stealing, classmate Quinn witnesses his brutal beating at the hands of a police officer who happens to be the older brother of his best friend. Told through Rashad and Quinn's alternating viewpoints.

All the Feels by Danika Stone. When uber-fan Liv's favorite sci-fi movie character is killed off, she and her best friend Xander, an aspiring actor and Steampunk enthusiast, launch a campaign to bring him back from the dead.

Representations and Discourse of Torture in Post 9/11 Television: an Ideological Critique of 24 and Battlestar Galactica

REPRESENTATIONS AND DISCOURSE OF TORTURE IN POST 9/11 TELEVISION: AN IDEOLOGICAL CRITIQUE OF 24 AND BATTLESTAR GALACTICA Michael J. Lewis A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of MASTER OF ARTS May 2008 Committee: Jeffrey Brown, Advisor; Becca Cragin

ABSTRACT

Jeffrey Brown, Advisor

Through their representations of torture, 24 and Battlestar Galactica build on a wider political discourse. Although 24 began production on its first season several months before the terrorist attacks, the show has become a contested space where opinions about the war on terror and related political and military adventures are played out. The producers of Battlestar Galactica similarly use the space of television to raise questions and problematize issues of war. Together, these two television shows reference a long history of discussion of what role torture should play not just in times of war but also in a liberal democracy. This project seeks to understand the multiple ways that ideological discourses have played themselves out through representations of torture in these television programs. This project begins with a critique of the popular discourse of torture as it is portrayed in the popular news media. Using an ideological critique and theories of televisual realism, I argue that complex representations of torture work to both challenge and reify dominant and hegemonic ideas about what torture is and what it does. This project also leverages post-structural analysis and critical gender theory as a way of understanding exactly what ideological messages the programs’ producers are trying to articulate. -

'Nothing but the Truth': Genre, Gender and Knowledge in the US

‘Nothing but the Truth’: Genre, Gender and Knowledge in the US Television Crime Drama 2005-2010 Hannah Ellison Submitted for the degree of Doctor of Philosophy (PhD) University of East Anglia School of Film, Television and Media Studies Submitted January 2014 ©This copy of the thesis has been supplied on condition that anyone who consults it is understood to recognise that its copyright rests with the author and that no quotation from the thesis, nor any information derived therefrom, may be published without the author's prior, written consent.

Abstract

Over the five-year period 2005-2010 the crime drama became one of the most produced genres on American prime-time television and also one of the most routinely ignored academically. This particular cyclical genre influx was notable for the resurgence and reformulating of the amateur sleuth; this time re-emerging as the gifted police consultant, a figure capable of insights that the police could not manage. I term these new shows ‘consultant procedurals’. Consequently, the genre moved away from dealing with the ills of society and instead focused on the mystery of crime. Refocusing the genre gave rise to new issues. Questions are raised about how knowledge is gained and who has the right to it. With the individual consultant spearheading criminal investigation, without official standing, the genre is re-inflected with issues around legitimacy and power. The genre also reengages with age-old questions about the role gender plays in the performance of investigation. With the aim of answering these questions one of the jobs of this thesis is to find a way of analysing genre that accounts for both its larger cyclical, shifting nature and its simultaneously rigid construction of particular conventions. -

Collection of Scripts for Survivors and Paris 7000, 1969-1970

http://oac.cdlib.org/findaid/ark:/13030/c8z60tr2 No online items

Collection of scripts for Survivors and Paris 7000, 1969-1970

Finding aid prepared by UCLA Arts Special Collections staff, 2004; initial EAD encoding by Julie Graham; machine-readable finding aid created by Caroline Cubé. UCLA Library Special Collections Room A1713, Charles E. Young Research Library Box 951575 Los Angeles, CA, 90095-1575 (310) 825-4988 [email protected] Online finding aid last updated 19 November 2016.

Title: Collection of Scripts for Survivors and Paris 7000
Collection number: PASC 258
Contributing Institution: UCLA Library Special Collections
Language of Material: English
Physical Description: 1.0 linear ft. (2 boxes)
Date (inclusive): 1969-1970
Abstract: John Wilder was the producer of the television series The Survivors (1969) and Paris 7000 (1970). The collection consists of scripts and production information related to the two programs.
Physical location: Stored off-site at SRLF. Advance notice is required for access to the collection. Please contact UCLA Library Special Collections for paging information.

Restrictions on Access: Open for research. STORED OFF-SITE AT SRLF. Advance notice is required for access to the collection. Please contact UCLA Library Special Collections for paging information.

Restrictions on Use and Reproduction: Property rights to the physical object belong to the UC Regents. Literary rights, including copyright, are retained by the creators and their heirs. It is the responsibility of the researcher to determine who holds the copyright and pursue the copyright owner or his or her heir for permission to publish where The UC Regents do not hold the copyright. -

The Walking Dead

Trademark Trial and Appeal Board Electronic Filing System. http://estta.uspto.gov

ESTTA Tracking number: ESTTA1080950
Filing date: 09/10/2020

IN THE UNITED STATES PATENT AND TRADEMARK OFFICE BEFORE THE TRADEMARK TRIAL AND APPEAL BOARD

Proceeding: 91217941
Party: Plaintiff Robert Kirkman, LLC
Correspondence Address: JAMES D WEINBERGER, FROSS ZELNICK LEHRMAN & ZISSU PC, 151 WEST 42ND STREET, 17TH FLOOR, NEW YORK, NY 10036, UNITED STATES. Primary Email: [email protected] 212-813-5900
Submission: Plaintiff's Notice of Reliance
Filer's Name: James D. Weinberger
Filer's email: [email protected]
Signature: /s/ James D. Weinberger
Date: 09/10/2020
Attachments: F3676523.PDF(42071 bytes ) F3678658.PDF(2906955 bytes ) F3678659.PDF(5795279 bytes ) F3678660.PDF(4906991 bytes )

IN THE UNITED STATES PATENT AND TRADEMARK OFFICE BEFORE THE TRADEMARK TRIAL AND APPEAL BOARD

ROBERT KIRKMAN, LLC, Opposer, -against- PHILLIP THEODOROU and ANNA THEODOROU, Applicants.

ROBERT KIRKMAN, LLC, Opposer, -against- STEVEN THEODOROU and PHILLIP THEODOROU, Applicants.

Cons. Opp. and Canc. Nos. 91217941 (parent), 91217992, 91218267, 91222005, 91222719, 91227277, 91233571, 91233806, 91240356, 92068261 and 92068613

OPPOSER’S NOTICE OF RELIANCE ON INTERNET DOCUMENTS

Opposer Robert Kirkman, LLC (“Opposer”) hereby makes of record and notifies Applicant-Registrant of its reliance on the following internet documents submitted pursuant to Rule 2.122(e) of the Trademark Rules of Practice, 37 C.F.R. § 2.122(e), TBMP § 704.08(b), and Fed. R. Evid. 401, and authenticated pursuant to Fed. -

Camera Obscura, Camera Lucida: Essays in Honor of Annette Michelson

Annette Michelson’s contribution to art and film criticism over the last three decades has been unparalleled. This volume honors Michelson’s unique legacy with original essays by some of the many film scholars influenced by her work. Some continue her efforts to develop historical and theoretical frameworks for understanding modernist art, while others practice her form of interdisciplinary scholarship in relation to avant-garde and modernist film. The introduction investigates and evaluates Michelson’s work itself. All in some way pay homage to her extraordinary contribution and demonstrate its continued centrality to the field of art and film criticism.

Richard Allen is Associate Professor of Cinema Studies at New York University. Malcolm Turvey teaches Film History at Sarah Lawrence College. They recently collaborated in editing Wittgenstein, Theory and the Arts (London: Routledge, 2001).

Camera Obscura, Camera Lucida: Essays in Honor of Annette Michelson. Edited by Richard Allen and Malcolm Turvey. Film Culture in Transition. Amsterdam University Press. WWW.AUP.NL ISBN 90-5356-494-2 (9 789053 564943)

Front cover illustration: 2001: A Space Odyssey. Courtesy of Photofest. Cover design: Kok Korpershoek, Amsterdam. Lay-out: japes, Amsterdam. isbn 90 5356 494 2 (paperback) nur 652

© Amsterdam University Press, Amsterdam, 2003. All rights reserved. Without limiting the rights under copyright reserved above, no part of this book may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise) without the written permission of both the copyright owner and the author of the book. -

Izombie: Six Feet Under and Rising Volume 3 Free Ebook

iZombie: Six Feet Under and Rising Volume 3. Mike Allred, Jay Stephens, Chris Roberson | 144 pages | 14 Feb 2012 | DC Comics | 9781401233709 | English | United States. iZombie Vol. 3: Six Feet Under & Rising on Apple Books -

Normalizing Monstrous Bodies Through Zombie Media

Your Friendly Neighbourhood Zombie: Normalizing Monstrous Bodies Through Zombie Media by Jessica Wind. A thesis submitted to the Faculty of Graduate and Postdoctoral Affairs in partial fulfillment of the requirements for the degree of Master of Arts in Communication, Carleton University, Ottawa, Ontario © 2016 Jess Wind

Abstract

Our deepest social fears and anxieties are often communicated through the zombie, but these readings aren’t reflected in contemporary zombie media. Increasingly, we are producing a less scary, less threatening zombie, one that is simply struggling to navigate a society in which it doesn’t fit. I begin to rectify the gap between zombie scholarship and contemporary zombie media by mapping the zombie’s shift from “outbreak narratives” to normalized monsters. If the zombie no longer articulates social fears and anxieties, what purpose does it serve? Through the close examination of these “normalized” zombie media, I read the zombie as possessing a non-normative body whose lived experiences reveal and reflect tensions of identity construction, a process that is muddy, in motion, and never easy. We may be done with the uncontrollable horde, but we’re far from done with the zombie and its connection to us and society.

Acknowledgements

I would like to start by thanking Sheryl Hamilton for going on this wild zombie-filled journey with me. You guided me as I worked through questions and gained confidence in my project. Without your endless support, thorough feedback, and shared passion for zombies it wouldn’t have been nearly as successful. Thank you for your honesty, deadlines, and coffee. I would also like to thank Irena Knezevic for being so willing and excited to read this many pages about zombies.