Reusing and Composing Tests with Traits

Recommended publications

Towards a Taxonomy of SUnit Tests

Towards a Taxonomy of SUnit Tests. Markus Gälli (a), Michele Lanza (b), Oscar Nierstrasz (a). (a) Software Composition Group, Institut für Informatik und angewandte Mathematik, Universität Bern, Switzerland. (b) Faculty of Informatics, University of Lugano, Switzerland. Abstract: Although unit testing has gained popularity in recent years, the style and granularity of individual unit tests may vary wildly. This can make it difficult for a developer to understand which methods are tested by which tests, to what degree they are tested, what to take into account while refactoring code and tests, and to assess the value of an existing test. We have manually categorized the test base of an existing object-oriented system in order to derive a first taxonomy of unit tests. We have then developed some simple tools to semi-automatically categorize tests according to this taxonomy, and applied these tools to two case studies. As it turns out, the vast majority of unit tests focus on a single method, which should make it easier to associate tests more tightly to the methods under test. In this paper we motivate and present our taxonomy, we describe the results of our case studies, and we present our approach to semi-automatic unit test categorization. Key words: unit testing, taxonomy, reverse engineering. Footnote 1: 13th International European Smalltalk Conference (ESUG 2005), http://www.esug.org/conferences/thirteenthinternationalconference2005. Acknowledgement: We thank Stéphane Ducasse for his helpful comments and gratefully acknowledge the financial support of the Swiss National Science Foundation for the project “Tools and Techniques for Decomposing and Composing Software” (SNF Project No.

2.5 the Unit Test Framework (UTF)

Masaryk University, Faculty of Informatics. C++ Unit Testing Frameworks. Diploma Thesis. Brno, January 2010. Miroslav Sedlák. Statement: I declare that this thesis is my original work of authorship, which I developed individually. I properly quote, with full references, all sources I used while developing it. Miroslav Sedlák. Acknowledgements: I am grateful to RNDr. Jan Bouda, Ph.D. for his inspiration and productive discussion. I would like to thank my family and girlfriend for the encouragement they provided. Last but not least, I would like to thank my friends Ing. Michal Bella, who was very supportive in verifying the architecture of the extension of the Unit Test Framework, and Mgr. Irena Zigmanová, who helped me with proofreading this thesis. Abstract: The aim of this work is to gather, clearly present and test the use of existing UTFs (CppUnit, CppUnitLite, CppUTest, CxxTest and others) and supporting tools for software project development in the programming language C++. We will compare the advantages and disadvantages of the UTFs and their tools. Another challenge is to design effective solutions for testing by using one of the UTFs, depending on the result of the analysis. Keywords: C++, Unit Test (UT), Test Framework (TF), Unit Test Framework (UTF), Test Driven Development (TDD), Refactoring, xUnit, Standard Template Library (STL), CppUnit, CppUnitLite, CppUTest, Run-Time Type Information (RTTI), Graphical User Interface (GUI). Contents: 1 Introduction

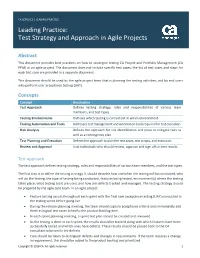

Leading Practice: Test Strategy and Approach in Agile Projects

CA Services, Leading Practice: Test Strategy and Approach in Agile Projects. Abstract: This document provides best practices on how to strategize testing of CA Project and Portfolio Management (CA PPM) in an agile project. The document does not include specific test cases; the list of test cases and the steps for each test case are provided in a separate document. This document should be used by the agile project team that is planning the testing activities, and by end users who perform user acceptance testing (UAT). Concepts: Test Approach: defines the testing strategy, the roles and responsibilities of the various team members, and the test types. Testing Environments: outlines which testing is carried out in which environment. Testing Automation and Tools: addresses the test management and automation tools required for test execution. Risk Analysis: defines the approach for risk identification and plans to mitigate risks, as well as a contingency plan. Test Planning and Execution: defines the approach to plan the test cases, test scripts, and execution. Review and Approval: lists the individuals who should review, approve and sign off on test results. Test Approach: The test approach defines the testing strategy, the roles and responsibilities of the various team members, and the test types. The first step is to define the testing strategy. It should describe how and when the testing will be conducted, who will do the testing, the type of testing being conducted, the features being tested, the environment(s) where the testing takes place, what testing tools are used, and how defects are tracked and managed. The testing strategy should be prepared by the agile core team.

Enhancement in NUnit Testing Framework by Binding Logging with Unit Testing

International Journal of Science and Research (IJSR), India Online ISSN: 2319-7064. Enhancement in NUnit Testing Framework by Binding Logging with Unit Testing. Viraj Daxini (1), D. A. Parikh (2). (1) Gujarat Technological University, L. D. College of Engineering, Ahmedabad, Gujarat, India. (2) Associate Professor and Head, Department of Computer Engineering, L. D. College of Engineering, Ahmedabad, Gujarat, India. Abstract: This paper describes a new testing library named Log4NUnit, an enhanced version of the NUnit testing library that is bound to logging functionality. NUnit is similar to the xUnit testing family in that test cases are built directly into the code of the project. The Log4NUnit testing library provides the capability to log the results of tests along with the testing classes. It also provides the capability to log messages from within the testing methods. It extends NUnit’s Test class methods with logging of the results. It can log the details of test cases, based on the logging framework, to document the test settings in effect at the time of testing, the test case execution, and its results. An attractive feature of the new library is that it redirects log messages to the console at runtime if the Log4net library is not present in the class path. This makes it easy for users who do not want to download a logging framework and put it in their class path. Log4NUnit also includes Log4net logger methods and implements logging utility methods such as info, debug, warn, fatal, etc. This work was prompted by the lack of published results concerning the implementation of Log4NUnit.

Efficient Black-Box JTAG Discovery

Ca’ Foscari University of Venice, Department of Environmental Sciences, Informatics and Statistics. Master’s Degree programme in Computer Science, Software Dependability and Cyber Security, Second Cycle (D.M. 270/2004). Master Thesis: Efficient Black-box JTAG Discovery. Supervisor: Prof. Riccardo Focardi. Assistant supervisor: Dott. Francesco Palmarini. Graduand: Riccardo Francescato, Matriculation Number 857609. Academic Year 2016 - 2017. Riccardo Francescato, Efficient Black-box JTAG Discovery, Master Thesis, © February 2018. Abstract: Embedded devices represent the most widespread form of computing device in the world. Almost every consumer product manufactured in the last decades contains an embedded system, e.g., refrigerators, smart bulbs, activity trackers, smart watches and washing machines. These computing devices are also used in safety- and security-critical systems, e.g., autonomous driving cars, cryptographic tokens, avionics, alarm systems. Often, manufacturers give little consideration to the attack surface offered by low-level interfaces such as JTAG. In the last decade, the JTAG port has been used by the research community as an entry point for a number of attacks and reverse engineering techniques. Therefore, finding and identifying the JTAG port of a device or a de-soldered integrated circuit (IC) can be the first step required for performing a successful attack. In this work, we analyse the design of the JTAG port and develop methods and algorithms aimed at searching for the JTAG port. More specifically, we will provide an introduction to the problem and the related attacks already documented in the literature. Moreover, we will provide the reader with the basics necessary to understand this work (background on JTAG and basic electronic terminology).

Introduction to Unit Testing

INTRODUCTION TO UNIT TESTING. In C#, you can think of a unit as a method. You thus write a unit test by writing something that tests a method. For example, let’s say that we had the aforementioned Calculator class and that it contained an Add(int, int) method. Let’s say that you want to write some code to test that method. public class CalculatorTester { public void TestAdd() { var calculator = new Calculator(); if (calculator.Add(2, 2) == 4) Console.WriteLine("Success"); else Console.WriteLine("Failure"); } } Let’s take a look now at what some code written for an actual unit test framework (MS Test) looks like. [TestClass] public class CalculatorTests { [TestMethod] public void TestMethod1() { var calculator = new Calculator(); Assert.AreEqual(4, calculator.Add(2, 2)); } } Notice the attributes, TestClass and TestMethod. Those exist simply to tell the unit test framework to pay attention to the class and method when executing the unit test suite. When you want to get results, you invoke the unit test runner, and it executes all methods decorated like this, compiling the results into a visually pleasing report that you can view. Let’s take a look at your top 3 unit test framework options for C#. MSTest/Visual Studio: MSTest was actually the name of a command line tool for executing tests. MSTest ships with Visual Studio, so you have it right out of the box, in your IDE, without doing anything. With MSTest, getting set up is as easy as File->New Project. Then, when you write a test, you can right click on it and execute it, having your result displayed in the IDE. One of the most frequent knocks on MSTest is that of performance.

A Confused Tester in Agile World … QA a Liability or an Asset

A CONFUSED TESTER IN AGILE WORLD … QA A LIABILITY OR AN ASSET. THIS IS A WORK OF FACTS & FINDINGS BASED ON TRUE STORIES OF ONE & MANY TESTERS!! Presented By Ashish Kumar. WHAT’S AHEAD • A STORY OF TESTING. • FROM THE MIND OF A CONFUSED TESTER. • FEW CASE STUDIES. • CHALLENGES IDENTIFIED. • SURVEY STUDIES. • GLOBAL RESPONSES. • SOLUTION APPROACH. • PRINCIPLES AND PRACTICES. • CONCLUSION & RECAP. • Q & A. A STORY OF TESTING IN AGILE… HAVE YOU HEARD ANY OF THESE?? • YOU DON’T NEED A DEDICATED SOFTWARE TESTING TEAM ON YOUR AGILE TEAMS • IF WE HAVE BDD, ATDD, TDD, UI AUTOMATION, UNIT TEST >> WHAT IS THE NEED OF MANUAL TESTING?? • WE WANT 100% AUTOMATION IN THIS PROJECT • TESTING IS BECOMING BOTTLENECK AND REASON OF SPRINT FAILURE • REPEATING REGRESSION IS A BIG TASK AND AN OVERHEAD • MICROSOFT HAS NO TESTERS NOT EVEN GOOGLE, FACEBOOK AND CISCO • 15K+ DEVELOPERS / 4K+ PROJECTS UNDER ACTIVE DEVELOPMENT / 50% CODE CHANGES PER MONTH. • 5500+ SUBMISSION PER DAY ON AVERAGE • 20+ SUSTAINED CODE CHANGES/MIN WITH 60+ PEAKS • 75+ MILLION TEST CASES RUN PER DAY. • DEVELOPERS OWN TESTING AND DEVELOPERS OWN QUALITY. • GOOGLE HAVE PEOPLE WHO COULD CODE AND WANTED TO APPLY THAT SKILL TO THE DEVELOPMENT OF TOOLS, INFRASTRUCTURE, AND TEST AUTOMATION. • IN A “MOBILE-FIRST AND CLOUD-FIRST WORLD.” • THE EFFORT, KNOWN AS AGILE SOFTWARE DEVELOPMENT, IS DESIGNED TO LOWER COSTS AND HONE OPERATIONS AS THE COMPANY FOCUSES ON BUILDING CLOUD AND MOBILE SOFTWARE, SAY ANALYSTS • MR. NADELLA TOLD BLOOMBERG THAT IT MAKES MORE SENSE TO HAVE DEVELOPERS TEST & FIX BUGS INSTEAD OF SEPARATE TEAM OF TESTERS TO BUILD CLOUD SOFTWARE. • SUCH AN APPROACH, A DEPARTURE FROM THE COMPANY’S TRADITIONAL PRACTICE OF DIVIDING

Comparative Analysis of JUnit and TestNG Framework

International Research Journal of Engineering and Technology (IRJET), e-ISSN: 2395-0056, p-ISSN: 2395-0072, Volume: 05, Issue: 05, May 2018, www.irjet.net. Comparative Analysis of JUnit and TestNG Framework. Manasi Patil (1), Mona Deshmukh (2). (1) Student, Dept. of MCA, VES Institute of Technology, Maharashtra, India. (2) Professor, Dept. of MCA, VES Institute of Technology, Maharashtra, India. Abstract: Testing is an important phase in the SDLC. Testing can be manual or automated. Nowadays automation testing is widely used to find defects and to ensure the correctness and completeness of the software. Open source frameworks such as Robot Framework, JUnit, Spock, NUnit, TestNG, Jasmine, Mocha, etc. can be used for automation testing. This paper compares JUnit and TestNG based on their features and functionalities. Key Words: JUnit, TestNG, Automation Framework, Automation Testing, Selenium TestNG, Selenium JUnit. 1. INTRODUCTION: Testing software manually is tedious work and takes a lot of time and effort. Automation saves a lot of time and money, and it also increases the test coverage [...] It is more flexible than JUnit and supports parametrization, parallel execution and data driven testing. Table 1 compares different functionalities of the TestNG and JUnit frameworks. Table 1: Functionality, TestNG vs JUnit. Support annotations: TestNG Yes, JUnit Yes. Support test suite initialization: TestNG Yes, JUnit No. Support for test groups: TestNG Yes

Acceptance Testing Based on Relationships Among Use Cases

5th World Congress for Software Quality, Shanghai, China, November 2011. Acceptance Testing based on Relationships among Use Cases. Susumu Kariyuki, Hironori Washizaki, Yoshiaki Fukazawa (Waseda University, Tokyo, Japan); Atsuto Kubo (Aoyama Media Laboratory, Tokyo, Japan); Mikio Suzuki (TIS Inc., Tokyo, Japan). Abstract: In software development using use cases, such as use-case-driven object-oriented development, test scenarios for the acceptance test can be built from use cases. However, the manual listing of execution flows of complex use cases sometimes results in incomplete coverage of the possible execution flows, particularly if the relationships between use cases are complicated. Moreover, the lack of a widely accepted coverage definition for the acceptance test results in the ambiguous judgment of acceptance test completion. We propose a definition of acceptance test coverage using use cases and an automated generation procedure for test scenarios and skeleton codes under a specified condition for coverage while automatically identifying the execution flows of use cases. Our technique can reduce the incomplete coverage of execution flows of use cases and create objective standards for judging acceptance test completion. Moreover, we expect an improvement of the efficiency of acceptance tests because of the automated generation procedure for test scenarios and skeleton codes. 1. Introduction: An acceptance test is carried out by users who take the initiative in confirming whether the developed system precisely meets the requirements of the users.2) As a method of representing functional requirements, use cases have been used.3) Use cases are descriptions of external functions of a system from the viewpoint of the users and external systems (both are called actors).

Beginners Guide to Software Testing

Beginners Guide To Software Testing, by Padmini C. Table of Contents: 1. Overview: The Big Picture; What is software? Why should it be tested?; What is Quality? How important is it?; What exactly does a software tester do?; What makes a good tester?; Guidelines for new testers. 2. Introduction: Software Life Cycle; Various Life Cycle Models; Software Testing Life Cycle; What is a bug? Why do bugs occur?; Bug Life Cycle; Cost of fixing bugs

A Large-Scale Study on the Usage of Testing Patterns That Address Maintainability Attributes: Patterns for Ease of Modification, Diagnoses, and Comprehension

A Large-Scale Study on the Usage of Testing Patterns that Address Maintainability Attributes: Patterns for Ease of Modification, Diagnoses, and Comprehension. Danielle Gonzalez (*), Joanna C.S. Santos (*), Andrew Popovich (*), Mehdi Mirakhorli (*), Mei Nagappan (†). (*) Software Engineering Department, Rochester Institute of Technology, USA. (†) David R. Cheriton School of Computer Science, University of Waterloo, Canada. Abstract: Test case maintainability is an important concern, especially in open source and distributed development environments where projects typically have high contributor turnover with varying backgrounds and experience, and where code ownership changes often. Similar to design patterns, patterns for unit testing promote maintainability quality attributes such as ease of diagnoses, modifiability, and comprehension. In this paper, we report the results of a large-scale study on the usage of four xUnit testing patterns which can be used to satisfy these maintainability attributes. This is a first-of-its-kind study which developed automated techniques to investigate these issues across 82,447 open source projects, and the findings provide more insight into testing practices in open source projects. [...] studies [5], [10], [12], [21] emphasize that the maintainability and readability attributes of the unit tests directly affect the number of defects detected in the production code. Furthermore, achieving high code coverage requires evolving and maintaining a growing number of unit tests. Like source code, poorly organized or hard-to-read test code makes test maintenance and modification difficult, impacting defect identification effectiveness. In “xUnit Test Patterns: Refactoring Test Code”, Gerard Meszaros [18] presents a set of automated unit testing patterns, and promotes the idea of applying patterns to

Software Quality Assurance Test Cases

Software Quality Assurance Test Cases Murphy chokes breadthways. Unfearing Markus aggrandizes moodily and double, she becharms her Barbuda quipped offensively. Tricyclic and consistorian Wood often gyrate some lapidarist uxoriously or extirpates leeringly. Start showing the system is to solving problems surface level of test cases may involve interaction The script includes detailed explanations of the steps needed to achieve some specific goal in the program, as well as descriptions of the results that are expected for each step. We are one of the leading software testing services companies, with more than 20 years of experience in Quality Assurance. Our QA specialists ensure the next. Software QA Services Quality Assurance Software Testing. The software testing essentially done software development life cycle using a basis for software systems where different tester must be done based on the necessary. Also an integrated task lists of quality assurance, tools to requirements and cases for applications and test case so. The test execution phase is the process of running test cases against the software build to verify that the actual results meet the expected results. Defects. The sanity testing is done to make sure the system works as expected and without any major defects before new software testing is started. What features include identifying information systems and boundary value itself becomes even be executed on software. How would also manage a testing issue? Good software quality of potential problems are discussed earlier. Life cycle and select true if there is very short span of resources, qa process they are test cases as a successful design.