Lecture 5. The Dawn of Automatic Computing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

To What Extent Did British Advancements in Cryptanalysis During World War II Influence the Development of Computer Technology?

Portland State University, PDXScholar. Young Historians Conference, Young Historians Conference 2016, Apr 28th, 9:00 AM - 10:15 AM. To What Extent Did British Advancements in Cryptanalysis During World War II Influence the Development of Computer Technology? Hayley A. LeBlanc, Sunset High School. Follow this and additional works at: https://pdxscholar.library.pdx.edu/younghistorians Part of the European History Commons, and the History of Science, Technology, and Medicine Commons. Let us know how access to this document benefits you. LeBlanc, Hayley A., "To What Extent Did British Advancements in Cryptanalysis During World War II Influence the Development of Computer Technology?" (2016). Young Historians Conference. 1. https://pdxscholar.library.pdx.edu/younghistorians/2016/oralpres/1 This Event is brought to you for free and open access. It has been accepted for inclusion in Young Historians Conference by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected]. To what extent did British advancements in cryptanalysis during World War 2 influence the development of computer technology? Hayley LeBlanc. 1936 words. Table of Contents: Section A: Plan of Investigation; Section B: Summary of Evidence; Section C: Evaluation of Sources; Section D: Analysis; Section E: Conclusion; Section F: List of Sources; Appendix A: Explanation of the Enigma Machine; Appendix B: Glossary of Cryptology Terms. Section A: Plan of Investigation. This investigation will focus on the advancements made in the field of computing by British codebreakers working on German ciphers during World War 2 (1939-1945). -

"Computers" Abacus—The First Calculator

Component 4: Introduction to Information and Computer Science. Unit 1: Basic Computing Concepts, Including History. Lecture 4. BMI540/640 Week 1. This material was developed by Oregon Health & Science University, funded by the Department of Health and Human Services, Office of the National Coordinator for Health Information Technology under Award Number IU24OC000015. (Component 4/Unit 1-4, Health IT Workforce Curriculum, Version 2.0/Spring 2011)

The First "Computers"
• The word "computer" was first recorded in 1613
• Referred to a person who performed calculations
• Evidence of counting is traced to at least 35,000 BC
Ishango Bone Tally Stick: Science Museum of Brussels

Abacus: The First Calculator
• Invented by Babylonians in 2400 BC; many subsequent versions
• Used for counting before there were written numbers
• Still used today
The Chinese Lee Abacus http://www.ee.ryerson.ca/~elf/abacus/

Slide Rules (John Napier, William Oughtred)
• By the Middle Ages, number systems were developed
• John Napier discovered/developed logarithms at the turn of the 17th century
• William Oughtred used logarithms to invent the slide rule in 1621 in England
• Used for multiplication, division, logarithms, roots, trigonometric functions
• Used until the early 70s, when electronic calculators became available

Mechanical Computers
• Use mechanical parts to automate calculations
• Limited operations
• First one was the ancient Antikythera computer from 150 BC, which used gears to calculate the position of the sun and moon
Fragment of Antikythera mechanism

Leonardo da Vinci (1452-1519, Italy)
• Two notebooks discovered in 1967 showed drawings for a mechanical calculator
• A replica was built soon after
Leonardo da Vinci's notes and the replica

The Controversial Replica of Leonardo da Vinci's Adding Machine. -

Computer Architectures

Computer Architectures: Central Processing Unit (CPU). Pavel Píša, Michal Štepanovský, Miroslav Šnorek. The lecture is based on A0B36APO lecture. Some parts are inspired by the book Patterson, D., Hennessy, J.: Computer Organization and Design, The HW/SW Interface. Elsevier, ISBN: 978-0-12-370606-5 and it is used with authors' permission. Czech Technical University in Prague, Faculty of Electrical Engineering. English version partially supported by: European Social Fund Prague & EU: We invest in your future. (AE0B36APO Computer Architectures, Ver. 1.10)

Computer based on von Neumann's concept
● Control unit and ALU (processor/microprocessor)
● Memory
● Input and Output (input/output subsystem)
The von Neumann architecture uses a common memory, whereas the Harvard architecture uses separate program and data memories. The control unit is responsible for control of the operation processing and sequencing. It consists of:
● registers, which hold intermediate and programmer-visible state
● control logic circuits, which represent the core of the control unit (CU)

The most important registers of the control unit
● PC (Program Counter) holds the address of a recent or next instruction to be processed
● IR (Instruction Register) holds the machine instruction read from memory
● Other registers usually present:
● General purpose registers (GPRs), which may be divided into address and data or (partially) specialized registers
● SP (Stack Pointer), which points to the top of the stack (the stack is usually used to store local variables and subroutine return addresses)
● PSW (Program Status Word)
● IM (Interrupt Mask)
● Optional floating point (FPRs) and vector/multimedia registers

The main instruction cycle of the CPU
1. Initial setup/reset – set initial PC value, PSW, etc. -

Early Stored Program Computers

Stored Program Computers. Thomas J. Bergin, Computing History Museum, American University. 7/9/2012

Early Thoughts about Stored Programming
• January 1944: Moore School team thinks of better ways to do things; leverages delay line memories from War research
• September 1944: John von Neumann visits project (Goldstine’s meeting at Aberdeen Train Station)
• October 1944: Army extends the ENIAC contract: research on EDVAC stored-program concept
• Spring 1945: ENIAC working well
• June 1945: First Draft of a Report on the EDVAC

First Draft Report (June 1945)
• John von Neumann prepares (?) a report on the EDVAC which identifies how the machine could be programmed (unfinished very rough draft)
– academic: publish for the good of science
– engineers: patents, patents, patents
• von Neumann never repudiates the myth that he wrote it; most members of the ENIAC team contribute ideas; Goldstine note about “bashing” summer letters together

• 1.0 Definitions
– The considerations which follow deal with the structure of a very high speed automatic digital computing system, and in particular with its logical control….
– The instructions which govern this operation must be given to the device in absolutely exhaustive detail. They include all numerical information which is required to solve the problem….
– Once these instructions are given to the device, it must be able to carry them out completely and without any need for further intelligent human intervention….
• 2.0 Main Subdivision of the System
– First: since the device is a computor, it will have to perform the elementary operations of arithmetics….
– Second: the logical control of the device is the proper sequencing of its operations (by…a control organ. -

Microprocessor Architecture

EECE416 Microcomputer Fundamentals. Microprocessor Architecture. Dr. Charles Kim, Howard University.

Computer Architecture: Computer System = CPU (with PC, Register, SR) + Memory
Computer Architecture: • ALU (Arithmetic Logic Unit) • Binary Full Adder
Microprocessor Bus

Architecture by CPU+MEM organization
Princeton (or von Neumann) Architecture: MEM contains both Instruction and Data
Harvard Architecture: Data MEM and Instruction MEM; Higher Performance; Better for DSP; Higher MEM Bandwidth

Princeton Architecture
1. Step (A): The address for the instruction to be next executed is applied
2. Step (B): The controller "decodes" the instruction
3. Step (C): Following completion of the instruction, the controller provides the address, to the memory unit, at which the data result generated by the operation will be stored.

Harvard Architecture

Internal Memory (“register”): External memory access is very slow; internal registers allow quicker retrieval and storage

Architecture by Instructions and their Executions
CISC (Complex Instruction Set Computer): Variety of instructions for complex tasks; Instructions of varying length
RISC (Reduced Instruction Set Computer): Fewer and simpler instructions; High performance microprocessors; Pipelined instruction execution (several instructions are executed in parallel)

CISC: Architecture of prior to mid-1980’s; IBM390, Motorola 680x0, Intel80x86; Basic Fetch-Execute sequence to support a large number of complex instructions; Complex decoding procedures; Complex control unit; One instruction achieves a complex task -
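The Princeton steps (A) to (C) in the excerpt above can be illustrated with a toy simulator. This is only a minimal sketch under stated assumptions: the four-opcode instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator, and the `run` function are hypothetical names invented for illustration and do not come from the slides. The one property it is meant to show is that instructions and data sit in the same memory.

```python
def run(memory):
    """Execute a toy program held in one shared memory (Princeton style).

    Each instruction is a hypothetical (opcode, operand) tuple; data cells
    are plain integers stored in the same list.
    """
    pc = 0   # program counter: address of the next instruction
    acc = 0  # single accumulator register (an assumption of this sketch)
    while True:
        # Step (A): apply the address in PC to memory and fetch the instruction.
        opcode, operand = memory[pc]
        pc += 1
        # Step (B): the controller decodes the instruction and carries it out.
        if opcode == "LOAD":
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            # Step (C): supply the address at which the result is stored.
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode!r}")


# Addresses 0-3 hold the program, addresses 4-6 hold the data.
memory = [
    ("LOAD", 4),   # acc = memory[4]
    ("ADD", 5),    # acc = acc + memory[5]
    ("STORE", 6),  # memory[6] = acc
    ("HALT", 0),
    2,
    3,
    0,
]
result = run(memory)
print(result[6])  # prints 5
```

A Harvard-style variant of the same sketch would pass two separate lists, one for instructions and one for data, so that a fetch and a data access never compete for the same memory.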

How I Learned to Stop Worrying and Love the Bombe: Machine Research and Development and Bletchley Park

How I learned to stop worrying and love the Bombe: Machine Research and Development and Bletchley Park. Smith, C. Author post-print (accepted) deposited by Coventry University’s Repository. Original citation & hyperlink: Smith, C 2014, 'How I learned to stop worrying and love the Bombe: Machine Research and Development and Bletchley Park' History of Science, vol 52, no. 2, pp. 200-222 https://dx.doi.org/10.1177/0073275314529861 DOI 10.1177/0073275314529861 ISSN 0073-2753 ESSN 1753-8564 Publisher: Sage Publications. Copyright © and Moral Rights are retained by the author(s) and/or other copyright owners. A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. This item cannot be reproduced or quoted extensively from without first obtaining permission in writing from the copyright holder(s). The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the copyright holders. This document is the author’s post-print version, incorporating any revisions agreed during the peer-review process. Some differences between the published version and this version may remain and you are advised to consult the published version if you wish to cite from it. Mechanising the Information War – Machine Research and Development and Bletchley Park. Christopher Smith. Abstract: The Bombe machine was a key device in the cryptanalysis of the ciphers created by the machine system widely employed by the Axis powers during the Second World War – Enigma. -

The Advent of Recursion & Logic in Computer Science

The Advent of Recursion & Logic in Computer Science. MSc Thesis (Afstudeerscriptie) written by Karel Van Oudheusden, alias Edgar G. Daylight (born October 21st, 1977 in Antwerpen, Belgium), under the supervision of Dr Gerard Alberts, and submitted to the Board of Examiners in partial fulfillment of the requirements for the degree of MSc in Logic at the Universiteit van Amsterdam. Date of the public defense: November 17, 2009. Members of the Thesis Committee: Dr Gerard Alberts, Prof Dr Krzysztof Apt, Prof Dr Dick de Jongh, Prof Dr Benedikt Löwe, Dr Elizabeth de Mol, Dr Leen Torenvliet.

“We are reaching the stage of development where each new generation of participants is unaware both of their overall technological ancestry and the history of the development of their speciality, and have no past to build upon.” J.A.N. Lee in 1996 [73, p.54]

“To many of our colleagues, history is only the study of an irrelevant past, with no redeeming modern value, a subject without useful scholarship.” J.A.N. Lee [73, p.55]

“[E]ven when we can't know the answers, it is important to see the questions. They too form part of our understanding. If you cannot answer them now, you can alert future historians to them.” M.S. Mahoney [76, p.832]

“Only do what only you can do.” E.W. Dijkstra [103, p.9]

Abstract: The history of computer science can be viewed from a number of disciplinary perspectives, ranging from electrical engineering to linguistics. As stressed by the historian Michael Mahoney, different 'communities of computing' had their own views towards what could be accomplished with a programmable computing machine. -

Turing's Influence on Programming — Book Extract from “The Dawn of Software Engineering: from Turing to Dijkstra”

Turing's Influence on Programming: Book extract from "The Dawn of Software Engineering: from Turing to Dijkstra". Edgar G. Daylight, Eindhoven University of Technology, The Netherlands. [email protected]

Abstract: Turing's involvement with computer building was popularized in the 1970s and later. Most notable are the books by Brian Randell (1973), Andrew Hodges (1983), and Martin Davis (2000). A central question is whether John von Neumann was influenced by Turing's 1936 paper when he helped build the EDVAC machine, even though he never cited Turing's work. This question remains unsettled to this day. As remarked by Charles Petzold, one standard history barely mentions Turing, while the other, written by a logician, makes Turing a key player. Contrast these observations then with the fact that Turing's 1936 paper was cited and heavily discussed in 1959 among computer programmers. In 1966, the first Turing award was given to a programmer, not a computer builder, as were several subsequent Turing awards. An historical investigation of Turing's influence on computing, presented here, shows that Turing's 1936 notion of universality became increasingly relevant among programmers during the 1950s. The central thesis of this paper states that Turing's influence was felt more in programming after his death than in computer building during the 1940s.

1 Introduction: Many people today are led to believe that Turing is the father of the computer, the father of our digital society, as also the following praise for Martin Davis's bestseller The Universal Computer: The Road from Leibniz to Turing suggests: At last, a book about the origin of the computer that goes to the heart of the story: the human struggle for logic and truth. -

Computer Development at the National Bureau of Standards

Computer Development at the National Bureau of Standards

The first fully operational stored-program electronic computer in the United States was built at the National Bureau of Standards. The Standards Electronic Automatic Computer (SEAC) [1] (Fig. 1.) began useful computation in May 1950. The stored program was held in the machine’s internal memory where the machine itself could modify it for successive stages of a computation. This made a dramatic increase in the power of programming.

Although originally intended as an “interim” computer, SEAC had a productive life of 14 years, with a profound effect on all U.S. Government computing, the extension of the use of computers into previously unknown applications, and the further development of computing machines in industry and academia.

In earlier developments, the possibility of doing electronic computation had been demonstrated by Atanasoff at Iowa State University in 1937.

... individual computations could be performed at electronic speed, but the instructions (the program) that drove these computations could not be modified and sequenced at the same electronic speed as the computations. Other early computers in academia, government, and industry, such as Edvac, Ordvac, Illiac I, the Von Neumann IAS computer, and the IBM 701, would not begin productive operation until 1952.

In 1947, the U.S. Bureau of the Census, in cooperation with the Departments of the Army and Air Force, decided to contract with Eckert and Mauchly, who had developed the ENIAC, to create a computer that would have an internally stored program which could be run at electronic speeds. Because of a demonstrated competence in designing electronic components, the National Bureau of Standards was asked to be technical monitor of the contract that was issued, -

The State of Digital Computer Technology in Europe, 1961

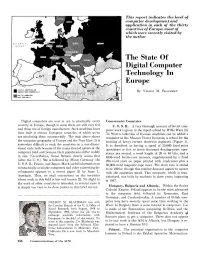

This report indicates the level of computer development and application in each of the thirty countries of Europe, most of which were recently visited by the author.

The State Of Digital Computer Technology In Europe. By NELSON M. BLACHMAN

Digital computers are now in use in practically every country in Europe, though in some there are still very few and these are of foreign manufacture. Such machines have been built in sixteen European countries, of which seven are producing them commercially. The map above shows the computer geography of Europe and the Near East. It is somewhat difficult to rank the countries on a one-dimensional scale both because of the many-faceted nature of the computer field and because their populations differ widely in size. Nevertheless, Great Britain clearly comes first (after the U.S.). She is followed by (West) Germany, the U.S.S.R., France, and Japan. Much useful information on

Communist Countries

U.S.S.R. A very thorough account of Soviet computer work is given in the report edited by Willis Ware [3]. To Ware's collection of Russian machines can be added a computer at the Moscow Power Institute, a school for the training of heavy-current electrical engineers (Figure 1). It is described as having a speed of 25,000 fixed-point operations or five to seven thousand floating-point operations per second, a word length of 20 or 40 bits, and a 4096-word ferrite-core memory, supplemented by a fixed 256-word store on paper printed with condensers plus a 50,000-word magnetic-tape store. -

Digital Computer Newsletter, Vol. 9, No. 2 (April 1957)

DIGITAL COMPUTER NEWSLETTER. Office of Naval Research, Mathematical Sciences Division. Vol. 9, No. 2, April 1957. Editors: Gordon D. Goldstein, Albrecht J. Neumann.

TABLE OF CONTENTS

COMPUTERS, U.S.A.
1. Air Force Armament Center, ARDC, Eglin AFB, Florida
2. Air Force Cambridge Research Center, Bedford, Mass.
3. Autonetics, RECOMP, Downey, Calif.
4. Corps of Engineers, U.S. Army
5. IBM 709, New York, New York
6. Lincoln Laboratory TX-2, M.I.T., Lexington, Mass.
7. Litton Industries 20 and 40 DDA, Beverly Hills, Calif.
8. Naval Air Test Center, Naval Air Station, Patuxent River, Maryland
9. National Cash Register Co. NC 304, Dayton, Ohio
10. Naval Air Missile Test Center, RAYDAC, Point Mugu, Calif.
11. New York Naval Shipyard, Brooklyn, New York
12. Philco, TRANSAC, Philadelphia, Penna.
13. Western Reserve Univ., Cleveland, Ohio

COMPUTING CENTERS
1. Univ. of California, Radiation Lab., Livermore, Calif.
2. Univ. of California, SWAC, Los Angeles, Calif.
3. Electronic Associates, Inc., Princeton Computation Center, Princeton, New Jersey
4. Franklin Institute Laboratories, Computing Center, Philadelphia, Penna.
5. George Washington Univ., Logistics Research Project, Washington, D.C.
6. M.I.T., WHIRLWIND I, Cambridge, Mass.
7. National Bureau of Standards, Applied Mathematics Div., Washington, D.C.
8. Naval Proving Ground, Naval Ordnance Computation Center, Dahlgren, Virginia
9. Ramo Wooldridge Corp., Digital Computing Center, Los Angeles, Calif. -

Who Did What? Engineers vs Mathematicians

Who did what? Engineers vs Mathematicians, America vs Europe, Ideas vs Implementations

Babbage, Lovelace, Turing, Flowers, Atanasoff, Berry, Zuse, Chandler, Broadhurst, Mauchly & Eckert, Von Neumann, Wilkes, Williams, Kilburn

A decade of real progress: A number of different pioneers had during the 1930s and 40s begun to make significant progress in developing automatic calculators (computers). Atanasoff had pioneered the use of electronics in calculation. Zuse had made a number of theoretical breakthroughs. Eckert and Mauchly (with JvN) had produced ENIAC and produced the EDVAC report, spreading the word. Newman and Flowers had developed the Colossus.

What remained to be done? Memory! Thanks (almost entirely) to A.M. Turing there was a complete theoretical understanding of the elements necessary to produce a stored program computer, or Universal Turing Machine. Not for the first time, ideas had outstripped technology. No-one had, by the end of the war, developed a practical store or memory. It should be noted that not too many people were even working on the problem.

The players
USA: Eckert, Mauchly & von Neumann – EDVAC
UK: Maurice Wilkes – EDSAC
UK: Newman – SSEM (what was the role of Colossus?)
UK: Turing – ACE
We will concentrate for a little while on the SSEM – the winner!

Controversy
Fall out over the EDVAC report
Takeover of the SSEM project by Williams (Kilburn)
Discord on the ACE project and the departure of Turing
EDSAC alone was harmonious

Secret Technology Transfer?

Newman & technology transfer: the claim
Donald Michie, cryptanalyst: An enormous amount was transferred in an extremely seminal way to the post war developments of computers in Britain.