New Controls for Combining Images in Correspondence

Recommended publications

UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
| --- | --- | --- | --- | --- | --- |
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |

008888345435 -

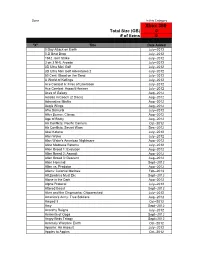

Xbox 360 Total Size (GB) 0 # of Items 0

Done In this Category: Xbox 360 · Total Size (GB): 0 · # of items: 0

| "X" | Title | Date Added |
| --- | --- | --- |
| | 0 Day Attack on Earth | July--2012 |
| | 0-D Beat Drop | July--2012 |
| | 1942 Joint Strike | July--2012 |
| | 3 on 3 NHL Arcade | July--2012 |
| | 3D Ultra Mini Golf | July--2012 |
| | 3D Ultra Mini Golf Adventures 2 | July--2012 |
| | 50 Cent: Blood on the Sand | July--2012 |
| | A World of Keflings | July--2012 |
| | Ace Combat 6: Fires of Liberation | July--2012 |
| | Ace Combat: Assault Horizon | July--2012 |
| | Aces of Galaxy | Aug--2012 |
| | Adidas miCoach (2 Discs) | Aug--2012 |
| | Adrenaline Misfits | Aug--2012 |
| | Aegis Wings | Aug--2012 |
| | Afro Samurai | July--2012 |
| | After Burner: Climax | Aug--2012 |
| | Age of Booty | Aug--2012 |
| | Air Conflicts: Pacific Carriers | Oct--2012 |
| | Air Conflicts: Secret Wars | Dec--2012 |
| | Akai Katana | July--2012 |
| | Alan Wake | July--2012 |
| | Alan Wake's American Nightmare | Aug--2012 |
| | Alice Madness Returns | July--2012 |
| | Alien Breed 1: Evolution | Aug--2012 |
| | Alien Breed 2: Assault | Aug--2012 |
| | Alien Breed 3: Descent | Aug--2012 |
| | Alien Hominid | Sept--2012 |
| | Alien vs. Predator | Aug--2012 |
| | Aliens: Colonial Marines | Feb--2013 |
| | All Zombies Must Die | Sept--2012 |
| | Alone in the Dark | Aug--2012 |
| | Alpha Protocol | July--2012 |
| | Altered Beast | Sept--2012 |
| | Alvin and the Chipmunks: Chipwrecked | July--2012 |
| | America's Army: True Soldiers | Aug--2012 |
| | Amped 3 | Oct--2012 |
| | Amy | Sept--2012 |
| | Anarchy Reigns | July--2012 |
| | Ancients of Ooga | Sept--2012 |
| | Angry Birds Trilogy | Sept--2012 |
| | Anomaly Warzone Earth | Oct--2012 |
| | Apache: Air Assault | July--2012 |
| | Apples to Apples | Oct--2012 |
| | Aqua | Oct--2012 |
| | Arcana Heart 3 | July--2012 |
| | Arcania Gothica | July--2012 |
| | Are You Smarter than a 5th Grader | July--2012 |
| | Arkadian Warriors | Oct--2012 |

Arkanoid Live -

Star Wars Video Game Planets

Terrestrial Planets Appearing in Star Wars Video Games

In order of release. By LCM Mirei Seppen

1. Star Wars: The Empire Strikes Back (1982) - Outer Rim: Hoth
2. Star Wars (1983) - No Terrestrial Planets
3. Star Wars: Jedi Arena (1983) - No Terrestrial Planets
4. Star Wars: Return of the Jedi: Death Star Battle (1983) - No Terrestrial Planets
5. Star Wars: Return of the Jedi (1984) - Outer Rim: Forest Moon of Endor
6. Death Star Interceptor (1985) - No Terrestrial Planets
7. Star Wars: The Empire Strikes Back (1985) - Outer Rim: Hoth
8. Star Wars (1987) - Mid Rim: Iskalon; Outer Rim: Hoth (Called 'Tina'), Kessel, Tatooine, Yavin 4
9. Ewoks and the Dandelion Warriors (1987) - Outer Rim: Forest Moon of Endor
10. Star Wars Droids (1988) - Outer Rim: Aaron
11. Star Wars (1991) - Outer Rim: Tatooine, Yavin 4
12. Star Wars: Attack on the Death Star (1991) - No Terrestrial Planets
13. Star Wars: The Empire Strikes Back (1992) - Outer Rim: Bespin, Dagobah, Hoth
14. Super Star Wars 1 (1992) - Outer Rim: Tatooine, Yavin 4
15. Star Wars: X-Wing (1993) - No Terrestrial Planets
16. Star Wars Chess (1993) - No Terrestrial Planets
17. Star Wars Arcade (1993) - No Terrestrial Planets
18. Star Wars: Rebel Assault 1 (1993) - Outer Rim: Hoth, Kolaador, Tatooine, Yavin 4
19. Super Star Wars 2: The Empire Strikes Back (1993) - Outer Rim: Bespin, Dagobah, Hoth
20. Super Star Wars 3: Return of the Jedi (1994) - Outer Rim: Forest Moon of Endor, Tatooine
21. Star Wars: TIE Fighter (1994) - No Terrestrial Planets
22. Star Wars: Dark Forces 1 (1995) - Core: Cal-Seti, Coruscant; Hutt Space: Nar Shaddaa; Mid Rim: Anteevy, Danuta, Gromas 16, Talay; Outer Rim: Anoat, Fest; Wildspace: Orinackra
23. -

01 2014 FIFA World Cup Brazil 02 50 Cent : Blood on the Sand 03 AC/DC

01. 2014 FIFA World Cup Brazil
02. 50 Cent: Blood on the Sand
03. AC/DC Live: Rock Band Track Pack
04. Ace Combat: Assault Horizon
05. Ace Combat 6: Fires of Liberation
06. Adventure Time: Explore the Dungeon Because I DON'T KNOW!
07. Adventure Time: The Secret of the Nameless Kingdom
08. AFL Live 2
09. Afro Samurai
10. Air Conflicts: Vietnam
11. Air Conflicts Pacific Carriers
12. Akai Katana
13. Alan Wake
14. Alan Wake - Bonus Disk
15. Alan Wake's American Nightmare
16. Alice: Madness Returns
17. Alien: Isolation
18. Alien Breed Trilogy
19. Aliens: Colonial Marines
20. Alone In The Dark
21. Alpha Protocol
22. Amped 3
23. Anarchy Reigns
24. Angry Bird Star Wars
25. Angry Bird Trilogy
26. Arcania: The Complete Tale
27. Armored Core Verdict Day
28. Army Of Two - The 40th Day
29. Army of Two - The Devils Cartel
30. Assassin's Creed 2
31. Assassin's Creed
32. Assassin's Creed - Rogue
33. Assassin's Creed Brotherhood
34. Assassin's Creed III
35. Assassin's Creed IV Black Flag
36. Assassin's Creed La Hermandad
37. Asterix at the Olympic Games
38. Asuras Wrath
39. Autobahn Polizei
40. Backbreaker
41. Backyard Sports Rookie Rush
42. Baja – Edge of Control
43. Bakugan Battle Brawlers
44. Band Hero
45. BandFuse: Rock Legends
46. Banjo Kazooie Nuts and Bolts
47. Bass Pro Shop The Strike
48. Batman Arkham Asylum Goty Edition
49. Batman Arkham City Game Of The Year Edition
50. Batman Arkham Origins Blackgate Deluxe Edition
51. Battle Academy
52. Battle Fantasía
53. Battle vs Cheese
54. Battlefield 2 - Modern Combat
55. Battlefield 3
56. Battlefield 4
57. Battlefield Bad Company
58. Battlefield Bad -

The Star Wars Timeline Gold: Legends Clone Wars Supplement 1

THE STAR WARS TIMELINE GOLD

The Old Republic. The Galactic Empire. The New Republic. What might have been. Every legend has its historian.

By Nathan P. Butler - Gold Release Number 56 – 85th SWT Release - 08/11/18

starwarsfanworks.com/timeline
facebook.com/swtimelinegold

LEGENDS CLONE WARS SUPPLEMENT

“The chronology of the Clone Wars is confusing.” --Luke Skywalker

Dedicated to all of the fellow Star Wars fans who remain frustrated with the lack of clarity in how the pre-2008 and post-2008 versions of the Clone Wars in the Star Wars: Legends continuity are meant to fit together, if at all

-Fellow Star Wars fans-

The document you are reading was born out of necessity. After the release of Attack of the Clones in 2002, Lucasfilm’s various fictional licensees, including Del Rey, LucasArts, Dark Horse Comics, and others, produced a stunningly detailed three-year saga of the Clone Wars that brought us directly up to the opening of Revenge of the Sith in theaters in 2005. It became one of the most well-orchestrated periods in all of Star Wars: Legends publishing.

2008 changed all of that, then 2014 shifted things yet again. The Clone Wars materials that were produced from 2002 – 2008 were part of the “Official Continuity” (now Legends Continuity) of C-Canon that was meant to expand upon the G-Canon films. When The Clone Wars launched as a multimedia project in 2008, centered around a new film and television series with the direct involvement of George Lucas, the era of the Clone Wars was thrown into disarray by a new level of canonicity, T-Canon, and numerous new assertions.

Kinect: Disneyland Adventures Launching November 15, 2011

Add concept art to fill back © Disney. © Disney / Pixar. Peter Pan from Walt Disney’s masterpiece “Peter Pan.”

REVIEWER’S QUICK GUIDE

PORTUGAL

Use the Force with “Kinect Star Wars”

Starting April 3, “Kinect Star Wars™” brings the Star Wars universe to life in an entirely new way. Harnessing the controller-free power of Kinect for Xbox 360, “Kinect Star Wars” lets fans physically train their Jedi techniques, take the power of the Force into their own hands, pilot the iconic ships and vehicles, rampage as a ferocious Rancor, and even dance with famous Star Wars characters.

Details

- Publisher: LucasArts (U.S. and Canada) and Microsoft Studios (international)
- Developer: Terminal Reality, Good Science and Microsoft Studios
- Rating: PEGI 12
- Price: 49.99 euros

Review Embargo

Reviews are under embargo until Tuesday, April 3 at 07:01, for both print and online coverage. By accepting a final copy of the game before its release on April 3, you agree not to publish reviews of the game online or in print, or to share them on social media, until that date and time.

ENTER THE STAR WARS GALAXY

True to the Star Wars saga we all know and revere, “Kinect Star Wars” brings the “galaxy far, far away” to life with stunning graphics and the iconic characters, vehicles, ships, droids and much more. Share the Force with friends through co-op, competitive and duel modes. Easy drop-in, drop-out play lets a second player join the Jedi action immediately.

Embodiment. Games and Culture, 3 (3-4), Pp

This is a repository copy of Gaming and the limits of digital embodiment.

White Rose Research Online URL for this paper: https://eprints.whiterose.ac.uk/130590/

Version: Accepted Version

Article: Farrow, Robert and Iacovides, Ioanna orcid.org/0000-0001-9674-8440 (2014) Gaming and the limits of digital embodiment. Philosophy and Technology. pp. 221-233. ISSN 2210-5441

https://doi.org/10.1007/s13347-013-0111-1

Reuse

Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item.

Takedown

If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request.

[email protected] https://eprints.whiterose.ac.uk/

Gaming and the limits of digital embodiment

Introduction

This paper discusses the nature and limits of player embodiment within digital games. We identify a convergence between everyday bodily actions and activity within digital environments, and a trend towards incorporating natural forms of movement into gaming worlds through mimetic control devices. We examine recent literature in the area of immersion and presence in digital gaming; Calleja’s (2011) recent Player Involvement Model (PIM) of gaming is discussed and found to rely on a problematic notion of embodiment as ‘incorporation’.

Introduction Disney's Purchase of Lucasfilm in 2012 Heralded a New

To Disney Infinity and Beyond - Star Wars Videogames Before and After the LucasArts Acquisition

Douglas Brown

Introduction

Disney’s purchase of Lucasfilm in 2012 heralded a new direction for Star Wars, firmly aimed towards Disney's new character-led diversification strategy, which CEO Bob Iger had begun with the purchase of Pixar in 2006¹. It also had some unexpected fallout for the world of videogames both within and beyond the franchise. The absorption of Star Wars into Disney entailed a new approach towards transmedial content creation. This involved the integration of its characters into the macro-transmedial world of the broader Disney ‘meta-franchise’, already expanded beyond the company’s own works by the addition of Marvel Studios. This new approach became apparent, as is explored throughout this volume, through the integration of Star Wars intellectual property (IP) into Disney-branded content, from movies and TV shows to theme parks, advertisements and merchandising.

For video games in particular, the Disney buyout covered more than the Star Wars franchise alone. The Lucasfilm portfolio also includes Indiana Jones, the special effects house Industrial Light and Magic (ILM) and – most importantly for the purposes of this chapter – LucasArts, a well-respected game development and publishing company with a string of classic videogame IPs attached. Trepidation from gamers over how Disney would deploy and monetise the new IP came tempered with hope that classic game series both within the franchise’s universe and outside of it, including long-dormant IP such as classic adventure games Maniac Mansion² and Day of The Tentacle³, could perhaps be revived or rebooted.

Creando Universos : La Narrativa Transmedia

After all, a lot goes into creating a universe and everything that goes with it, and it seems a shame to use it only once. Terry Brooks

The universe does not have just a single history, but every possible history. Stephen Hawking

Creando universos: la narrativa transmedia (Creating Universes: Transmedia Storytelling)

Final degree project, Degree in Communication, Universitat Oberta de Catalunya

Student: Joel Pérez Pérez

Project supervisor: Fidel del Castillo Díaz

Academic year 2015/16

Summary

Media convergence has changed both how media are produced and how they are consumed. The multiplication of channels has given rise to a new narrative model: transmedia storytelling. This new narrative uses every available channel to deliver distinct parts of its story to consumers, who must then connect them. This process places new demands on consumers and depends on the active participation of knowledge communities to unravel the mysteries posed. This thesis addresses both the related elements and the process of implementing a transmedia narrative itself, in order to detail which characteristics make a story capture its followers so effectively.

Keywords: media convergence, participatory culture, collective intelligence, transmedia storytelling, audiovisual scriptwriting, fan culture, interactivity.

Abstract

Media convergence has meant a change in the way we consume and produce the media. The multiplication of channels has led to the birth of a new narrative model: transmedia storytelling. This new narrative uses all available channels to deliver different parts of the story, so consumers have to interrelate them.

Simulating Behavior Trees a Behavior Tree/Planner Hybrid Approach

8 Simulating Behavior Trees: A Behavior Tree/Planner Hybrid Approach

Daniel Hilburn

- 8.1 Introduction
- 8.2 The Jedi AI in Kinect Star Wars™
- 8.3 Now Let’s Throw in a Few Monkey Wrenches …
- 8.4 Conclusion

8.1 Introduction

Game AI must handle a high degree of complexity. Designers often represent AI with complex state diagrams that must be implemented and thoroughly tested. AI agents exist in a complex game world and must efficiently query this world and construct models of what is known about it. Animation states must be managed correctly for the AI to interact with the world properly. AI must simultaneously provide a range of control from fully autonomous to fully designer-driven.

At the same time, game AI must be flexible. Designers change AI structure quickly and often. AI implementations depend heavily on other AI and game system implementations, which change often as well. Any assumptions made about these external systems can easily become invalid, often with little warning. Game AI must also interact appropriately with a range of player styles, allowing many players to have fun playing your game.

There are many techniques available to the AI programmer to help solve these issues. As with any technique, all have their strengths and weaknesses. In the next couple of sections, we’ll give a brief overview of two of these techniques: behavior trees and planners. We’ll briefly outline which problems they attempt to solve and the ones with which they struggle. Then, we’ll discuss a hybrid implementation which draws on the strengths of both approaches.

Japan Import

Stalker Call Of Pripyat SKU-PAS1067400 Forza 3 - Ultimate Platinum Hits -Xbox 360 NBA Live 07 [Japan Import] Jack Of All Games 856959001342 Pc King Solomons Trivia Challenge Mbx Checkers 3D Karaoke Revolution Glee: Volume 3 Bundle -Xbox 360 Battlefield: Bad Company - Playstation 3 Wii Rock Band Bundle: Guitar, Drums & Microphone PS3 Mortal Kombat Tournament Edition Fight Stick SEGA Ryu ga Gotoku OF THE END for PS3 [Japan Import] Foreign Legion: Buckets of Blood I Confessed to a Childhood Friend of Twins. ~ ~ Seppaku School Funny People Dream Pinball 3D Midnight Club: Los Angeles [Japan Import] Fragile: Sayonara Tsuki no Haikyo [Japan Import] Bowling Champs The Tomb Raider Trilogy (PS3) (UK IMPORT) Disney/Pixar Cars Toon: Mater's Tall Tales [Nintendo Wii] Hataraku Hit [Japan Import] Navy SEAL (PC - 3.5" diskette) Mystery Masters: Wicked Worlds Collection Dynasty Warriors 8 - Xbox 360 Storybook Workshop - Nintendo Wii Learn with Pong Pong the Pig: The Human Body New - Battlefield 3 PC by Electronic Art - 19726 (japan import) Angry Birds Star Wars - Xbox 360 Viva Media No Limit Texas Hold'Em 3D Poker 2 (plus 2 games) Cards & Casino for Windows for Adults X-Plane 10 Flight Simulator - Windows and Mac London 2012 Olympics - Xbox 360 Fisherman's Paradise II (Jewel Case) John Daly's ProStroke Golf - PC Dungeons & Dragons: Chronicles of Mystara Trapped Dead Memories Off 6: T-Wave [Japan Import] Anno 2070 Complete Edition Microsoft Flight Simulator 2004: A Century of Flight - PC New Casual Arcade Crystal Bomb Runner Stop The Alien Hordes Search -

The Top 25 Most Visited Star Wars Video Game Planets by LT Mirei Seppen with an Examination of One Hundred and Twenty Seven Star

The Top 25 Most Visited Star Wars Video Game Planets by LT Mirei Seppen

With an examination of one hundred and twenty seven Star Wars video games I have sorted each by planet. This requires being able to visit the planet, so in space outside the planet or in the system doesn't count. Sorry TIE Fighter fans. Most of the results are what was expected, enjoy. =)

=== (Top 25)

- Tatooine - 63
- Coruscant - 42
- Hoth - 38 (+1)
- Forest Moon of Endor - 33
- Naboo - 28
- Bespin - 27
- Yavin 4 - 27
- Geonosis - 23
- Kashyyyk - 20
- Dagobah - 15
- Mustafar - 15
- Kamino - 14
- Sullust - 10
- Dantooine - 9
- Felucia - 9
- Takodana - 9
- Dathomir - 8
- Kessel - 8
- Utapau - 8
- Korriban - 7
- Nar Shaddaa - 7
- Corellia - 6
- Jakku - 6
- Ord Mantell - 6
- Ryloth - 6

=== (Planets Covered by Region)

- Colonies: -
- Core: Corellia, Coruscant
- Deep Core: -
- Expansion Region: -
- Hutt Space: Nar Shaddaa
- Inner Rim: Jakku
- Mid Rim: Naboo, Kashyyyk, Ord Mantell, Takodana
- Outer Rim: Bespin, Dagobah, Dantooine, Dathomir, Felucia, Forest Moon of Endor, Geonosis, Hoth, Kessel, Korriban, Mustafar, Ryloth, Sullust, Tatooine, Utapau, Yavin 4
- Unknown Regions: -
- Wildspace: Kamino

=== (Game Breakdown)

Tatooine - 63

- Angry Birds: Star Wars 1 (2012)
- Angry Birds: Star Wars 2 (2013)
- Disney Infinity 3.0 (2015)
- Kinect Star Wars (2012)
- Lego Star Wars 1: The Video Game (2005)
- Lego Star Wars 2: The Original Trilogy (2006)
- Lego Star Wars 3: The Clone Wars (2011)
- Lego Star Wars: The Complete Saga (2007)
- Lego Star Wars: The Yoda Chronicles (2013)
- Star Wars (1987)
- Star Wars (1991)
- Star Wars Commander (2014)
- Star Wars Episode 1: Jedi Power Battles