Automated Binary Software Attack Surface Reduction

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Browser Wars

Uppsala universitet, Inst. för informationsvetenskap. Browser Wars: Kampen om webbläsarmarknaden (The Battle for the Web Browser Market). Andreas Högström, Emil Pettersson. Course: Degree project. Level: C. Term: VT-10 (spring 2010). Date: 2010-06-07. Supervisor: Anneli Edman. "Anyone who slaps a 'this page is best viewed with Browser X' label on a Web page appears to be yearning for the bad old days, before the Web, when you had very little chance of reading a document written on another computer, another word processor, or another network" - Sir Timothy John Berners-Lee, founder of the World Wide Web Consortium, Technology Review, July 1996. Table of contents: Abstract; Summary; 1 Introduction; 1.1 Background; 1.2 Purpose; 1.3 Research questions; 1.4 Delimitations -

HTTP Cookie - Wikipedia, the Free Encyclopedia 14/05/2014

HTTP cookie. From Wikipedia, the free encyclopedia (14/05/2014). A cookie, also known as an HTTP cookie, web cookie, or browser cookie, is a small piece of data sent from a website and stored in a user's web browser while the user is browsing that website. Every time the user loads the website, the browser sends the cookie back to the server to notify the website of the user's previous activity.[1] Cookies were designed to be a reliable mechanism for websites to remember stateful information (such as items in a shopping cart) or to record the user's browsing activity (including clicking particular buttons, logging in, or recording which pages were visited by the user as far back as months or years ago). Although cookies cannot carry viruses, and cannot install malware on the host computer,[2] tracking cookies and especially third-party tracking cookies are commonly used as ways to compile long-term records of individuals' browsing histories, a potential privacy concern that prompted European[3] and U.S. -

Aquatic Microbial Ecology 75:117

AQUATIC MICROBIAL ECOLOGY (Aquat Microb Ecol), Vol. 75: 117–128, 2015. Published online June 4. doi: 10.3354/ame01752

Metagenomic assessment of viral diversity in Lake Matoaka, a temperate, eutrophic freshwater lake in southeastern Virginia, USA

Jasmin C. Green1, Faraz Rahman1, Matthew A. Saxton2,3, Kurt E. Williamson1,2,*

1 Department of Biology, College of William & Mary, Williamsburg, Virginia 23185, USA
2 Environmental Science and Policy Program, College of William & Mary, Williamsburg, Virginia 23185, USA
3 Present address: Department of Marine Sciences, University of Georgia, Athens, Georgia 30602, USA

ABSTRACT: Little is known about the composition and diversity of temperate freshwater viral communities. This study presents a metagenomic analysis of viral community composition, taxonomic and functional diversity of temperate, eutrophic Lake Matoaka in southeastern Virginia (USA). Three sampling sites were chosen to represent differences in anthropogenic impacts: the Crim Dell Creek mouth (impacted), the Pogonia Creek mouth (less impacted) and the main body of the lake (mixed). Sequences belonging to tailed bacteriophages were the most abundant at all 3 sites, with Podoviridae predominating. The main lake body harbored the highest virus genotype richness and included cyanophage and eukaryotic algal virus sequences not found at the other 2 sites, while the impacted Crim Dell Creek mouth showed the lowest richness. Cross-contig comparisons indicated that similar virus genotypes were found at all 3 sites, but at different rank-abundances. Hierarchical cluster analysis of multiple viral metagenomes indicated high genetic similarity between viral communities of related environments, with freshwater, marine, hypersaline, and eukaryote-associated environments forming into clear groups despite large geographic distances between sampling locations within each environment type. -

Discontinued Browsers List

Look back into history at the fallen windows of yesteryear. Welcome to the dead pool. We include both officially discontinued browsers and those that have not been updated. If you are interested in browsers that still work, try our big browser list. All links open in new windows.

1. Abaco (discontinued) http://lab-fgb.com/abaco
2. Acoo (last updated 2009) http://www.acoobrowser.com
3. Amaya (discontinued 2013) https://www.w3.org/Amaya
4. AOL Explorer (discontinued 2006) https://www.aol.com
5. AMosaic (discontinued in 2006) No website
6. Arachne (last updated 2013) http://www.glennmcc.org
7. Arena (discontinued in 1998) https://www.w3.org/Arena
8. Ariadna (discontinued in 1998) http://www.ariadna.ru
9. Arora (discontinued in 2011) https://github.com/Arora/arora
10. AWeb (last updated 2001) http://www.amitrix.com/aweb.html
11. Baidu (discontinued 2019) https://liulanqi.baidu.com
12. Beamrise (last updated 2014) http://www.sien.com
13. Beonex Communicator (discontinued in 2004) https://www.beonex.com
14. BlackHawk (last updated 2015) http://www.netgate.sk/blackhawk
15. Bolt (discontinued 2011) No website
16. Browse3d (last updated 2005) http://www.browse3d.com
17. Browzar (last updated 2013) http://www.browzar.com
18. Camino (discontinued in 2013) http://caminobrowser.org
19. Classilla (last updated 2014) https://www.floodgap.com/software/classilla
20. CometBird (discontinued 2015) http://www.cometbird.com
21. Conkeror (last updated 2016) http://conkeror.org
22. Crazy Browser (last updated 2013) No website
23. Deepnet Explorer (discontinued in 2006) http://www.deepnetexplorer.com
24. Enigma (last updated 2012) No website
25. -

Les Menus Indépendants

Standalone menus. Being a big fan of minimalism, and therefore not really drawn to full desktop environments (Gnome/KDE/E17), I often run into the problem of the menu. Keyboard shortcuts are perfect, of course, but what can I say, I like having a menu… Here are some of the standalone menus I use, bound to a keyboard shortcut, attached to a launcher in a panel/dock, or embedded in a notification area. Contents: standalone menus; compiz-deskmenu (installation: Debian package, from source; configuration); MyGTKMenu (installation; configuration) -

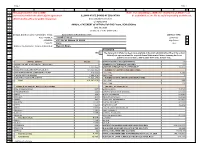

2020 Annual Statement of Affairs

ILLINOIS STATE BOARD OF EDUCATION, School Business Services, (217)785-8779

ANNUAL STATEMENT OF AFFAIRS FOR THE FISCAL YEAR ENDING June 30, 2020 (Section 10-17 of the School Code)

This page must be sent to ISBE and retained within the district/joint agreement administrative office for public inspection. Note: For submitting to ISBE, the "Statement of Affairs" can be submitted as one file to avoid separating worksheets.

SCHOOL DISTRICT/JOINT AGREEMENT NAME: Aurora East School District 131
RCDT NUMBER: 31-045-1310-22
ADDRESS: 417 5th St, Aurora, IL 60505
COUNTY: Kane
DISTRICT TYPE (Elementary / High School / Unit): Unit X
NAME OF NEWSPAPER WHERE PUBLISHED: Beacon News

ASSURANCE: YES x. The statement of affairs has been made available in the main administrative office of the school district/joint agreement and the required Annual Statement of Affairs Summary has been published in accordance with Section 10-17 of the School Code.

CAPITAL ASSETS (VALUE)
WORKS OF ART & HISTORICAL TREASURES: (no value listed)
LAND: 2,771,855
BUILDING & BUILDING IMPROVEMENTS: 191,099,506
SITE IMPROVEMENTS & INFRASTRUCTURE: 813,544
CAPITALIZED EQUIPMENT: 1,553,276
CONSTRUCTION IN PROGRESS: 13,923,489
Total: 210,161,670

SIZE OF DISTRICT IN SQUARE MILES: 13
NUMBER OF ATTENDANCE CENTERS: 22
9 MONTH AVERAGE DAILY ATTENDANCE: 13,327
NUMBER OF CERTIFICATED EMPLOYEES: FULL-TIME 1,240; PART-TIME 1
NUMBER OF NON-CERTIFICATED EMPLOYEES: FULL-TIME 335; PART-TIME 113

NUMBER OF PUPILS ENROLLED PER GRADE; TAX RATE BY FUND -

Giant List of Web Browsers

The majority of the world uses a default or big tech browser, but there are many alternatives out there which may be a better choice. Take a look through our list and see if there is something you like the look of. All links open in new windows. Caveat emptor old friend, and happy surfing.

1. 32bit https://www.electrasoft.com/32bw.htm
2. 360 Security https://browser.360.cn/se/en.html
3. Avant http://www.avantbrowser.com
4. Avast/SafeZone https://www.avast.com/en-us/secure-browser
5. Basilisk https://www.basilisk-browser.org
6. Bento https://bentobrowser.com
7. Bitty http://www.bitty.com
8. Blisk https://blisk.io
9. Brave https://brave.com
10. BriskBard https://www.briskbard.com
11. Chrome https://www.google.com/chrome
12. Chromium https://www.chromium.org/Home
13. Citrio http://citrio.com
14. Cliqz https://cliqz.com
15. Cốc Cốc https://coccoc.com
16. Comodo IceDragon https://www.comodo.com/home/browsers-toolbars/icedragon-browser.php
17. Comodo Dragon https://www.comodo.com/home/browsers-toolbars/browser.php
18. Coowon http://coowon.com
19. Crusta https://sourceforge.net/projects/crustabrowser
20. Dillo https://www.dillo.org
21. Dolphin http://dolphin.com
22. Dooble https://textbrowser.github.io/dooble
23. Edge https://www.microsoft.com/en-us/windows/microsoft-edge
24. ELinks http://elinks.or.cz
25. Epic https://www.epicbrowser.com
26. Epiphany https://projects-old.gnome.org/epiphany
27. Falkon https://www.falkon.org
28. Firefox https://www.mozilla.org/en-US/firefox/new
29. -

VENDOR CO-OP 1St Choice Restaurant Equipment and Supply

| VENDOR | CO-OP |
| --- | --- |
| 1st Choice Restaurant Equipment and Supply LLC | BuyBoard |
| 1-800 Radiator and A/C of El Paso | ESC 19 |
| 1st Choice Restaurant Equipment & Supply, LLC | ESC 19 |
| 1st Class Solutions | TIPS |
| 22nd Century Technologies Inc. | Choice Partners |
| 2717 Group, LLC. | 1GPA |
| 2NDGEAR LLC | TIPS |
| 2ove1, LLC | ESC 19 |
| 2TAC Corporation | TIPS |
| 2Ten Coffee Roasters | ESC 19 |
| 3 C Technology LLC | TIPS |
| 308 Construction LLC | TIPS |
| 323 Link LLC | TIPS |
| 360 Degree Customer, Inc. | ESC 19 |
| 360 Solutions Group | TIPS |
| 360Civic | 1GPA |
| 3-C Technology | BuyBoard |
| 3N1 Office Products Inc | TIPS |
| 3P Learning | Choice Partners |
| 3P Learning | ESC 19 |
| 3Rivers Telecom Consulting Plenteous Consulting LLC | TIPS |
| 3Sixty Integrated.com | BuyBoard |
| 3W Consulting Group LLC | ESC 19 |
| 3W Consulting Group LLC | TIPS |
| 3W Consulting Group, LLC | ESC 19 |
| 4 Rivers Equipment | ESC 19 |
| 4 Rivers Equipment LLC | BuyBoard |
| 4imprint | BuyBoard |
| 4Imprint | Choice Partners |
| 4imprint, Inc. | ESC 20 |
| 4Kboards | TIPS |
| 4T Partnership LLc | TIPS |
| 4TellX LLC | ESC 19 |
| 5 L5E LLC | TIPS |
| 5-F Mechanical Group Inc. | BuyBoard |
| 5W Contracting LLC | TIPS |
| 5W Contracting LLC Assignee | TIPS |
| 806 Technologies, Inc. | Choice Partners |
| 9 to 5 Seating | Choice Partners |
| A & H Painting, Inc. | 1GPA |
| A & M Awards | ESC 19 |
| A 2 Z Educational Supplies | BuyBoard |
| A and E Seamless Raingutters Inc | TIPS |
| A and S Air Conditioning Assignee | TIPS |
| A Bargas and Associates LLC | TIPS |
| A King's Image | ESC 18 |
| A Lert Roof Systems | TIPS |
| A Lingua Franca LLC | ESC 19 |
| A Pass Educational Group LLC | TIPS |
| A Photo Identification | ESC 18 |
| A Photo Identification | ESC 20 |
| A Quality HVAC Air Conditioning & Heating | 1GPA |
| A to Z Books LLC | TIPS |
| A V Pro, Inc. | - |

MicrobeTrace: Retooling Molecular Epidemiology for Rapid Public Health Response

bioRxiv preprint doi: https://doi.org/10.1101/2020.07.22.216275; this version posted July 24, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. This article is a US Government work. It is not subject to copyright under 17 USC 105 and is also made available for use under a CC0 license.

Title: MicrobeTrace: Retooling Molecular Epidemiology for Rapid Public Health Response

Authors: Ellsworth M. Campbell1,*, Anthony Boyles2, Anupama Shankar1, Jay Kim2, Sergey Knyazev1,3,4, William M. Switzer1

Affiliations
1 Centers for Disease Control and Prevention, Atlanta, GA 30329
2 Northrop Grumman, Atlanta, GA 30345
3 Oak Ridge Institute for Science and Education, Oak Ridge, TN 37830
4 Department of Computer Science, Georgia State University, Atlanta, GA, 30303

Title character count (with spaces): 71
Abstract word count: 165 (of limit 150)
Manuscript word count, excluding figure captions: 4552 (of limit 5000)
Figure caption word count: 490
Conflicts of Interest and Source of Funding: None
* To whom correspondence should be addressed.
Authors contributed equally.

Abstract

Motivation: Outbreak investigations use data from interviews, healthcare providers, laboratories and surveillance systems. However, integrated use of data from multiple sources requires a patchwork of software that presents challenges in usability, interoperability, confidentiality, and cost. Rapid integration, visualization and analysis of data from multiple sources can guide effective public health interventions.

Results: We developed MicrobeTrace to facilitate rapid public health responses by overcoming barriers to data integration and exploration in molecular epidemiology. -

Webkit and Blink: Open Development Powering the HTML5 Revolution

WebKit and Blink: Open Development Powering the HTML5 Revolution. Juan J. Sánchez, LinuxCon 2013, New Orleans.

Myself, Igalia and WebKit: Co-founder, member of the WebKit/Blink/Browsers team. Igalia is an open source consultancy founded in 2001. Igalia is a Top 5 contributor to upstream WebKit/Blink. Working with many industry actors: tablets, phones, smart TV, set-top boxes, IVI and home automation.

Outline: The WebKit technology: goals, features, architecture, code structure, ports, webkit2, ongoing work. The WebKit community: contributors, committers, reviewers, tools, events. How to contribute to WebKit: bugfixing, features, new ports. Blink: history, motivations for the fork, differences, status and impact in the WebKit community.

WebKit: The technology

The WebKit project: Web rendering engine (HTML, JavaScript, CSS...). The engine is the product. Started as a fork of KHTML and KJS in 2001. Open Source since 2005. Among other things, it's useful for: web browsers; using web technologies for UI development.

Goals of the project: Web Content Engine: HTML, CSS, JavaScript, DOM. Open Source: BSD-style and LGPL licenses. Compatibility: regression testing. Standards Compliance. Stability. Performance. Security. Portability: desktop, mobile, embedded... Usability. Hackability.

Goals of the project, NON-goals: "It's an engine, not a browser". "It's an engineering project not a science project". "It's not a bundle of maximally general and reusable code". "It's not the solution to every problem". http://www.webkit.org/projects/goals.html -

Hardware Architectures for Secure, Reliable, and Energy-Efficient Real-Time Systems

HARDWARE ARCHITECTURES FOR SECURE, RELIABLE, AND ENERGY-EFFICIENT REAL-TIME SYSTEMS. A Dissertation Presented to the Faculty of the Graduate School of Cornell University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Daniel Lo, August 2015. © 2015 Daniel Lo. ALL RIGHTS RESERVED.

HARDWARE ARCHITECTURES FOR SECURE, RELIABLE, AND ENERGY-EFFICIENT REAL-TIME SYSTEMS. Daniel Lo, Ph.D. Cornell University 2015.

The last decade has seen an increased ubiquity of computers with the widespread adoption of smartphones and tablets and the continued spread of embedded cyber-physical systems. With this integration into our environments, it has become important to consider the real-world interactions of these computers rather than simply treating them as abstract computing machines. For example, cyber-physical systems typically have real-time constraints in order to ensure safe and correct physical interactions. Similarly, even mobile and desktop systems, which are not traditionally considered real-time systems, are inherently time-sensitive because of their interaction with users. Traditional techniques proposed for improving hardware architectures are not designed with these real-time constraints in mind. In this thesis, we explore some of the challenges and opportunities in hardware design for real-time systems. Specifically, we study recent techniques for improving security, reliability, and energy-efficiency of computer systems.

Run-time monitoring has been shown to be a promising technique for improving system security and reliability. Applying run-time monitoring to real-time systems introduces challenges due to the performance impact of monitoring. To address this, we first developed a technique for estimating the worst-case execution time impact of run-time monitoring, enabling it to be applied within existing real-time system design methodologies. -

Bijou Browser

Reviews: Firefox for Mobile (Fennec). Mozilla in the palm of your hand. BIJOU BROWSER. Firefox goes mobile – a "desktop" browser in the palm of your hand. BY NATHAN WILLIS. (Image credit: Sirer, Fotolia.com)

With Firefox, Mozilla has maintained dominance in the open source web browser market for years – on the desktop. But, as phones and other pocket-sized devices increase in power, extending that reach poses a new challenge. Mozilla competes against closed source, third-party browsers like Opera Mini and platform-specific, preinstalled browsers like Maemo's MicroB.

[…]pect to be able to access the same sites on the go as they do at home or at work, and they expect them to work the same way. Other mobile browsers invariably cut corners on the web experience by compressing pages into images; turning off JavaScript; omitting tabs, video, and audio playback; and so on. In some cases, this results in a visually identical static page, but increasingly, it means […]

[…] bookmarks, form data, saved passwords, history, and other information. Finally, Mozilla's interest in promoting the open web extends beyond the rendered page itself, so Fennec had to support the same plugins and extensibility that make Firefox a popular platform on PCs. If all this strikes you as a tall order, it is. To simplify development, the team decided to target Nokia's Maemo mobile platform first; Maemo is Linux-based and arguably the most desktop-Linux-like of any of the Linux mobile phone stacks. It uses standard libraries like GStreamer, D-Bus, Qt, and others.