Architecture for a Multilingual Wikipedia WORKING PAPER

Recommended publications

Improving Wikimedia Projects Content Through Collaborations Waray Wikipedia Experience, 2014 - 2017

Improving Wikimedia Projects Content through Collaborations: Waray Wikipedia Experience, 2014 - 2017. Harvey Fiji • Michael Glen U. Ong • Jojit F. Ballesteros • Bel B. Ballesteros. ESEAP Conference 2018 • 5 – 6 May 2018 • Bali, Indonesia. On 8 November 2013, Typhoon Haiyan devastated the Eastern Visayas region of the Philippines when it made landfall in Guiuan, Eastern Samar and Tolosa, Leyte. The typhoon affected about 16 million individuals in the Philippines, and Tacloban City in Leyte was one of the worst affected areas. [1] Eastern Visayas, specifically the provinces of Biliran, Leyte, Northern Samar, Samar and Eastern Samar, is home to the Waray speakers in the Philippines. [3] [2] Outline of the Presentation: I. Background of Waray Wikipedia II. Collaborations made by Sinirangan Bisaya Wikimedia Community III. Problems encountered IV. Lessons learned V. Future plans References Photo Credits. I. Background of Waray Wikipedia (https://war.wikipedia.org): Proposed on or about June 23, 2005 and created on or about September 24, 2005. Deployed lsjbot from Feb 2013 to Nov 2015, creating 1,143,071 Waray articles about flora and fauna. As of 24 April 2018, it has a total of 1,262,945 articles, created by lsjbot (90.5%) and by humans (9.5%). As of 31 March 2018, it has 401 views per hour. Sinirangan Bisaya (Eastern Visayas) Wikimedia Community is the (offline) community that continuously improves Waray Wikipedia and related Wikimedia projects. II. Collaborations made by Sinirangan Bisaya Wikimedia Community: A. Collaborations with private* and national government** organizations: introductory letter; series of meetings and communications. B.

Włodzimierz Lewoniewski Metoda Porównywania I Wzbogacania

Włodzimierz Lewoniewski. Metoda porównywania i wzbogacania informacji w wielojęzycznych serwisach wiki na podstawie analizy ich jakości (The method of comparing and enriching information in multilingual wikis based on the analysis of their quality). Doctoral dissertation. Supervisor: Prof. dr hab. Witold Abramowicz. Auxiliary supervisor: dr Krzysztof Węcel. Poznań 2018.
Contents:
1 Introduction: 1.1 Motivation; 1.2 Research objective and thesis; 1.3 Information sources and research methods; 1.4 Structure of the dissertation.
2 Data and information quality: 2.1 Introduction; 2.2 Data quality; 2.3 Information quality; 2.4 Summary.
3 Wiki services and semantic knowledge bases: 3.1 Introduction; 3.2 Wiki services; 3.3 Wikipedia as an example of a wiki service; 3.4 Infoboxes; 3.5 DBpedia; 3.6 Summary.
4 Methods for determining the quality of Wikipedia articles: 4.1 Introduction; 4.2 Quality dimensions of wiki services; 4.3 Quality problems of Wikipedia; 4.4

An Analysis of Contributions to Wikipedia from Tor

Are anonymity-seekers just like everybody else? An analysis of contributions to Wikipedia from Tor. Chau Tran (Department of Computer Science & Engineering, New York University, New York, USA; [email protected]), Kaylea Champion (Department of Communication, University of Washington, Seattle, USA; [email protected]), Andrea Forte (College of Computing & Informatics, Drexel University, Philadelphia, USA; [email protected]), Benjamin Mako Hill (Department of Communication, University of Washington, Seattle, USA; [email protected]), Rachel Greenstadt (Department of Computer Science & Engineering, New York University, New York, USA; [email protected]). Abstract: User-generated content sites routinely block contributions from users of privacy-enhancing proxies like Tor because of a perception that proxies are a source of vandalism, spam, and abuse. Although these blocks might be effective, collateral damage in the form of unrealized valuable contributions from anonymity seekers is invisible. One of the largest and most important user-generated content sites, Wikipedia, has attempted to block contributions from Tor users since as early as 2005. We demonstrate that these blocks have been imperfect and that thousands of attempts to edit on Wikipedia through Tor have been successful. We draw upon several data sources and analytical techniques to measure and describe the history of Tor editing on Wikipedia over time and to compare contributions from Tor users to those from other groups of Wikipedia users. Our analysis suggests that although Tor users who slip through Wikipedia’s ban contribute content that is more likely to be reverted and to revert others, their contributions are otherwise similar in quality to those from other unregistered participants and to the initial contributions of registered users. Fig. 1. Screenshot of the page a user is shown when they attempt to edit the Wikipedia article on “Privacy” while using Tor.

Language-Agnostic Relation Extraction from Abstracts in Wikis

Information. Article. Language-Agnostic Relation Extraction from Abstracts in Wikis. Nicolas Heist, Sven Hertling and Heiko Paulheim *. Data and Web Science Group, University of Mannheim, Mannheim 68131, Germany; [email protected] (N.H.); [email protected] (S.H.) * Correspondence: [email protected] Received: 5 February 2018; Accepted: 28 March 2018; Published: 29 March 2018. Abstract: Large-scale knowledge graphs, such as DBpedia, Wikidata, or YAGO, can be enhanced by relation extraction from text, using the data in the knowledge graph as training data, i.e., using distant supervision. While most existing approaches use language-specific methods (usually for English), we present a language-agnostic approach that exploits background knowledge from the graph instead of language-specific techniques and builds machine learning models only from language-independent features. We demonstrate the extraction of relations from Wikipedia abstracts, using the twelve largest language editions of Wikipedia. From those, we can extract 1.6 M new relations in DBpedia at a level of precision of 95%, using a RandomForest classifier trained only on language-independent features. We furthermore investigate the similarity of models for different languages and show an exemplary geographical breakdown of the information extracted. In a second series of experiments, we show how the approach can be transferred to DBkWik, a knowledge graph extracted from thousands of Wikis. We discuss the challenges and first results of extracting relations from a larger set of Wikis, using a less formalized knowledge graph. Keywords: relation extraction; knowledge graphs; Wikipedia; DBpedia; DBkWik; Wiki farms. 1. Introduction. Large-scale knowledge graphs, such as DBpedia [1], Freebase [2], Wikidata [3], or YAGO [4], are usually built using heuristic extraction methods, by exploiting crowd-sourcing processes, or both [5].

Detecting Undisclosed Paid Editing in Wikipedia

Detecting Undisclosed Paid Editing in Wikipedia. Nikesh Joshi, Francesca Spezzano, Mayson Green, and Elijah Hill. Computer Science Department, Boise State University, Boise, Idaho, USA. [email protected] {nikeshjoshi,maysongreen,elijahhill}@u.boisestate.edu ABSTRACT: Wikipedia, the free and open-collaboration based online encyclopedia, has millions of pages that are maintained by thousands of volunteer editors. As per Wikipedia’s fundamental principles, pages on Wikipedia are written with a neutral point of view and maintained by volunteer editors for free with well-defined guidelines in order to avoid or disclose any conflict of interest. However, there have been several known incidents where editors intentionally violate such guidelines in order to get paid (or even extort money) for maintaining promotional spam articles without disclosing such. In this paper, we address for the first time the problem of identifying undisclosed paid articles in Wikipedia. We propose a machine learning-based framework using a set of features based on both 1 INTRODUCTION: Wikipedia is the free online encyclopedia based on the principle of open collaboration; for the people by the people. Anyone can add and edit almost any article or page. However, volunteers should follow a set of guidelines when editing Wikipedia. The purpose of Wikipedia is “to provide the public with articles that summarize accepted knowledge, written neutrally and sourced reliably” 1 and the encyclopedia should not be considered as a platform for advertising and self-promotion. Wikipedia’s guidelines strongly discourage any form of conflict-of-interest (COI) editing and require editors to disclose any COI contribution. Paid editing is a form of COI editing and refers to editing Wikipedia (in the majority of the cases for promotional purposes) in exchange for compensation.

12 Collaborating on the Sum of All Knowledge Across Languages

Wikipedia @ 20. 12 Collaborating on the Sum of All Knowledge Across Languages. Denny Vrandečić. Published on: Oct 15, 2020. Updated on: Nov 16, 2020. License: Creative Commons Attribution 4.0 International License (CC-BY 4.0). Wikipedia is available in almost three hundred languages, each with independently developed content and perspectives. By extending lessons learned from Wikipedia and Wikidata toward prose and structured content, more knowledge could be shared across languages and allow each edition to focus on their unique contributions and improve their comprehensiveness and currency. Every language edition of Wikipedia is written independently of every other language edition. A contributor may consult an existing article in another language edition when writing a new article, or they might even use the content translation tool to help with translating one article to another language, but there is nothing to ensure that articles in different language editions are aligned or kept consistent with each other. This is often regarded as a contribution to knowledge diversity since it allows every language edition to grow independently of all other language editions. So would creating a system that aligns the contents more closely with each other sacrifice that diversity? Differences Between Wikipedia Language Editions. Wikipedia is often described as a wonder of the modern age. There are more than fifty million articles in almost three hundred languages. The goal of allowing everyone to share in the sum of all knowledge is achieved, right? Not yet. The knowledge in Wikipedia is unevenly distributed.1 Let’s take a look at where the first twenty years of editing Wikipedia have taken us.

Wikimatrix: Mining 135M Parallel Sentences

Under review as a conference paper at ICLR 2020. WIKIMATRIX: MINING 135M PARALLEL SENTENCES IN 1620 LANGUAGE PAIRS FROM WIKIPEDIA. Anonymous authors. Paper under double-blind review. ABSTRACT: We present an approach based on multilingual sentence embeddings to automatically extract parallel sentences from the content of Wikipedia articles in 85 languages, including several dialects or low-resource languages. We do not limit the extraction process to alignments with English, but systematically consider all possible language pairs. In total, we are able to extract 135M parallel sentences for 1620 different language pairs, out of which only 34M are aligned with English. This corpus of parallel sentences is freely available.1 To get an indication on the quality of the extracted bitexts, we train neural MT baseline systems on the mined data only for 1886 language pairs, and evaluate them on the TED corpus, achieving strong BLEU scores for many language pairs. The WikiMatrix bitexts seem to be particularly interesting to train MT systems between distant languages without the need to pivot through English. 1 INTRODUCTION: Most of the current approaches in Natural Language Processing (NLP) are data-driven. The size of the resources used for training is often the primary concern, but the quality and a large variety of topics may be equally important. Monolingual texts are usually available in huge amounts for many topics and languages. However, multilingual resources, typically sentences in two languages which are mutual translations, are more limited, in particular when the two languages do not involve English. An important source of parallel texts are international organizations like the European Parliament (Koehn, 2005) or the United Nations (Ziemski et al., 2016).

Critical Point of View: a Wikipedia Reader

Critical Point of View: A Wikipedia Reader. Edited by Geert Lovink and Nathaniel Tkacz. INC Reader #7. Editors: Geert Lovink and Nathaniel Tkacz. Editorial Assistance: Ivy Roberts, Morgan Currie. Copy-Editing: Cielo Lutino. Design: Katja van Stiphout. Cover Image: Ayumi Higuchi. Printer: Ten Klei Groep, Amsterdam. Publisher: Institute of Network Cultures, Amsterdam 2011. ISBN: 978-90-78146-13-1. Contact: Institute of Network Cultures, phone: +3120 5951866, fax: +3120 5951840, email: [email protected], web: http://www.networkcultures.org. Order a copy of this book by sending an email to: [email protected] A pdf of this publication can be downloaded freely at: http://www.networkcultures.org/publications Join the Critical Point of View mailing list at: http://www.listcultures.org Supported by: The School for Communication and Design at the Amsterdam University of Applied Sciences (Hogeschool van Amsterdam DMCI), the Centre for Internet and Society (CIS) in Bangalore and the Kusuma Trust. Thanks to Johanna Niesyto (University of Siegen), Nishant Shah and Sunil Abraham (CIS Bangalore), Sabine Niederer and Margreet Riphagen (INC Amsterdam) for their valuable input and editorial support. Thanks to Foundation Democracy and Media, Mondriaan Foundation and the Public Library Amsterdam (Openbare Bibliotheek Amsterdam) for supporting the CPOV events in Bangalore, Amsterdam and Leipzig. (http://networkcultures.org/wpmu/cpov/) Special thanks to all the authors for their contributions and to Cielo Lutino, Morgan Currie and Ivy Roberts for their careful copy-editing.

The Future of the Past

THE FUTURE OF THE PAST: A CASE STUDY ON THE REPRESENTATION OF THE HOLOCAUST ON WIKIPEDIA 2002-2014. Rudolf den Hartogh. Master Thesis Global History and International Relations, Erasmus School of History, Culture and Communication (ESHCC), Erasmus University Rotterdam, July 2014. Supervisor: prof. dr. Kees Ribbens. Co-reader: dr. Robbert-Jan Adriaansen. Cover page images: 1. Wikipedia-logo_inverse.png (2007) of the Wikipedia user Nohat (concept by Wikipedian Paulussmagnus). Digital image. Retrieved from: http://commons.wikimedia.org (2-12-2013). 2. Holocaust-Victim.jpg (2013) Photographer unknown. Digital image. Retrieved from: http://www.myinterestingfacts.com/ (2-12-2013). This thesis is dedicated to the loving memory of my grandmother, Maagje den Hartogh-Bos, who sadly passed away before she was able to see this thesis completed. Abstract: Since its creation in 2001, Wikipedia, ‘the online free encyclopedia that anyone can edit’, has increasingly become a subject of debate among historians due to its radical departure from traditional history scholarship. The medium democratized the field of knowledge production to an extent that has not been experienced before and it was the incredible popularity of the medium that triggered historians to raise questions about the quality of the online reference work and implications for the historian’s craft. However, despite a vast body of research devoted to the digital encyclopedia, no consensus has yet been reached in these debates due to a general lack of empirical research.

ORES Scalable, Community-Driven, Auditable, Machine Learning-As-A-Service in This Presentation, We Lay out Ideas

ORES: Scalable, community-driven, auditable, machine learning-as-a-service. In this presentation, we lay out ideas... This is a conversation starter, not a conversation ender. We put forward our best ideas and thinking based on our partial perspectives. We look forward to hearing yours. Outline - Opportunities and fears of artificial intelligence - ORES: A break-away success - The proposal & asks. We face a significant challenge... There’s been a significant decline in the number of active contributors to the English-language Wikipedia. Contributors have fallen by 40% over the past eight years, to about 30,000. Hamstrung by our own success? “Research indicates that the problem may be rooted in Wikipedians’ complex bureaucracy and their often hard-line responses to newcomers’ mistakes, enabled by semi-automated tools that make deleting new changes easy.” - MIT Technology Review (December 1, 2015) It’s the revert-new-editors’-first-few-edits-and-alienate-them problem. Imagine an algorithm that can... ● Judge whether an edit was made in good faith, so that new editors don’t have their first contributions wiped out. ● Detect bad-faith edits and help fight vandalism. Machine learning and prediction have the power to support important work in open contribution projects like Wikipedia at massive scales. But it’s far from a no-brainer. Quite the opposite. On the bright side, machine learning has the potential to help our projects scale by reducing the workload of editors and enhancing the value of our content. (Image by Luiz Augusto Fornasier Milani under CC BY-SA 4.0, from Wikimedia Commons.) On the dark side, AIs have the potential to perpetuate biases and silence voices in novel and insidious ways.

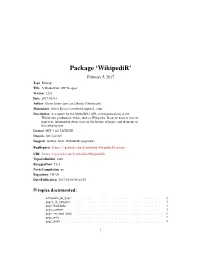

Package 'WikipediR'

Package ‘WikipediR’. February 5, 2017. Type: Package. Title: A MediaWiki API Wrapper. Version: 1.5.0. Date: 2017-02-04. Author: Oliver Keyes [aut, cre], Brock Tilbert [ctb]. Maintainer: Oliver Keyes <[email protected]>. Description: A wrapper for the MediaWiki API, aimed particularly at the Wikimedia 'production' wikis, such as Wikipedia. It can be used to retrieve page text, information about users or the history of pages, and elements of the category tree. License: MIT + file LICENSE. Imports: httr, jsonlite. Suggests: testthat, knitr, WikidataR, pageviews. BugReports: https://github.com/Ironholds/WikipediR/issues URL: https://github.com/Ironholds/WikipediR/ VignetteBuilder: knitr. RoxygenNote: 5.0.1. NeedsCompilation: no. Repository: CRAN. Date/Publication: 2017-02-05 08:44:55.
R topics documented: categories_in_page, pages_in_category, page_backlinks, page_content, page_external_links, page_info, page_links, query, random_page, recent_changes, revision_content, revision_diff, user_contributions, user_information, WikipediR.
categories_in_page: Retrieves categories associated with a page. Description: Retrieves categories associated with a page (or list of pages) on a MediaWiki instance. Usage: categories_in_page(language = NULL, project = NULL, domain = NULL, pages, properties = c("sortkey", "timestamp", "hidden"), limit = 50, show_hidden = FALSE, clean_response = FALSE, ...) Arguments: language: The language code of the project you wish to query, if appropriate. project: The project you wish to query ("wikiquote"), if appropriate. Should be provided in conjunction with language. domain: As an alternative to a language and project combination, you can also provide a domain ("rationalwiki.org") to the URL constructor, allowing for the querying of non-Wikimedia MediaWiki instances. pages: A vector of page titles, with or without spaces, that you want to retrieve categories for.
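To make the arguments above more concrete, here is a minimal Python sketch of the kind of MediaWiki Action API request that a category lookup corresponds to. It only builds the URL and sends nothing. The helper name build_categories_url, the /w/api.php path, and the mapping of show_hidden onto the clshow parameter are assumptions made for illustration; the excerpt does not show how WikipediR itself constructs its requests.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def build_categories_url(pages, language=None, project=None, domain=None,
                         properties=("sortkey", "timestamp", "hidden"),
                         limit=50, show_hidden=False):
    """Build (but do not send) a MediaWiki API URL listing page categories.

    Hypothetical helper: the name, defaults, and URL layout are illustrative.
    """
    if domain is None:
        if language is None or project is None:
            raise ValueError("provide either domain, or language and project")
        # Wikimedia production wikis follow <language>.<project>.org
        domain = f"{language}.{project}.org"
    params = {
        "action": "query",
        "format": "json",
        "prop": "categories",
        "titles": "|".join(pages),       # several titles are pipe-separated
        "clprop": "|".join(properties),  # extra fields per category
        "cllimit": limit,                # maximum categories returned
    }
    if not show_hidden:
        # Assumption: leave out hidden categories unless explicitly requested
        params["clshow"] = "!hidden"
    # Assumption: the API endpoint lives at /w/api.php on the target wiki
    return f"https://{domain}/w/api.php?" + urlencode(params)


if __name__ == "__main__":
    url = build_categories_url(["Main Page", "Privacy"],
                               language="en", project="wikipedia")
    # Decode the query string again to inspect the parameters
    print(parse_qs(urlsplit(url).query))
```

Passing domain instead of language and project would target a non-Wikimedia MediaWiki instance, in line with the domain argument described above, provided that instance exposes its API at the assumed path.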

Europe's Online Encyclopaedias

Europe's online encyclopaedias: Equal access to knowledge of general interest in a post-truth era? IN-DEPTH ANALYSIS. EPRS | European Parliamentary Research Service. Author: Naja Bentzen, Members' Research Service. PE 630.347 – December 2018. EN. The post-truth era – in which emotions seem to trump evidence, while trust in institutions, expertise and mainstream media is declining – is putting our information ecosystem under strain. At a time when information is increasingly being manipulated for ideological and economic purposes, public access to sources of trustworthy, general-interest knowledge – such as national online encyclopaedias – can help to boost our cognitive resilience. Basic, reliable background information about history, culture, society and politics is an essential part of our societies' complex knowledge ecosystem and an important tool for any citizen searching for knowledge, facts and figures. This paper has been drawn up by the Members' Research Service, within the Directorate-General for Parliamentary Research Services (EPRS) of the Secretariat of the European Parliament. The annexes to this paper are dedicated to individual countries and contain contributions from Ilze Eglite (coordinating information specialist), Evarts Anosovs, Marie-Laure Augere-Granier, Jan Avau, Michele Bigoni, Krisztina Binder, Kristina Grosek, Matilda Ekehorn, Roy Hirsh, Sorina Silvia Ionescu, Ana Martinez Juan, Ulla Jurviste, Vilma Karvelyte, Giorgios Klis, Maria Kollarova, Veronika Kunz, Elena Lazarou, Tarja Laaninen, Odile Maisse, Helmut Masson, Marketa Pape, Raquel Juncal Passos Rocha, Eric Pichon, Anja Radjenovic, Beata Rojek-Podgorska, Nicole Scholz, Anne Vernet and Dessislava Yougova. The graphics were produced by Giulio Sabbati. To contact the authors, please email: [email protected] LINGUISTIC VERSIONS: Original: EN. Translations: DE, FR. Original manuscript, in English, completed in January 2018 and updated in December 2018.