Uniquely Represented Data Structures for Computational Geometry

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Interval Trees Storing and Searching Intervals

Interval Trees Storing and Searching Intervals • Instead of points, suppose you want to keep track of axis-aligned segments: • Range queries: return all segments that have any part of them inside the rectangle. • Motivation: wiring diagrams, genes on genomes Simpler Problem: 1-d intervals • Segments with at least one endpoint in the rectangle can be found by building a 2d range tree on the 2n endpoints. - Keep pointer from each endpoint stored in tree to the segments - Mark segments as you output them, so that you don’t output contained segments twice. • Segments with no endpoints in range are the harder part. - Consider just horizontal segments - They must cross a vertical side of the region - Leads to subproblem: Given a vertical line, find segments that it crosses. - (y-coords become irrelevant for this subproblem) Interval Trees [Figure labels: query line, interval] Recursively build tree on interval set S as follows: Sort the 2n endpoints. Let xmid be the median point. Store intervals that cross xmid in node N, intervals that are completely to the left of xmid in Nleft, and intervals that are completely to the right of xmid in Nright. [Figure: another view of interval trees] Interval Trees, continued • Will be approximately balanced because by choosing the median, we split the set of end points up in half each time - Depth is O(log n) • Have to store xmid with each node • Uses O(n) storage - each interval stored once, plus - fewer than n nodes (each node contains at least one interval) • Can be built in O(n log n) time. • Can be searched in O(log n + k) time [k = # -
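The recursive construction in this excerpt (median endpoint, crossing intervals kept at the node, the rest split left and right) can be sketched as follows. This is a minimal illustration rather than the slides' own code: the names `Node`, `build`, and `stab` are hypothetical, intervals are assumed to be closed `(lo, hi)` pairs, and the sketch re-sorts endpoints at every level for brevity, so it does not meet the O(n log n) build bound quoted above.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Interval = Tuple[float, float]  # closed interval (lo, hi) with lo <= hi


@dataclass
class Node:
    xmid: float
    by_lo: List[Interval]  # intervals crossing xmid, ascending by left endpoint
    by_hi: List[Interval]  # the same intervals, descending by right endpoint
    left: Optional["Node"]
    right: Optional["Node"]


def build(intervals: List[Interval]) -> Optional[Node]:
    """Recursively build an interval tree on the given intervals."""
    if not intervals:
        return None
    endpoints = sorted(p for iv in intervals for p in iv)
    xmid = endpoints[len(endpoints) // 2]  # median of the 2n endpoints
    here = [iv for iv in intervals if iv[0] <= xmid <= iv[1]]
    lefts = [iv for iv in intervals if iv[1] < xmid]
    rights = [iv for iv in intervals if iv[0] > xmid]
    # xmid is an endpoint of some interval, so `here` is never empty
    # and the recursion always shrinks.
    return Node(
        xmid,
        sorted(here),
        sorted(here, key=lambda iv: -iv[1]),
        build(lefts),
        build(rights),
    )


def stab(node: Optional[Node], x: float) -> List[Interval]:
    """Return all stored intervals that contain the point x."""
    out: List[Interval] = []
    while node is not None:
        if x < node.xmid:
            # Every interval here reaches xmid > x, so only lo <= x matters.
            for iv in node.by_lo:
                if iv[0] > x:
                    break
                out.append(iv)
            node = node.left
        elif x > node.xmid:
            # Symmetric case: only hi >= x matters.
            for iv in node.by_hi:
                if iv[1] < x:
                    break
                out.append(iv)
            node = node.right
        else:
            out.extend(node.by_lo)  # x == xmid: every interval here contains x
            break
    return out


tree = build([(1, 5), (2, 3), (4, 8), (7, 9), (10, 12)])
print(sorted(stab(tree, 4.5)))
```

Keeping two sorted copies of the crossing intervals at each node is what lets a query stop scanning at the first miss, which is where the O(log n + k) search bound comes from.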

KP-Trie Algorithm for Update and Search Operations

The International Arab Journal of Information Technology, Vol. 13, No. 6, November 2016 722 KP-Trie Algorithm for Update and Search Operations Feras Hanandeh1, Izzat Alsmadi2, Mohammed Akour3, and Essam Al Daoud4 1Department of Computer Information Systems, Hashemite University, Jordan 2, 3Department of Computer Information Systems, Yarmouk University, Jordan 4Computer Science Department, Zarqa University, Jordan Abstract: Radix-Tree is a space optimized data structure that performs data compression by means of cluster nodes that share the same branch. Each node with only one child is merged with its child and is considered as space optimized. Nevertheless, it can’t be considered as speed optimized because the root is associated with the empty string. Moreover, values are not normally associated with every node; they are associated only with leaves and some inner nodes that correspond to keys of interest. Therefore, it takes time in moving bit by bit to reach the desired word. In this paper we propose the KP-Trie which is consider as speed and space optimized data structure that is resulted from both horizontal and vertical compression. Keywords: Trie, radix tree, data structure, branch factor, indexing, tree structure, information retrieval. Received January 14, 2015; accepted March 23, 2015; Published online December 23, 2015 1. Introduction Data structures are a specialized format for efficient organizing, retrieving, saving and storing data. It’s efficient with large amount of data such as: Large data bases. ... the exception of leaf nodes, nodes in the trie work merely as pointers to words. A trie, also called digital tree, is an ordered multi-way tree data structure that is useful to store an associative array where the keys are usually strings, -

Through the Concept of Data Structures, the Self- Balancing Binary Search Tree

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 Volume: 06 Issue: 01 | Jan 2019 www.irjet.net p-ISSN: 2395-0072 THROUGH THE CONCEPT OF DATA STRUCTURES, THE SELF-BALANCING BINARY SEARCH TREE Asniya Sadaf Syed Atiqur Rehman1, Avantika kishor Bakale2, Priyanka Patil3 1,2,3Department of Computer Science and Engineering, Prof Ram Meghe College of Engineering and Management, Badnera ABSTRACT - In the word of computing tree is a known hierarchical data structure which stores information naturally in the form of hierarchy style. Tree is a most powerful and advanced data structure. This paper is mainly focused on self-balancing binary search tree (BST) also known as height balanced BST. A BST is a type of data structure that adjust itself to provide the consistent level of node access. This paper covers the different types of BST their analysis, complexity and application. Key words: AVL Tree, Splay Tree, Skip List, Red Black Tree, BST. 1. INTRODUCTION Tree data structures are the similar like Maps and Sets, ... way of implementing balancing tree as binary tree they are originally discovered by Bayer and are nowadays extensively (diseased) in the standard literature or algorithm Maintaining the invariant of red black tree through Haskell types system using the nested data types this give small list noticeable overhead. Many gives small list overhead can be removed by the use of existential types [4]. 3.1. AVL TREE AVL Tree is best example of self-balancing search tree; this means that AVL Tree is also binary search tree which is also balancing tree. A binary tree is said to be balanced if different between the height of left and right. -

Lecture 04 Linear Structures Sort

Algorithmics (6EAP) MTAT.03.238 Linear structures, sorting, searching, etc Jaak Vilo 2018 Fall

Big-Oh notation classes

| Class | Informal | Intuition | Analogy |
|---|---|---|---|
| f(n) ∈ o(g(n)) | f is dominated by g | Strictly below | < |
| f(n) ∈ O(g(n)) | Bounded from above | Upper bound | ≤ |
| f(n) ∈ Θ(g(n)) | Bounded from above and below | “equal to” | = |
| f(n) ∈ Ω(g(n)) | Bounded from below | Lower bound | ≥ |
| f(n) ∈ ω(g(n)) | f dominates g | Strictly above | > |

Conclusions • Algorithm complexity deals with the behavior in the long-term – worst case -- typical – average case -- quite hard – best case -- bogus, cheating • In practice, long-term sometimes not necessary – E.g. for sorting 20 elements, you don’t need fancy algorithms… Linear, sequential, ordered, list … Memory, disk, tape etc – is an ordered sequentially addressed media. Physical ordered list ~ array • Memory /address/ – Garbage collection • Files (character/byte list/lines in text file,…) • Disk – Disk fragmentation Linear data structures: Arrays • Array • Hashed array tree • Bidirectional map • Heightmap • Bit array • Lookup table • Bit field • Matrix • Bitboard • Parallel array • Bitmap • Sorted array • Circular buffer • Sparse array • Control table • Sparse matrix • Image • Iliffe vector • Dynamic array • Variable-length array • Gap buffer Linear data structures: Lists • Doubly linked list • Array list • Xor linked list • Linked list • Zipper • Self-organizing list • Doubly connected edge list • Skip list • Unrolled linked list • Difference list • VList Lists: Array [Figure: array with slots indexed 0 to MAX_SIZE-1 and a size marker] L = int[MAX_SIZE] -

Augmentation: Range Trees (PDF)

Lecture 9 Augmentation 6.046J Spring 2015 Lecture 9: Augmentation This lecture covers augmentation of data structures, including • easy tree augmentation • order-statistics trees • finger search trees, and • range trees The main idea is to modify “off-the-shelf” common data structures to store (and update) additional information. Easy Tree Augmentation The goal here is to store x.f at each node x, which is a function of the node, namely f(subtree rooted at x). Suppose x.f can be computed (updated) in O(1) time from x, children and children.f. Then, modifying a set S of nodes costs O(# of ancestors of S) to update x.f, because we need to walk up the tree to the root. Two examples of O(lg n) updates are • AVL trees: after rotating two nodes, first update the new bottom node and then update the new top node • 2-3 trees: after splitting a node, update the two new nodes. • In both cases, then update up the tree. Order-Statistics Trees (from 6.006) The goal of order-statistics trees is to design an Abstract Data Type (ADT) interface that supports the following operations • insert(x), delete(x), successor(x), • rank(x): find x’s index in the sorted order, i.e., # of elements < x, • select(i): find the element with rank i. We can implement the above ADT using easy tree augmentation on AVL trees (or 2-3 trees) to store subtree size: f(subtree) = # of nodes in it. Then we also have x.size = 1 + Σ c.size for c in x.children. -
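The subtree-size augmentation in this excerpt can be illustrated with a short sketch. It is deliberately simplified: it uses a plain, unbalanced binary search tree instead of the AVL or 2-3 trees named above, so the O(lg n) bounds are not guaranteed, and the function names (`insert`, `rank`, `select`, `size`) and the 0-based rank convention are assumptions made for this example.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None
        self.size = 1  # number of nodes in the subtree rooted here


def size(node):
    return node.size if node is not None else 0


def insert(root, key):
    """Insert key into an unbalanced BST, refreshing sizes on the way back up."""
    if root is None:
        return Node(key)
    if key < root.key:
        root.left = insert(root.left, key)
    else:
        root.right = insert(root.right, key)
    # x.size = 1 + sum of the children's sizes
    root.size = 1 + size(root.left) + size(root.right)
    return root


def rank(root, key):
    """Number of stored keys strictly less than key."""
    count = 0
    while root is not None:
        if key <= root.key:
            root = root.left
        else:
            count += size(root.left) + 1  # the left subtree and this node are smaller
            root = root.right
    return count


def select(root, i):
    """Key with 0-based rank i in sorted order."""
    while root is not None:
        left_size = size(root.left)
        if i < left_size:
            root = root.left
        elif i == left_size:
            return root.key
        else:
            i -= left_size + 1
            root = root.right
    raise IndexError("rank out of range")


root = None
for k in [50, 30, 70, 20, 40, 60, 80]:
    root = insert(root, k)
print(rank(root, 60), select(root, 4))
```

Both queries touch only one root-to-leaf path, which is why the same code runs in O(lg n) once the underlying tree is kept balanced.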

Advanced Data Structures

Advanced Data Structures PETER BRASS City College of New York CAMBRIDGE UNIVERSITY PRESS Cambridge, New York, Melbourne, Madrid, Cape Town, Singapore, São Paulo Cambridge University Press The Edinburgh Building, Cambridge CB2 8RU, UK Published in the United States of America by Cambridge University Press, New York www.cambridge.org Information on this title: www.cambridge.org/9780521880374 © Peter Brass 2008 This publication is in copyright. Subject to statutory exception and to the provision of relevant collective licensing agreements, no reproduction of any part may take place without the written permission of Cambridge University Press. First published in print format 2008 ISBN-13 978-0-511-43685-7 eBook (EBL) ISBN-13 978-0-521-88037-4 hardback Cambridge University Press has no responsibility for the persistence or accuracy of urls for external or third-party internet websites referred to in this publication, and does not guarantee that any content on such websites is, or will remain, accurate or appropriate. Contents: Preface. 1 Elementary Structures: 1.1 Stack; 1.2 Queue; 1.3 Double-Ended Queue; 1.4 Dynamical Allocation of Nodes; 1.5 Shadow Copies of Array-Based Structures. 2 Search Trees: 2.1 Two Models of Search Trees; 2.2 General Properties and Transformations; 2.3 Height of a Search Tree; 2.4 Basic Find, Insert, and Delete; 2.5 Returning from Leaf to Root; 2.6 Dealing with Nonunique Keys; 2.7 Queries for the Keys in an Interval; 2.8 Building Optimal Search Trees; 2.9 Converting Trees into Lists; 2.10 -

Search Trees

Lecture III Page 1 “Trees are the earth’s endless effort to speak to the listening heaven.” – Rabindranath Tagore, Fireflies, 1928 Alice was walking beside the White Knight in Looking Glass Land. “You are sad.” the Knight said in an anxious tone: “let me sing you a song to comfort you.” “Is it very long?” Alice asked, for she had heard a good deal of poetry that day. “It’s long.” said the Knight, “but it’s very, very beautiful. Everybody that hears me sing it - either it brings tears to their eyes, or else -” “Or else what?” said Alice, for the Knight had made a sudden pause. “Or else it doesn’t, you know. The name of the song is called ‘Haddocks’ Eyes.’” “Oh, that’s the name of the song, is it?” Alice said, trying to feel interested. “No, you don’t understand,” the Knight said, looking a little vexed. “That’s what the name is called. The name really is ‘The Aged, Aged Man.’” “Then I ought to have said ‘That’s what the song is called’?” Alice corrected herself. “No you oughtn’t: that’s another thing. The song is called ‘Ways and Means’ but that’s only what it’s called, you know!” “Well, what is the song then?” said Alice, who was by this time completely bewildered. “I was coming to that,” the Knight said. “The song really is ‘A-sitting On a Gate’: and the tune’s my own invention.” So saying, he stopped his horse and let the reins fall on its neck: then slowly beating time with one hand, and with a faint smile lighting up his gentle, foolish face, he began.. -

Computational Geometry: 1D Range Tree, 2D Range Tree, Line

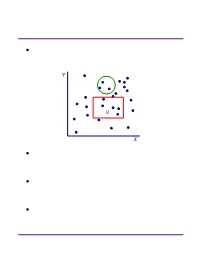

Computational Geometry Lecture 17 Computational geometry Algorithms for solving “geometric problems” in 2D and higher. Fundamental objects: point, line segment, line. Basic structures: point set, polygon, triangulation, convex hull. Orthogonal range searching Input: n points in d dimensions • E.g., representing a database of n records each with d numeric fields Query: Axis-aligned box (in 2D, a rectangle) • Report on the points inside the box: • Are there any points? • How many are there? • List the points. Goal: Preprocess points into a data structure to support fast queries • Primary goal: Static data structure • In 1D, we will also obtain a dynamic data structure supporting insert and delete 1D range searching In 1D, the query is an interval: First solution using ideas we know: • Interval trees • Represent each point x by the interval [x, x]. • Obtain a dynamic structure that can list k answers in a query in O(k lg n) time. Second solution using ideas we know: • Sort the points and store them in an array • Solve query by binary search on endpoints. • Obtain a static structure that can list k answers in a query in O(k + lg n) time. Goal: Obtain a dynamic structure that can list k answers in a query in O(k + lg n) time. -
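The second solution in this excerpt (sorted array plus binary search on the query endpoints) can be sketched in a few lines. This is an illustrative example only: the function name `range_query` and the sample points are hypothetical, and the query interval is assumed to be closed.

```python
from bisect import bisect_left, bisect_right


def range_query(sorted_points, lo, hi):
    """List every point p with lo <= p <= hi from an ascending sorted list."""
    i = bisect_left(sorted_points, lo)   # first index with point >= lo
    j = bisect_right(sorted_points, hi)  # first index with point > hi
    return sorted_points[i:j]            # the k answers, contiguous in the array


# Static structure: sort once up front, then answer any number of queries.
points = sorted([70, 3, 120, 21, 9, 45, 7, 100, 23, 72, 25])
print(range_query(points, 15, 71))
```

The two binary searches cost O(lg n) and copying the slice costs O(k), which matches the O(k + lg n) query time stated above. Insertions and deletions would require shifting array elements, which is why the structure is described as static.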

Range Searching

Range Searching • Data structure for a set of objects (points, rectangles, polygons) for efficient range queries. [Figure: query range Q in the X-Y plane] • Depends on type of objects and queries. Consider basic data structures with broad applicability. • Time-Space tradeoff: the more we preprocess and store, the faster we can solve a query. • Consider data structures with (nearly) linear space. Subhash Suri UC Santa Barbara Orthogonal Range Searching • Fix an n-point set P. It has 2^n subsets. How many are possible answers to geometric range queries? [Figure: six points labeled 1 to 6 in the X-Y plane] Some impossible rectangular ranges: (1,2,3), (1,4), (2,5,6). Range (1,5,6) is possible. • Efficiency comes from the fact that only a small fraction of subsets can be formed. • Orthogonal range searching deals with point sets and axis-aligned rectangle queries. • These generalize 1-dimensional sorting and searching, and the data structures are based on compositions of 1-dim structures. 1-Dimensional Search • Points in 1D P = {p1, p2, ..., pn}. • Queries are intervals. [Figure: number line with labels 15 and 71 and points 3, 7, 9, 21, 23, 25, 45, 70, 72, 100, 120] • If the range contains k points, we want to solve the problem in O(log n + k) time. • Does hashing work? Why not? • A sorted array achieves this bound. But it doesn’t extend to higher dimensions. • Instead, we use a balanced binary tree. Tree Search [Figure: balanced binary tree over the leaves 1, 3, 4, 7, 9, 12, 14, 15, 17, 20, 22, 24, 25, 27, 29, 31, with nodes u and v marked and query bounds xlo = 2, xhi = 23] • Build a balanced binary tree on the sorted list of points (keys). -

Assignment of Master's Thesis

CZECH TECHNICAL UNIVERSITY IN PRAGUE FACULTY OF INFORMATION TECHNOLOGY ASSIGNMENT OF MASTER’S THESIS Title: Approximate Pattern Matching In Sparse Multidimensional Arrays Using Machine Learning Based Methods Student: Bc. Anna Kučerová Supervisor: Ing. Luboš Krčál Study Programme: Informatics Study Branch: Knowledge Engineering Department: Department of Theoretical Computer Science Validity: Until the end of winter semester 2018/19 Instructions Sparse multidimensional arrays are a common data structure for effective storage, analysis, and visualization of scientific datasets. Approximate pattern matching and processing is essential in many scientific domains. Previous algorithms focused on deterministic filtering and aggregate matching using synopsis style indexing. However, little work has been done on application of heuristic based machine learning methods for these approximate array pattern matching tasks. Research current methods for multidimensional array pattern matching, discovery, and processing. Propose a method for array pattern matching and processing tasks utilizing machine learning methods, such as kernels, clustering, or PSO in conjunction with inverted indexing. Implement the proposed method and demonstrate its efficiency on both artificial and real world datasets. Compare the algorithm with deterministic solutions in terms of time and memory complexities and pattern occurrence miss rates. References Will be provided by the supervisor. doc. Ing. Jan Janoušek, Ph.D., Head of Department; prof. Ing. Pavel Tvrdík, CSc., Dean. Prague, February 28, 2017 Czech Technical University in Prague Faculty of Information Technology Department of Knowledge Engineering Master’s thesis Approximate Pattern Matching In Sparse Multidimensional Arrays Using Machine Learning Based Methods Bc. Anna Kučerová Supervisor: Ing. Luboš Krčál 9th May 2017 Acknowledgements Main credit goes to my supervisor Ing. -

I/O-Efficient Spatial Data Structures for Range Queries

I/O-Efficient Spatial Data Structures for Range Queries Lars Arge Kasper Green Larsen MADALGO, Department of Computer Science, Aarhus University, Denmark E-mail: [email protected],[email protected] 1 Introduction Range reporting is one of the most fundamental topics in spatial databases and computational geometry. In this class of problems, the input consists of a set of geometric objects, such as points, line segments, rectangles etc. The goal is to preprocess the input set into a data structure, such that given a query range, one can efficiently report all input objects intersecting the range. The ranges most commonly considered are axis-parallel rectangles, halfspaces, points, simplices and balls. In this survey, we focus on the planar orthogonal range reporting problem in the external memory model of Aggarwal and Vitter [2]. Here the input consists of a set of N points in the plane, and the goal is to support reporting all points inside an axis-parallel query rectangle. We use B to denote the disk block size in number of points. The cost of answering a query is measured in the number of I/Os performed and the space of the data structure is measured in the number of disk blocks occupied, hence linear space is O(N/B) disk blocks. Outline. In Section 2, we set out by reviewing the classic B-tree for solving one-dimensional orthogonal range reporting, i.e. given N points on the real line and a query interval q = [q1, q2], report all T points inside q. In Section 3 we present optimal solutions for planar orthogonal range reporting, and finally in Section 4, we briefly discuss related range searching problems. -

Balanced Binary Search Trees 1 Introduction 2 BST Analysis

csce750: Analysis of Algorithms, Fall 2020. Lecture Notes: Balanced Binary Search Trees This document contains slides from the lecture, formatted to be suitable for printing or individual reading, and with some supplemental explanations added. It is intended as a supplement to, rather than a replacement for, the lectures themselves; you should not expect the notes to be self-contained or complete on their own. 1 Introduction CLRS 12, 13 A binary search tree is a data structure that supports these operations: • INSERT(k) • SEARCH(k) • DELETE(k) Basic idea: Store one key at each node. • All keys in the left subtree of n are less than the key stored at n. • All keys in the right subtree of n are greater than the key stored at n. Search and insert are trivial. Delete is slightly trickier, but not too bad. You may notice that these operations are very similar to the operations available for hash tables. However, data structures like BSTs remain important because they can be extended to efficiently support other useful operations like iterating over the elements in order, and finding the largest and smallest elements. These things cannot be done efficiently in hash tables. 2 BST Analysis Each operation can be done in time O(h) on a BST of height h. Worst case: Θ(n) Aside: Does randomization help? • Answer: Sort of. If we know all of the keys at the start, and insert them in a random order, in which each of the n! permutations is equally likely, then the expected tree height is O(lg n).
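The O(h) claim and the effect of insertion order in this excerpt can be made concrete with a small sketch. It assumes an unbalanced BST with distinct keys, and the helper names (`insert`, `search`, `height`, `build`) are hypothetical; here height counts the nodes on the longest root-to-leaf path.

```python
import random


class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def insert(root, key):
    """Insert key iteratively and return the root; duplicate keys are ignored."""
    if root is None:
        return Node(key)
    node = root
    while True:
        if key < node.key:
            if node.left is None:
                node.left = Node(key)
                return root
            node = node.left
        elif key > node.key:
            if node.right is None:
                node.right = Node(key)
                return root
            node = node.right
        else:
            return root


def search(root, key):
    """Follow one root-to-leaf path, so the cost is O(h)."""
    node = root
    while node is not None and node.key != key:
        node = node.left if key < node.key else node.right
    return node is not None


def height(root):
    """Number of nodes on the longest root-to-leaf path (0 for an empty tree)."""
    best, stack = 0, [(root, 1)]
    while stack:
        node, depth = stack.pop()
        if node is None:
            continue
        best = max(best, depth)
        stack.append((node.left, depth + 1))
        stack.append((node.right, depth + 1))
    return best


def build(keys):
    root = None
    for k in keys:
        root = insert(root, k)
    return root


keys = list(range(1, 201))
chain = build(keys)                 # sorted order degenerates into a path
shuffled = keys[:]
random.Random(0).shuffle(shuffled)  # fixed seed only to make the run repeatable
bushy = build(shuffled)
print(height(chain), height(bushy))
```

Inserting the keys in sorted order produces a path whose height equals the number of keys, which is the Θ(n) worst case; a random insertion order typically gives a much shallower tree, in line with the expected O(lg n) height mentioned above.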