Parallel Range, Segment and Rectangle Queries with Augmented Maps

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Sweeping the Sphere

João Dinis, Departamento de Física, Faculdade de Ciências, Universidade de Lisboa, Campo Grande, Edifício C8, 1749-016 Lisboa, Portugal

Margarida Mamede, CITI, Departamento de Informática, Faculdade de Ciências e Tecnologia, FCT, Universidade Nova de Lisboa, 2829-516 Caparica, Portugal

Abstract: We introduce the first sweep line algorithm for computing spherical Voronoi diagrams, which proves that Fortune’s method can be extended to points on a sphere surface. This algorithm is similar to Fortune’s plane sweep algorithm, sweeping the sphere with a circular line instead of a straight one. Like its planar counterpart, the novel linear-space algorithm has worst-case optimal running time. Furthermore, it copes very well with degeneracies and is easy to implement. Experimental results show that the performance of our algorithm is very similar to that of Fortune’s algorithm, both with synthetic data sets and with real data.

The usual solutions make use of the connection between convex hulls and spherical Delaunay triangulations. [...] and Hong [5], [6], which is O(n log n) worst case optimal, where n is the number of sites. An asymptotically slower alternative in the worst case is the randomized incremental algorithm of Clarkson and Shor [7], whose expected running time is O(n log n). The well-known Quickhull algorithm of Barber et al. [8] can be seen as an efficient variation of the previous algorithm.

A different approach is to adapt the randomized incremental algorithm that computes the planar Delaunay triangulation [9], [10]. The essential operation used in the incremental step, which checks if a circle defined by three sites contains a [...]

Interval Trees Storing and Searching Intervals

Instead of points, suppose you want to keep track of axis-aligned segments:

• Range queries: return all segments that have any part of them inside the rectangle.
• Motivation: wiring diagrams, genes on genomes

Simpler Problem: 1-d intervals

• Segments with at least one endpoint in the rectangle can be found by building a 2d range tree on the 2n endpoints.
  - Keep a pointer from each endpoint stored in the tree to its segment.
  - Mark segments as you output them, so that you don’t output contained segments twice.
• Segments with no endpoints in range are the harder part.
  - Consider just horizontal segments.
  - They must cross a vertical side of the region.
  - Leads to subproblem: given a vertical line, find the segments that it crosses.
  - (y-coords become irrelevant for this subproblem)

Interval Trees

Recursively build a tree on interval set S as follows:

• Sort the 2n endpoints.
• Let xmid be the median point.
• Store intervals that cross xmid in node N.
• Store intervals that are completely to the left of xmid in Nleft.
• Store intervals that are completely to the right of xmid in Nright.

Interval Trees, continued

• Will be approximately balanced because by choosing the median, we split the set of endpoints in half each time.
  - Depth is O(log n).
• Have to store xmid with each node.
• Uses O(n) storage:
  - each interval stored once, plus
  - fewer than n nodes (each node contains at least one interval).
• Can be built in O(n log n) time.
• Can be searched in O(log n + k) time [k = #
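The recursive construction described in the slides can be sketched as follows. This is a minimal illustration, not the slides' own code: the names `Node`, `build`, and `stab` are hypothetical, intervals are assumed to be closed `(lo, hi)` tuples, and each node keeps its crossing intervals in two sorted orders so that a stabbing query can stop scanning as soon as an interval misses the query point.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Interval = Tuple[float, float]  # closed interval (lo, hi); an assumption of this sketch


@dataclass
class Node:
    xmid: float
    by_lo: List[Interval]            # intervals crossing xmid, ascending left endpoint
    by_hi: List[Interval]            # same intervals, descending right endpoint
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def build(intervals: List[Interval]) -> Optional[Node]:
    """Recursively build the tree: split on the median endpoint."""
    if not intervals:
        return None
    endpoints = sorted(e for iv in intervals for e in iv)
    xmid = endpoints[len(endpoints) // 2]
    # xmid is an endpoint of some interval, so `crossing` is never empty
    # and the recursion always makes progress.
    crossing = [iv for iv in intervals if iv[0] <= xmid <= iv[1]]
    left = [iv for iv in intervals if iv[1] < xmid]
    right = [iv for iv in intervals if iv[0] > xmid]
    return Node(
        xmid,
        sorted(crossing),
        sorted(crossing, key=lambda iv: -iv[1]),
        build(left),
        build(right),
    )


def stab(node: Optional[Node], x: float) -> List[Interval]:
    """Report every stored interval that contains the point x."""
    out = []
    while node is not None:
        if x < node.xmid:
            # Crossing intervals all end at or after xmid > x,
            # so they contain x exactly when they start at or before x.
            for iv in node.by_lo:
                if iv[0] > x:
                    break
                out.append(iv)
            node = node.left
        else:
            # Crossing intervals all start at or before xmid <= x,
            # so they contain x exactly when they end at or after x.
            for iv in node.by_hi:
                if iv[1] < x:
                    break
                out.append(iv)
            node = node.right
    return out


tree = build([(1, 5), (2, 3), (4, 8), (6, 7), (9, 10)])
print(sorted(stab(tree, 4.5)))  # intervals containing 4.5
```

Keeping two sorted copies of the crossing intervals is one common way to get output-sensitive scanning at each node; other layouts are possible.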

Uwaterloo Latex Thesis Template

Approximation Algorithms for Geometric Covering Problems for Disks and Squares, by Nan Hu

A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Master of Mathematics in Computer Science. Waterloo, Ontario, Canada, 2013. © Nan Hu 2013

I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public.

Abstract

Geometric covering is a well-studied topic in computational geometry. We study three covering problems: Disjoint Unit-Disk Cover, Depth-(≤ K) Packing and Red-Blue Unit-Square Cover. In the Disjoint Unit-Disk Cover problem, we are given a point set and want to cover the maximum number of points using disjoint unit disks. We prove that the problem is NP-complete and give a polynomial-time approximation scheme (PTAS) for it. In Depth-(≤ K) Packing for Arbitrary-Size Disks/Squares, we are given a set of arbitrary-size disks/squares, and want to find a subset with depth at most K and maximizing the total area. We prove a depth reduction theorem and present a PTAS. In Red-Blue Unit-Square Cover, we are given a red point set, a blue point set and a set of unit squares, and want to find a subset of unit squares to cover all the blue points and the minimum number of red points.

Neuron C Reference Guide Iii • Introduction to the LONWORKS Platform (078-0391-01A)

Neuron C Reference Guide: Provides reference info for writing programs using the Neuron C programming language. 078-0140-01G

Echelon, LONWORKS, LONMARK, NodeBuilder, LonTalk, Neuron, 3120, 3150, ShortStack, LonMaker, and the Echelon logo are trademarks of Echelon Corporation that may be registered in the United States and other countries. Other brand and product names are trademarks or registered trademarks of their respective holders.

Neuron Chips and other OEM Products were not designed for use in equipment or systems, which involve danger to human health or safety, or a risk of property damage and Echelon assumes no responsibility or liability for use of the Neuron Chips in such applications. Parts manufactured by vendors other than Echelon and referenced in this document have been described for illustrative purposes only, and may not have been tested by Echelon. It is the responsibility of the customer to determine the suitability of these parts for each application.

ECHELON MAKES AND YOU RECEIVE NO WARRANTIES OR CONDITIONS, EXPRESS, IMPLIED, STATUTORY OR IN ANY COMMUNICATION WITH YOU, AND ECHELON SPECIFICALLY DISCLAIMS ANY IMPLIED WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of Echelon Corporation. Printed in the United States of America. Copyright © 2006, 2014 Echelon Corporation. Echelon Corporation, www.echelon.com

Welcome

This manual describes the Neuron® C Version 2.3 programming language. It is a companion piece to the Neuron C Programmer's Guide.

Augmentation: Range Trees (PDF)

Lecture 9: Augmentation (6.046J, Spring 2015)

This lecture covers augmentation of data structures, including

• easy tree augmentation
• order-statistics trees
• finger search trees, and
• range trees

The main idea is to modify “off-the-shelf” common data structures to store (and update) additional information.

Easy Tree Augmentation

The goal here is to store x.f at each node x, which is a function of the node, namely f(subtree rooted at x). Suppose x.f can be computed (updated) in O(1) time from x, children and children.f. Then modifying a set S of nodes costs O(# of ancestors of S) to update x.f, because we need to walk up the tree to the root. Two examples of O(lg n) updates are

• AVL trees: after rotating two nodes, first update the new bottom node and then update the new top node
• 2-3 trees: after splitting a node, update the two new nodes.

In both cases, then update up the tree.

Order-Statistics Trees (from 6.006)

The goal of order-statistics trees is to design an Abstract Data Type (ADT) interface that supports the following operations

• insert(x), delete(x), successor(x),
• rank(x): find x’s index in the sorted order, i.e., # of elements < x,
• select(i): find the element with rank i.

We can implement the above ADT using easy tree augmentation on AVL trees (or 2-3 trees) to store subtree size: f(subtree) = # of nodes in it. Then we also have x.size = 1 + Σ c.size for c in x.children.
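The subtree-size augmentation can be sketched in a few lines. To keep the example short, this sketch uses a plain, unbalanced binary search tree rather than the AVL or 2-3 trees the lecture names, so the O(lg n) bounds do not hold here; only the bookkeeping of `size` and its use in `rank` and `select` is illustrated. All function names are hypothetical, and `select` is assumed to be 0-based so that it inverts `rank`.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None
        self.size = 1  # augmented field: number of nodes in this subtree


def size(node):
    return node.size if node else 0


def insert(root, key):
    """Insert key and refresh size on the way back up to the root."""
    if root is None:
        return Node(key)
    if key < root.key:
        root.left = insert(root.left, key)
    else:
        root.right = insert(root.right, key)
    # x.size = 1 + sum of c.size over the children c of x
    root.size = 1 + size(root.left) + size(root.right)
    return root


def rank(root, key):
    """Number of stored elements strictly smaller than key."""
    r = 0
    while root:
        if key <= root.key:
            root = root.left
        else:
            # root and everything in its left subtree are smaller than key
            r += size(root.left) + 1
            root = root.right
    return r


def select(root, i):
    """Element with rank i (0-based)."""
    while root:
        left_size = size(root.left)
        if i < left_size:
            root = root.left
        elif i == left_size:
            return root.key
        else:
            i -= left_size + 1
            root = root.right
    raise IndexError("rank out of range")


root = None
for k in [50, 30, 70, 20, 40, 60, 80]:
    root = insert(root, k)
print(rank(root, 60), select(root, 4))
```

In a balanced tree the same `size` field would additionally be refreshed after each rotation or split, bottom node first, as the lecture describes.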

Advanced Data Structures

PETER BRASS, City College of New York

CAMBRIDGE UNIVERSITY PRESS: Cambridge, New York, Melbourne, Madrid, Cape Town, Singapore, São Paulo. Cambridge University Press, The Edinburgh Building, Cambridge CB2 8RU, UK. Published in the United States of America by Cambridge University Press, New York. www.cambridge.org. Information on this title: www.cambridge.org/9780521880374

© Peter Brass 2008. This publication is in copyright. Subject to statutory exception and to the provision of relevant collective licensing agreements, no reproduction of any part may take place without the written permission of Cambridge University Press. First published in print format 2008. ISBN-13 978-0-511-43685-7 eBook (EBL); ISBN-13 978-0-521-88037-4 hardback.

Cambridge University Press has no responsibility for the persistence or accuracy of urls for external or third-party internet websites referred to in this publication, and does not guarantee that any content on such websites is, or will remain, accurate or appropriate.

Contents

Preface

1 Elementary Structures
1.1 Stack
1.2 Queue
1.3 Double-Ended Queue
1.4 Dynamical Allocation of Nodes
1.5 Shadow Copies of Array-Based Structures

2 Search Trees
2.1 Two Models of Search Trees
2.2 General Properties and Transformations
2.3 Height of a Search Tree
2.4 Basic Find, Insert, and Delete
2.5 Returning from Leaf to Root
2.6 Dealing with Nonunique Keys
2.7 Queries for the Keys in an Interval
2.8 Building Optimal Search Trees
2.9 Converting Trees into Lists
2.10

Search Trees

Lecture III

“Trees are the earth’s endless effort to speak to the listening heaven.” – Rabindranath Tagore, Fireflies, 1928

Alice was walking beside the White Knight in Looking Glass Land.

“You are sad.” the Knight said in an anxious tone: “let me sing you a song to comfort you.”

“Is it very long?” Alice asked, for she had heard a good deal of poetry that day.

“It’s long.” said the Knight, “but it’s very, very beautiful. Everybody that hears me sing it - either it brings tears to their eyes, or else -”

“Or else what?” said Alice, for the Knight had made a sudden pause.

“Or else it doesn’t, you know. The name of the song is called ‘Haddocks’ Eyes.’”

“Oh, that’s the name of the song, is it?” Alice said, trying to feel interested.

“No, you don’t understand,” the Knight said, looking a little vexed. “That’s what the name is called. The name really is ‘The Aged, Aged Man.’”

“Then I ought to have said ‘That’s what the song is called’?” Alice corrected herself.

“No you oughtn’t: that’s another thing. The song is called ‘Ways and Means’ but that’s only what it’s called, you know!”

“Well, what is the song then?” said Alice, who was by this time completely bewildered.

“I was coming to that,” the Knight said. “The song really is ‘A-sitting On a Gate’: and the tune’s my own invention.”

So saying, he stopped his horse and let the reins fall on its neck: then slowly beating time with one hand, and with a faint smile lighting up his gentle, foolish face, he began.

Computational Geometry: 1D Range Tree, 2D Range Tree, Line

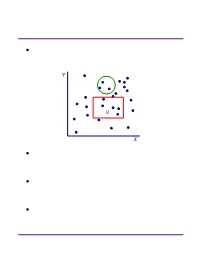

Computational Geometry, Lecture 17

Computational geometry

Algorithms for solving “geometric problems” in 2D and higher.

• Fundamental objects: point, line segment, line
• Basic structures: point set, polygon, triangulation, convex hull

Orthogonal range searching

Input: n points in d dimensions

• E.g., representing a database of n records each with d numeric fields

Query: Axis-aligned box (in 2D, a rectangle)

• Report on the points inside the box:
  - Are there any points?
  - How many are there?
  - List the points.

Goal: Preprocess points into a data structure to support fast queries

• Primary goal: Static data structure
• In 1D, we will also obtain a dynamic data structure supporting insert and delete

1D range searching

In 1D, the query is an interval.

First solution using ideas we know:

• Interval trees
• Represent each point x by the interval [x, x].
• Obtain a dynamic structure that can list k answers in a query in O(k lg n) time.

Second solution using ideas we know:

• Sort the points and store them in an array
• Solve query by binary search on endpoints.
• Obtain a static structure that can list k answers in a query in O(k + lg n) time.

Goal: Obtain a dynamic structure that can list k answers in a query in O(k + lg n) time.
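The second, static solution (sorted array plus binary search on the query endpoints) is short enough to sketch directly. The function name `range_query` and the sample points are illustrative assumptions; the query interval is taken to be closed, and the binary searches use Python's standard `bisect` module.

```python
from bisect import bisect_left, bisect_right


def range_query(sorted_points, lo, hi):
    """Return all points p with lo <= p <= hi from an ascending list.

    Two binary searches cost O(lg n); slicing out the k answers costs O(k).
    """
    i = bisect_left(sorted_points, lo)    # first index with point >= lo
    j = bisect_right(sorted_points, hi)   # first index with point > hi
    return sorted_points[i:j]


points = sorted([45, 3, 120, 7, 21, 9, 23, 100, 25, 70, 72])
print(range_query(points, 8, 25))
```

The structure is static because inserting into the middle of an array costs O(n), which is what motivates the dynamic tree-based goal stated above.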

Navigation Techniques in Augmented and Mixed Reality: Crossing the Virtuality Continuum

Chapter 20. NAVIGATION TECHNIQUES IN AUGMENTED AND MIXED REALITY: CROSSING THE VIRTUALITY CONTINUUM

Raphael Grasset (1,2), Alessandro Mulloni (2), Mark Billinghurst (1) and Dieter Schmalstieg (2)

(1) HIT Lab NZ, University of Canterbury, New Zealand
(2) Institute for Computer Graphics and Vision, Graz University of Technology, Austria

1. Introduction

Exploring and surveying the world has been an important goal of humankind for thousands of years. Entering the 21st century, the Earth has almost been fully digitally mapped. Widespread deployment of GIS (Geographic Information Systems) technology and a tremendous increase of both satellite and street-level mapping over the last decade enable the public to view large portions of the world using computer applications such as Bing Maps or Google Earth. Mobile context-aware applications further enhance the exploration of spatial information, as users now have access to it while on the move. These applications can present a view of the spatial information that is personalised to the user’s current context (context-aware), such as their physical location and personal interests. For example, a person visiting an unknown city can open a map application on her smartphone to instantly obtain a view of the surrounding points of interest.

Augmented Reality (AR) is one increasingly popular technology that supports the exploration of spatial information. AR merges virtual and real spaces and offers new tools for exploring and navigating through space [1]. AR navigation aims to enhance navigation in the real world or to provide techniques for viewpoint control for other tasks within an AR system. AR navigation can be naively thought to have a high degree of similarity with real world navigation.

Visibility Graphs and Cell Decompositions

CIS 390, Kostas Daniilidis. With material from Chapter 5 (Roadmaps) of Principles of Robot Motion: Theory, Algorithms, and Implementation by Howie Choset et al., The MIT Press © 2005.

For polygonal obstacles in 2D

• Assume robot is a point
• Any shortest path from start to goal among a set of disjoint polygonal obstacles is a polygonal path whose inner vertices are convex vertices of the obstacles (imagine a tight rope from start to end)

Visibility graph

• Vertices: all vertices of the polygonal obstacles plus the start and the goal
• Edges: all line segments between vertices that do not intersect obstacles
• It is not a planar graph: edges are crossing (not at vertices)

Visibility graph construction

• Brute force O(n^3) algorithm visits
  - all n vertices
  - applies a rotational plane sweep that connects with all other n vertices
  - and determines whether each segment intersects any of the O(n) edges
• We can do better by sorting the vertices.

Sweep Line Algorithm

• Input: A set of vertices {νi} (whose edges do not intersect) and a vertex ν
• Output: A subset of vertices from {νi} that are within line of sight of ν

1: For each vertex νi, calculate αi, the angle from the horizontal axis to the line segment ννi.
2: Create the vertex list E, containing the αi's sorted in increasing order.
3: Create the active list S, containing the sorted list of edges that intersect the horizontal half-line emanating from ν.
4: for all αi do
5: if νi is visible to ν then
6: Add the edge (ν, νi) to the visibility graph.
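Only the first two steps of the sweep (computing each angle αi and sorting the vertices by it) are fully specified in the excerpt, so the sketch below covers just those. The function name `sort_by_angle` and the tuple representation of points are assumptions, and angles are normalised to [0, 2π) so that the sweep starts at the positive horizontal axis and turns counter-clockwise.

```python
import math


def sort_by_angle(v, vertices):
    """Order vertices by the angle of the segment from v, measured
    counter-clockwise from the positive horizontal axis."""
    vx, vy = v

    def angle(p):
        # atan2 returns a value in (-pi, pi]; the modulo maps it into [0, 2*pi)
        return math.atan2(p[1] - vy, p[0] - vx) % (2 * math.pi)

    return sorted(vertices, key=angle)


print(sort_by_angle((0, 0), [(0, 1), (-1, 0), (0, -1), (1, 0)]))
```

The later steps (maintaining the active edge list and the visibility test) depend on details the excerpt cuts off, so they are not sketched here.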

Range Searching

Subhash Suri, UC Santa Barbara

• Data structure for a set of objects (points, rectangles, polygons) for efficient range queries.
• Depends on type of objects and queries. Consider basic data structures with broad applicability.
• Time-Space tradeoff: the more we preprocess and store, the faster we can solve a query.
• Consider data structures with (nearly) linear space.

[Figure: a query range Q in the X-Y plane.]

Orthogonal Range Searching

• Fix an n-point set P. It has 2^n subsets. How many are possible answers to geometric range queries?
• Efficiency comes from the fact that only a small fraction of subsets can be formed.
• Orthogonal range searching deals with point sets and axis-aligned rectangle queries.
• These generalize 1-dimensional sorting and searching, and the data structures are based on compositions of 1-dim structures.

[Figure: six labeled points in the X-Y plane. Some impossible rectangular ranges: (1,2,3), (1,4), (2,5,6). Range (1,5,6) is possible.]

1-Dimensional Search

• Points in 1D: P = {p1, p2, ..., pn}.
• Queries are intervals.
• If the range contains k points, we want to solve the problem in O(log n + k) time.
• Does hashing work? Why not?
• A sorted array achieves this bound. But it doesn’t extend to higher dimensions.
• Instead, we use a balanced binary tree.

[Figure: points on a number line.]

Tree Search

[Figure: balanced binary tree over the keys 1, 3, 4, 7, 9, 12, 14, 15, 17, 20, 22, 24, 25, 27, 29, 31, with search paths to leaves u and v for the query xlo = 2, xhi = 23.]

• Build a balanced binary tree on the sorted list of points (keys).
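A rough sketch of the tree-based 1-dimensional search follows. It is an illustration under stated assumptions rather than the slides' exact structure: keys are stored at every node (the slides appear to keep them at the leaves), a node is a plain `(left, key, right)` tuple, and the names `build` and `report` are hypothetical. The query prunes any subtree that cannot intersect [lo, hi], so it visits O(log n + k) nodes on a balanced tree.

```python
def build(keys):
    """Build a balanced binary search tree from an ascending list of keys.

    A node is a tuple (left, key, right); an empty subtree is None.
    """
    if not keys:
        return None
    mid = len(keys) // 2
    return (build(keys[:mid]), keys[mid], build(keys[mid + 1:]))


def report(node, lo, hi, out):
    """Append every key k with lo <= k <= hi to out, in ascending order."""
    if node is None:
        return
    left, key, right = node
    if lo <= key:            # the left subtree may still hold keys >= lo
        report(left, lo, hi, out)
    if lo <= key <= hi:
        out.append(key)
    if key <= hi:            # the right subtree may still hold keys <= hi
        report(right, lo, hi, out)


keys = [1, 3, 4, 7, 9, 12, 14, 15, 17, 20, 22, 24, 25, 27, 29, 31]
found = []
report(build(keys), 2, 23, found)
print(found)
```

The keys and the query range 2 to 23 are taken from the tree figure in the slides; the slicing in `build` is chosen for brevity, not efficiency.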

I/O-Efficient Spatial Data Structures for Range Queries

Lars Arge, Kasper Green Larsen. MADALGO, Department of Computer Science, Aarhus University, Denmark

1 Introduction

Range reporting is one of the most fundamental topics in spatial databases and computational geometry. In this class of problems, the input consists of a set of geometric objects, such as points, line segments, rectangles etc. The goal is to preprocess the input set into a data structure, such that given a query range, one can efficiently report all input objects intersecting the range. The ranges most commonly considered are axis-parallel rectangles, halfspaces, points, simplices and balls.

In this survey, we focus on the planar orthogonal range reporting problem in the external memory model of Aggarwal and Vitter [2]. Here the input consists of a set of N points in the plane, and the goal is to support reporting all points inside an axis-parallel query rectangle. We use B to denote the disk block size in number of points. The cost of answering a query is measured in the number of I/Os performed and the space of the data structure is measured in the number of disk blocks occupied, hence linear space is O(N/B) disk blocks.

Outline. In Section 2, we set out by reviewing the classic B-tree for solving one-dimensional orthogonal range reporting, i.e. given N points on the real line and a query interval q = [q1, q2], report all T points inside q. In Section 3 we present optimal solutions for planar orthogonal range reporting, and finally in Section 4, we briefly discuss related range searching problems.