Anytime Tree Search for Combinatorial Optimization Luc Libralesso

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

4 Beyond Classical Search

BEYOND CLASSICAL 4 SEARCH In which we relax the simplifying assumptions of the previous chapter, thereby getting closer to the real world. Chapter 3 addressed a single category of problems: observable, deterministic, known envi- ronments where the solution is a sequence of actions. In this chapter, we look at what happens when these assumptions are relaxed. We begin with a fairly simple case: Sections 4.1 and 4.2 cover algorithms that perform purely local search in the state space, evaluating and modify- ing one or more current states rather than systematically exploring paths from an initial state. These algorithms are suitable for problems in which all that matters is the solution state, not the path cost to reach it. The family of local search algorithms includes methods inspired by statistical physics (simulated annealing)andevolutionarybiology(genetic algorithms). Then, in Sections 4.3–4.4, we examine what happens when we relax the assumptions of determinism and observability. The key idea is that if an agent cannot predict exactly what percept it will receive, then it will need to consider what to do under each contingency that its percepts may reveal. With partial observability, the agent will also need to keep track of the states it might be in. Finally, Section 4.5 investigates online search,inwhichtheagentisfacedwithastate space that is initially unknown and must be explored. 4.1 LOCAL SEARCH ALGORITHMS AND OPTIMIZATION PROBLEMS The search algorithms that we have seen so far are designed to explore search spaces sys- tematically. This systematicity is achieved by keeping one or more paths in memory and by recording which alternatives have been explored at each point along the path. -

A. Local Beam Search with K=1. A. Local Beam Search with K = 1 Is Hill-Climbing Search

4. Give the name that results from each of the following special cases: a. Local beam search with k=1. a. Local beam search with k = 1 is hill-climbing search. b. Local beam search with one initial state and no limit on the number of states retained. b. Local beam search with k = ∞: strictly speaking, this doesn’t make sense. The idea is that if every successor is retained (because k is unbounded), then the search resembles breadth-first search in that it adds one complete layer of nodes before adding the next layer. Starting from one state, the algorithm would be essentially identical to breadth-first search except that each layer is generated all at once. c. Simulated annealing with T=0 at all times (and omitting the termination test). c. Simulated annealing with T = 0 at all times: ignoring the fact that the termination step would be triggered immediately, the search would be identical to first-choice hill climbing because every downward successor would be rejected with probability 1. d. Simulated annealing with T=infinity at all times. d. Simulated annealing with T = infinity at all times: ignoring the fact that the termination step would never be triggered, the search would be identical to a random walk because every successor would be accepted with probability 1. Note that, in this case, a random walk is approximately equivalent to depth-first search. e. Genetic algorithm with population size N=1. e. Genetic algorithm with population size N = 1: if the population size is 1, then the two selected parents will be the same individual; crossover yields an exact copy of the individual; then there is a small chance of mutation. -

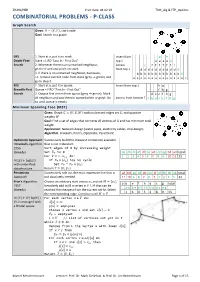

COMBINATORIAL PROBLEMS - P-CLASS Graph Search Given: 퐺 = (푉, 퐸), Start Node Goal: Search in a Graph

ZHAW/HSR Print date: 04.02.19 TSM_Alg & FTP_Optimiz COMBINATORIAL PROBLEMS - P-CLASS Graph Search Given: 퐺 = (푉, 퐸), start node Goal: Search in a graph DFS 1. Start at a, put it on stack. Insert from g h Depth-First- Stack = LIFO "Last In - First Out" top ↓ e e e e e Search 2. Whenever there is an unmarked neighbour, Access c f f f f f f f go there and and put it on stack from top ↓ d d d d d d d d d d d 3. If there is no unmarked neighbour, backtrack; b b b b b b b b b b b b b i.e. remove current node from stack (grey ⇒ green) and a a a a a a a a a a a a a a a go to step 2. BFS 1. Start at a, put it in queue. Insert from top ↓ h g Breadth-First Queue = FIFO "First In - First Out" f h g Search 2. Output first vertex from queue (grey ⇒ green). Mark d e c f h g all neighbors and put them in queue (white ⇒ grey). Do Access from bottom ↑ a b d e c f h g so until queue is empty Minimum Spanning Tree (MST) Given: Graph 퐺 = (푉, 퐸, 푊) with undirected edges set 퐸, with positive weights 푊 Goal: Find a set of edges that connects all vertices of G and has minimum total weight. Application: Network design (water pipes, electricity cables, chip design) Algorithm: Kruskal's, Prim's, Optimistic, Pessimistic Optimistic Approach Successively build the cheapest connection available =Kruskal's algorithm that is not redundant. -

6 Adversarial Search

6 ADVERSARIAL SEARCH In which we examine the problems that arise when we try to plan ahead in a world where other agents are planning against us. 6.1 GAMES Chapter 2 introduced multiagent environments, in which any given agent will need to con- sider the actions of other agents and how they affect its own welfare. The unpredictability of these other agents can introduce many possible contingencies into the agent’s problem- solving process, as discussed in Chapter 3. The distinction between cooperative and compet- itive multiagent environments was also introduced in Chapter 2. Competitive environments, in which the agents’ goals are in conflict, give rise to adversarial search problems—often GAMES known as games. Mathematical game theory, a branch of economics, views any multiagent environment as a game provided that the impact of each agent on the others is “significant,” regardless of whether the agents are cooperative or competitive.1 In AI, “games” are usually of a rather specialized kind—what game theorists call deterministic, turn-taking, two-player, zero-sum ZEROSUM GAMES games of perfect information. In our terminology, this means deterministic, fully observable PERFECT INFORMATION environments in which there are two agents whose actions must alternate and in which the utility values at the end of the game are always equal and opposite. For example, if one player wins a game of chess (+1), the other player necessarily loses (–1). It is this opposition between the agents’ utility functions that makes the situation adversarial. We will consider multiplayer games, non-zero-sum games, and stochastic games briefly in this chapter, but will delay discussion of game theory proper until Chapter 17. -

"Large Scale Monte Carlo Tree Search on GPU"

Large Scale Monte Carlo Tree Search on GPU GPU+HK大ávモンテカルロ木探索 Doctoral Dissertation ¿7論£ Kamil Marek Rocki ロツキ カミル >L#ク Submitted to Department of Computer Science, Graduate School of Information Science and Technology, The University of Tokyo in partial fulfillment of the requirements for the degree of Doctor of Information Science and Technology Thesis Supervisor: Reiji Suda (½田L¯) Professor of Computer Science August, 2011 Abstract: Monte Carlo Tree Search (MCTS) is a method for making optimal decisions in artificial intelligence (AI) problems, typically for move planning in combinatorial games. It combines the generality of random simulation with the precision of tree search. Research interest in MCTS has risen sharply due to its spectacular success with computer Go and its potential application to a number of other difficult problems. Its application extends beyond games, and MCTS can theoretically be applied to any domain that can be described in terms of (state, action) pairs, as well as it can be used to simulate forecast outcomes such as decision support, control, delayed reward problems or complex optimization. The main advantages of the MCTS algorithm consist in the fact that, on one hand, it does not require any strategic or tactical knowledge about the given domain to make reasonable decisions, on the other hand algorithm can be halted at any time to return the current best estimate. So far, current research has shown that the algorithm can be parallelized on multiple CPUs. The motivation behind this work was caused by the emerging GPU- based systems and their high computational potential combined with the relatively low power usage compared to CPUs. -

Beam Search for Integer Multi-Objective Optimization

Beam Search for integer multi-objective optimization Thibaut Barthelemy Sophie N. Parragh Fabien Tricoire Richard F. Hartl University of Vienna, Department of Business Administration, Austria {thibaut.barthelemy,sophie.parragh,fabien.tricoire,richard.hartl}@univie.ac.at Abstract Beam search is a tree search procedure where, at each level of the tree, at most w nodes are kept. This results in a metaheuristic whose solving time is polynomial in w. Popular for single-objective problems, beam search has only received little attention in the context of multi-objective optimization. By introducing the concepts of oracle and filter, we define a paradigm to understand multi-objective beam search algorithms. Its theoretical analysis engenders practical guidelines for the design of these algorithms. The guidelines, suitable for any problem whose variables are integers, are applied to address a bi-objective 0-1 knapsack problem. The solver obtained outperforms the existing non-exact methods from the literature. 1 Introduction Everyday life decisions are often a matter of multi-objective optimization. Indeed, life is full of tradeoffs, as reflected for instance by discussions in parliaments or boards of directors. As a result, almost all cost-oriented problems of the classical literature give also rise to quality, durability, social or ecological concerns. Such goals are often conflicting. For instance, lower costs may lead to lower quality and vice versa. A generic approach to multi-objective optimization consists in computing a set of several good compromise solutions that decision makers discuss in order to choose one. Numerous methods have been developed to find the set of compromise solutions to multi-objective combi- natorial problems. -

IJIR Paper Template

International Journal of Research e-ISSN: 2348-6848 p-ISSN: 2348-795X Available at https://journals.pen2print.org/index.php/ijr/ Volume 06 Issue 10 September 2019 Study of Informed Searching Algorithm for Finding the Shortest Path Wint Aye Khaing1, Kyi Zar Nyunt2, Thida Win2, Ei Ei Moe3 1Faculty of Information Science, University of Computer Studies (Taungoo), Myanmar 2 Faculty of Information Science, University of Computer Studies (Taungoo), Myanmar 3 Faculty of Computer Science, University of Computer Studies (Taungoo), Myanmar [email protected],[email protected] [email protected], [email protected], [email protected], [email protected] Abstract: frequently deal with this question when planning trips with their cars. There are also many applications like logistic While technological revolution has active role to the planning or traffic simulation that need to solve a huge increase of computer information, growing computational number of such route queries. Shortest path can be either capabilities of devices, and raise the level of knowledge inconvenient for the client if he has to wait for the response abilities, and skills. Increase developments in science and or experience for the service provider if he has to make a lot technology. In city area traffic the shortest path finding is of computing power available. While algorithm is a very difficult in a road network. Shortest path searching is procedure or formula for solve problem. Algorithm usually very important in some special case as medical emergency, means a small procedure that solves a recurrent problem. spying, theft catching, fire brigade etc. In this paper used the shortest path algorithms for solving the shortest path 2. -

Compiler Construction

Compiler construction PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 10 Dec 2011 02:23:02 UTC Contents Articles Introduction 1 Compiler construction 1 Compiler 2 Interpreter 10 History of compiler writing 14 Lexical analysis 22 Lexical analysis 22 Regular expression 26 Regular expression examples 37 Finite-state machine 41 Preprocessor 51 Syntactic analysis 54 Parsing 54 Lookahead 58 Symbol table 61 Abstract syntax 63 Abstract syntax tree 64 Context-free grammar 65 Terminal and nonterminal symbols 77 Left recursion 79 Backus–Naur Form 83 Extended Backus–Naur Form 86 TBNF 91 Top-down parsing 91 Recursive descent parser 93 Tail recursive parser 98 Parsing expression grammar 100 LL parser 106 LR parser 114 Parsing table 123 Simple LR parser 125 Canonical LR parser 127 GLR parser 129 LALR parser 130 Recursive ascent parser 133 Parser combinator 140 Bottom-up parsing 143 Chomsky normal form 148 CYK algorithm 150 Simple precedence grammar 153 Simple precedence parser 154 Operator-precedence grammar 156 Operator-precedence parser 159 Shunting-yard algorithm 163 Chart parser 173 Earley parser 174 The lexer hack 178 Scannerless parsing 180 Semantic analysis 182 Attribute grammar 182 L-attributed grammar 184 LR-attributed grammar 185 S-attributed grammar 185 ECLR-attributed grammar 186 Intermediate language 186 Control flow graph 188 Basic block 190 Call graph 192 Data-flow analysis 195 Use-define chain 201 Live variable analysis 204 Reaching definition 206 Three address -

Job Shop Scheduling with Beam Search

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Bilkent University Institutional Repository European Journal of Operational Research 118 (1999) 390±412 www.elsevier.com/locate/orms Theory and Methodology Job shop scheduling with beam search I. Sabuncuoglu *, M. Bayiz Department of Industrial Engineering, Bilkent University, 06533 Ankara, Turkey Received 1 July 1997; accepted 1 August 1998 Abstract Beam Search is a heuristic method for solving optimization problems. It is an adaptation of the branch and bound method in which only some nodes are evaluated in the search tree. At any level, only the promising nodes are kept for further branching and remaining nodes are pruned o permanently. In this paper, we develop a beam search based scheduling algorithm for the job shop problem. Both the makespan and mean tardiness are used as the performance measures. The proposed algorithm is also compared with other well known search methods and dispatching rules for a wide variety of problems. The results indicate that the beam search technique is a very competitive and promising tool which deserves further research in the scheduling literature. Ó 1999 Elsevier Science B.V. All rights reserved. Keywords: Scheduling; Beam search; Job shop 1. Introduction (Lowerre, 1976). There have been a number of applications reported in the literature since then. Beam search is a heuristic method for solving Fox (1983) used beam search for solving complex optimization problems. It is an adaptation of the scheduling problems by a system called ISIS. La- branch and bound method in which only some ter, Ow and Morton (1988) studied the eects of nodes are evaluated. -

Randomized Parallel Algorithms for Backtrack Search and Branch-And-Bound Computation

Randomized Parallel Algorithms for Backtrack Search and Branch-and-Bound Computation RICHARD M. KARP AND YANJUN ZHANG University of California at Berkeley, Berkeley, California Abstract. Universal randomized methods for parallelizing sequential backtrack search and branch-and-bound computation are presented. These methods execute on message-passing multi- processor systems, and require no global data structures or complex communication protocols. For backtrack search, it is shown that, uniformly on all instances, the method described in this paper is likely to yield a speed-up within a small constant factor from optimal, when all solutions to the problem instance are required. For branch-and-bound computation, it is shown that, uniformly on all instances, the execution time of this method is unlikely to exceed a certain inherent lower bound by more than a constant factor. These randomized methods demonstrate the effectiveness of randomization in distributed parallel computation. Categories and Subject Descriptors: F.2.2 [Analysis of Algorithms and Problem Complexity]: Non-numerical Algorithms-computation on discrete structures; sorting and searching; F.1.2 [Com- putation by Abstract Devices]: Modes of computation-parallelism and concurrency; probabilistic computation; G.2.1 [Discrete Mathematics]: Combinatorics-combinutoria~ algorithms; G.3 [Prob- ability and Statistics]: probabilistic algotithms; 1.2.8 [Artificial Intelligence]: Problem Solving, Control Methods, and Search-backtracking; graph and tree search strategies General Terms: Algorithms Additional Key Words and Phrases: Backtrack search, branch-and-bound, distributed parallel computation 1. Introduction A combinatorial search problem, or simply search problem, is a problem of finding certain arrangements of some combinatorial objects among a large set of possible arrangements. -

Heuristics Table of Contents

Part I: Heuristics Table of Contents List of Contributors ......................................................... ix Preface ...................................................................... xi I. Heuristics ........................................................... 1 1. Heuristic Search for Planning Under Uncertainty Blai Bonet and Eric A. Hansen . 3 2. Heuristics, Planning, and Cognition Hector Geffner . 23 3. Mechanical Generation of Admissible Heuristics Robert Holte, Jonathan Schaeffer, and Ariel Felner . 43 4. Space Complexity of Combinatorial Search Richard E. Korf . 53 5. Paranoia Versus Overconfidence in Imperfect-Information Games Austin Parker, Dana Nau and V.S. Subrahmanian . 63 6. Heuristic Search: Pearl's Significance From a Personal Perspective Ira Pohl ................................................................. 89 II. Probability ......................................................... 103 7. Inference in Bayesian Networks: A Historical Perspective Adnan Darwiche . 105 8. Graphical Models of the Visual Cortex Thomas Dean . 121 9. On the Power of Belief Propagation: A Constraint Propagation Perspective Rina Dechter, Bozhena Bidyuk, Robert Mateescu, and Emma Rollon . 143 10. Bayesian Nonparametric Learning: Expressive Priors for Intelligent Systems Michael I. Jordan . 167 v 1 Heuristic Search for Planning under Uncertainty Blai Bonet and Eric A. Hansen 1 Introduction The artificial intelligence (AI) subfields of heuristic search and automated planning are closely related, with planning problems often providing a -

Improved Optimal and Approximate Power Graph Compression for Clearer Visualisation of Dense Graphs

Improved Optimal and Approximate Power Graph Compression for Clearer Visualisation of Dense Graphs Tim Dwyer∗ Christopher Mears† Kerri Morgan‡ Todd Niven§ Kim Marriott¶ Mark Wallacek Monash University, Australia (a) Flat graph with 30 nodes and 65 (b) Power Graph rendering computed using the (c) Power Graph computed using Beam Search (see 5.1) finds 15 links. heuristic of Royer et al. [12]: 7 modules, 36 links. modules to reduce the link count to 25. Figure 1: Three renderings of a network of dependencies between methods, properties and fields in a software system. In the Power Graph renderings an edge between a node and a module implies the node is connected to every member of the module. An edge between two modules implies a bipartite clique. In this way the Power Graph shows the precise connectivity of the directed graph but with much less clutter. ABSTRACT visualise in a way that individual links can still be followed. Such Drawings of highly connected (dense) graphs can be very difficult graphs occur frequently in nature as power-law or small-world net- to read. Power Graph Analysis offers an alternate way to draw a works. In practice, very dense graphs are often visualised in a way graph in which sets of nodes with common neighbours are shown that focuses less on high-fidelity readability of edges and more on grouped into modules. An edge connected to the module then im- highlighting highly-connected nodes or clusters of nodes through plies a connection to each member of the module. Thus, the entire techniques such as force-directed layout or abstraction determined graph may be represented with much less clutter and without loss by community detection [8].