IJIR Paper Template

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

AI-KI-B Heuristic Search

AI-KI-B Heuristic Search Ute Schmid & Diedrich Wolter Practice: Johannes Rabold Cognitive Systems and Smart Environments Applied Computer Science, University of Bamberg last change: 3 June 2019, 10:05 Outline Introduction of heuristic search algorithms, based on foundations of problem spaces and search • Uninformed Systematic Search • Depth-First Search (DFS) • Breadth-First Search (BFS) • Complexity of Blocks-World • Cost-based Optimal Search • Uniform Cost Search • Cost Estimation (Heuristic Function) • Heuristic Search Algorithms • Hill Climbing (Depth-First, Greedy) • Branch and Bound Algorithms (BFS-based) • Best First Search • A* • Designing Heuristic Functions • Problem Types Cost and Cost Estimation • "Real" cost is known for each operator. • Accumulated cost g(n) for a leaf node n on a partially expanded path can be calculated. • For problems where each operator has the same cost or where no information about costs is available, all operator applications have equal cost values. For cost values of 1, accumulated costs g(n) are equal to path-length d. • Sometimes available: Heuristics for estimating the remaining costs to reach the final state. • $\hat{h}(n)$: estimated costs to reach a goal state from node n • "Bad" heuristics can misguide search! Cost and Cost Estimation cont. Evaluation Function: $\hat{f}(n) = g(n) + \hat{h}(n)$ Cost and Cost Estimation cont. • "True costs" of an optimal path from an initial state s to a final state: f(s). • For a node n on this path, f can be decomposed into the already performed steps with cost g(n) and the yet to perform steps with true cost h(n). • $\hat{h}(n)$ can be an estimate which is greater or smaller than the true costs. -

CAP 4630 Artificial Intelligence

CAP 4630 Artificial Intelligence Instructor: Sam Ganzfried [email protected] • http://www.ultimateaiclass.com/ • https://moodle.cis.fiu.edu/ • HW1 out 9/5 today, due 9/28 (maybe 10/3) – Remember that you have up to 4 late days to use throughout the semester. – https://www.cs.cmu.edu/~sganzfri/HW1_AI.pdf – http://ai.berkeley.edu/search.html • Office hours • https://www.cs.cmu.edu/~sganzfri/Calendar_AI.docx • Midterm exam: on 10/19 • Final exam pushed back (likely on 12/12 instead of 12/5) • Extra lecture on NLP on 11/16 • Will likely only cover 1-1.5 lectures on logic and on planning • Only 4 homework assignments instead of 5 Heuristic functions 8-puzzle • The object of the puzzle is to slide the tiles horizontally or vertically into the empty space until the configuration matches the goal configuration. • The average solution cost for a randomly generated 8-puzzle instance is about 22 steps. The branching factor is about 3 (when the empty tile is in the middle, four moves are possible; when it is in a corner, two; and when it is along an edge, three). This means that an exhaustive tree search to depth 22 would look at about 3^22 ≈ 3.1 × 10^10 states. A graph search would cut this down by a factor of about 170,000 because only 181,440 distinct states are reachable (homework exercise). This is a manageable number, but for a 15-puzzle, it would be 10^13, so we will need a good heuristic function. -
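The arithmetic in the 8-puzzle excerpt above can be checked with a few lines of Python. This is only a sanity check of the quoted figures; the variable names are illustrative, and the count of reachable states relies on the standard fact that exactly half of the 9! tile arrangements are reachable from a given configuration.

```python
from math import factorial

# Figures quoted in the excerpt: branching factor about 3, depth about 22.
branching_factor = 3
depth = 22

# Exhaustive tree search: number of leaf states at depth 22.
tree_states = branching_factor ** depth

# Graph search: only half of the 9! permutations are reachable.
reachable_states = factorial(9) // 2

# Reduction factor gained by not revisiting states.
reduction = tree_states / reachable_states

print(f"tree search states : {tree_states:.2e}")
print(f"reachable states   : {reachable_states}")
print(f"reduction factor   : {reduction:,.0f}")
```

The reduction factor comes out slightly above 170,000, which matches the rounded value in the excerpt.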

4 Beyond Classical Search

4 BEYOND CLASSICAL SEARCH In which we relax the simplifying assumptions of the previous chapter, thereby getting closer to the real world. Chapter 3 addressed a single category of problems: observable, deterministic, known environments where the solution is a sequence of actions. In this chapter, we look at what happens when these assumptions are relaxed. We begin with a fairly simple case: Sections 4.1 and 4.2 cover algorithms that perform purely local search in the state space, evaluating and modifying one or more current states rather than systematically exploring paths from an initial state. These algorithms are suitable for problems in which all that matters is the solution state, not the path cost to reach it. The family of local search algorithms includes methods inspired by statistical physics (simulated annealing) and evolutionary biology (genetic algorithms). Then, in Sections 4.3–4.4, we examine what happens when we relax the assumptions of determinism and observability. The key idea is that if an agent cannot predict exactly what percept it will receive, then it will need to consider what to do under each contingency that its percepts may reveal. With partial observability, the agent will also need to keep track of the states it might be in. Finally, Section 4.5 investigates online search, in which the agent is faced with a state space that is initially unknown and must be explored. 4.1 LOCAL SEARCH ALGORITHMS AND OPTIMIZATION PROBLEMS The search algorithms that we have seen so far are designed to explore search spaces systematically. This systematicity is achieved by keeping one or more paths in memory and by recording which alternatives have been explored at each point along the path. -

Fast and Efficient Black Box Optimization Using the Parameter-Less Population Pyramid

Fast and Efficient Black Box Optimization Using the Parameter-less Population Pyramid B. W. Goldman [email protected] Department of Computer Science and Engineering, Michigan State University, East Lansing, 48823, USA W. F. Punch [email protected] Department of Computer Science and Engineering, Michigan State University, East Lansing, 48823, USA doi:10.1162/EVCO_a_00148 Abstract The parameter-less population pyramid (P3) is a recently introduced method for performing evolutionary optimization without requiring any user-specified parameters. P3’s primary innovation is to replace the generational model with a pyramid of multiple populations that are iteratively created and expanded. In combination with local search and advanced crossover, P3 scales to problem difficulty, exploiting previously learned information before adding more diversity. Across seven problems, each tested using on average 18 problem sizes, P3 outperformed all five advanced comparison algorithms. This improvement includes requiring fewer evaluations to find the global optimum and better fitness when using the same number of evaluations. Using both algorithm analysis and comparison, we find P3’s effectiveness is due to its ability to properly maintain, add, and exploit diversity. Unlike the best comparison algorithms, P3 was able to achieve this quality without any problem-specific tuning. Thus, unlike previous parameter-less methods, P3 does not sacrifice quality for applicability. Therefore we conclude that P3 is an efficient, general, parameter-less approach to black box optimization which is more effective than existing state-of-the-art techniques. Keywords Genetic algorithms, linkage learning, local search, parameter-less. 1 Introduction A primary purpose of evolutionary optimization is to efficiently find good solutions to challenging real-world problems with minimal prior knowledge about the problem itself. -

Artificial Intelligence 1: Informed Search

Informed Search II 1. When A* fails – Hill climbing, simulated annealing 2. Genetic algorithms When A* doesn’t work AIMA 4.1 A few slides adapted from CS 471, UBMC and Eric Eaton (in turn, adapted from slides by Charles R. Dyer, University of Wisconsin-Madison) Outline Local Search: Hill Climbing Escaping Local Maxima: Simulated Annealing Genetic Algorithms CIS 391 - Intro to AI Local search and optimization Local search: • Use single current state and move to neighboring states. Idea: start with an initial guess at a solution and incrementally improve it until it is one Advantages: • Use very little memory • Often find reasonable solutions in large or infinite state spaces. Useful for pure optimization problems. • Find or approximate best state according to some objective function • Optimal if the space to be searched is convex Hill Climbing Hill climbing on a surface of states h(s): Estimate of distance from a peak (smaller is better) OR: Height Defined by Evaluation Function (greater is better) Hill-climbing search I. While (uphill points exist): • Move in the direction of increasing evaluation function f II. Let $s_{next} = \arg\max_{s} f(s)$, s a successor state to the current state n • If $f(n) < f(s_{next})$ then move to $s_{next}$ • Otherwise halt at n Properties: • Terminates when a peak is reached. • Does not look ahead of the immediate neighbors of the current state. • Chooses randomly among the set of best successors, if there is more than one. • Doesn’t backtrack, since it doesn’t remember where it’s been a.k.a. -
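The hill-climbing loop described in the excerpt above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not code from the slides: the names `hill_climb`, `successors`, and `f`, as well as the toy objective, are hypothetical.

```python
import random

def hill_climb(start, successors, f, rng=random):
    """Steepest-ascent hill climbing: move to the best successor
    while it improves f, otherwise halt at the current state."""
    current = start
    while True:
        neighbors = successors(current)
        if not neighbors:
            return current
        best_value = max(f(s) for s in neighbors)
        # Halt at a peak (or plateau): no successor is strictly better.
        if best_value <= f(current):
            return current
        # Choose randomly among the set of best successors.
        best = [s for s in neighbors if f(s) == best_value]
        current = rng.choice(best)

# Toy problem (assumed for illustration): integers on a line,
# with a single peak at x = 3.
def f(x):
    return -(x - 3) ** 2

def successors(x):
    return [x - 1, x + 1]

print(hill_climb(0, successors, f))   # climbs 0 -> 1 -> 2 -> 3
print(hill_climb(10, successors, f))  # descends towards the peak at 3
```

Because the toy objective has one peak, both runs end at 3. On an objective with several peaks the same loop would stop at whichever local maximum it reaches first, since it keeps no memory of visited states and never backtracks.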

A. Local Beam Search with K=1. A. Local Beam Search with K = 1 Is Hill-Climbing Search

4. Give the name that results from each of the following special cases: a. Local beam search with k=1. a. Local beam search with k = 1 is hill-climbing search. b. Local beam search with one initial state and no limit on the number of states retained. b. Local beam search with k = ∞: strictly speaking, this doesn’t make sense. The idea is that if every successor is retained (because k is unbounded), then the search resembles breadth-first search in that it adds one complete layer of nodes before adding the next layer. Starting from one state, the algorithm would be essentially identical to breadth-first search except that each layer is generated all at once. c. Simulated annealing with T=0 at all times (and omitting the termination test). c. Simulated annealing with T = 0 at all times: ignoring the fact that the termination step would be triggered immediately, the search would be identical to first-choice hill climbing because every downward successor would be rejected with probability 1. d. Simulated annealing with T=infinity at all times. d. Simulated annealing with T = infinity at all times: ignoring the fact that the termination step would never be triggered, the search would be identical to a random walk because every successor would be accepted with probability 1. Note that, in this case, a random walk is approximately equivalent to depth-first search. e. Genetic algorithm with population size N=1. e. Genetic algorithm with population size N = 1: if the population size is 1, then the two selected parents will be the same individual; crossover yields an exact copy of the individual; then there is a small chance of mutation. -
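Cases c and d above follow directly from the usual simulated-annealing acceptance rule, where a worsening move with value change ΔE < 0 is accepted with probability exp(ΔE / T). The sketch below is a hedged illustration: the function name `accept_probability` is hypothetical, and returning 0 for non-improving moves at T = 0 is an assumption made to avoid dividing by zero.

```python
import math

def accept_probability(delta, temperature):
    """Probability of accepting a move whose value change is `delta`
    (positive = improvement) at the given temperature."""
    if delta > 0:
        return 1.0            # improving moves are always accepted
    if temperature == 0:
        return 0.0            # assumption: limit of exp(delta / T) as T -> 0
    # For T = infinity, delta / T is -0.0 and exp(-0.0) == 1.0.
    return math.exp(delta / temperature)

print(accept_probability(-5, 0))         # 0.0: downhill moves always rejected
print(accept_probability(-5, math.inf))  # 1.0: every move accepted (random walk)
print(accept_probability(-1, 1))         # exp(-1), strictly between 0 and 1
```

At T = 0 only improving successors are ever taken, which is the first-choice hill-climbing behaviour named in the answer; at T = ∞ every proposed successor is taken, giving a random walk.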

On the Design of (Meta)Heuristics

On the design of (meta)heuristics Tony Wauters CODeS Research group, KU Leuven, Belgium There are two types of people • Those who use heuristics • And those who don’t ☺ Tony Wauters, Department of Computer Science, CODeS research group Heuristics Origin: Ancient Greek: εὑρίσκω, "find" or "discover" Oxford Dictionary: Proceeding to a solution by trial and error or by rules that are only loosely defined. Properties of heuristics + Fast* + Scalable* + High quality solutions* + Solve any type of problem (complex constraints, non-linear, …) - Problem specific (but usually transferable to other problems) - Cannot guarantee optimality * if well designed Heuristic types • Constructive heuristics • Metaheuristics • Hybrid heuristics • Matheuristics: hybrid of Metaheuristics and Mathematical Programming • Other hybrids: Machine Learning, Constraint Programming,… • Hyperheuristics Constructive heuristics • Incrementally construct a solution from scratch. • Easy to understand and implement • Usually very fast • Reasonably good solutions Metaheuristics A Metaheuristic is a high-level problem independent algorithmic framework that provides a set of guidelines or strategies to develop heuristic optimization algorithms. Instead of reinventing the wheel when developing a heuristic -

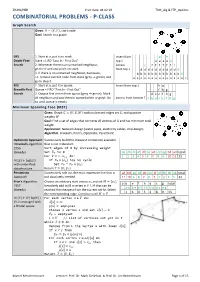

COMBINATORIAL PROBLEMS - P-CLASS Graph Search Given: G = (V, E), Start Node Goal: Search in a Graph

ZHAW/HSR Print date: 04.02.19 TSM_Alg & FTP_Optimiz COMBINATORIAL PROBLEMS - P-CLASS Graph Search Given: G = (V, E), start node Goal: Search in a graph DFS (Depth-First Search) Stack = LIFO "Last In - First Out" (insert from top, access from top) 1. Start at a, put it on stack. 2. Whenever there is an unmarked neighbour, go there and put it on stack. 3. If there is no unmarked neighbour, backtrack; i.e. remove current node from stack (grey ⇒ green) and go to step 2. [Figure: stack contents after each step for nodes a to i] BFS (Breadth-First Search) Queue = FIFO "First In - First Out" (insert from top, access from bottom) 1. Start at a, put it in queue. 2. Output first vertex from queue (grey ⇒ green). Mark all neighbors and put them in queue (white ⇒ grey). Do so until queue is empty. [Figure: queue contents after each step for nodes a to h] Minimum Spanning Tree (MST) Given: Graph G = (V, E, W) with undirected edges set E, with positive weights W Goal: Find a set of edges that connects all vertices of G and has minimum total weight. Application: Network design (water pipes, electricity cables, chip design) Algorithm: Kruskal's, Prim's, Optimistic, Pessimistic Optimistic Approach (= Kruskal's algorithm) Successively build the cheapest connection available that is not redundant. -

Job Shop Scheduling with Metaheuristics for Car Workshops

Job Shop Scheduling with Metaheuristics for Car Workshops Hein de Haan s2068125 Master Thesis Artificial Intelligence University of Groningen July 2016 First Supervisor Dr. Marco Wiering (Artificial Intelligence, University of Groningen) Second Supervisor Prof. Dr. Lambert Schomaker (Artificial Intelligence, University of Groningen) Abstract For this thesis, different algorithms were created to solve the problem of Job Shop Scheduling with a number of constraints. More specifically, the setting of a (simplified) car workshop was used: the algorithms had to assign all tasks of one day to the mechanics, taking into account minimum and maximum finish times of tasks and mechanics, the use of bridges, qualifications and the delivery and usage of car parts. The algorithms used in this research are Hill Climbing, a Genetic Algorithm with and without a Hill Climbing component, two different Particle Swarm Optimizers with and without Hill Climbing, Max-Min Ant System with and without Hill Climbing and Tabu Search. Two experiments were performed: one focussed on the speed of the algorithms, the other on their performance. The experiments point towards the Genetic Algorithm with Hill Climbing as the best algorithm for this problem. Contents 1 Introduction 1.1 Scheduling 1.2 Research Question 2 Theoretical Background of Job Shop Scheduling 2.1 Formal Description 2.2 Early Work 2.3 Current Algorithms 2.3.1 Simulated Annealing 2.3.2 Neural Networks 3 Methods 3.1 Introduction 3.2 Hill Climbing 3.2.1 Introduction 3.2.2 Hill Climbing for Job Shop Scheduling -

Lecture 7 of 41 AI Applications 1 of 3 and Heuristic Search by Iterative Improvement

Lecture 7 of 41 AI Applications 1 of 3 and Heuristic Search by Iterative Improvement Monday, 08 September 2003 William H. Hsu Department of Computing and Information Sciences, KSU http://www.kddresearch.org http://www.cis.ksu.edu/~bhsu Reading for Next Week: Handout, Chapter 4 Ginsberg U. of Alberta Computer Games page: http://www.cs.ualberta.ca/~games/ Chapter 6, Russell and Norvig 2e Kansas State University CIS 730: Introduction to Artificial Intelligence Department of Computing and Information Sciences Lecture Outline • Today’s Reading – Sections 4.3 – 4.5, Russell and Norvig – Recommended references: Chapter 4, Ginsberg; Chapter 3, Winston • Reading for Next Week: Chapter 6, Russell and Norvig • More Heuristic Search – Best-First Search: A/A* concluded – Iterative improvement • Hill-climbing • Simulated annealing (SA) – Search as function maximization • Problems: ridge; foothill; plateau, jump discontinuity • Solutions: macro operators; global optimization (genetic algorithms / SA) • Next Lecture: Constraint Satisfaction Search, Heuristics (Concluded) • Next Week: Adversarial Search (e.g., Game Tree Search) – Competitive problems – Minimax algorithm AI Applications [1]: Human-Computer Interaction (HCI) • Visualization: © 1999 Visible Decisions, Inc. (http://www.vdi.com) • Wearable Computers: © 2004 MIT Media Lab, http://www.media.mit.edu/wearables/ • Projection Keyboards: © 2003 Headmap Group, http://www.headmap.org/book/wearables/ • Verbmobil: © 2002 Institut für Neuroinformatik, http://snipurl.com/8uiu AI Applications [2]: Games • Human-Level AI in Games • Turn-Based: Empire, Strategic Conquest, Civilization, Empire Earth • “Real-Time” Strategy: Warcraft/Starcraft, TA, C&C, B&W, etc. -

Metaheuristics

DM865 – Spring 2020 Heuristics and Approximation Algorithms Metaheuristics Marco Chiarandini Department of Mathematics & Computer Science University of Southern Denmark Stochastic Local Search Simulated Annealing Iterated Local Search Tabu Search Variable Neighborhood Search Outline 1. Stochastic Local Search 2. Simulated Annealing 3. Iterated Local Search 4. Tabu Search 5. Variable Neighborhood Search Escaping Local Optima Possibilities: • Non-improving steps: in local optima, allow selection of candidate solutions with equal or worse evaluation function value, e.g., using minimally worsening steps. (Can lead to long walks in plateaus, i.e., regions of search positions with identical evaluation function.) • Diversify the neighborhood • Restart: re-initialize search whenever a local optimum is encountered. (Often rather ineffective due to cost of initialization.) Note: None of these mechanisms is guaranteed to always escape effectively from local optima. Diversification vs Intensification • Goal-directed and randomized components of LS strategy need to be balanced carefully. • Intensification: aims at greedily increasing solution quality, e.g., by exploiting the evaluation function. • Diversification: aims at preventing search stagnation, that is, the search process getting trapped in confined regions. Examples: • Iterative Improvement (II): intensification strategy. • Uninformed Random Walk/Picking (URW/P): diversification strategy. Balanced combination of intensification and diversification mechanisms forms the basis for advanced LS methods. Outline 1. Stochastic Local Search 2. Simulated Annealing 3. Iterated Local Search 4. -

Beam Search for Integer Multi-Objective Optimization

Beam Search for integer multi-objective optimization Thibaut Barthelemy Sophie N. Parragh Fabien Tricoire Richard F. Hartl University of Vienna, Department of Business Administration, Austria {thibaut.barthelemy,sophie.parragh,fabien.tricoire,richard.hartl}@univie.ac.at Abstract Beam search is a tree search procedure where, at each level of the tree, at most w nodes are kept. This results in a metaheuristic whose solving time is polynomial in w. Popular for single-objective problems, beam search has only received little attention in the context of multi-objective optimization. By introducing the concepts of oracle and filter, we define a paradigm to understand multi-objective beam search algorithms. Its theoretical analysis engenders practical guidelines for the design of these algorithms. The guidelines, suitable for any problem whose variables are integers, are applied to address a bi-objective 0-1 knapsack problem. The solver obtained outperforms the existing non-exact methods from the literature. 1 Introduction Everyday life decisions are often a matter of multi-objective optimization. Indeed, life is full of tradeoffs, as reflected for instance by discussions in parliaments or boards of directors. As a result, almost all cost-oriented problems of the classical literature give also rise to quality, durability, social or ecological concerns. Such goals are often conflicting. For instance, lower costs may lead to lower quality and vice versa. A generic approach to multi-objective optimization consists in computing a set of several good compromise solutions that decision makers discuss in order to choose one. Numerous methods have been developed to find the set of compromise solutions to multi-objective combinatorial problems.
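The basic idea stated in the abstract, keeping at most w nodes at each level of the tree, can be sketched for the simpler single-objective case. The code below is not the multi-objective method of the paper (it has no oracle or filter); the function `beam_search`, the toy 0-1 knapsack instance, and the helper names are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple

Node = Tuple[int, ...]  # one 0/1 decision per item considered so far

def beam_search(root: Node,
                expand: Callable[[Node], List[Node]],
                score: Callable[[Node], float],
                width: int,
                depth: int) -> Node:
    """Level-by-level tree search keeping at most `width` nodes per level."""
    beam = [root]
    for _ in range(depth):
        candidates = [child for node in beam for child in expand(node)]
        if not candidates:
            break
        # Keep only the `width` highest-scoring nodes of this level.
        beam = sorted(candidates, key=score, reverse=True)[:width]
    return max(beam, key=score)

# Toy single-objective 0-1 knapsack (hypothetical data): (value, weight).
ITEMS = [(6, 4), (5, 3), (5, 3)]
CAPACITY = 6

def value(node: Node) -> int:
    return sum(v for take, (v, _) in zip(node, ITEMS) if take)

def weight(node: Node) -> int:
    return sum(w for take, (_, w) in zip(node, ITEMS) if take)

def expand(node: Node) -> List[Node]:
    """Decide the next item: skip it (0) or take it (1) if it still fits."""
    if len(node) == len(ITEMS):
        return []
    children = [node + (0,), node + (1,)]
    return [c for c in children if weight(c) <= CAPACITY]

narrow = beam_search((), expand, value, width=1, depth=len(ITEMS))
wide = beam_search((), expand, value, width=2, depth=len(ITEMS))
print(narrow, value(narrow))  # (1, 0, 0) 6
print(wide, value(wide))      # (0, 1, 1) 10
```

With w = 1 the search behaves greedily and commits to the first item, ending with value 6; with w = 2 it retains the alternative branch and finds the better solution with value 10. Each level expands at most w nodes, which is why the running time grows polynomially in w.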