Fast and Efficient Black Box Optimization Using the Parameter-Less Population Pyramid



Recommended publications


AI-KI-B Heuristic Search

AI-KI-B Heuristic Search Ute Schmid & Diedrich Wolter Practice: Johannes Rabold Cognitive Systems and Smart Environments Applied Computer Science, University of Bamberg last change: 3 June 2019, 10:05 Outline Introduction of heuristic search algorithms, based on foundations of problem spaces and search • Uninformed Systematic Search • Depth-First Search (DFS) • Breadth-First Search (BFS) • Complexity of Blocks-World • Cost-based Optimal Search • Uniform Cost Search • Cost Estimation (Heuristic Function) • Heuristic Search Algorithms • Hill Climbing (Depth-First, Greedy) • Branch and Bound Algorithms (BFS-based) • Best First Search • A* • Designing Heuristic Functions • Problem Types Cost and Cost Estimation • "Real" cost is known for each operator. • Accumulated cost g(n) for a leaf node n on a partially expanded path can be calculated. • For problems where each operator has the same cost or where no information about costs is available, all operator applications have equal cost values. For cost values of 1, accumulated costs g(n) are equal to path-length d. • Sometimes available: Heuristics for estimating the remaining costs to reach the final state. • h^(n): estimated costs to reach a goal state from node n • "Bad" heuristics can misguide search! Cost and Cost Estimation cont. Evaluation Function: f^(n) = g(n) + h^(n) Cost and Cost Estimation cont. • "True costs" of an optimal path from an initial state s to a final state: f(s). • For a node n on this path, f can be decomposed into the already performed steps with cost g(n) and the yet to be performed steps with true cost h(n). • h^(n) can be an estimate which is greater or smaller than the true costs. -
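
The evaluation function f^(n) = g(n) + h^(n) from the excerpt above can be illustrated with a minimal A*-style sketch. The graph, the heuristic table `h`, and the function name `a_star` are hypothetical and chosen only for illustration; they do not come from the slides.

```python
import heapq

def a_star(graph, h, start, goal):
    """Return (cost, path) of a cheapest path found, or None if unreachable."""
    # Each frontier entry is (f, g, node, path) and is ordered by f = g + h.
    frontier = [(h[start], 0, start, [start])]
    best_g = {start: 0}  # cheapest accumulated cost g(n) seen so far per node
    while frontier:
        f, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return g, path
        if g > best_g.get(node, float("inf")):
            continue  # a cheaper route to this node was already expanded
        for succ, cost in graph.get(node, []):
            new_g = g + cost
            if new_g < best_g.get(succ, float("inf")):
                best_g[succ] = new_g
                heapq.heappush(frontier, (new_g + h[succ], new_g, succ, path + [succ]))
    return None

# Hypothetical example: edge lists with operator costs, plus estimates h^(n)
# that never exceed the true remaining cost.
graph = {"S": [("A", 1), ("B", 4)], "A": [("B", 2), ("G", 6)], "B": [("G", 2)], "G": []}
h = {"S": 4, "A": 3, "B": 2, "G": 0}
result = a_star(graph, h, "S", "G")
print(result)
```

In this toy graph the estimates never overestimate, so the sketch returns the cheapest route S, A, B, G with accumulated cost 5. An overestimating h^ could steer the search towards a more expensive path, which is the sense in which a bad heuristic can misguide search.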

CAP 4630 Artificial Intelligence

CAP 4630 Artificial Intelligence Instructor: Sam Ganzfried [email protected] 1 • http://www.ultimateaiclass.com/ • https://moodle.cis.fiu.edu/ • HW1 out 9/5 today, due 9/28 (maybe 10/3) – Remember that you have up to 4 late days to use throughout the semester. – https://www.cs.cmu.edu/~sganzfri/HW1_AI.pdf – http://ai.berkeley.edu/search.html • Office hours 2 • https://www.cs.cmu.edu/~sganzfri/Calendar_AI.docx • Midterm exam: on 10/19 • Final exam pushed back (likely on 12/12 instead of 12/5) • Extra lecture on NLP on 11/16 • Will likely only cover 1-1.5 lectures on logic and on planning • Only 4 homework assignments instead of 5 3 Heuristic functions 4 8-puzzle • The object of the puzzle is to slide the tiles horizontally or vertically into the empty space until the configuration matches the goal configuration. • The average solution cost for a randomly generated 8-puzzle instance is about 22 steps. The branching factor is about 3 (when the empty tile is in the middle, four moves are possible; when it is in a corner, two; and when it is along an edge, three). This means that an exhaustive tree search to depth 22 would look at about 3^22 = 3.1*10^10 states. A graph search would cut this down by a factor of about 170,000 because only 181,440 distinct states are reachable (homework exercise). This is a manageable number, but for a 15-puzzle, it would be 10^13, so we will need a good heuristic function. -
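
The state-count estimates quoted in the 8-puzzle excerpt above can be checked with a few lines of arithmetic. This is only a sanity check of the quoted numbers; the variable names are made up for the sketch, and it relies on the standard fact that exactly half of all tile permutations are reachable.

```python
from math import factorial

# Exhaustive tree search: branching factor of about 3, depth 22.
tree_states = 3 ** 22

# Reachable states: half of all arrangements of 9 (or 16) cells.
reachable_8 = factorial(9) // 2
reachable_15 = factorial(16) // 2

# Saving from graph search over tree search on the 8-puzzle.
reduction = tree_states / reachable_8

print(f"tree search states : {tree_states:.2e}")
print(f"8-puzzle reachable : {reachable_8}")
print(f"reduction factor   : {reduction:,.0f}")
print(f"15-puzzle reachable: {reachable_15:.2e}")
```

The reduction factor comes out slightly above 170,000, and the 15-puzzle count is on the order of 10^13, in line with the figures in the excerpt.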

Artificial Intelligence 1: Informed Search

Informed Search II 1. When A* fails – Hill climbing, simulated annealing 2. Genetic algorithms When A* doesn’t work AIMA 4.1 A few slides adapted from CS 471, UBMC and Eric Eaton (in turn, adapted from slides by Charles R. Dyer, University of Wisconsin-Madison) Outline Local Search: Hill Climbing Escaping Local Maxima: Simulated Annealing Genetic Algorithms CIS 391 - Intro to AI Local search and optimization Local search: • Use single current state and move to neighboring states. Idea: start with an initial guess at a solution and incrementally improve it until it is one Advantages: • Use very little memory • Often find reasonable solutions in large or infinite state spaces. Useful for pure optimization problems. • Find or approximate best state according to some objective function • Optimal if the space to be searched is convex Hill Climbing Hill climbing on a surface of states h(s): Estimate of distance from a peak (smaller is better) OR: Height Defined by Evaluation Function (greater is better) Hill-climbing search I. While (∃ uphill points): • Move in the direction of increasing evaluation function f II. Let s_next = arg max_s f(s), s a successor state to the current state n • If f(n) < f(s_next) then move to s_next • Otherwise halt at n Properties: • Terminates when a peak is reached. • Does not look ahead of the immediate neighbors of the current state. • Chooses randomly among the set of best successors, if there is more than one. • Doesn’t backtrack, since it doesn’t remember where it’s been a.k.a. -
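
The hill-climbing loop described in the excerpt above can be sketched as follows. The objective `f`, the neighbourhood function `successors`, and the integer state space are hypothetical, picked only to make the sketch runnable; they are not taken from the slides.

```python
import random

def hill_climb(start, successors, f, rng=random):
    """Move to a best successor while it improves f; halt at the first peak."""
    current = start
    while True:
        neighbours = successors(current)
        if not neighbours:
            return current
        best_value = max(f(s) for s in neighbours)
        if best_value <= f(current):
            return current  # no uphill successor: halt at the current state
        # Choose randomly among the best successors if there are several.
        current = rng.choice([s for s in neighbours if f(s) == best_value])

# Hypothetical one-dimensional landscape with a single peak at x = 3.
def f(x):
    return -(x - 3) ** 2

def successors(x):
    return [x - 1, x + 1]

peak = hill_climb(0, successors, f)
print(peak)
```

Because this landscape has only one peak, the sketch reaches x = 3 from any integer start. On a landscape with several peaks the same loop stops at whichever local maximum it climbs first, since it neither looks ahead nor backtracks.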

A. Local Beam Search with K=1. A. Local Beam Search with K = 1 Is Hill-Climbing Search

4. Give the name that results from each of the following special cases: a. Local beam search with k=1. a. Local beam search with k = 1 is hill-climbing search. b. Local beam search with one initial state and no limit on the number of states retained. b. Local beam search with k = ∞: strictly speaking, this doesn’t make sense. The idea is that if every successor is retained (because k is unbounded), then the search resembles breadth-first search in that it adds one complete layer of nodes before adding the next layer. Starting from one state, the algorithm would be essentially identical to breadth-first search except that each layer is generated all at once. c. Simulated annealing with T=0 at all times (and omitting the termination test). c. Simulated annealing with T = 0 at all times: ignoring the fact that the termination step would be triggered immediately, the search would be identical to first-choice hill climbing because every downward successor would be rejected with probability 1. d. Simulated annealing with T=infinity at all times. d. Simulated annealing with T = infinity at all times: ignoring the fact that the termination step would never be triggered, the search would be identical to a random walk because every successor would be accepted with probability 1. Note that, in this case, a random walk is approximately equivalent to depth-first search. e. Genetic algorithm with population size N=1. e. Genetic algorithm with population size N = 1: if the population size is 1, then the two selected parents will be the same individual; crossover yields an exact copy of the individual; then there is a small chance of mutation. -
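
The two simulated-annealing cases in the answers above follow from the usual acceptance rule, which accepts a worsening move with probability e^(ΔE/T). Below is a minimal sketch under assumptions: the function name `accept_probability` is hypothetical, the problem is treated as maximisation, and T = 0 is handled as the limiting case in which no non-improving move is accepted (a convention adopted for this sketch).

```python
import math

def accept_probability(delta, temperature):
    """Probability of accepting a move that changes the value by delta."""
    if delta > 0:
        return 1.0  # improving moves are always accepted
    if temperature == 0:
        return 0.0  # limit T -> 0: non-improving moves are rejected
    if math.isinf(temperature):
        return 1.0  # limit T -> infinity: every move is accepted
    return math.exp(delta / temperature)

for t in (0, 1.0, math.inf):
    print(t, accept_probability(-1.0, t))
```

At T = 0 the rule accepts only improving successors, which matches first-choice hill climbing. At T = infinity it accepts everything, which gives a random walk.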

On the Design of (Meta)Heuristics

On the design of (meta)heuristics Tony Wauters CODeS Research group, KU Leuven, Belgium There are two types of people • Those who use heuristics • And those who don’t ☺ Tony Wauters, Department of Computer Science, CODeS research group Heuristics Origin: Ancient Greek: εὑρίσκω, "find" or "discover" Oxford Dictionary: Proceeding to a solution by trial and error or by rules that are only loosely defined. Properties of heuristics + Fast* + Scalable* + High quality solutions* + Solve any type of problem (complex constraints, non-linear, …) - Problem specific (but usually transferable to other problems) - Cannot guarantee optimality * if well designed Heuristic types • Constructive heuristics • Metaheuristics • Hybrid heuristics • Matheuristics: hybrid of Metaheuristics and Mathematical Programming • Other hybrids: Machine Learning, Constraint Programming,… • Hyperheuristics Constructive heuristics • Incrementally construct a solution from scratch. • Easy to understand and implement • Usually very fast • Reasonably good solutions Metaheuristics A Metaheuristic is a high-level problem independent algorithmic framework that provides a set of guidelines or strategies to develop heuristic optimization algorithms. Instead of reinventing the wheel when developing a heuristic -

Job Shop Scheduling with Metaheuristics for Car Workshops

Job Shop Scheduling with Metaheuristics for Car Workshops Hein de Haan s2068125 Master Thesis Artificial Intelligence University of Groningen July 2016 First Supervisor Dr. Marco Wiering (Artificial Intelligence, University of Groningen) Second Supervisor Prof. Dr. Lambert Schomaker (Artificial Intelligence, University of Groningen) Abstract For this thesis, different algorithms were created to solve the problem of Job Shop Scheduling with a number of constraints. More specifically, the setting of a (simplified) car workshop was used: the algorithms had to assign all tasks of one day to the mechanics, taking into account minimum and maximum finish times of tasks and mechanics, the use of bridges, qualifications and the delivery and usage of car parts. The algorithms used in this research are Hill Climbing, a Genetic Algorithm with and without a Hill Climbing component, two different Particle Swarm Optimizers with and without Hill Climbing, Max-Min Ant System with and without Hill Climbing and Tabu Search. Two experiments were performed: one focussed on the speed of the algorithms, the other on their performance. The experiments point towards the Genetic Algorithm with Hill Climbing as the best algorithm for this problem. Contents 1 Introduction 1.1 Scheduling 1.2 Research Question 2 Theoretical Background of Job Shop Scheduling 2.1 Formal Description 2.2 Early Work 2.3 Current Algorithms 2.3.1 Simulated Annealing 2.3.2 Neural Networks 3 Methods 3.1 Introduction 3.2 Hill Climbing 3.2.1 Introduction 3.2.2 Hill Climbing for Job Shop Scheduling -

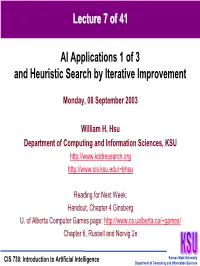

Lecture 7 of 41 AI Applications 1 of 3 and Heuristic Search by Iterative Improvement

Lecture 7 of 41 AI Applications 1 of 3 and Heuristic Search by Iterative Improvement Monday, 08 September 2003 William H. Hsu Department of Computing and Information Sciences, KSU http://www.kddresearch.org http://www.cis.ksu.edu/~bhsu Reading for Next Week: Handout, Chapter 4 Ginsberg U. of Alberta Computer Games page: http://www.cs.ualberta.ca/~games/ Chapter 6, Russell and Norvig 2e Kansas State University CIS 730: Introduction to Artificial Intelligence Department of Computing and Information Sciences Lecture Outline • Today’s Reading – Sections 4.3 – 4.5, Russell and Norvig – Recommended references: Chapter 4, Ginsberg; Chapter 3, Winston • Reading for Next Week: Chapter 6, Russell and Norvig • More Heuristic Search – Best-First Search: A/A* concluded – Iterative improvement • Hill-climbing • Simulated annealing (SA) – Search as function maximization • Problems: ridge; foothill; plateau, jump discontinuity • Solutions: macro operators; global optimization (genetic algorithms / SA) • Next Lecture: Constraint Satisfaction Search, Heuristics (Concluded) • Next Week: Adversarial Search (e.g., Game Tree Search) – Competitive problems – Minimax algorithm AI Applications [1]: Human-Computer Interaction (HCI) Visualization © 1999 Visible Decisions, Inc. (http://www.vdi.com) • Wearable Computers • © 2004 MIT Media Lab • http://www.media.mit.edu/wearables/ • Projection Keyboards • © 2003 Headmap Group • http://www.headmap.org/book/wearables/ Verbmobil © 2002 Institut für Neuroinformatik http://snipurl.com/8uiu AI Applications [2]: Games • Human-Level AI in Games • Turn-Based: Empire, Strategic Conquest, Civilization, Empire Earth • “Real-Time” Strategy: Warcraft/Starcraft, TA, C&C, B&W, etc. -

Metaheuristics

DM865 – Spring 2020 Heuristics and Approximation Algorithms Metaheuristics Marco Chiarandini Department of Mathematics & Computer Science University of Southern Denmark Stochastic Local Search Simulated Annealing Iterated Local Search Tabu Search Variable Neighborhood Search Outline 1. Stochastic Local Search 2. Simulated Annealing 3. Iterated Local Search 4. Tabu Search 5. Variable Neighborhood Search Escaping Local Optima Possibilities: • Non-improving steps: in local optima, allow selection of candidate solutions with equal or worse evaluation function value, e.g., using minimally worsening steps. (Can lead to long walks in plateaus, i.e., regions of search positions with identical evaluation function.) • Diversify the neighborhood • Restart: re-initialize search whenever a local optimum is encountered. (Often rather ineffective due to cost of initialization.) Note: None of these mechanisms is guaranteed to always escape effectively from local optima. Diversification vs Intensification • Goal-directed and randomized components of LS strategy need to be balanced carefully. • Intensification: aims at greedily increasing solution quality, e.g., by exploiting the evaluation function. • Diversification: aims at preventing search stagnation, that is, the search process getting trapped in confined regions. Examples: • Iterative Improvement (II): intensification strategy. • Uninformed Random Walk/Picking (URW/P): diversification strategy. Balanced combination of intensification and diversification mechanisms forms the basis for advanced LS methods. Outline 1. Stochastic Local Search 2. Simulated Annealing 3. Iterated Local Search 4. -

On the Time Complexity of Algorithm Selection Hyper-Heuristics for Multimodal Optimisation

The Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19) On the Time Complexity of Algorithm Selection Hyper-Heuristics for Multimodal Optimisation Andrei Lissovoi, Pietro S. Oliveto, John Alasdair Warwicker Rigorous Research, Department of Computer Science The University of Sheffield, Sheffield S1 4DP, United Kingdom {a.lissovoi,p.oliveto,j.warwicker}@sheffield.ac.uk Abstract Selection hyper-heuristics are automated algorithm selection methodologies that choose between different heuristics during the optimisation process. Recently selection hyper-heuristics choosing between a collection of elitist randomised local search heuristics with different neighbourhood sizes have been shown to optimise a standard unimodal benchmark function from evolutionary computation in the optimal expected runtime achievable with the available low-level heuristics. In this paper we extend our understanding to the domain of multimodal optimisation by considering a hyper-heuristic from the literature that can switch between elitist and non-elitist heuristics during the run. We first identify the range of parameters that allow the hyper-heuristic to hillclimb efficiently and prove that it can optimise a standard hillclimb- [...] of hard combinatorial optimisation problems (see (Burke et al. 2010; 2013) for surveys of results). Despite their numerous successes, very limited rigorous theoretical understanding of their behaviour and performance is available (Lehre and Özcan 2013; Alanazi and Lehre 2014). Recently, it has been proved that a simple selection hyper-heuristic called Random Descent (Cowling, Kendall, and Soubeiga 2001; 2002), choosing between elitist randomised local search (RLS) heuristics with different neighbourhood sizes, optimises a standard unimodal benchmark function from evolutionary computation called LEADINGONES in the best possible runtime (up to lower order terms) achievable with the available low-level heuristics (Lissovoi, Oliveto, and Warwicker 2018). -
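
To make the objects named in the excerpt above concrete, here is a minimal sketch of the LEADINGONES benchmark and of an elitist randomised local search with neighbourhood size k. It is not the hyper-heuristic analysed in the paper. The function names, the seed, the step limit, and the choice to flip k distinct uniformly chosen bits are assumptions made for illustration.

```python
import random

def leading_ones(bits):
    """Number of consecutive 1-bits at the start of the bit string."""
    count = 0
    for b in bits:
        if b != 1:
            break
        count += 1
    return count

def rls(n, k=1, seed=0, max_steps=100_000):
    """Elitist RLS sketch: flip k distinct bits, keep the result if not worse."""
    rng = random.Random(seed)
    current = [rng.randint(0, 1) for _ in range(n)]
    steps = 0
    while leading_ones(current) < n and steps < max_steps:
        candidate = current[:]
        for i in rng.sample(range(n), k):
            candidate[i] ^= 1  # flip the chosen bit
        if leading_ones(candidate) >= leading_ones(current):
            current = candidate  # elitism: never accept a worse candidate
        steps += 1
    return current, steps

solution, steps = rls(n=20, k=1)
print(leading_ones(solution), steps)
```

Larger values of k give bigger neighbourhoods. A selection hyper-heuristic of the kind the abstract describes chooses among such low-level heuristics while the run is in progress.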

Boosting Hill-Climbing Through Online Relaxation Refinement

Proceedings of the Twenty-Seventh International Conference on Automated Planning and Scheduling (ICAPS 2017) Complete Local Search: Boosting Hill-Climbing through Online Relaxation Refinement Maximilian Fickert, Jörg Hoffmann Saarland Informatics Campus Saarland University Saarbrücken, Germany {fickert,hoffmann}@cs.uni-saarland.de Abstract Several known heuristic functions can capture the input at different levels of precision, and support relaxation-refinement operations guaranteeing to converge to exact information in a finite number of steps. A natural idea is to use such refinement online, during search, yet this has barely been addressed. We do so here for local search, where relaxation refinement is particularly appealing: escape local minima not by search, but by removing them from the search surface. Thanks to convergence, such an escape is always possible. We design a family of hill-climbing algorithms along these lines. We show that these are complete, even when using helpful actions pruning. Using them with the partial delete relaxation heuristic hCFF, the best-performing variant out- [...] online only. Online relaxation refinement appears to be the right answer, but has barely been addressed. Seipp (2012) touches Cartesian-abstraction online refinement, yet only briefly at the end of his M.Sc. thesis (with disappointing results). Steinmetz and Hoffmann (2017) refine a critical-path dead-end detector instead of a distance estimator. Wilt and Ruml’s (2013) bidirectional search uses the backwards part as a growing perimeter to improve the forward-part heuristic function, which can be viewed as a form of relaxation refinement. Certainly, this is not all there is to be done. That said, online relaxation refinement is one form of online heuristic-function learning, which has been explored in some depth for different forms of learning. -

Iterative Improvement Search Hill Climbing, Simulated Annealing, WALKSAT, and Genetic Algorithms Andrew W

Iterative Improvement Search Hill Climbing, Simulated Annealing, WALKSAT, and Genetic Algorithms Andrew W. Moore Professor School of Computer Science Carnegie Mellon University www.cs.cmu.edu/~awm [email protected] 412-268-7599 Note to other teachers and users of these slides. Andrew would be delighted if you found this source material useful in giving your own lectures. Feel free to use these slides verbatim, or to modify them to fit your own needs. PowerPoint originals are available. If you make use of a significant portion of these slides in your own lecture, please include this message, or the following link to the source repository of Andrew’s tutorials: http://www.cs.cmu.edu/~awm/tutorials . Comments and corrections gratefully received. Example Problems Channel Routing Lots of Chip Real-estate Same connectivity, much less space Example Problems Also: parking lot layout, product design, aerodynamic design, “Million Queens” problem, radiotherapy treatment planning, … Informal characterization These are problems in which… • There is some combinatorial structure being optimized. • There is a cost function: Structure → Real, to be optimized, or at least a reasonable solution is to be found. • (So basic CSP methods only solve part of the problem … they satisfy constraints but don’t look for optimal constraint-satisfier.) • Searching all possible structures is intractable. • Depth first search approaches are too expensive. • There’s no known algorithm for finding the optimal solution efficiently. • Very informally, similar solutions have similar costs. Iterative Improvement Intuition: consider the configurations to be laid out on the surface of a landscape. -

IJIR Paper Template

International Journal of Research e-ISSN: 2348-6848 p-ISSN: 2348-795X Available at https://journals.pen2print.org/index.php/ijr/ Volume 06 Issue 10 September 2019 Study of Informed Searching Algorithm for Finding the Shortest Path Wint Aye Khaing1, Kyi Zar Nyunt2, Thida Win2, Ei Ei Moe3 1Faculty of Information Science, University of Computer Studies (Taungoo), Myanmar 2 Faculty of Information Science, University of Computer Studies (Taungoo), Myanmar 3 Faculty of Computer Science, University of Computer Studies (Taungoo), Myanmar [email protected],[email protected] [email protected], [email protected], [email protected], [email protected] Abstract: The technological revolution plays an active role in the growth of computer information, in the computational capabilities of devices, and in raising the level of knowledge, abilities, and skills, and it drives developments in science and technology. In city traffic, finding the shortest path in a road network is very difficult. Shortest path searching is very important in special cases such as medical emergencies, spying, theft catching, fire brigades, etc. This paper uses shortest path algorithms for solving the shortest path [...] frequently deal with this question when planning trips with their cars. There are also many applications, like logistic planning or traffic simulation, that need to solve a huge number of such route queries. Shortest path computation can be either inconvenient for the client, if he has to wait for the response, or expensive for the service provider, if he has to make a lot of computing power available. An algorithm is a procedure or formula for solving a problem. It usually means a small procedure that solves a recurrent problem. 2.