AI-KI-B Heuristic Search


Recommended publications


National Aeronautics and Space Administration, Langley Research Center, Hampton, Virginia 23665

https://ntrs.nasa.gov/search.jsp?R=19830020606 2020-03-21T01:58:58+00:00Z
NASA Technical Memorandum 83216 (NASA-TM-83216, 19830020606)
DECISION-MAKING AND PROBLEM SOLVING METHODS IN AUTOMATION TECHNOLOGY
W. W. Hankins, J. E. Pennington, and L. K. Barker
May 1983
National Aeronautics and Space Administration, Langley Research Center, Hampton, Virginia 23665

TABLE OF CONTENTS
SUMMARY
INTRODUCTION
DETECTION AND RECOGNITION: Types of Sensors Computer Vision CONSIGHT-1 System SRI Vision Module Touch and Contact Sensors
PLANNING AND SCHEDULING: Operations Research Methods Linear Systems Nonlinear Systems Network Models Queueing Theory Dynamic Programming Simulation Artificial Intelligence (AI) Methods Nets of Action Hierarchies (NOAH) System Monitoring Resource Allocation Robotics
LEARNING: Expert Systems Learning Concept and Linguistic Constructions Through English Dialogue Natural Language Understanding Robot Learning The Computer as an Intelligent Partner Learning Control
THEOREM PROVING: Logical Inference Disproof by Counterexample Proof by Contradiction Resolution Principle Heuristic Methods Nonstandard Techniques Mathematical Induction Analogies Question-Answering Systems Man-Machine Systems

CAP 4630 Artificial Intelligence

CAP 4630 Artificial Intelligence
Instructor: Sam Ganzfried

• http://www.ultimateaiclass.com/
• https://moodle.cis.fiu.edu/
• HW1 out 9/5 today, due 9/28 (maybe 10/3)
  – Remember that you have up to 4 late days to use throughout the semester.
  – https://www.cs.cmu.edu/~sganzfri/HW1_AI.pdf
  – http://ai.berkeley.edu/search.html
• Office hours

• https://www.cs.cmu.edu/~sganzfri/Calendar_AI.docx
• Midterm exam: on 10/19
• Final exam pushed back (likely on 12/12 instead of 12/5)
• Extra lecture on NLP on 11/16
• Will likely only cover 1-1.5 lectures on logic and on planning
• Only 4 homework assignments instead of 5

Heuristic functions

8-puzzle
• The object of the puzzle is to slide the tiles horizontally or vertically into the empty space until the configuration matches the goal configuration.
• The average solution cost for a randomly generated 8-puzzle instance is about 22 steps. The branching factor is about 3 (when the empty tile is in the middle, four moves are possible; when it is in a corner, two; and when it is along an edge, three). This means that an exhaustive tree search to depth 22 would look at about 3^22 ≈ 3.1 × 10^10 states. A graph search would cut this down by a factor of about 170,000 because only 181,440 distinct states are reachable (homework exercise). This is a manageable number, but for a 15-puzzle, it would be 10^13, so we will need a good heuristic function.
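The figures quoted in the 8-puzzle excerpt can be checked with a few lines of arithmetic. The sketch below is illustrative only: all function names are hypothetical, and the Manhattan-distance heuristic is a commonly used 8-puzzle heuristic chosen here as an assumption, since the excerpt ends before naming one.

```python
from math import factorial


def tree_search_states(branching_factor: int, depth: int) -> int:
    """Rough count of states an exhaustive tree search visits at a given depth."""
    return branching_factor ** depth


def reachable_8_puzzle_states() -> int:
    """Half of the 9! tile arrangements are reachable from any given state."""
    return factorial(9) // 2


def manhattan_distance(state, goal) -> int:
    """Sum of horizontal and vertical distances of tiles 1..8 from their goal cells.

    States are tuples of 9 entries read row by row; 0 marks the empty space.
    (Assumed encoding, not taken from the excerpt.)
    """
    total = 0
    for tile in range(1, 9):
        i, j = state.index(tile), goal.index(tile)
        total += abs(i // 3 - j // 3) + abs(i % 3 - j % 3)
    return total


tree = tree_search_states(3, 22)        # about 3.1 * 10^10
graph = reachable_8_puzzle_states()     # 181,440
print(tree, graph, tree // graph)       # the ratio is roughly 170,000
```

A heuristic such as `manhattan_distance` never overestimates the number of moves still needed, because each move shifts one tile by one cell.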

2011-2012 ABET Self-Study Questionnaire for Engineering

ABET SELF-STUDY REPORT for Computer Engineering
July 1, 2012
CONFIDENTIAL
The information supplied in this Self-Study Report is for the confidential use of ABET and its authorized agents, and will not be disclosed without authorization of the institution concerned, except for summary data not identifiable to a specific institution.

Table of Contents
BACKGROUND INFORMATION
A. Contact Information
B. Program History
C. Options
D. Organizational Structure
E. Program Delivery Modes
F. Program Locations
G. Deficiencies, Weaknesses or Concerns from Previous Evaluation(s)
H. Joint Accreditation
CRITERION 1. STUDENTS
A. Student Admissions

Fast and Efficient Black Box Optimization Using the Parameter-Less Population Pyramid

Fast and Efficient Black Box Optimization Using the Parameter-less Population Pyramid
B. W. Goldman, Department of Computer Science and Engineering, Michigan State University, East Lansing, 48823, USA
W. F. Punch, Department of Computer Science and Engineering, Michigan State University, East Lansing, 48823, USA
doi:10.1162/EVCO_a_00148

Abstract
The parameter-less population pyramid (P3) is a recently introduced method for performing evolutionary optimization without requiring any user-specified parameters. P3’s primary innovation is to replace the generational model with a pyramid of multiple populations that are iteratively created and expanded. In combination with local search and advanced crossover, P3 scales to problem difficulty, exploiting previously learned information before adding more diversity. Across seven problems, each tested using on average 18 problem sizes, P3 outperformed all five advanced comparison algorithms. This improvement includes requiring fewer evaluations to find the global optimum and better fitness when using the same number of evaluations. Using both algorithm analysis and comparison, we find P3’s effectiveness is due to its ability to properly maintain, add, and exploit diversity. Unlike the best comparison algorithms, P3 was able to achieve this quality without any problem-specific tuning. Thus, unlike previous parameter-less methods, P3 does not sacrifice quality for applicability. Therefore we conclude that P3 is an efficient, general, parameter-less approach to black box optimization which is more effective than existing state-of-the-art techniques.

Keywords
Genetic algorithms, linkage learning, local search, parameter-less.

1 Introduction
A primary purpose of evolutionary optimization is to efficiently find good solutions to challenging real-world problems with minimal prior knowledge about the problem itself.

Search Algorithms ◦ Breadth-First Search (BFS) ◦ Uniform-Cost Search ◦ Depth-First Search (DFS) ◦ Depth-Limited Search ◦ Iterative Deepening Best-First Search

9-04-2013

Uninformed (blind) search algorithms
◦ Breadth-First Search (BFS)
◦ Uniform-Cost Search
◦ Depth-First Search (DFS)
◦ Depth-Limited Search
◦ Iterative Deepening
Best-First Search

HW#1 due today
HW#2 due Monday, 9/09/13, in class
Continue reading Chapter 3

Formulate, Search, Execute
1. Goal formulation
2. Problem formulation
3. Search algorithm
4. Execution

A problem is defined by four items:
1. initial state
2. actions or successor function
3. goal test (explicit or implicit)
4. path cost (∑ c(x,a,y) – sum of step costs)
A solution is a sequence of actions leading from the initial state to a goal state.

Search algorithms have the following basic form:
do until terminating condition is met
    if no more nodes to consider then return fail;
    select node; {choose a node (leaf) on the tree}
    if chosen node is a goal then return success;
    expand node; {generate successors & add to tree}

Analysis
◦ b = branching factor
◦ d = depth
◦ m = maximum depth

g(n) = the total cost of the path on the search tree from the root node to node n
h(n) = the straight line distance from n to G

n      S     A     B     C     G
h(n)   5.8   3     2.2   2     0

Uninformed search strategies use only the information available in the problem definition
◦ Breadth-first search
◦ Uniform-cost search
◦ Depth-first search
◦ Depth-limited search
◦ Iterative deepening search

• Expand shallowest unexpanded node
• Implementation:
  – fringe is a FIFO queue, i.e., new successors go at end

idea: order the branches under each node so that the “most promising” ones are explored first
g(n) is the total cost
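The basic search loop in the excerpt (select a node, test it for the goal, expand it) can be sketched as a single function in which only the "select node" step changes between strategies. This is a minimal sketch under stated assumptions: the function names are hypothetical, and the small graph over the nodes S, A, B, C, G is invented for illustration, since the slides' actual graph is not part of the excerpt. The graph is acyclic because plain tree search does not detect repeated states.

```python
from collections import deque


def tree_search(start, is_goal, successors, select):
    """Generic tree search; `select` removes and returns one fringe entry."""
    fringe = deque([[start]])              # each entry is a path from the root
    while fringe:                          # no more nodes to consider: fail
        path = select(fringe)              # select node
        node = path[-1]
        if is_goal(node):                  # goal test on the chosen node
            return path
        for child in successors(node):     # expand node
            fringe.append(path + [child])  # new successors go at the end
    return None


def breadth_first(fringe):
    """FIFO queue: take the oldest (shallowest) entry."""
    return fringe.popleft()


def depth_first(fringe):
    """LIFO stack: take the newest (deepest) entry."""
    return fringe.pop()


# Hypothetical acyclic graph, for illustration only.
GRAPH = {"S": ["A", "B"], "A": ["C"], "B": ["C"], "C": ["G"], "G": []}

bfs_path = tree_search("S", lambda n: n == "G", GRAPH.__getitem__, breadth_first)
dfs_path = tree_search("S", lambda n: n == "G", GRAPH.__getitem__, depth_first)
print(bfs_path, dfs_path)
```

Swapping the `select` function for one that picks the entry with the lowest g(n) would give uniform-cost search under the same loop.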

Artificial Intelligence 1: Informed Search

Informed Search II
1. When A* fails – Hill climbing, simulated annealing
2. Genetic algorithms

When A* doesn’t work
AIMA 4.1
A few slides adapted from CS 471, UBMC and Eric Eaton (in turn, adapted from slides by Charles R. Dyer, University of Wisconsin-Madison)

Outline
Local Search: Hill Climbing
Escaping Local Maxima: Simulated Annealing
Genetic Algorithms
CIS 391 - Intro to AI

Local search and optimization
Local search:
• Use single current state and move to neighboring states.
Idea: start with an initial guess at a solution and incrementally improve it until it is one
Advantages:
• Use very little memory
• Often find reasonable solutions in large or infinite state spaces.
Useful for pure optimization problems.
• Find or approximate best state according to some objective function
• Optimal if the space to be searched is convex

Hill Climbing
Hill climbing on a surface of states
h(s): Estimate of distance from a peak (smaller is better)
OR: Height Defined by Evaluation Function (greater is better)

Hill-climbing search
I. While (∃ uphill points):
• Move in the direction of increasing evaluation function f
II. Let $s_{\text{next}} = \arg\max_{s} f(s)$, where s ranges over the successor states of the current state n
• If $f(n) < f(s_{\text{next}})$ then move to $s_{\text{next}}$
• Otherwise halt at n
Properties:
• Terminates when a peak is reached.
• Does not look ahead of the immediate neighbors of the current state.
• Chooses randomly among the set of best successors, if there is more than one.
• Doesn’t backtrack, since it doesn’t remember where it’s been
a.k.a.
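The hill-climbing rule in the excerpt (move to the best successor only if it is strictly better, otherwise halt) can be sketched as follows. The function name, the one-dimensional landscape, and the neighbor function are all assumptions made for illustration; they do not come from the slides.

```python
import random


def hill_climb(start, successors, f, rng=random):
    """Steepest-ascent hill climbing: returns the state where the climb halts."""
    current = start
    while True:
        neighbors = list(successors(current))
        if not neighbors:
            return current
        best_value = max(f(s) for s in neighbors)
        if best_value <= f(current):       # no uphill point: halt at current
            return current
        # Choose randomly among the best successors if there are several.
        best = [s for s in neighbors if f(s) == best_value]
        current = rng.choice(best)


# Hypothetical landscape: states are indices, f is the height at that index.
HEIGHTS = [1, 3, 2, 5, 9, 4]


def height(i):
    return HEIGHTS[i]


def adjacent(i):
    return [j for j in (i - 1, i + 1) if 0 <= j < len(HEIGHTS)]


print(hill_climb(0, adjacent, height))  # halts on the local peak at index 1
print(hill_climb(2, adjacent, height))  # reaches the global peak at index 4
```

Starting from index 0 the climb stops at a local maximum, which is the situation the outline's "Escaping Local Maxima" item refers to.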

A. Local Beam Search with K=1. A. Local Beam Search with K = 1 Is Hill-Climbing Search

4. Give the name that results from each of the following special cases:

a. Local beam search with k=1.
Answer: Local beam search with k = 1 is hill-climbing search.

b. Local beam search with one initial state and no limit on the number of states retained.
Answer: Local beam search with k = ∞: strictly speaking, this doesn’t make sense. The idea is that if every successor is retained (because k is unbounded), then the search resembles breadth-first search in that it adds one complete layer of nodes before adding the next layer. Starting from one state, the algorithm would be essentially identical to breadth-first search except that each layer is generated all at once.

c. Simulated annealing with T=0 at all times (and omitting the termination test).
Answer: Simulated annealing with T = 0 at all times: ignoring the fact that the termination step would be triggered immediately, the search would be identical to first-choice hill climbing because every downward successor would be rejected with probability 1.

d. Simulated annealing with T=infinity at all times.
Answer: Simulated annealing with T = infinity at all times: ignoring the fact that the termination step would never be triggered, the search would be identical to a random walk because every successor would be accepted with probability 1. Note that, in this case, a random walk is approximately equivalent to depth-first search.

e. Genetic algorithm with population size N=1.
Answer: Genetic algorithm with population size N = 1: if the population size is 1, then the two selected parents will be the same individual; crossover yields an exact copy of the individual; then there is a small chance of mutation.
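Answers (c) and (d) both follow from the acceptance rule of simulated annealing. The sketch below assumes the usual textbook rule, in which an improving move is always accepted and a worsening move with value change `delta < 0` is accepted with probability exp(delta / T). The function name is hypothetical, and returning 0 at T = 0 is a stated convention that takes the limit of the formula rather than dividing by zero.

```python
import math


def acceptance_probability(delta: float, temperature: float) -> float:
    """Probability of accepting a move whose value changes by `delta`."""
    if delta > 0:
        return 1.0                 # improving moves are always accepted
    if temperature == 0:
        return 0.0                 # limit T -> 0: non-improving moves rejected
    return math.exp(delta / temperature)


print(acceptance_probability(-5.0, 0))         # T = 0: behaves like hill climbing
print(acceptance_probability(-5.0, math.inf))  # T = infinity: random walk
print(acceptance_probability(-1.0, 1.0))       # in between: sometimes accepted
```

At T = 0 only improving moves survive, and at T = infinity every move is accepted, which matches the two special cases named in the answers.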

On the Design of (Meta)Heuristics

On the design of (meta)heuristics
Tony Wauters, CODeS Research group, KU Leuven, Belgium

There are two types of people
• Those who use heuristics
• And those who don’t ☺
Tony Wauters, Department of Computer Science, CODeS research group

Heuristics
Origin: Ancient Greek: εὑρίσκω, "find" or "discover"
Oxford Dictionary: Proceeding to a solution by trial and error or by rules that are only loosely defined.

Properties of heuristics
+ Fast*
+ Scalable*
+ High quality solutions*
+ Solve any type of problem (complex constraints, non-linear, …)
- Problem specific (but usually transferable to other problems)
- Cannot guarantee optimality
* if well designed

Heuristic types
• Constructive heuristics
• Metaheuristics
• Hybrid heuristics
  • Matheuristics: hybrid of Metaheuristics and Mathematical Programming
  • Other hybrids: Machine Learning, Constraint Programming,…
• Hyperheuristics

Constructive heuristics
• Incrementally construct a solution from scratch.
• Easy to understand and implement
• Usually very fast
• Reasonably good solutions

Metaheuristics
A Metaheuristic is a high-level problem independent algorithmic framework that provides a set of guidelines or strategies to develop heuristic optimization algorithms. Instead of reinventing the wheel when developing a heuristic

Job Shop Scheduling with Metaheuristics for Car Workshops

Job Shop Scheduling with Metaheuristics for Car Workshops
Hein de Haan, s2068125
Master Thesis Artificial Intelligence, University of Groningen, July 2016
First Supervisor: Dr. Marco Wiering (Artificial Intelligence, University of Groningen)
Second Supervisor: Prof. Dr. Lambert Schomaker (Artificial Intelligence, University of Groningen)

Abstract
For this thesis, different algorithms were created to solve the problem of Job Shop Scheduling with a number of constraints. More specifically, the setting of a (simplified) car workshop was used: the algorithms had to assign all tasks of one day to the mechanics, taking into account minimum and maximum finish times of tasks and mechanics, the use of bridges, qualifications and the delivery and usage of car parts. The algorithms used in this research are Hill Climbing, a Genetic Algorithm with and without a Hill Climbing component, two different Particle Swarm Optimizers with and without Hill Climbing, Max-Min Ant System with and without Hill Climbing and Tabu Search. Two experiments were performed: one focussed on the speed of the algorithms, the other on their performance. The experiments point towards the Genetic Algorithm with Hill Climbing as the best algorithm for this problem.

Contents
1 Introduction
  1.1 Scheduling
  1.2 Research Question
2 Theoretical Background of Job Shop Scheduling
  2.1 Formal Description
  2.2 Early Work
  2.3 Current Algorithms
    2.3.1 Simulated Annealing
    2.3.2 Neural Networks
3 Methods
  3.1 Introduction
  3.2 Hill Climbing
    3.2.1 Introduction
    3.2.2 Hill Climbing for Job Shop Scheduling

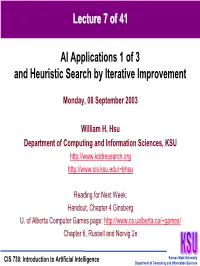

Lecture 7 of 41 AI Applications 1 of 3 and Heuristic Search by Iterative Improvement

Lecture 7 of 41
AI Applications 1 of 3 and Heuristic Search by Iterative Improvement
Monday, 08 September 2003
William H. Hsu, Department of Computing and Information Sciences, KSU
http://www.kddresearch.org
http://www.cis.ksu.edu/~bhsu
Reading for Next Week: Handout, Chapter 4 Ginsberg; U. of Alberta Computer Games page: http://www.cs.ualberta.ca/~games/; Chapter 6, Russell and Norvig 2e
Kansas State University, CIS 730: Introduction to Artificial Intelligence, Department of Computing and Information Sciences

Lecture Outline
• Today’s Reading
  – Sections 4.3 – 4.5, Russell and Norvig
  – Recommended references: Chapter 4, Ginsberg; Chapter 3, Winston
• Reading for Next Week: Chapter 6, Russell and Norvig
• More Heuristic Search
  – Best-First Search: A/A* concluded
  – Iterative improvement
    • Hill-climbing
    • Simulated annealing (SA)
  – Search as function maximization
    • Problems: ridge; foothill; plateau, jump discontinuity
    • Solutions: macro operators; global optimization (genetic algorithms / SA)
• Next Lecture: Constraint Satisfaction Search, Heuristics (Concluded)
• Next Week: Adversarial Search (e.g., Game Tree Search)
  – Competitive problems
  – Minimax algorithm

AI Applications [1]: Human-Computer Interaction (HCI)
• Visualization: © 1999 Visible Decisions, Inc. (http://www.vdi.com)
• Wearable Computers: © 2004 MIT Media Lab, http://www.media.mit.edu/wearables/
• Projection Keyboards: © 2003 Headmap Group, http://www.headmap.org/book/wearables/
• Verbmobil: © 2002 Institut für Neuroinformatik, http://snipurl.com/8uiu

AI Applications [2]: Games
• Human-Level AI in Games
• Turn-Based: Empire, Strategic Conquest, Civilization, Empire Earth
• “Real-Time” Strategy: Warcraft/Starcraft, TA, C&C, B&W, etc.

Lecture Notes

Lecture Notes
Ganesh Ramakrishnan
August 15, 2007

Contents
1 Search and Optimization
  1.1 Search
    1.1.1 Depth First Search
    1.1.2 Breadth First Search (BFS)
    1.1.3 Hill Climbing
    1.1.4 Beam Search
  1.2 Optimal Search
    1.2.1 Branch and Bound
    1.2.2 A∗ Search
  1.3 Constraint Satisfaction
    1.3.1 Algorithms for map coloring
    1.3.2 Resource Scheduling Problem

Chapter 1 Search and Optimization

Consider the following problem:

Cab Driver Problem: A cab driver needs to find his way in a city from one point (A) to another (B). Some routes are blocked in gray (probably because they are under construction). The task is to find the path(s) from (A) to (B). Figure 1.1 shows an instance of the problem.

Figure 1.2 shows the map of the United States. Suppose you are set the following task:

Map coloring problem: Color the map such that states that share a boundary are colored differently.

A simple-minded approach to solving this problem is to keep trying color combinations, facing dead ends, backtracking, etc. The program could run for a very long time (estimated to be a lower bound of 10^10 years) before it finds a suitable coloring. On the other hand, we could try another approach which will perform the task much faster and which we will discuss subsequently. The second problem is actually isomorphic to the first problem and to problems of resource allocation in general.
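The simple-minded approach described above (try a color, hit a dead end, backtrack) can be sketched on a small map. This is an illustration under stated assumptions: the function name is hypothetical, and the map is a hand-picked subset of seven western US states with the borders among them, not the full map of Figure 1.2. The faster approach that the notes promise is not shown, because the excerpt does not describe it.

```python
def color_map(neighbors, colors):
    """Chronological backtracking: returns {region: color} or None if impossible."""
    regions = list(neighbors)
    assignment = {}

    def backtrack(i):
        if i == len(regions):
            return True
        region = regions[i]
        for color in colors:
            # A color is allowed if no already-colored neighbor uses it.
            if all(assignment.get(n) != color for n in neighbors[region]):
                assignment[region] = color
                if backtrack(i + 1):
                    return True
                del assignment[region]     # dead end: undo and try the next color
        return False

    return assignment if backtrack(0) else None


# Borders among seven western states (a subset chosen for illustration).
BORDERS = [("WA", "OR"), ("WA", "ID"), ("OR", "ID"), ("OR", "CA"), ("OR", "NV"),
           ("CA", "NV"), ("CA", "AZ"), ("NV", "ID"), ("NV", "UT"), ("NV", "AZ"),
           ("ID", "UT"), ("UT", "AZ")]
NEIGHBORS = {}
for a, b in BORDERS:
    NEIGHBORS.setdefault(a, set()).add(b)
    NEIGHBORS.setdefault(b, set()).add(a)

coloring = color_map(NEIGHBORS, ["red", "green", "blue", "yellow"])
print(coloring)
```

On this subset three colors are not enough, because Nevada is surrounded by a ring of five states that already needs three colors, so `color_map` returns `None` when given only three.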

Metaheuristics

DM865 – Spring 2020
Heuristics and Approximation Algorithms
Metaheuristics
Marco Chiarandini, Department of Mathematics & Computer Science, University of Southern Denmark

Outline
1. Stochastic Local Search
2. Simulated Annealing
3. Iterated Local Search
4. Tabu Search
5. Variable Neighborhood Search

Escaping Local Optima
Possibilities:
• Non-improving steps: in local optima, allow selection of candidate solutions with equal or worse evaluation function value, e.g., using minimally worsening steps. (Can lead to long walks in plateaus, i.e., regions of search positions with identical evaluation function.)
• Diversify the neighborhood
• Restart: re-initialize search whenever a local optimum is encountered. (Often rather ineffective due to cost of initialization.)
Note: None of these mechanisms is guaranteed to always escape effectively from local optima.

Diversification vs Intensification
• Goal-directed and randomized components of LS strategy need to be balanced carefully.
• Intensification: aims at greedily increasing solution quality, e.g., by exploiting the evaluation function.
• Diversification: aims at preventing search stagnation, that is, the search process getting trapped in confined regions.
Examples:
• Iterative Improvement (II): intensification strategy.
• Uninformed Random Walk/Picking (URW/P): diversification strategy.
Balanced combination of intensification and diversification mechanisms forms the basis for advanced LS methods.
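The restart mechanism listed above can be sketched by wrapping iterative improvement in an outer loop over starting points. This is a minimal sketch with assumptions stated plainly: the function names and the tiny cost landscape are invented, the evaluation function is minimized, and the start states are passed in explicitly so the run is reproducible, whereas a real implementation would typically draw them at random.

```python
def iterative_improvement(start, neighbors, cost):
    """Intensification: move to the best strictly better neighbor until none exists."""
    current = start
    while True:
        better = [s for s in neighbors(current) if cost(s) < cost(current)]
        if not better:
            return current                 # local optimum reached
        current = min(better, key=cost)


def restart_search(starts, neighbors, cost):
    """Diversification by restart: rerun the local search from each start state."""
    best = None
    for start in starts:
        candidate = iterative_improvement(start, neighbors, cost)
        if best is None or cost(candidate) < cost(best):
            best = candidate
    return best


# Hypothetical landscape: states are indices, COSTS[i] is the evaluation value.
COSTS = [4, 2, 5, 3, 0, 6]


def line_neighbors(i):
    return [j for j in (i - 1, i + 1) if 0 <= j < len(COSTS)]


def line_cost(i):
    return COSTS[i]


print(iterative_improvement(0, line_neighbors, line_cost))       # stuck at index 1
print(restart_search(range(len(COSTS)), line_neighbors, line_cost))  # finds index 4
```

Each restart repeats the full descent, which illustrates the cost of initialization that the excerpt mentions.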