Spacejmp: Programming with Multiple Virtual Address Spaces

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Virtual Memory

Chapter 4 Virtual Memory Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 232 − 1or0xffffffff. On a 64-bit platform, such as IA-64, this is 264 − 1or0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge ad- dress space, there are really three distinct, but equally important, reasons for using virtual memory. 1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory). 2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another pro- cess and, e.g., steal a password. 3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process trig- gering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down. -

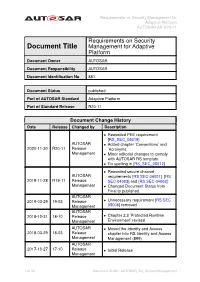

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11 Requirements on Security Document Title Management for Adaptive Platform Document Owner AUTOSAR Document Responsibility AUTOSAR Document Identification No 881 Document Status published Part of AUTOSAR Standard Adaptive Platform Part of Standard Release R20-11 Document Change History Date Release Changed by Description • Reworded PEE requirement [RS_SEC_05019] AUTOSAR • Added chapter ’Conventions’ and 2020-11-30 R20-11 Release ’Acronyms’ Management • Minor editorial changes to comply with AUTOSAR RS template • Fix spelling in [RS_SEC_05012] • Reworded secure channel AUTOSAR requirements [RS SEC 04001], [RS 2019-11-28 R19-11 Release SEC 04003] and [RS SEC 04003] Management • Changed Document Status from Final to published AUTOSAR 2019-03-29 19-03 Release • Unnecessary requirement [RS SEC Management 05006] removed AUTOSAR 2018-10-31 18-10 Release • Chapter 2.3 ’Protected Runtime Management Environment’ revised AUTOSAR • Moved the Identity and Access 2018-03-29 18-03 Release chapter into RS Identity and Access Management Management (899) AUTOSAR 2017-10-27 17-10 Release • Initial Release Management 1 of 32 Document ID 881: AUTOSAR_RS_SecurityManagement Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11 Disclaimer This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intel- lectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights. -

微软郑宇:大数据解决城市大问题 18 更智能的家庭 19 微博拾粹 Weibo Highlights

2015年1月 第33期 P7 WWT: 数字宇宙,虚拟星空 P16 P12 带着微软坐过山车 P4 P10 1 微软亚洲研究院 2015年1月 第33期 以“开放”和“极客创新”精神铸造一个“新”微软 3 第十六届“二十一世纪的计算”学术研讨会成功举办 4 微软与清华大学联合举办2014年微软亚太教育峰会 5 你还记得一夜暴红的机器人小冰吗? 7 Peter Lee:带着微软坐过山车 10 计算机科学家周以真专访 12 WWT:数字宇宙,虚拟星空 16 微软郑宇:大数据解决城市大问题 18 更智能的家庭 19 微博拾粹 Weibo Highlights @微软亚洲研究院 官方微博精彩选摘 20 让虚拟世界更真实 22 4K时代,你不能不知道的HEVC 25 小冰的三项绝技是如何炼成的? 26 科研伴我成长——上海交通大学ACM班学生在微软亚洲研究院的幸福实习生活 28 年轻的心与渐行渐近的梦——记微软-斯坦福产品设计创新课程ME310 30 如何写好简历,找到心仪的暑期实习 32 微软研究员Eric Horvitz解读“人工智能百年研究” 33 2 以“开放”和“极客创新”精神 铸造一个“新”微软 爆竹声声辞旧岁,喜气洋“羊”迎新年。转眼间,这一 年的时光即将伴着哒哒的马蹄声奔驰而去。在这辞旧迎新的 时刻,我们怀揣着对技术改变未来的美好憧憬,与所有为推 动互联网不断发展、为人类科技进步不断奋斗的科技爱好者 一起,敲响新年的钟声。挥别2014,一起期盼那充满希望 的新一年! 即将辞行的是很不平凡的一年。这一年的互联网风起云 涌,你方唱罢我登场,比起往日的峥嵘有过之而无不及:可 穿戴设备和智能家居成为众人争抢的高地;人工智能在逐渐 触手可及的同时又引起争议不断;一轮又一轮创业大潮此起 彼伏,不断有优秀的新公司和新青年展露头角。这一年的微 软也在众人的关注下发生着翻天覆地的变化:第三任CEO Satya Nadella屡新,确定以移动业务和云业务为中心 的公司战略,以开放的姿态拥抱移动互联的新时代,发布新一代Windows 10操作系统……于我个人而言,这一 年,就任微软亚太研发集团主席开启了我20年微软旅程上的新篇章。虽然肩上的担子更重了,但心中的激情却 丝毫未减。 “开放”和“极客创新”精神是我们在Satya时代看到微软文化的一个演进。“开放”是指不固守陈规,不囿 于往日的羁绊,用户在哪里需要我们,微软的服务就去哪里。推出Office for iOS/Android,开源.Net都是“开放” 的“新”微软对用户做出的承诺。关于“极客创新”,大家可能都已经听说过“车库(Garage)”这个词了,当年比 尔∙盖茨最早就是在车库里开始创业的。2014年,微软在全球范围内举办了“极客马拉松大赛(Hackthon)”,并鼓 励员工在业余时间加入“微软车库”项目,将自己的想法变为现实。这一系列的举措都是希望激发微软员工的“极 客创新”精神,我们也欣喜地看到变化正在微软内部潜移默化地进行着。“微软车库”目前已经公布了一部分有趣 的项目,我相信,未来还会有更多让人眼前一亮的创新。 2015年,我希望微软亚洲研究院和微软亚太研发集团的同事能够保持和发扬“开放”和“极客创新”的精 神。我们一起铸造一个“新”微软,为用户创造更多的惊喜! 微软亚洲研究院院长 3 第十六届“二十一世纪的计算”学术研讨会成功举办 2014年10月29日由微软亚洲研究院与北京大学联合主办的“二 形图像等领域的突破:微软语音助手小娜的背后,蕴藏着一系列人 十一世纪的计算”大型学术研讨会,在北京大学举行。包括有“计 工智能技术;大数据和机器学习技术帮助我们更好地认识城市和生 算机科学领域的诺贝尔奖”之称的图灵奖获得者、来自微软及学术 -

Common Object File Format (COFF)

Application Report SPRAAO8–April 2009 Common Object File Format ..................................................................................................................................................... ABSTRACT The assembler and link step create object files in common object file format (COFF). COFF is an implementation of an object file format of the same name that was developed by AT&T for use on UNIX-based systems. This format encourages modular programming and provides powerful and flexible methods for managing code segments and target system memory. This appendix contains technical details about the Texas Instruments COFF object file structure. Much of this information pertains to the symbolic debugging information that is produced by the C compiler. The purpose of this application note is to provide supplementary information on the internal format of COFF object files. Topic .................................................................................................. Page 1 COFF File Structure .................................................................... 2 2 File Header Structure .................................................................. 4 3 Optional File Header Format ........................................................ 5 4 Section Header Structure............................................................. 5 5 Structuring Relocation Information ............................................... 7 6 Symbol Table Structure and Content........................................... 11 SPRAAO8–April 2009 -

Operating System Support for Redundant Multithreading

Operating System Support for Redundant Multithreading Dissertation zur Erlangung des akademischen Grades Doktoringenieur (Dr.-Ing.) Vorgelegt an der Technischen Universität Dresden Fakultät Informatik Eingereicht von Dipl.-Inf. Björn Döbel geboren am 17. Dezember 1980 in Lauchhammer Betreuender Hochschullehrer: Prof. Dr. Hermann Härtig Technische Universität Dresden Gutachter: Prof. Frank Mueller, Ph.D. North Carolina State University Fachreferent: Prof. Dr. Christof Fetzer Technische Universität Dresden Statusvortrag: 29.02.2012 Eingereicht am: 21.08.2014 Verteidigt am: 25.11.2014 FÜR JAKOB *† 15. Februar 2013 Contents 1 Introduction 7 1.1 Hardware meets Soft Errors 8 1.2 An Operating System for Tolerating Soft Errors 9 1.3 Whom can you Rely on? 12 2 Why Do Transistors Fail And What Can Be Done About It? 15 2.1 Hardware Faults at the Transistor Level 15 2.2 Faults, Errors, and Failures – A Taxonomy 18 2.3 Manifestation of Hardware Faults 20 2.4 Existing Approaches to Tolerating Faults 25 2.5 Thesis Goals and Design Decisions 36 3 Redundant Multithreading as an Operating System Service 39 3.1 Architectural Overview 39 3.2 Process Replication 41 3.3 Tracking Externalization Events 42 3.4 Handling Replica System Calls 45 3.5 Managing Replica Memory 49 3.6 Managing Memory Shared with External Applications 57 3.7 Hardware-Induced Non-Determinism 63 3.8 Error Detection and Recovery 65 4 Can We Put the Concurrency Back Into Redundant Multithreading? 71 4.1 What is the Problem with Multithreaded Replication? 71 4.2 Can we make Multithreading -

High Velocity Kernel File Systems with Bento

High Velocity Kernel File Systems with Bento Samantha Miller, Kaiyuan Zhang, Mengqi Chen, and Ryan Jennings, University of Washington; Ang Chen, Rice University; Danyang Zhuo, Duke University; Thomas Anderson, University of Washington https://www.usenix.org/conference/fast21/presentation/miller This paper is included in the Proceedings of the 19th USENIX Conference on File and Storage Technologies. February 23–25, 2021 978-1-939133-20-5 Open access to the Proceedings of the 19th USENIX Conference on File and Storage Technologies is sponsored by USENIX. High Velocity Kernel File Systems with Bento Samantha Miller Kaiyuan Zhang Mengqi Chen Ryan Jennings Ang Chen‡ Danyang Zhuo† Thomas Anderson University of Washington †Duke University ‡Rice University Abstract kernel-level debuggers and kernel testing frameworks makes this worse. The restricted and different kernel programming High development velocity is critical for modern systems. environment also limits the number of trained developers. This is especially true for Linux file systems which are seeing Finally, upgrading a kernel module requires either rebooting increased pressure from new storage devices and new demands the machine or restarting the relevant module, either way on storage systems. However, high velocity Linux kernel rendering the machine unavailable during the upgrade. In the development is challenging due to the ease of introducing cloud setting, this forces kernel upgrades to be batched to meet bugs, the difficulty of testing and debugging, and the lack of cloud-level availability goals. support for redeployment without service disruption. Existing Slow development cycles are a particular problem for file approaches to high-velocity development of file systems for systems. -

Virtual Memory - Paging

Virtual memory - Paging Johan Montelius KTH 2020 1 / 32 The process code heap (.text) data stack kernel 0x00000000 0xC0000000 0xffffffff Memory layout for a 32-bit Linux process 2 / 32 Segments - a could be solution Processes in virtual space Address translation by MMU (base and bounds) Physical memory 3 / 32 one problem Physical memory External fragmentation: free areas of free space that is hard to utilize. Solution: allocate larger segments ... internal fragmentation. 4 / 32 another problem virtual space used code We’re reserving physical memory that is not used. physical memory not used? 5 / 32 Let’s try again It’s easier to handle fixed size memory blocks. Can we map a process virtual space to a set of equal size blocks? An address is interpreted as a virtual page number (VPN) and an offset. 6 / 32 Remember the segmented MMU MMU exception no virtual addr. offset yes // < within bounds index + physical address segment table 7 / 32 The paging MMU MMU exception virtual addr. offset // VPN available ? + physical address page table 8 / 32 the MMU exception exception virtual address within bounds page available Segmentation Paging linear address physical address 9 / 32 a note on the x86 architecture The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use use segmentation to separate code, data nor stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation. -

Virtual Memory in X86

Fall 2017 :: CSE 306 Virtual Memory in x86 Nima Honarmand Fall 2017 :: CSE 306 x86 Processor Modes • Real mode – walks and talks like a really old x86 chip • State at boot • 20-bit address space, direct physical memory access • 1 MB of usable memory • No paging • No user mode; processor has only one protection level • Protected mode – Standard 32-bit x86 mode • Combination of segmentation and paging • Privilege levels (separate user and kernel) • 32-bit virtual address • 32-bit physical address • 36-bit if Physical Address Extension (PAE) feature enabled Fall 2017 :: CSE 306 x86 Processor Modes • Long mode – 64-bit mode (aka amd64, x86_64, etc.) • Very similar to 32-bit mode (protected mode), but bigger address space • 48-bit virtual address space • 52-bit physical address space • Restricted segmentation use • Even more obscure modes we won’t discuss today xv6 uses protected mode w/o PAE (i.e., 32-bit virtual and physical addresses) Fall 2017 :: CSE 306 Virt. & Phys. Addr. Spaces in x86 Processor • Both RAM hand hardware devices (disk, Core NIC, etc.) connected to system bus • Mapped to different parts of the physical Virtual Addr address space by the BIOS MMU Data • You can talk to a device by performing Physical Addr read/write operations on its physical addresses Cache • Devices are free to interpret reads/writes in any way they want (driver knows) System Interconnect (Bus) : all addrs virtual DRAM Network … Disk (Memory) Card : all addrs physical Fall 2017 :: CSE 306 Virt-to-Phys Translation in x86 0xdeadbeef Segmentation 0x0eadbeef Paging 0x6eadbeef Virtual Address Linear Address Physical Address Protected/Long mode only • Segmentation cannot be disabled! • But can be made a no-op (a.k.a. -

Address Translation

CS 152 Computer Architecture and Engineering Lecture 8 - Address Translation John Wawrzynek Electrical Engineering and Computer Sciences University of California at Berkeley http://www.eecs.berkeley.edu/~johnw http://inst.eecs.berkeley.edu/~cs152 9/27/2016 CS152, Fall 2016 CS152 Administrivia § Lab 2 due Friday § PS 2 due Tuesday § Quiz 2 next Thursday! 9/27/2016 CS152, Fall 2016 2 Last time in Lecture 7 § 3 C’s of cache misses – Compulsory, Capacity, Conflict § Write policies – Write back, write-through, write-allocate, no write allocate § Multi-level cache hierarchies reduce miss penalty – 3 levels common in modern systems (some have 4!) – Can change design tradeoffs of L1 cache if known to have L2 § Prefetching: retrieve memory data before CPU request – Prefetching can waste bandwidth and cause cache pollution – Software vs hardware prefetching § Software memory hierarchy optimizations – Loop interchange, loop fusion, cache tiling 9/27/2016 CS152, Fall 2016 3 Bare Machine Physical Physical Address Inst. Address Data Decode PC Cache D E + M Cache W Physical Memory Controller Physical Address Address Physical Address Main Memory (DRAM) § In a bare machine, the only kind of address is a physical address 9/27/2016 CS152, Fall 2016 4 Absolute Addresses EDSAC, early 50’s § Only one program ran at a time, with unrestricted access to entire machine (RAM + I/O devices) § Addresses in a program depended upon where the program was to be loaded in memory § But it was more convenient for programmers to write location-independent subroutines How -

Lecture 12: Virtual Memory Finale and Interrupts/Exceptions 1 Review And

EE360N: Computer Architecture Lecture #12 Department of Electical and Computer Engineering The University of Texas at Austin October 9, 2008 Disclaimer: The contents of this document are scribe notes for The University of Texas at Austin EE360N Fall 2008, Computer Architecture.∗ The notes capture the class discussion and may contain erroneous and unverified information and comments. Lecture 12: Virtual Memory Finale and Interrupts/Exceptions Lecture #12: October 8, 2008 Lecturer: Derek Chiou Scribe: Michael Sullivan and Peter Tran Lecture 12 of EE360N: Computer Architecture summarizes the significance of virtual memory and introduces the concept of interrupts and exceptions. As such, this document is divided into two sections. Section 1 details the key points from the discussion in class on virtual memory. Section 2 goes on to introduce interrupts and exceptions, and shows why they are necessary. 1 Review and Summation of Virtual Memory The beginning of this lecture wraps up the review of virtual memory. A broad summary of virtual memory systems is given first, in Subsection 1.1. Next, the individual components of the hardware and OS that are required for virtual memory support are covered in 1.2. Finally, the addressing of caches and the interface between the cache and virtual memory are described in 1.3. 1.1 Summary of Virtual Memory Systems Virtual memory automatically provides the illusion of a large, private, uniform storage space for each process, even on a limited amount of physically addressed memory. The virtual memory space available to every process looks the same every time the process is run, irrespective of the amount of memory available, or the program’s actual placement in main memory. -

Virtual Memory

CSE 410: Systems Programming Virtual Memory Ethan Blanton Department of Computer Science and Engineering University at Buffalo Introduction Address Spaces Paging Summary References Virtual Memory Virtual memory is a mechanism by which a system divorces the address space in programs from the physical layout of memory. Virtual addresses are locations in program address space. Physical addresses are locations in actual hardware RAM. With virtual memory, the two need not be equal. © 2018 Ethan Blanton / CSE 410: Systems Programming Introduction Address Spaces Paging Summary References Process Layout As previously discussed: Every process has unmapped memory near NULL Processes may have access to the entire address space Each process is denied access to the memory used by other processes Some of these statements seem contradictory. Virtual memory is the mechanism by which this is accomplished. Every address in a process’s address space is a virtual address. © 2018 Ethan Blanton / CSE 410: Systems Programming Introduction Address Spaces Paging Summary References Physical Layout The physical layout of hardware RAM may vary significantly from machine to machine or platform to platform. Sometimes certain locations are restricted Devices may appear in the memory address space Different amounts of RAM may be present Historically, programs were aware of these restrictions. Today, virtual memory hides these details. The kernel must still be aware of physical layout. © 2018 Ethan Blanton / CSE 410: Systems Programming Introduction Address Spaces Paging Summary References The Memory Management Unit The Memory Management Unit (MMU) translates addresses. It uses a per-process mapping structure to transform virtual addresses into physical addresses. The MMU is physical hardware between the CPU and the memory bus. -

Building a Better Mousetrap

ISN_FINAL (DO NOT DELETE) 10/24/2013 6:04 PM SUPER-INTERMEDIARIES, CODE, HUMAN RIGHTS IRA STEVEN NATHENSON* Abstract We live in an age of intermediated network communications. Although the internet includes many intermediaries, some stand heads and shoulders above the rest. This article examines some of the responsibilities of “Super-Intermediaries” such as YouTube, Twitter, and Facebook, intermediaries that have tremendous power over their users’ human rights. After considering the controversy arising from the incendiary YouTube video Innocence of Muslims, the article suggests that Super-Intermediaries face a difficult and likely impossible mission of fully servicing the broad tapestry of human rights contained in the International Bill of Human Rights. The article further considers how intermediary content-control pro- cedures focus too heavily on intellectual property, and are poorly suited to balancing the broader and often-conflicting set of values embodied in human rights law. Finally, the article examines a num- ber of steps that Super-Intermediaries might take to resolve difficult content problems and ultimately suggests that intermediaries sub- scribe to a set of process-based guiding principles—a form of Digital Due Process—so that intermediaries can better foster human dignity. * Associate Professor of Law, St. Thomas University School of Law, inathen- [email protected]. I would like to thank the editors of the Intercultural Human Rights Law Review, particularly Amber Bounelis and Tina Marie Trunzo Lute, as well as symposium editor Lauren Smith. Additional thanks are due to Daniel Joyce and Molly Land for their comments at the symposium. This article also benefitted greatly from suggestions made by the participants of the 2013 Third Annual Internet Works-in-Progress conference, including Derek Bambauer, Marc Blitz, Anupam Chander, Chloe Georas, Andrew Gilden, Eric Goldman, Dan Hunter, Fred von Lohmann, Mark Lemley, Rebecca Tushnet, and Peter Yu.