Training Question Answering Models from Synthetic Data

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Zatanna and the House of Secrets Graphic Novels for Kids & Teens 741.5 DC’s New Youth Movement Spring 2020 - No

MEANWHILE ZATANNA AND THE HOUSE OF SECRETS GRAPHIC NOVELS FOR KIDS & TEENS 741.5 DC’S NEW YOUTH MOVEMENT SPRING 2020 - NO. 40 PLUS...KIRBY AND KURTZMAN Moa Romanova Romanova Caspar Wijngaard’s Mary Safro’s Ben Passmore’s Passmore David Lapham Masters Rick Marron Burchett Greg Rucka. Pat Morisi Morisi Kieron Gillen The Comics & Graphic Novel Bulletin of

DC Comics is in peril. Again. The ever-struggling publisher has put its eggs in so many baskets, from Grant Morrison to the “New 52” to the recent attempts to get hip with Brian Michael Bendis, there’s hardly a one left uncracked. The first time DC tried to hitch its wagon to someone else’s star was the early 1970s. Jack “the King” Kirby was largely responsible for the success of hated rival Marvel. He was lured to join DC with promises of greater freedom and authority. Kirby was tired of being treated like a hired hand. He wanted to be the idea man, the boss who would come up with characters and concepts, then pass them

satirical than the romantic visions of Williamson and Wood. Harvey’s sci-fi stories often centered on an ordinary shmoe caught up in extraordinary circumstances, from the titular tale of a science nerd and his musclehead brother to “The Man Who Raced Time” to William of “The Dimension Translator” (below). Other tales involved arrogant know-it-alls who learn they ain’t so smart after all. The star of “Television Terror”, the vicious despot in “The Radioactive Child” and the title character of “Atom Bomb Thief” (right) all pay the price for their hubris. -

The Hell Scene and Theatricality in Man and Superman

The Amphitheatre and Ann’s Theatre: The Hell Scene and Theatricality in Man and Superman Yumiko Isobe Synopsis: Although George Bernard Shaw’s Man and Superman (1903) is one of his most important dramatic works, it has seldom been staged. This is because, in terms of content and direction, there are technical issues that make it difficult to stage. In particular, Act Three, which features a dream in Hell where four dead characters hold a lengthy philosophical symposium, has been considered independent of the main plot of John Tanner and Ann Whitefield. Tanner’s dream emphatically asserts its theatricality by the scenery, the amphitheatre in the Spanish desert where Tanner dozes off. The scene’s geography, which has not received adequate attention, indicates the significance of the dream as a theatre for the protagonist’s destiny with Ann. At the same time, it suggests that the whole plot stands out as a play by Ann, to which Tanner is a “subject.” The comedy thus succeeds in expressing the playwright’s philosophy of the Superman through the play-within-a-play and metatheatre. Introduction Hell in the third act of Man and Superman: A Comedy and a Philosophy (1903) by George Bernard Shaw turns into a philosophical symposium, as the subtitle of the play suggests, and this intermediate part is responsible for the difficulties associated with the staging of the play. The scene, called “the Hell scene” or “Don Juan in Hell,” has been cut out of performances of the whole play, and even performed separately; however, the interlude by itself has attracted considerable attention, not only from stage managers and actors, but also from critics, provoking a range of multi-layered examinations. -

Super Satan: Milton’S Devil in Contemporary Comics

Super Satan: Milton’s Devil in Contemporary Comics By Shereen Siwpersad A Thesis Submitted to Leiden University, Leiden, the Netherlands in Partial Fulfillment of the Requirements for the Degree of MA English Literary Studies July, 2014, Leiden, the Netherlands First Reader: Dr. J.F.D. van Dijkhuizen Second Reader: Dr. E.J. van Leeuwen Date: 1 July 2014 Table of Contents: Introduction; 1. Milton’s Satan as the modern superhero in comics; 1.1 The conventions of mission, powers and identity; 1.2 The history of the modern superhero; 1.3 Religion and the Miltonic Satan in comics; 1.4 Mission, powers and identity in Steve Orlando’s Paradise Lost; 1.5 Authority, defiance and the Miltonic Satan in comics; 1.6 The human Satan in comics; 2. Ambiguous representations of Milton’s Satan in Steve Orlando’s Paradise Lost; 2.1 Visual representations of the heroic Satan; 2.2 Symbolic colors and black gutters; 2.3 Orlando’s representation of the meteor simile; 2.4 Ambiguous linguistic representations of Satan; 2.5 Ambiguity and discrepancy between linguistic and visual codes; 3. Lucifer Morningstar: Obedience, authority and nihilism; 3.1 Lucifer’s rejection of authority; 3.2 The absence of a theodicy; 3.3 Carey’s flawed and amoral God; 3.4 The implications of existential and metaphysical nihilism; Conclusion; Appendix; Figure 1.1; Figure 1.2; Figure 1.3; Figure 1.4 -

Black Label (blæk 'leibl): 1. An Edgy, Provocative New Imprint Featuring Standalone Stories from Comics’ Premier Storytellers

Black Label (blæk 'leibl): 1. An edgy, provocative new imprint featuring standalone stories from comics’ premier storytellers. 2. A state of mind achieved through an understanding of the forefront of fashion, design, entertainment and style. “An amazing showcase for Lee Bermejo, proving once again that no one can render Batman or Gotham City quite like him.” —IGN “The story promises to be grand and cinematic, and Bermejo’s painterly, detailed art rarely disappoints.” —Paste Magazine “Well-written, gorgeously drawn, and dark as hell.” —Geek Dad The Joker is dead… Did Batman kill him? He will go to hell and back to find out. Batman: Damned is a visceral thrill ride and supernatural horror story told by two of comics’ greatest modern creators: Brian Azzarello and Lee Bermejo. The Joker has been murdered. His killer’s identity is a mystery. Batman is the World’s Greatest Detective. But what happens when the person he is searching for is the man staring back at him in the mirror? With no memory of what happened on the night of the murder, Batman is going to need some help. Who better to set him straight than John Constantine? The problem is that as much as John loves a good mystery, he loves messing with people’s heads even more. With John’s “help,” the pair will delve into the sordid underbelly of Gotham as they race toward the mind-blowing truth of who murdered The Joker. Batman: Damned | Brian Azzarello | Lee Bermejo | 9781401291402 | HC | $29.99 / $39.99 CAN | DC Black Label | September 10, 2019 About the author: Brian Azzarello has been writing comics professionally since the mid-1990s. About the illustrator: Lee Bermejo began drawing comics in 1997 -

DC Comics Jumpchain CYOA

DC Comics Jumpchain CYOA CYOA written by [text removed] [text removed] [text removed] cause I didn’t lol The lists of superpowers and weaknesses are taken from the DC Wiki, and have been reproduced here for ease of access. Some entries have been removed, added, or modified to better fit this format. The DC universe is a long and storied one, in more ways than one. It’s a universe filled with adventure around every corner, not least among them on Earth, an unassuming but cosmically significant planet out of the way of most space territories. Heroes and villains, from the bottom of the Dark Multiverse to the top of the Monitor Sphere, endlessly struggle for justice, for power, and for control over the fate of the very multiverse itself. You start with 1000 Cape Points (CP). Discounted options are 50% off. Discounts only apply once per purchase. Free options are not mandatory. Continuity === === === === === Continuity doesn't change during your time here, since each continuity has a past and a future unconnected to the Crises. If you're in Post-Crisis you'll blow right through 2011 instead of seeing Flashpoint. This changes if you take the relevant scenarios. You can choose your starting date. Early Golden Age (eGA) Default Start Date: 1939 The original timeline, the one where it all began. Superman can leap tall buildings in a single bound, while other characters like Batman, Dr. Occult, and Sandman have just debuted in their respective cities. This continuity occurred in the late 1930s, and takes place in a single universe. -

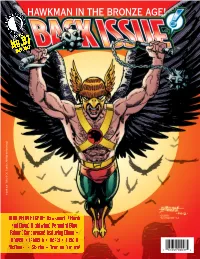

Hawkman in the Bronze Age!

HAWKMAN IN THE BRONZE AGE! July 2017 No.97 ™ $8.95 Hawkman TM & © DC Comics. All Rights Reserved. BIRD PEOPLE ISSUE: Hawkworld! Hawk and Dove! Nightwing! Penguin! Blue Falcon! Condorman! featuring Dixon • Howell • Isabella • Kesel • Liefeld McDaniel • Starlin • Truman & more! 1 82658 00097 4 Volume 1, Number 97 July 2017 EDITOR-IN-CHIEF Michael Eury PUBLISHER John Morrow Comics’ Bronze Age and Beyond! DESIGNER Rich Fowlks COVER ARTIST George Pérez (Commissioned illustration from the collection of Aric Shapiro.) COVER COLORIST Glenn Whitmore COVER DESIGNER Michael Kronenberg PROOFREADER Rob Smentek SPECIAL THANKS Alter Ego Karl Kesel Jim Amash Rob Liefeld Mike Baron Tom Lyle Alan Brennert Andy Mangels Marc Buxton Scott McDaniel John Byrne Dan Mishkin BACK SEAT DRIVER: Editorial by Michael Eury ............................2 Oswald Cobblepot Graham Nolan Greg Crosby Dennis O’Neil FLASHBACK: Hawkman in the Bronze Age ...............................3 DC Comics John Ostrander Joel Davidson George Pérez From guest-shots to a Shadow War, the Winged Wonder’s ’70s and ’80s appearances Teresa R. Davidson Todd Reis Chuck Dixon Bob Rozakis ONE-HIT WONDERS: DC Comics Presents #37: Hawkgirl’s First Solo Flight .......21 Justin Francoeur Brenda Rubin A gander at the Superman/Hawkgirl team-up by Jim Starlin and Roy Thomas (DCinthe80s.com) Bart Sears José Luís García-López Aric Shapiro Hawkman TM & © DC Comics. Joe Giella Steve Skeates PRO2PRO ROUNDTABLE: Exploring Hawkworld ...........................23 Mike Gold Anthony Snyder The post-Crisis version of Hawkman, with Timothy Truman, Mike Gold, John Ostrander, and Grand Comics Jim Starlin Graham Nolan Database Bryan D. Stroud Alan Grant Roy Thomas Robert Greenberger Steven Thompson BRING ON THE BAD GUYS: The Penguin, Gotham’s Gentleman of Crime .......31 Mike Grell Titans Tower Numerous creators survey the history of the Man of a Thousand Umbrellas Greg Guler (titanstower.com) Jack C. -

Invited Review

INVITED REVIEW Presolar grains from meteorites: Remnants from the early times of the solar system Katharina Lodders a,* and Sachiko Amari b a Planetary Chemistry Laboratory, Department of Earth and Planetary Sciences and McDonnell Center for the Space Sciences, Washington University, Campus Box 1169, One Brookings Drive, St. Louis, MO 63130, USA b Department of Physics and McDonnell Center for the Space Sciences, Washington University, Campus Box 1105, One Brookings Drive, St. Louis, MO 63130, USA Received 5 October 2004; accepted 4 January 2005 Abstract This review provides an introduction to presolar grains – preserved stardust from the interstellar molecular cloud from which our solar system formed – found in primitive meteorites. We describe the search for the presolar components, the currently known presolar mineral populations, and the chemical and isotopic characteristics of the grains and dust-forming stars to identify the grains’ most probable stellar sources. Keywords: Presolar grains; Interstellar dust; Asymptotic giant branch (AGB) stars; Novae; Supernovae; Nucleosynthesis; Isotopic ratios; Meteorites 1. Introduction The history of our solar system started with the gravitational collapse of an interstellar molecular cloud laden with gas and dust supplied from dying stars. The dust from this cloud is the topic of this review. A small fraction of this dust escaped destruction during the many processes that occurred after molecular cloud collapse about 4.55 Ga ago. We define presolar grains as stardust that formed in stellar outflows or ejecta and remained intact throughout its journey into the solar system where it was preserved in meteorites. The survival and presence of genuine stardust in meteorites was not expected in the early years of meteorite studies. -

Justice League: Origins

JUSTICE LEAGUE: ORIGINS Written by Chad Handley [email protected] EXT. PARK - DAY An idyllic American park from our Rockwellian past. A pick- up truck pulls up onto a nearby gravel lot. INT. PICK-UP TRUCK - DAY MARTHA KENT (30s) stalls the engine. Her young son, CLARK, (7) apprehensively peeks out at the park from just under the window. MARTHA KENT Go on, Clark. Scoot. CLARK Can’t I go with you? MARTHA KENT No, you may not. A woman is entitled to shop on her own once in a blue moon. And it’s high time you made friends your own age. Clark watches a group of bigger, rowdier boys play baseball on a diamond in the park. CLARK They won’t like me. MARTHA KENT How will you know unless you try? Go on. I’ll be back before you know it. EXT. PARK - BASEBALL DIAMOND - MOMENTS LATER Clark watches the other kids play from afar - too scared to approach. He is about to give up when a fly ball plops on the ground at his feet. He stares at it, unsure of what to do. BIG KID 1 (yelling) Yo, kid. Little help? Clark picks up the ball. Unsure he can throw the distance, he hesitates. Rolls the ball over instead. It stops halfway to Big Kid 1, who rolls his eyes, runs over and picks it up. 2. BIG KID 1 (CONT’D) Nice throw. The other kids laugh at him. Humiliated, Clark puts his hands in his pockets and walks away. Big Kid 2 advances on Big Kid 1; takes the ball away from him. -

Two Worlds… One Librarian… My Experiences As a Children’s Librarian at a Public and School Library

Date submitted: 23/06/2010 Two Worlds… One Librarian… My Experiences as a Children’s Librarian at a Public and School Library Ida A. Joiner Carnegie Library of Pittsburgh St. Benedict the Moor School and Joiner Consultants Pittsburgh, PA, USA E-mail: [email protected] Meeting: 108. Libraries for Children and Young Adults with School Libraries and Resource Centers WORLD LIBRARY AND INFORMATION CONGRESS: 76TH IFLA GENERAL CONFERENCE AND ASSEMBLY 10-15 August 2010, Gothenburg, Sweden http://www.ifla.org/en/ifla76 Abstract: This presentation will give librarians, administrators, and others the opportunity to learn about the importance of collaborations between public and school libraries based on my experiences as a children’s librarian at the Carnegie Library of Pittsburgh (CLP) library and at the Saint Benedict the Moor School library at the same time. In this current era of decreased funding and school and library closings at an ever increasing exponential rate, it is imperative that public libraries and school libraries build partnerships with each other to share resources, programs, staff, and other initiatives! From my experiences having feet in both worlds, I have found that both types of libraries benefit from the expertise of each other and it is a win/win situation for everyone… students, parents, teachers, librarians, and administrators too! 
In this paper, I will discuss some of the successful collaborations between the Carnegie Library of Pittsburgh and Saint Benedict the Moor School through school projects utilizing the Carnegie Library electronic and print resources including eCLP, My StoryMaker, EBSCO, Naxos, Net Library and others; story times for children at both CLP and Saint Benedict the Moor; collection development of resources; book donations from the Carnegie Library of Pittsburgh to Saint Benedict the Moor school; programs such as children’s and teen’s clubs, reading groups, activities, and the award-winning “Black, and White and Read All Over” series; and my blog: A Children’s Book a Day, Keeps the Scary Monster Away. -

Download John Constantine, Hellblazer Vol. 8: Rake at the Gates of Hell PDF

Download: John Constantine, Hellblazer Vol. 8: Rake at the Gates of Hell PDF Free [534.Book] Download John Constantine, Hellblazer Vol. 8: Rake at the Gates of Hell PDF By Garth Ennis John Constantine, Hellblazer Vol. 8: Rake at the Gates of Hell you can download free book and read John Constantine, Hellblazer Vol. 8: Rake at the Gates of Hell for free here. Do you want to search free download John Constantine, Hellblazer Vol. 8: Rake at the Gates of Hell or free read online? If yes you visit a website that really true. If you want to download this ebook, i provide downloads as a pdf, kindle, word, txt, ppt, rar and zip. Download pdf #John Constantine, Hellblazer Vol. 8: Rake at the Gates of Hell | #380538 in Books | Garth Ennis | 2014-06-10 | 2014-06-10 | Original language: English | PDF # 1 | 10.20 x .80 x 6.70l, 1.16 | File type: PDF | 384 pages | John Constantine Hellblazer Vol 8 Rake at the Gates of Hell | |9 of 9 people found the following review helpful.| End of the Ennis era | By HughBrint |Despite 's synopsis, this also contains the Damnation's Flame arc, issues 72-77, so there's more here than they think. The stories within end the run of Hellblazer with Garth Ennis until he returns, briefly, for the Son Of Man arc. John's seemingly last stand with The First Of The Fallen is delivered with all of Ennis's visceral and gritty writ | About the Author | Irish-born writer Garth Ennis is the acclaimed author of the PREACHER graphic novel series, and also has written numerous JOHN CONSTANTINE: HELLBLAZER graphic novels, as well as THE AUTHORITY, THE BOYS, WAR STORIES, PRIDE AND JOY and other title John Constantine heads towards a final showdown with a revenge-crazed Satan during a raging race riot, and in addition to desperately trying to save his dwindling number of living friends, Constantine also has one final reunion with his lost love Kit. -

52: Book 1 Free

FREE 52: BOOK 1 PDF Geoff Johns, Grant Morrison, Greg Rucka | 584 pages | 28 Jun 2016 | DC Comics | 9781401263256 | English | United States 52 Vol 1 | DC Database | Fandom Grant Morrison. Greg Rucka. Mark Waid. Keith Giffen (Illustrator). Eddy Barrows (Illustrator). Chris Batista (Illustrator). Ken Lashley (Illustrator). Shawn Moll (Illustrator). Todd Nauck (Illustrator). Joe Bennett (Illustrator). After the events rendered in Infinite Crisis, the inhabitants of the DC Universe suffered through a year (52 weeks; hence the title) without Superman, Batman, and Wonder Woman. How does one survive in a dangerous world without superheroes? This paperback, the first of a four-volume series, begins to answer that perilous question. Nonstop action amid planetary anarchy. But whyyyy? Well, partly because there are reasons why these characters are unpopular and barely known in the first place and partly because none of the myriad of storylines going on are at all interesting! Black Adam, ruler of Kahndaq, is forming a coalition against US hegemony while lethally dealing with supervillains. -

Ebook Download Batman Noir the Court of Owls Ebook Free Download

BATMAN NOIR THE COURT OF OWLS PDF, EPUB, EBOOK Scott Snyder | 296 pages | 12 Dec 2017 | DC Comics | 9781401273958 | English | United States Batman Noir The Court Of Owls PDF Book I mean, I love Batman the same as you. Bruce performs an autopsy on Alan Wayne, and determines that he was tortured to death in a similar manner to the earlier murder victim. They use a special mixture of metal in order to give the Talons immortality. He wakes up in a giant labyrinth and is welcomed by the Court of Owls. In the storyline "Night of the Owls", which ran through the Batman-related books, the Court of Owls, angered at William Cobb's defeat at the hands of Batman, awaken all of their other Talons to reclaim Gotham City, literally and ideologically, from Batman. My hope at the end of the day is that everyone will be pleased, even though that is completely impossible. With an initiative this big, seeing how many new readers came to the table to read comics after having lapsed, or never having read one at all, was a real thrill. Gotham was his city. It's like being at a con all the time. I enjoyed the living hell out of this. Batman receives a brutal beating, and almost accepts death until a picture of the tortured Alan Wayne spurs him on. Though March's regenerative capabilities meant that he could never be sure if Lincoln March would return. The Court of Owls reflects that they may release him in a decade or so if they decide they need him.