Pac-Man Conquers Academia: Two Decades of Research Using A

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A History of Video Game Consoles Introduction the First Generation

A History of Video Game Consoles. By Terry Amick, Gerald Long, James Schell, and Gregory Shehan. Introduction: Today video games are a multibillion-dollar industry. They are in practically all American households, and they are a major driving force in electronic innovation and development, though you would hardly guess this from their modest beginnings. The first video games were played on mainframe computers in the 1950s and 1960s (Winter, n.d.). Arcade games gave the general public its first glimpse of video games. Magnavox would produce the first home video game console featuring the popular arcade game Pong for the 1972 Christmas season, released as Tele-Games Pong (Ellis, n.d.). The First Generation: Magnavox Odyssey. Rushed into production, the original game did not even have a microprocessor. Games were selected by using toggle switches. At first sales were poor because people mistakenly believed they needed a Magnavox TV to play the game (GameSpy, n.d., para. 11). By 1975 annual sales had reached 300,000 units (Gamester81, 2012). Other manufacturers copied Pong and began producing their own game consoles, which promptly got them sued for copyright infringement (Barton & Loguidice, n.d.). The Second Generation: Atari 2600. Atari released the 2600 in 1977. Although not the first, the Atari 2600 popularized the use of a microprocessor and game cartridges in video game consoles. The original device had an 8-bit 1.19 MHz 6507 microprocessor ("The Atari", n.d.), two joysticks, a paddle controller, and two game cartridges. Combat and Pac-Man were included with the console. In 2007 the Atari 2600 was inducted into the National Toy Hall of Fame ("National Toy", n.d.). -

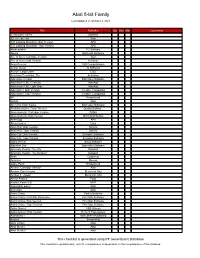

Atari 8-Bit Family

Atari 8-bit Family Last Updated on October 2, 2021 Title Publisher Qty Box Man Comments 221B Baker Street Datasoft 3D Tic-Tac-Toe Atari 747 Landing Simulator: Disk Version APX 747 Landing Simulator: Tape Version APX Abracadabra TG Software Abuse Softsmith Software Ace of Aces: Cartridge Version Atari Ace of Aces: Disk Version Accolade Acey-Deucey L&S Computerware Action Quest JV Software Action!: Large Label OSS Activision Decathlon, The Activision Adventure Creator Spinnaker Software Adventure II XE: Charcoal AtariAge Adventure II XE: Light Gray AtariAge Adventure!: Disk Version Creative Computing Adventure!: Tape Version Creative Computing AE Broderbund Airball Atari Alf in the Color Caves Spinnaker Software Ali Baba and the Forty Thieves Quality Software Alien Ambush: Cartridge Version DANA Alien Ambush: Disk Version Micro Distributors Alien Egg APX Alien Garden Epyx Alien Hell: Disk Version Syncro Alien Hell: Tape Version Syncro Alley Cat: Disk Version Synapse Software Alley Cat: Tape Version Synapse Software Alpha Shield Sirius Software Alphabet Zoo Spinnaker Software Alternate Reality: The City Datasoft Alternate Reality: The Dungeon Datasoft Ankh Datamost Anteater Romox Apple Panic Broderbund Archon: Cartridge Version Atari Archon: Disk Version Electronic Arts Archon II - Adept Electronic Arts Armor Assault Epyx Assault Force 3-D MPP Assembler Editor Atari Asteroids Atari Astro Chase Parker Brothers Astro Chase: First Star Rerelease First Star Software Astro Chase: Disk Version First Star Software Astro Chase: Tape Version First Star Software Astro-Grover CBS Games Astro-Grover: Disk Version Hi-Tech Expressions Astronomy I Main Street Publishing Asylum ScreenPlay Atari LOGO Atari Atari Music I Atari Atari Music II Atari. This checklist is generated using RF Generation's Database. It is updated daily, and its completeness is dependent on the completeness of the database. -

Behind the Maze Full Text

BEHIND THE MAZE A SHORT PARODY BY NFT'd with ❤ on Kododot You know them as the celebrity ghosts in the highest grossing video game of all time, a voice-over artist declared through Audrey's surround sound. A young woman sat alone in her digital studio reviewing raw footage of the four legendary Pac-Man ghosts. Blinky, Pinky, Inky, and Clyde hovered over a cobalt-blue leather sofa waiting to field interview questions. Behind them, an 8-bit grid of cobalt-blue LED lights replicated their notorious maze against black flats. Audrey paused the video and cued up the narrator's next line. Their relentless pursuit of Pac-Man rocketed them to superstardom. Audrey’s left index finger slid across the trackpad as she dragged and dropped another video clip into her editing project — a panning shot of the four famous ghosts from left to right. Blinky was the first in line. Beads of sweat dotted his forehead as the red ghost clenched his jaw and ground his teeth. He jumped at every single sound — at every single movement. Even without audio, his anger and aggression overshadowed the other three. To his right, Pinky fidgeted over her seat. The token female seemed lost in thought as she trembled and picked at her floating pink hem. Next in line, the bashful cyan ghost named Inky stared at something off-camera. Maybe he was avoiding eye contact; probably, he was just spacing out. Clyde was last in line. The orange ghost with bloodshot eyes fixated on a crewman lingering around the craft services table. -

PAC-MAN Plus™ by NAMCO

PAC-MAN Plus™ by NAMCO. Product Description: PAC-MAN is back in PAC-MAN Plus by Namco. There are new treats to eat, new ghosts to chomp, and mazes that turn invisible! Guide PAC-MAN through new colored mazes and avoid pesky ghosts while chompin' on the dots! But watch out – ghosts that match the maze color are immune to Power Pellets! Gobbling up new food makes ghosts turn blue and edible, but they can also make the ghosts, maze, dots, and Power Pellets disappear! Chomp on invisible items and earn quadruple points! It's all the PAC-MAN retro action you remember, PLUS so much more! Screenshots. Using the Application. 1. How to Start PAC-MAN Plus: Go to your game downloads and select PAC-MAN Plus by Namco. 2. How to Play PAC-MAN Plus: Guide PAC-MAN through the maze by using the navigation keys (UP, DOWN, LEFT, RIGHT), avoiding the ghosts while gobbling up all the PAC-dots. Gobble up the fruit for the chance to turn the maze or the ghosts invisible, making them worth even more points. Chomp some fruit or a Power Pellet to momentarily turn the ghosts blue. When they're blue you can eat them for bonus points. Get an extra life when you reach 10,000 points. PAC-dots = 10 points each. Power Pellets = 50 points each. Eat a Ghost = 200 points for the first ghost, double the points for each subsequent ghost (double if invisible). GAME MODES. Expert: A new and improved arcade version. -
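
The scoring rules quoted in this excerpt can be sketched as a small calculation. This is only an illustration, not code from the game: the function names `ghost_points` and `chain_score` are hypothetical, and the sketch follows the "double if invisible" rule from the scoring list rather than the "quadruple points" wording in the product description, since the excerpt does not say how the two fit together.

```python
# Point values as listed in the excerpt.
PAC_DOT_POINTS = 10
POWER_PELLET_POINTS = 50
FIRST_GHOST_POINTS = 200


def ghost_points(order, invisible=False):
    """Points for the order-th ghost eaten in one chain (order starts at 1).

    Assumption: each subsequent ghost doubles the previous value, and an
    invisible ghost doubles that value once more.
    """
    if order < 1:
        raise ValueError("order must be 1 or greater")
    points = FIRST_GHOST_POINTS * 2 ** (order - 1)
    return points * 2 if invisible else points


def chain_score(dots, pellets, ghosts_invisible):
    """Total for some dots, some pellets, and one chain of eaten ghosts.

    ghosts_invisible holds one boolean per ghost, in the order eaten.
    """
    total = dots * PAC_DOT_POINTS + pellets * POWER_PELLET_POINTS
    for order, invisible in enumerate(ghosts_invisible, start=1):
        total += ghost_points(order, invisible)
    return total


if __name__ == "__main__":
    # Ten dots, one Power Pellet, then two visible ghosts in a row.
    print(chain_score(10, 1, [False, False]))
```

Under these assumptions, four visible ghosts eaten in a row are worth 200, 400, 800, and 1,600 points.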

Pac-Man Is Overkill

2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), October 25-29, 2020, Las Vegas, NV, USA (Virtual). Pac-Man is Overkill. Renato Fernando dos Santos, Ragesh K. Ramachandran, Marcos A. M. Vieira and Gaurav S. Sukhatme. Abstract: Pursuit-Evasion Game (PEG) consists of a team of pursuers trying to capture one or more evaders. PEG is important due to its application in surveillance, search and rescue, disaster robotics, boundary defense and so on. In general, PEG requires exponential time to compute the minimum number of pursuers to capture an evader. To mitigate this, we have designed a parallel optimal algorithm to minimize the capture time in PEG. Given a discrete topology, this algorithm also outputs the minimum number of pursuers to capture an evader. A classic example of PEG is the popular arcade game, Pac-Man. Although Pac-Man topology has almost 300 nodes, our algorithm can handle this. We show that Pac-Man is overkill, i.e., given the Pac-Man game topology, Pac-Man game contains more pursuers/ghosts (four) than it is necessary (two) to capture evader/Pac-man. We evaluate the proposed algorithm on many different topologies. I. INTRODUCTION: Pac-Man is a popular maze arcade game developed and released in 1980 [1]. Basically, the game is all about controlling a "pie or pizza" shaped object to eat all the dots inside [...] Fig. 1. A screenshot of the Pac-Man game. The yellow colored pie shaped object is the Pac-Man. The four entities at the center of the maze are the ghosts. -
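
To make the idea of a "minimum number of pursuers on a discrete topology" concrete, here is a minimal sketch. It is not the parallel algorithm from the paper. It assumes a simplified turn-based game (pursuers choose their starting nodes, the evader then chooses its own, and the sides alternate moves along edges or stay put, pursuers first) and solves it by plain fixed-point iteration over game states. The function names and the two toy graphs are hypothetical, and the brute-force search is only practical for very small graphs.

```python
from itertools import combinations_with_replacement, product


def pursuer_winning_states(adj, k):
    """Return states (pursuers, evader), pursuers to move, that k pursuers win.

    adj maps each node to its neighbours. Assumed rules: everyone may stay
    or step along one edge; capture means sharing a node with the evader.
    """
    nodes = sorted(adj)
    # Closed neighbourhood: the node itself plus its neighbours.
    closed = {v: tuple(sorted(set(adj[v]) | {v})) for v in nodes}
    placements = list(combinations_with_replacement(nodes, k))
    moves = {p: {tuple(sorted(m)) for m in product(*(closed[c] for c in p))}
             for p in placements}

    win_p = set()  # pursuers to move, pursuers can force capture
    win_e = set()  # evader to move, pursuers can still force capture
    for p in placements:
        for e in nodes:
            if e in p:  # already captured
                win_p.add((p, e))
                win_e.add((p, e))

    changed = True
    while changed:  # grow both sets until nothing new can be added
        changed = False
        for p in placements:
            for e in nodes:
                state = (p, e)
                # Evader loses if every option leads to a pursuer-winning state.
                if state not in win_e and all((p, e2) in win_p for e2 in closed[e]):
                    win_e.add(state)
                    changed = True
                # Pursuers win if some joint move leads to such a state.
                if state not in win_p and any((p2, e) in win_e for p2 in moves[p]):
                    win_p.add(state)
                    changed = True
    return win_p


def min_pursuers(adj):
    """Smallest k with a starting placement that wins against every evader start."""
    nodes = sorted(adj)
    for k in range(1, len(nodes) + 1):
        win_p = pursuer_winning_states(adj, k)
        for p in combinations_with_replacement(nodes, k):
            if all((p, e) in win_p for e in nodes):
                return k
    return len(nodes)


if __name__ == "__main__":
    path = {0: [1], 1: [0, 2], 2: [1]}                    # a corridor
    cycle = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}  # a four-node loop
    print(min_pursuers(path), min_pursuers(cycle))
```

On these toy graphs a single pursuer suffices in a corridor, while a loop needs two, because one pursuer can never close the gap on an evader that keeps circling away.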

Pacman the Movie Full Movie Hd 1080P

Pac-Man: The Movie Full Movie Hd 1080p 3. Enjoy Your Full Movie in HD 1080p Quality!! Recommended Movie : Annie (2014) : goo.gl/0StrJe Broken Horses (2015) : goo.. hindi movie indu sarkar full movie, free download hindi movie indu ... online and download . khatrrimaza All 720p, Bluray, 1080p Hd Movie .... Pixels - Full Movie | 2015 Let's join, fullHD Movies/Season/Episode here! ... for Free with full In HD Quality , Pixels (2015) is a perfect movie in 2015, now you can watch ... 03 GB : 2015-10-24 08:03 : Пікселі / Pixels (2015) 1080p Ukr/Eng | Sub ... they attack the Earth, using the games like PAC-MAN, Donkey Kong, Galaga, .... pacman game illustration, Ghostbusters, Pac-Man , transportation; Advertisements ... Ghost Buster logo, cinema, wallpaper, movie, Ghostbusters, film .... Problem is, nobody loves a Bad Guy. ... BLU 1080p. ... Better still, the movie has real heart and soul. ... Movie Review: "Wreck-It Ralph" (2012) HD Online Player (Mahesh Khaleja Full Movie Hd 1080p T) > https://geags.com/1ioeaa Movies To Watch Free, Telugu Movies, Watches Online, .... Kamen Rider Heisei Generations: Dr. Pac-Man vs. Ex-Aid & Ghost with Legend Riders opens at Genm Corp, which is attacked by a trio of gun-wielding terrorists .... Click the link 2. Create your free account & you will be re-directed to your movie!! 3. Enjoy Your Full Movie in HD 1080p Quality!! Recommended Movie : Annie .... 67 Pacman Backgrounds images in Full HD, 2K and 4K sizes. The best quality and size only with us!. Watch Online Torrents PutLocker HD 1080p 1989 year Edit ... Watch When Harry Met Sally. -

An Evaluation of the Benefits of Look-Ahead in Pac-Man

An Evaluation of the Benefits of Look-Ahead in Pac-Man. Thomas Thompson, Lewis McMillan, John Levine and Alastair Andrew. Abstract: The immensely popular video game Pac-Man has challenged players for nearly 30 years, with the very best human competitors striking a highly honed balance between the game's two key factors; the 'chomping' of pills (or pac-dots) throughout the level whilst avoiding the ghosts that haunt the maze trying to capture the titular hero. We believe that in order to achieve this it is important for an agent to plan-ahead in creating paths in the maze while utilising a reactive control to escape the clutches of the ghosts. In this paper we evaluate the effectiveness of such a look-ahead against greedy and random behaviours. Results indicate that a competent agent, on par with novice human players can be constructed using a simple framework. I. INTRODUCTION: Pac-Man provides a point in history where video games moved into new territory; with players having their fill of the [...] we feel that such practices are best applied in small scope problems. While this could most certainly apply to ghost avoidance strategies, the ability to look ahead into the game world and begin to plan paths through the maze is often ignored. We consider the best means to attack the Pac-Man problem is to view at varying levels of reasoning; from high level strategy formulation to low level reactive control. In our initial work in this domain, we sought to assess our hypothesis that the benefits of simple lookahead in Pac-Man can be applied to generate a more competent agent than those using greedy or random decision processes. In this paper we give a recap of the Pac-Man domain and the particulars of our implementation in Section II and related work in Section III. -

Video Game Collection MS 17 00 Game This Collection Includes Early Game Systems and Games As Well As Computer Games

Finding Aid Report Video Game Collection MS 17_00 Game This collection includes early game systems and games as well as computer games. Many of these materials were given to the WPI Archives in 2005 and 2006, around the time Gordon Library hosted a Video Game traveling exhibit from Stanford University. As well as MS 17, which is a general video game collection, there are other game collections in the Archives, with other MS numbers. Container List Container Folder Date Title None Series I - Atari Systems & Games MS 17_01 Game This collection includes video game systems, related equipment, and video games. The following games do not work, per IQP group 2009-2010: Asteroids (1 of 2), Battlezone, Berzerk, Big Bird's Egg Catch, Chopper Command, Frogger, Laser Blast, Maze Craze, Missile Command, RealSports Football, Seaquest, Stampede, Video Olympics. Container List Container Folder Date Title Box 1 Atari Video Game Console & Controllers: 2 Original Atari Video Game Consoles with 4 of the original joystick controllers. Box 2 Atari Electronic Ware: This box includes miscellaneous electronic equipment for the Atari videogame system. Includes: 2 Original joystick controllers, 2 TAC-2 Totally Accurate controllers, 1 Red Command controller, Atari 5200 Series Controller, 2 Pong Paddle Controllers, a TV/Antenna Converter, and a power converter. Box 3 Atari Video Games: This box includes all Atari video games in the WPI collection: Air Sea Battle, Asteroids (2), Backgammon, Battlezone, Berzerk (2), Big Bird's Egg Catch, Breakout, Casino, Cookie Monster Munch, Chopper Command, Combat, Defender, Donkey Kong, E.T., Frogger, Haunted House, Sneak'n Peek, Surround, Street Racer, Video Chess. Box 4 Atari Video Games: This box includes the following videogames for Atari: Word Zapper, Towering Inferno, Football, Stampede, Raiders of the Lost Ark, Ms. -

Ms. Pac-Man Versus Ghost Team CIG 2016 Competition

Ms. Pac-Man Versus Ghost Team CIG 2016 Competition. Piers R. Williams, Diego Perez-Liebana and Simon M. Lucas. School of Computer Science and Electronic Engineering, University of Essex, Colchester CO4 3SQ, UK. Email: pwillic, dperez, [email protected]. Abstract: This paper introduces the revival of the popular Ms. Pac-Man Versus Ghost Team competition. We present an updated game engine with Partial Observability constraints, a new Multi-Agent Systems approach to developing Ghost agents, and several sample controllers to ease the development of entries. A restricted communication protocol is provided for the Ghosts, providing a more challenging environment than before. The competition will debut at the IEEE Computational Intelligence and Games Conference 2016. Some preliminary results showing the effects of Partial Observability and the benefits of simple communication are also presented. I. INTRODUCTION: Ms. Pac-Man is an arcade game that was immensely popular when released in 1982. [...] many competitions currently active in the area of games. The Starcraft competition [17] runs on the original Starcraft: Brood War (Blizzard Entertainment, 1998). Starcraft is a complex Real-Time Strategy (RTS) game with thousands of potential actions at each time step. Starcraft also features PO, greatly complicating the task of writing strong AI. The General Video Game Artificial Intelligence (GVGAI) competition [19] runs a custom game engine that emulates a wide variety of games, many of which are based on old classic arcade games. The Geometry Friends competition [20] features a co-operative track for two heterogeneous agents to solve mazes, a similar task to the ghost control of Ms. Pac-Man. Previous competitions have been organised that focused on -

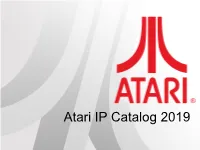

Atari IP Catalog 2019 IP List (Highlighted Links Are Included in Deck)

Atari IP Catalog 2019 IP List (Highlighted Links are Included in Deck) 3D Asteroids Basketball Fatal Run Miniature Golf Retro Atari Classics Super Asteroids & Missile 3D Tic-Tac-Toe Basketbrawl Final Legacy Minimum Return to Haunted House Command A Game of Concentration Bionic Breakthrough Fire Truck * Missile Command Roadrunner Super Baseball Adventure Black Belt Firefox * Missile Command 2 * RollerCoaster Tycoon Super Breakout Adventure II Black Jack Flag Capture Missile Command 3D Runaway * Super Bunny Breakout Agent X * Black Widow * Flyball * Monstercise Saboteur Super Football Airborne Ranger Boogie Demo Food Fight (Charley Chuck's) Monte Carlo * Save Mary Superbug * Air-Sea Battle Booty Football Motor Psycho Scrapyard Dog Surround Akka Arrh * Bowling Frisky Tom MotoRodeo Secret Quest Swordquest: Earthworld Alien Brigade Boxing * Frog Pond Night Driver Sentinel Swordquest: Fireworld Alpha 1 * Brain Games Fun With Numbers Ninja Golf Shark Jaws * Swordquest: Waterworld Anti-Aircraft * Breakout Gerry the Germ Goes Body Off the Wall Shooting Arcade Tank * Aquaventure Breakout * Poppin Orbit * Sky Diver Tank II * Asteroids Breakout Boost Goal 4 * Outlaw Sky Raider * Tank III * Asteroids Deluxe * Canyon Bomber Golf Outlaw * Slot Machine Telepathy Asteroids On-line Casino Gotcha * Peek-A-Boo Slot Racers Tempest Asteroids: Gunner Castles and Catapults Gran Trak 10 * Pin Pong * Smokey Joe * Tempest 2000 Asteroids: Gunner+ Caverns of Mars Gran Trak 20 * Planet Smashers Soccer Tempest 4000 Atari 80 Classic Games in One! Centipede Gravitar Pong -

The Pac-Man Dossier

The Pac-Man Dossier http://home.comcast.net/~jpittman2/pacman/pacmandossier.html version 1.0.26, June 16, 2011. Foreword: Welcome to The Pac-Man Dossier! This web page is dedicated to providing Pac-Man players of all skill levels with the most complete and detailed study of the game possible. New discoveries found during the research for this page have allowed for the clearest view yet of the actual ghost behavior and pathfinding logic used by the game. Laid out in hyperlinked chapters and sections, the dossier is easy to navigate using the Table of Contents below, or you can read it in linear fashion from top-to-bottom. Chapter 1 is purely the backstory of Namco and Pac-Man's designer, Toru Iwatani, chronicling the development cycle and release of the arcade classic. If you want to get right to the technical portions of the document, however, feel free to skip ahead to Chapter 2 and start reading there. Chapter 3 and Chapter 4 are dedicated to explaining pathfinding logic and discussions of unique ghost behavior. Chapter 5 is dedicated to the "split screen" level, and several Appendices follow, offering reference tables, an easter egg, vintage guides, a glossary, and more. Lastly, if you are unable to find what you're looking for or something seems unclear in the text, please feel free to contact me ([email protected]) and ask! If you enjoy the information presented on this website, please consider contributing a small donation to support it and defray the time/maintenance costs associated with keeping it online and updated. -

Intellivision Development, Back in the Day

Intellivision Development, Back In The Day. Table of Contents: Introduction; Overall Process; APh Technological Consulting; Host Hardware and Operating System; Development Tools; CP-1610 Assembler; Text Editor; Pixel Editor; Test Harnesses; Tight Finances; Mattel Electronics