Accelerated Primality Testing Using GPUs

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

SIMD Extensions

Contents

Articles: SIMD; MMX (instruction set); 3DNow!; Streaming SIMD Extensions; SSE2; SSE3; SSSE3; SSE4; SSE5; Advanced Vector Extensions; CVT16 instruction set; XOP instruction set

References: Article Sources and Contributors; Image Sources, Licenses and Contributors

Article Licenses: License

SIMD

| | Single instruction | Multiple instruction |
|---|---|---|
| Single data | SISD | MISD |
| Multiple data | SIMD | MIMD |

Single instruction, multiple data (SIMD) is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously. Thus, such machines exploit data-level parallelism.

History

The first use of SIMD instructions was in vector supercomputers of the early 1970s such as the CDC Star-100 and the Texas Instruments ASC, which could operate on a vector of data with a single instruction. Vector processing was especially popularized by Cray in the 1970s and 1980s. Vector-processing architectures are now considered separate from SIMD machines, based on the fact that vector machines processed the vectors one word at a time through pipelined processors (though still based on a single instruction), whereas modern SIMD machines process all elements of the vector simultaneously.[1]

The first era of modern SIMD machines was characterized by massively parallel processing-style supercomputers such as the Thinking Machines CM-1 and CM-2. These machines had many limited-functionality processors that would work in parallel.

PrimeGrid: Searching for a New World Record Prime Number

PrimeGrid [1] is a volunteer computing project which has two main aims: firstly to find large prime numbers, and secondly to educate members of the project and the wider public about the mathematics of primes. This means engaging people from all walks of life in computational mathematics is essential to the success of the project.

In the first regard we have been very successful – as of November 2013, over 70% of the primes on the Top 5000 list [2] of largest known primes were discovered by PrimeGrid. The project also holds various records including the discoveries of the largest known Cullen and Woodall Primes (with a little over 2 million and 1 million decimal digits, respectively), the largest known Twin Primes and Sophie Germain Prime Pairs, and the longest sequence of primes in arithmetic progression (26 of them, with a difference of over 23 million between each).

... mid-19th century the only known method of primality proving was to exhaustively trial divide the candidate integer by all primes up to its square root. With some small improvements due to Euler, this method was used by Fortuné Landry in 1867 to prove the primality of 3203431780337 (13 digits long). Only 9 years later a breakthrough was to come when Édouard Lucas developed a new method based on Group Theory, and proved 2^127 – 1 (39 digits) to be prime. Modified slightly by Lehmer in the 1930s, Lucas Sequences are still in use today!

The next important breakthrough in primality testing was the development of electronic computers in the latter half of the 20th century. In 1951 the largest known prime (proved with the aid of a mechanical calculator) was (2^148 + 1)/17 at 44 digits long, but this was swiftly beaten by several successive discoveries by

GIMPS Project Discovers Largest Known Prime Number: 2^77,232,917 − 1

The Great Internet Mersenne Prime Search (GIMPS) has discovered the largest known prime number, 2^77,232,917 − 1, having 23,249,425 digits. A computer volunteered by Jonathan Pace made the find on December 26, 2017. Jonathan is one of thousands of volunteers using free GIMPS software available at www.mersenne.org.

The new prime number, also known as M77232917, is calculated by multiplying together 77,232,917 twos, and then subtracting one. It is nearly one million digits larger than the previous record prime number, in a special class of extremely rare prime numbers known as Mersenne primes. It is only the 50th known Mersenne prime ever discovered, each increasingly difficult to find. Mersenne primes were named for the French monk Marin Mersenne, who studied these numbers more than 350 years ago. GIMPS, founded in 1996, has discovered the last 16 Mersenne primes. Volunteers download a free program to search for these primes, with a cash award offered to anyone lucky enough to find a new prime. Prof. Chris Caldwell maintains an authoritative web site on the largest known primes, and has an excellent history of Mersenne primes.

The primality proof took six days of non-stop computing on a PC with an Intel i5-6600 CPU. To prove there were no errors in the prime discovery process, the new prime was independently verified using four different programs on four different hardware configurations.

• Aaron Blosser verified it using Prime95 on an Intel Xeon server in 37 hours.
• David Stanfill verified it using gpuOwL on an AMD RX Vega 64 GPU in 34 hours.
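The arithmetic in this excerpt can be checked with a short sketch. The digit count of 2^p − 1 follows from floor(p · log10 2) + 1, and small Mersenne numbers can be tested with the Lucas-Lehmer test. The excerpt does not say which algorithm the GIMPS software ran, so the choice of Lucas-Lehmer here is an assumption for illustration, and the function names `mersenne_digits` and `lucas_lehmer` are hypothetical.

```python
import math


def mersenne_digits(p: int) -> int:
    """Number of decimal digits of 2**p - 1.

    2**p is never a power of ten, so 2**p - 1 has as many digits as 2**p.
    """
    return math.floor(p * math.log10(2)) + 1


def lucas_lehmer(p: int) -> bool:
    """Lucas-Lehmer test: is 2**p - 1 prime? Assumes p itself is prime."""
    if p == 2:
        return True  # 2**2 - 1 = 3 is prime; the loop below needs an odd p
    m = (1 << p) - 1  # the Mersenne number 2**p - 1
    s = 4
    for _ in range(p - 2):
        s = (s * s - 2) % m
    return s == 0


if __name__ == "__main__":
    print(mersenne_digits(77_232_917))  # digit count quoted in the excerpt
    print(lucas_lehmer(127))            # 2**127 - 1, the number proved prime by Lucas
    print(lucas_lehmer(11))             # 2**11 - 1 = 2047 = 23 * 89
```

This plain-integer version is only practical for small exponents; running it on an exponent the size of 77,232,917 would need the fast multiplication techniques that dedicated programs rely on.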

Tuning IBM System x Servers for Performance

Front cover

Tuning IBM System x Servers for Performance

• Identify and eliminate performance bottlenecks in key subsystems
• Expert knowledge from inside the IBM performance labs
• Covers Windows, Linux, and VMware ESX

David Watts, Alexandre Chabrol, Phillip Dundas, Dustin Fredrickson, Marius Kalmantas, Mario Marroquin, Rajeev Puri, Jose Rodriguez Ruibal, David Zheng

ibm.com/redbooks

International Technical Support Organization. Tuning IBM System x Servers for Performance. August 2009. SG24-5287-05

Note: Before using this information and the product it supports, read the information in “Notices” on page xvii.

Sixth Edition (August 2009). This edition applies to IBM System x servers running Windows Server 2008, Windows Server 2003, Red Hat Enterprise Linux, SUSE Linux Enterprise Server, and VMware ESX.

© Copyright International Business Machines Corporation 1998, 2000, 2002, 2004, 2007, 2009. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP

Contents

Notices
Trademarks
Foreword
Preface
The team who wrote this book
Become a published author
Comments welcome

Part 1. Introduction

Chapter 1. Introduction to this book
1.1 Operating an efficient server - four phases
1.2 Performance tuning guidelines
1.3 The System x Performance Lab
1.4 IBM Center for Microsoft Technologies
1.5 Linux Technology Center
1.6 IBM Client Benchmark Centers
1.7 Understanding the organization of this book

Chapter 2. Understanding server types
2.1 Server scalability
2.2 Authentication services
2.2.1 Windows Server 2008 Active Directory domain controllers

© 2013 Alexander Jih-Hing Yee: A Faster FFT in the Mid-West

© 2013 Alexander Jih-Hing Yee

A FASTER FFT IN THE MID-WEST

BY ALEXANDER JIH-HING YEE

THESIS

Submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Science in the Graduate College of the University of Illinois at Urbana-Champaign, 2013

Urbana, Illinois

Adviser: Professor Marc Snir

ABSTRACT

FFT implementations today generally fall into two categories: Library generators (such as FFTW and Spiral) and specialized FFTs (such as prime95). Specialized FFTs have the obvious limitation of being specialized. However they are hand-tuned and generally offer superior performance. Library generators are generic and easier to port. But their performance is generally suboptimal.

We describe in this paper an FFT library that was built while paying special attention to locality. The library achieves significantly better performance than FFTW, for long vectors. Unlike FFTW or Spiral, the recursive decomposition of the FFT is not created by a library generator; it is created by macro expansion that has a few selectable parameters. This provides an interface that can be more easily modified by users.

To my parents, for their love and support.

TABLE OF CONTENTS

LIST OF TABLES
LIST OF FIGURES
LIST OF ABBREVIATIONS

CHAPTER 1 INTRODUCTION

CHAPTER 2 FFT LIBRARIES
2.1 Library Generators
2.2 Specialized FFTs

CHAPTER 3 OUR IMPLEMENTATION
3.1 Generalized Bailey's 4-step Algorithm
3.2 Blocking/Data Padding
3.3 Fast Bit-Reversal
3.4 Vector Instructions
3.5 Micro-Optimizations: Loop Unrolling

GIMPS Project Discovers Largest Known Prime Number: 2^77,232,917 − 1

RALEIGH, NC., January 3, 2018 -- The Great Internet Mersenne Prime Search (GIMPS) has discovered the largest known prime number, 2^77,232,917 − 1, having 23,249,425 digits. A computer volunteered by Jonathan Pace made the find on December 26, 2017. Jonathan is one of thousands of volunteers using free GIMPS software available at www.mersenne.org/download/.

The new prime number, also known as M77232917, is calculated by multiplying together 77,232,917 twos, and then subtracting one. It is nearly one million digits larger than the previous record prime number, in a special class of extremely rare prime numbers known as Mersenne primes. It is only the 50th known Mersenne prime ever discovered, each increasingly difficult to find. Mersenne primes were named for the French monk Marin Mersenne, who studied these numbers more than 350 years ago. GIMPS, founded in 1996, has discovered the last 16 Mersenne primes. Volunteers download a free program to search for these primes, with a cash award offered to anyone lucky enough to find a new prime. Prof. Chris Caldwell maintains an authoritative web site on the largest known primes, and has an excellent history of Mersenne primes.

The primality proof took six days of non-stop computing on a PC with an Intel i5-6600 CPU. To prove there were no errors in the prime discovery process, the new prime was independently verified using four different programs on four different hardware configurations. Aaron Blosser verified it using Prime95 on an Intel Xeon server in 37 hours.

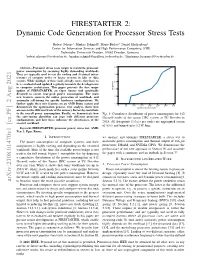

FIRESTARTER 2: Dynamic Code Generation for Processor Stress Tests

Robert Schöne, Markus Schmidl, Mario Bielert, Daniel Hackenberg

Center for Information Services and High Performance Computing (ZIH), Technische Universität Dresden, 01062 Dresden, Germany

Abstract: Processor stress tests aim to maximize processor power consumption by executing highly demanding workloads. They are typically used to test the cooling and electrical infrastructure of compute nodes or larger systems in labs or data centers. While multiple such tools already exist, they have to be re-evaluated and updated regularly to match the developments in computer architecture. This paper presents the first major update of FIRESTARTER, an Open Source tool specifically designed to create near-peak power consumption. The main new features concern the online generation of workloads and automatic self-tuning for specific hardware configurations. We further apply these new features on an AMD Rome system and demonstrate the optimization process. Our analysis shows how accesses to the different levels of the memory hierarchy contribute to the overall power consumption. Finally, we demonstrate how the auto-tuning algorithm can cope with different processor configurations and how these influence the effectiveness of the created workload.

Fig. 1: Cumulative distribution of power consumption for 612 Haswell nodes of the taurus HPC system at TU Dresden in 2018. All datapoints (1 Sa/s per node) are aggregated (mean of 60 s) and binned into 0.1 W bins. (Axes: proportion versus power in bins of 0.1 W.)

Connecting Citizens to Science

Connecting Citizens to Science: A Learning E-volution

Miguel Angel Marquina, CERN, Switzerland

CERN, University of Geneva, UN Institute for Training and Research

“All for science, science for all”

45th EUCEN Conference, Connecting Citizens to Science…, Charmey, May 31, 2013

Science and Innovation: Research vs HE structures

• Research environments, pure joy
• But sometimes, Ivory Towers
• Major societal investment on forming such “elite”
• Return to Society, even if just a token, with education and learning opportunities?

Science and Innovation: Global Challenges

• Tough time for Researchers, competing for:
• Resources – Funding, ICT
• Big Data challenges
• Non/semi-automatable analysis
• Impact on Society, reaching the public

Science and Innovation: Citizen Involvement

• Citizens have been passive watchers so far

How to and Guides

How To and Guides

• Clean Installing Windows 10
• Killer Network Drivers
• Running Memtest86+
• HWiNFO - Full Guide
• How to Describe a Technical Problem
• DISM and SFC
• Reading HWiNFO logs
• Cleaning a Computer
• Making a System Dossier
• Making Windows installation media in Linux

Clean Installing Windows 10

1. Create a bootable USB flash drive using the Media Creation Tool from Microsoft. This will also wipe any data stored on the USB flash drive. It is best to disconnect all storage disks except the main (C: drive) disk from the computer before installing Windows 10.
2. Boot from the USB drive that has the Windows 10 media on it. You can do this by entering your system's BIOS and changing the BIOS boot order to have USB media as the first priority (this can usually be found under the boot tab), or simply look for the words "boot menu" when you see your BIOS boot screen, press the corresponding function key and choose the USB flash drive to boot from it.
3. Follow the steps on screen to install Windows 10.
4. Click "Install now".
5. Continue on until you hit the license key screen. Here you can either enter your license code or, if Windows has been installed on this computer before, click on the "I don't have a product key" link.
6. Continue on until you hit the “Which type of installation do you want?” screen. Click "Custom".
7. Click on each partition of the target drive and select delete. Once all the partitions are gone you will be left with unallocated space.

Increasing HPC Computing Capacity within the HiFI and ADONIS Projects

Institute of Physics ASCR, v. v. i., Na Slovance 2, 182 21 Praha 8

Document classification: UC - Unclassified
TC ID / Revision: 00172578/C
Document status: Document Released
Document number: N/A
WBS code: 5.5 - RP6 Simulations
PBS code: E.HPC2.3, E.HPC2.4
Project documentation branch: Engineering & Scientific documents (E&S)
Document type: Specification (SP)

Increasing HPC computing capacity within the HiFI and ADONIS projects [Annex 2: HPC computing equipment] [TP18_600]

Keywords: HPC, computing, cluster, data storage, NAS, computer, UPS, critical infrastructure, network, LAN, InfiniBand

Responsible person: Edwin Chacon Golcher (Computing Engineer), Ondřej Klimo (Junior Researcher RP6)

Prepared by: Jaromír Němeček (HPC Cluster Engineer), Edwin Chacon Golcher (Computing Engineer), Ondřej Klimo (Junior Researcher RP6)

European Union, European Structural and Investment Funds, Operational Programme Research, Development and Education

| RSS TC ID/revision | Created | Last modified | Systems Engineer |
|---|---|---|---|
| 014754/A.001 | 31-Aug-2018 18:10 | 31-Aug-2018 18:12 | Aleksei Kuzmenko |
| 014754/A.002 | 13-Oct-2018 21:43 | 13-Oct-2018 21:45 | Aleksei Kuzmenko |
| 014754/A.003 | 07-Dec-2018 13:19 | 07-Dec-2018 13:20 | Pavel Tůma |

Document revision (reviewer name, position; review dates and signatures are illegible in the source):

• Hana Maňásková, Environmental Protection Engineer
• Jiří Vaculík, Building team Manager
• Ladislav Půst, Manager installation of technology

(Mersenne Primes Search) 11191649 Jun Li June, 2012

Institute of Information and Mathematical Sciences

Prime Number Search Algorithms (Mersenne Primes Search)

11191649 Jun Li

June, 2012

Contents

CHAPTER 1 INTRODUCTION
1.1 BACKGROUND
1.2 MERSENNE PRIME
1.3 STUDY HISTORY
1.3.1 Early history [4]
1.3.2 Modern History
1.3.3 Recent History

CHAPTER 2 METHODOLOGY
2.1 DEFINITION AND THEOREMS
2.2 DISTRIBUTION LAW

Prime Numbers – Things Long-Known and Things New-Found

Karl-Heinz Kuhl

PRIME NUMBERS – THINGS LONG-KNOWN AND THINGS NEW-FOUND

A JOURNEY THROUGH THE LANDSCAPE OF THE PRIME NUMBERS

Amazing properties and insights – not from the perspective of a mathematician, but from that of a voyager who, pausing here and there in the landscape of the prime numbers, approaches their secrets in a spirit of playful adventure, eager to experiment and share their fascination with others who may be interested.

Third, revised and updated edition (2020)

Dipl.-Phys. Karl-Heinz Kuhl, Parkstein, December 2020

$1 + 2 + 3 + 4 + \cdots = -\frac{1}{12}$ (Ramanujan)

Web: https://yapps-arrgh.de (Yet another promising prime number source: amazing recent results from a guerrilla hobbyist)

Link to the latest online version: https://yapps-arrgh.de/primes_Online.pdf

Some of the text and Mathematica programs have been removed from the free online version. The printed and e-book versions, however, contain both the text and the programs in their entirety. Recent supplements to the book can be found here: https://yapps-arrgh.de/data/Primenumbers_supplement.pdf

Please feel free to contact the author if you would like a deeper insight into the many Mathematica programs.